I definitely tend towards minimalism in my personal projects. That often translates into getting stuff done with the smallest number of parts, or the cheapest parts, or the lowest tech. Oddly enough that doesn’t extend to getting the project done in the minimum amount of time, which is a resource no less valuable than money or silicon. The overkill road is often the smoothest road, but I’ll make the case for taking the rocky, muddy path. (At least sometimes.)

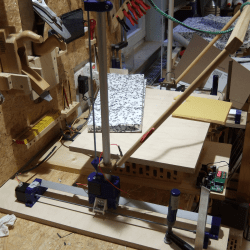

There are a bunch of great designs for CNC hot-wire foam cutters out there, and they range from the hacky to the ridiculously over-engineered, with probably most of them falling into the latter pile. Many of the machines you’ll see borrow heavily from their nearest cousins, the CNC mill or the 3D printer, and sport hardened steel rails or ballscrews and are constructed out of thick MDF or even aluminum plates.

All a CNC foam cutter needs to do is hold a little bit of tension on a wire that gets hot, and pass it slowly and accurately through a block of foam, which obligingly melts out of the way. The wire moves slowly, so the frame doesn’t need to handle the acceleration of a 3D printer head, and it faces almost no load so it doesn’t need any of the beefy drives and ways of the CNC mill. But the mechanics of the mill and printer are so well worked out that most makers don’t feel the need to minimize, simply build what they already know, and thereby save time. They build a machine strong enough to carry a small child instead of a 60 cm length of 0.4 mm wire that weighs less than a bird’s feather.

I took the opposite approach, building as light and as minimal as possible from the ground up. (Which is why my machine still isn’t finished yet!) By building too little, too wobbly, or simply too janky, I’ve gotten to see what the advantages of the more robust designs are. Had I started out with an infinite supply of v-slot rail and ballscrews, I wouldn’t have found out that they’re overkill, but if I had started out with a frame that resisted pulling inwards a little bit more, I would be done by now.

Overbuilding is expedient, but it’s also a one-way street. Once you have the gilded version of the machine up and running, there’s little incentive to reduce the cost or complexity of the thing; it’s working and the money is already spent. But when your machine doesn’t quite work well enough yet, it’s easy enough to tell what needs improving, as well as what doesn’t. Overkill is the path of getting it done fast, while iterated failure and improvement is the path of learning along the way. And when it’s done, I’ll have a good story to tell. Or at least that’s what I’m saying to myself as I wait for my third rail-holder block to finish printing.

Building it the wrong way, then understanding and fixing the problems, teaches you the reasons for some things, and the importance of some points in the design, in a way that building the overkill version wouldn’t.

In the same way, someone who always builds houses in the hills wouldn’t really understand the problems of building a house near a river that floods.

And the knowledge gained when building your machine the weak way will come in useful when you need to build the next machine, or one for other working conditions: you will know whether you need a reinforced tool holder, and why, and so can design a machine better suited to the task.

Well, you could have written the newsletter this week! Perfectly said.

The latest tech is a hot mess (to use an over-burdened term). Python library dependency-‘heck’ (so my comment doesn’t get thrown away). Microcontrollers with inexcusable documentation nightmares. Open software with far too many cooks in the kitchen but no head chef, resulting in hilarious build processes and inconsistent design methodology.

All hail RF projects that attempt to do it all with 2n3904s, 2n2222s, and 2n3866s, or something similar. Why do you think there’s such an interest in Ben Eater’s 6502 project? Way past time having the technology work FOR you instead of it working AGAINST you.

Who cares if you are able to get something working faster if all the components and software change or become unavailable next month and you have no idea what’s going on at the lower levels, anyway? Have fun revisiting the project a year from now and starting from scratch.

Python dependency heck is pretty rare. Code size is small, so you can just directly include a frozen full copy of a library in your repo and totally control updates yourself. Sometimes interpreter versions break things, and I wish they’d stop until v4, but it’s easy to fix and uncommon.
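A minimal sketch of that "frozen copy in the repo" approach, assuming the copied library lives in a folder named `vendor` at the repo root (the folder name and the `use_vendored` helper are made up for illustration, not a standard). Putting that folder at the front of `sys.path` makes the frozen copy win over anything installed system-wide:

```python
import sys
from pathlib import Path


def use_vendored(repo_root, vendor_dir="vendor"):
    """Put the repo's frozen library folder first on the import path.

    vendor_dir is a hypothetical folder name; any directory holding the
    copied library source would do.
    """
    vendor_path = str(Path(repo_root) / vendor_dir)
    if vendor_path in sys.path:
        sys.path.remove(vendor_path)
    # Index 0 means the frozen copy is found before any installed version.
    sys.path.insert(0, vendor_path)
    return vendor_path
```

Call it once at the top of the main script, before importing the library, and updates only happen when you replace the files in that folder yourself.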

Likewise, an Atmega328/ESP32 and Arduino project will almost certainly still work in five years, and even if ESP disappears, the code will run on the next thing.

I am *not* a fan of the full Antifragile lifestyle in general, but one of Taleb’s useful points is the barbell strategy: High tech and zero-tech are both useful, but the usual DIYer approach of trying to stay minimal, while also using the latest shiny new stuff, and building a lot from scratch, and using lots of patches and hacks, can very easily suck.

Open source is great when it’s used by millions of people, and there’s a huge team making sure it keeps working. It’s also pretty great on a smaller scale when treated with that level of respect.

But a lot of the time older non-commercial software becomes some kind of philosophy dissertation in microservices, and a usability nightmare. Like with KiCad, which started working on usability a few years ago, and still doesn’t have the quality of UX that LibrePCB, which is brand new, has.

It’s hard to do things your architecture wasn’t designed for. And DIY often has no architecture at all, so it’s hard to do anything!

I have never had an Arduino source code last for 2 years, let alone 5; the only way to avoid that is to provide the hex. Then you’re faced with countless people who can’t upload it or complain it’s closed.

I’ve almost never had problems on newer Arduino, but even when there are problems, there are so many people with programming knowledge that I suspect more people can fix it themselves than can debug their wiring mistake in a multivibrator.

Arduino tends to attract a higher than average concentration of total newbies, but in general across the whole hacker scene, I’d guess that most of the younger generation have more experience with code than solder.

The “better” ARM based arduinos tend to be buggy, and upgrades change behavior sometimes, so your old code breaks for unknown reasons that are probably listed somewhere in some developer forum, but never communicated to the users.

@Dude oh yeah, those. ARM development does kinda seem to be more problematic than people realize. Probably because there are dozens of popular chips, and none of them are direct clones.

ESP8266 on the other hand, is a fairly mature technology at this point, which is about as easy as one could expect any kind of embedded work to be. I don’t really have enough ARM experience to know what all the fuss is about, but I’m always annoyed when someone uses one of those “better” chips other than an ESP.

I hardly ever encounter anything too complicated for an Atmega, but still simple enough that it wouldn’t benefit from WiFi or Bluetooth.

And of course, Atmega328, and probably Tiny85 although I haven’t used it much, is rock solid.

The big things to go wrong are low power modes on the counterfeit clones, or memory fragmentation when someone doesn’t understand that Strings use dynamic allocation, but if you’re using them for the stuff you would otherwise use 555s and logic gates for, I would expect good reliability.

Every technology has its things to learn and such; that’s what makes embedded systems a paid profession.

Sure would be nice if they would stop randomly changing APIs and stuff. I think a lot of engineers actually like breaking changes, because they don’t want people to run old code, and they like the whole idea of software as a continually maintained service, not a product that you can just buy and keep.

Look at the awfulness of Snapcraft forced updates and the discussion threads around it, and it’s pretty clear how paranoid they are about allowing even pro users to run old code.

I work in a startup. Keeping things simple would make sense. But… The lead guy’s vanity makes him meddle and whimsically change direction or reinterpret decisions. So rather than keeping things simple, they’re complex and not built on engineering principles. Will this startup succeed? Discuss.

Complex: Seems fine to me, most successful commercial tech is complex for a reason

Not built on engineering principles: imscared.jpg

https://en.wikipedia.org/wiki/Conway%27s_law

This law has held in my own experiences, and every multinational in history.

I generally respect the people in different specialized fields…

and know most engineers design code like a web designer would build an airplane…

All code is terrible, but some of it is useful at times…

;-)

Simplicity and efficiency are key engineering objectives for me, and I feel that they come from some subconscious drive that I can’t explain. It’s the same thing that makes people think “you could have done that with a 555 instead of a raspberry pi.”

But I also find that this is a relative scale, and what is simple for one person to do with a 555 is difficult to do on a Linux computer, and vice versa for someone else.

So I think it also has to do with problem solving and overcoming challenges. The same stuff that makes people miniaturize things, or build a microcomputer from discrete transistors, or port doom to some random widget just because you can.

These things I think are fundamental to the hacker ethos, and why I read Hackaday. In other words, Elliot, I think you’re in good company.

Was supposed to be a new comment not a reply… oops?

Indeed, there’s lots of overlap though.

For me cheapness is usually the highest priority, as I’m usually trying to do projects that are really beyond my budget. But that does mean I’ll always err on the over-engineered side of the design, after doing some calculations. Using just one sheet of heavier-duty ply, steel tube, etc., because you designed and cut the parts with enough meat to really handle the loads, is much cheaper than having to buy new materials. So my projects only end up underspec’d and making me nervous if I have scraps in stock that might do the job; stuff in my junk-from-other-projects pile is effectively free, after all…

While I do like the ol’ do it with a 555 mentality, throwing something like a Pi at the project is often for me better – a pi zero is all in one, ready to go fully functional for almost anything you might want and costs peanuts, the 555 either requires PCB fab or lots of work dead bugging (which is a Skill I’ve yet to really try and learn) or on protoboard – which soon adds up to similar cost to a pi zero, as that stuff isn’t free… Certainly very good reasons not to use a Pi or Arduino exist, but on cost ground they are very hard to argue with.

There’s a bit of an inherent conflict with simplicity. It’s a vague and only somewhat reliable proxy for reliability, cost, or ease of use, but a very strong aesthetic and philosophical desire shared by some but not all people.

I think this is why there can never, at any point, be one primary Linux distro most people agree on, because it’s a division with no solution other than to have separate systems. There’s no distro that I would enjoy, that the guy who uses vim and dwm would want.

For me, simplicity means basically nothing, as long as the complexity is encapsulated and tucked away somewhere, and I can’t think of any time I’d ever use a 555 unless I was trying to save every possible penny. Standardization, future proofing, robustness against user error, and aesthetics are way more important to me.

If someone blinks an LED or makes an alert tone with a 555, I’m probably going to think it would have looked nicer with a fade-in on an MCU, and the alert should use multiple tones to get more attention.

Other people seem to have a really mathematical worldview, and want their design to make some kind of statement about pure logic. Things like “Multiple tones are more noticeable” are arbitrary and empirical, and they’re more interested in logical things like “This was the absolute most pure expression of this idea I could design” or “This programming language represents code and data the same way”.

There’s probably a bit of the old furniture craftsmanship tradition. You can make a table that looks great and lasts forever, for dirt cheap, just by using plastic coatings, replaceable parts, CAD simulations, Exact Constraint design, etc.

And someone else could come along with no training and build one from your plans, or some other engineer with the same goal could design something very similar, just by following the same general process, that you can learn from a book and case studies.

Minimalist craftsman types want to make something that makes you say “Oh wow, that person must have some real talent, and the kind of experience you can only get the hard way”.

Non-minimalist types like me want something that makes people say “Yep, they followed all the best practices, this will work no matter what idiot tries to break it, and they hid all the ugly parts really well!”

The point of the “use a 555” is that a circuit that is designed with the required logic contains no software, so you don’t need the extra step of flashing it. Put the batteries in, and it just works.

Plus, the logic circuit cannot crash, corrupt its flash, etc. It’s more fault tolerant in general, so for example in a brown-out condition the beeper continues to emit a tone at a lower frequency where the MCU would simply stop working.

The value of designing things for a function is maybe clearer in mechanical engineering. Most of the time a cam and a follower, or a constrained linkage, is just obviously better than throwing in a whole robotics platform with computer controlled servomotors and actuators.

PS. it’s trivial to make a 555 produce a variable tone, because it has the often neglected pin 5 that controls the reference voltage levels, so you can do frequency modulation with it.
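As a rough sketch of that pin 5 trick, assuming the textbook astable circuit (R1 from Vcc to the discharge pin, R2 from there to the timing capacitor C) and an ideal 555: the control voltage sets the upper threshold and half of it sets the lower one, so moving pin 5 moves the frequency. The function name and the component values in the note below are illustrative, not from the comment.

```python
import math


def astable_frequency(r1, r2, c, vcc, v_ctrl=None):
    """Ideal 555 astable frequency in Hz.

    v_ctrl is the voltage on pin 5. Left as None, the internal divider
    holds it at 2/3 of Vcc, which gives the usual 1.44 / ((R1 + 2*R2) * C).
    """
    if v_ctrl is None:
        v_ctrl = 2.0 * vcc / 3.0
    if not 0.0 < v_ctrl < vcc:
        raise ValueError("control voltage must lie between 0 and Vcc")
    upper = v_ctrl          # threshold comparator trips here
    lower = v_ctrl / 2.0    # trigger comparator trips here
    # Capacitor charges through R1 + R2 from the lower to the upper threshold.
    t_high = (r1 + r2) * c * math.log((vcc - lower) / (vcc - upper))
    # Capacitor discharges through R2 from the upper back to the lower threshold.
    t_low = r2 * c * math.log(upper / lower)
    return 1.0 / (t_high + t_low)
```

With, say, R1 = 1 kΩ, R2 = 10 kΩ, C = 100 nF on a 5 V supply, pulling pin 5 below its default raises the pitch and pushing it above lowers it, which is all a two-tone or warbling alert needs.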

@Dude I’m not so convinced a cam and follower will be better than a robotics platform in the absolute general case.

Motors and drivers are getting *really* cheap these days, and *really* reliable. Mechanical things break and wear out too, but they usually can’t detect, report, or compensate for the fault, or shut themselves down. What’s more likely, the firmware crashes on your multimotor system, or an old sock jams up the simple system with one motor and a bunch of linkages, and a gear grinds up? I don’t think there’s any way to know without all the details, but my best guess would be that more software control and monitoring will probably be better in an average consumer environment.

Maybe not in a jet engine, or a medical device, but I’ll leave those to the mechanical engineers.

You might be able to modulate pin 5 on a 555 timer… but with what? The more control you want over the result, the more parts you need to add, and the harder it is to make changes. You might wind up using more power. Maybe next year, they find out some DIN standard warning tone reduces accidents in one setting by 30%, but it takes 8 chips to do it analog.

Something like an ATtiny85 is already so abuse tolerant that I wouldn’t even consider its failure rate till after I was done looking at the failure rate of almost everything else in the system.

In space where one mistake means you’ll wind up like Sam Jones in a Leslie Fish song, by all means, do whatever your bazillion years of experience and science tells you will work.

But on a home 3D printer, washing machine, or an alarm clock, I’m way more worried about the buttons on the side than any of the not-power-handling electronics wearing out or doing anything unexpected, and I’m actually a little *more* worried about analog in a lot of cases, because it doesn’t have any checksums or anything for noise on long wires.

> in the absolute general case

No, but it gets pretty close. I mean, if you want a kitchen cabinet to swing open in a certain way, would you a) design a bespoke linkage that does the job, or b) put in a Raspberry Pi and two miniature stepper motors? That’s the ridiculous example, but you get the point. A huge advantage is that the linkage or cam-follower needs no electricity to function because it is completely passive.

In the case of the 555, the “passive” argument goes back to needing software, and needing to develop/debug software, and then having the issue of updating firmware… That is why the “minimal” solution is also often the most foolproof: if your device is running an entire Linux SoC just to blink some lights, you actually have a million moving bits that can go wrong inside your black box. Who knows what sort of bugs and counter overflows you left behind? With a 555 you know definitely that it will do just one thing until something goes physically wrong with it, and it will keep doing that beyond the end of data retention of your SoC’s flash chip.

>but with what?

The 556 chip was made for just this point. It has two 555’s in it.

> Maybe next year

Regulatory fickleness is a thing you simply cannot design for, because it is arbitrary and to a great extent lobbied in by your competitors to attack the points that you didn’t plan for. If you invent a better mouse trap, all the other companies will cry crocodile tears for the mouse and try to get it banned for being too effective.

If it suddenly became necessary to have the possibility to change the warning tone every year, then you would have to use an MCU – but in general designing for that sort of contingency will leave you with a design that has everything in it, costs a million, weighs a ton, and only 1% of all that stuff actually does what the thing is supposed to do.

>because it doesn’t have any checksums or anything for noise on long wires

It either doesn’t need any because the noise is inconsequential for the action, or it has already been accounted for in the design. For example, a standard 5 V CMOS logic IC is guaranteed to read anything below 1.5 V as low and anything above 3.5 V as high, which leaves a wide noise margin, and Schmitt-trigger inputs add real hysteresis on top of that so noise near the switching point doesn’t toggle the output.
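A toy model of input hysteresis, using the 1.5 V and 3.5 V figures above purely as illustrative thresholds (a real Schmitt-trigger part has its own datasheet values, and all the names here are made up). It compares a single-threshold input against one that only changes state after the signal crosses the far threshold:

```python
def plain_comparator(samples, threshold=2.5):
    """Single threshold: every crossing flips the output."""
    return [v > threshold for v in samples]


def schmitt_trigger(samples, low=1.5, high=3.5, state=False):
    """Two thresholds: the output holds its state anywhere in between."""
    out = []
    for v in samples:
        if v >= high:
            state = True
        elif v <= low:
            state = False
        out.append(state)
    return out


def count_edges(levels):
    """Number of output transitions in a sequence of logic levels."""
    return sum(1 for a, b in zip(levels, levels[1:]) if a != b)
```

Feed both a slow edge with a few hundred millivolts of noise riding around mid-supply and the single-threshold version chatters, while the hysteresis version reports one clean rising edge and one clean falling edge.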

This is like the case of analog vs. digital TV: in one case a transient error will result in a blip on the screen that is over in 1/60th of a second, while in the other case the same error may drop an I-frame packet and cause the picture to freeze for three seconds. Hence why the digital transmission technique absolutely requires redundancy measures, while the analog one simply does not.

A checksum is largely necessary because data corruption along serial links can cause the MCU to misbehave in weird ways, crash or lock up, which the properly designed analog circuitry simply couldn’t do, or would instantly recover from. The digital system is often trying to be too economical about its construction, which then results in situations where an error along a single wire (like a CAN bus) causes unrelated problems elsewhere and the entire system goes down, which then requires that you place an independent (often analog) safety circuit around it to nanny the MCU.
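For a sense of what that checksum step involves on the digital side, here is a minimal sketch assuming a made-up frame layout of payload bytes followed by one CRC byte. The CRC-8 polynomial 0x07 is a common choice, not something specified in the thread, and the function names are hypothetical:

```python
def crc8(data, poly=0x07):
    """Bitwise CRC-8 over a bytes-like object (initial value 0, no reflection)."""
    crc = 0
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 0x80:
                crc = ((crc << 1) ^ poly) & 0xFF
            else:
                crc = (crc << 1) & 0xFF
    return crc


def make_frame(payload):
    """Sender side: append the CRC so the receiver can check the frame."""
    return bytes(payload) + bytes([crc8(payload)])


def read_frame(frame):
    """Receiver side: return the payload, or None if the frame is damaged."""
    if len(frame) < 2:
        return None
    payload, received = frame[:-1], frame[-1]
    if crc8(payload) != received:
        return None  # drop it rather than act on corrupted data
    return payload
```

A flipped bit anywhere in the frame makes `read_frame` return `None`, so the worst case is a dropped message rather than the controller acting on garbage.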

Then finally there’s also the difference in philosophy between centralization and modularity.

You can build a device where every aspect is controlled by one busy computer in the middle, or you can build it so every function is a module with a simple interface, such as an input that signals “Go”, and an output that says “I’m done”. These modules can then be chained together in series and parallel, operated by a central logic or by themselves. Since they are modules, you can replace any one with a different one and build other systems, or improve a part of the system by independently re-designing just that part without affecting the others.
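The "Go" in, "I’m done" out idea can be sketched in software terms as well. This is only an analogy for the hardware modules described above, with hypothetical class and function names; the point is that the chain only ever touches the two signals, never a module's insides:

```python
class Module:
    """A self-contained stage with a 'go' input and a 'done' output."""

    def __init__(self, name, work):
        self.name = name
        self.work = work      # callable that performs the stage's one job
        self.done = False

    def go(self):
        """Run the stage once and raise the 'done' flag when it finishes."""
        self.done = False
        self.work()
        self.done = True
        return self.done


def run_in_series(modules):
    """Each module's 'done' acts as the next module's 'go'."""
    for module in modules:
        if not module.go():
            return False
    return True
```

Swapping one stage for a redesigned one means replacing a single `Module` in the list; the others neither know nor care.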

This is more of a systems automation thing, but you can find the same point in the Arduino world as well. For example, you want to add a servomotor that moves between a couple set points. You could make your MCU generate the signals and spend a whole lot of time re-writing and optimizing the rest of your code around the fact, or you could just plonk in a 555 servo control circuit and fix the timing with a couple trim pots. What you will have made there is an independent module that you can just build whenever you need one, that will work with simple logic – no software, no MCU required – and you can re-use that for the next project. You can even use it as it is: you don’t need to have the program ready to put the motor in the project and poke the wires with a screwdriver to make it move and see where it needs to go. This is concurrent engineering that speeds up development.

The modularity approach favors the “555 method” because you don’t want it to do anything more than what it does, and you want it to be simple to the point that you can see what it does directly from the circuit diagram without referring to some code listing and your bad memory. No black boxes, and don’t overload functionality to the point that the module becomes a project unto itself.

Of course you could have some sort of “do everything” module where you have a re-programmable MCU and connectors for this and that potential purpose, but that would be a waste of time because in the end it would only be a bad compromise and less economical to have.

The module which we call B is supposed to be acting as glue between parts A and C, but because it is so generic it usually doesn’t contain the actual means to interface with these two (unless it’s I2C, SPI, etc.), just the provisions to add the means. That means you merely kicked the can down the road and now you have to design and build the interface between A and B, and B and C, and then program B to perform its function.

That’s exactly what an Arduino is for many, many projects out there, and why people come out to say “You could have done that with a 555”. When you know your thing is to have a button and a buzzer, you better just design both so they can be mashed together directly without the Arduino in the middle. If you also want it to send you an MQTT message every time, that’s your business and you can do it however you like.

@Dude I suppose there’s a whole lot of variance between domains. For something literally as simple as a kitchen cabinet, I’d probably be “a bit miffed” if I couldn’t open it without electricity, especially when that’s a case where mechanical is at its best, a very common task that people understand very well, and has proven itself to be reliable.

Other things, like cars from what I can tell, have proved to be more reliable the more advanced and digitally controlled you get.

Maybe less repairable, but that’s a consequence of proprietary-ness, not complexity, and I’d argue software and electronics are more repairable now than ever, because so many people have experience with them. I’ve always found the mechanical aspect of almost any project to take a lot of time, and there’s invariably some part that requires dexterity that I’m going to risk breaking even if I understand the design perfectly.

High tech is extremely “book learning friendly”, you can be confident it will work by virtue of following the procedure, and there are not really as many steps like “Adjust this with an expensive tool you don’t have, or just kinda eyeball it”.

Mechanical and analog systems just have more possible interactions. Friction, EMI, cable resistance, heat, wear, vibration, parts colliding with each other, corrosion, parts tolerance, weight, G force when someone drops it, and the like, stiffness, mostly have no software equivalents.

There are code bugs, but they aren’t affected by issues in the production line, or in hand assembly, and you can test it extensively with automated tests.

Mechanical is getting closer, with 3D printing and CAD being cheap, but it’s still harder to get right than an Arduino sketch, and there’s still no easy to use FOSS FEA for FDM prints.

And as to modularity and reusability, to a programmer, there is nothing as reusable as an Arduino library. You get it right once, and it’s solved. You don’t have to build or buy anything to use it. Centralized computer control separates function from hardware. There’s no cost or waste to change it, because it’s all done in software.

Most of the time, you probably could do exactly what someone did with a 555 and a few passives. But could the person who did it? And how much time would it take? Would they need to order additional parts? And would it be able to do all the stuff they planned for version 2?

In some cases, analog and mechanical can be more reliable than digital, but it’s a bit of an arcane art, that can only be learned through long experience, probably a high level of intelligence, and some rather expensive textbooks.

Digital is predictable, repeatable, more able to monitor its own state, and one type of microcontroller can replace having to keep a bunch of random gates and timers around. I suppose the word that best describes well done, by the book digital is “Idiot proof”, while getting mechanical right is often a fine art.

>Other things, like cars from what I can tell, have proved to be more reliable the more advanced and digitally controlled you get.

Funny, I have just the opposite impression. Recently a colleague was having troubles with the handbrake jamming in her car, and the issue turned out to be a sensor failure, but it then cascaded down to the point where the engine wouldn’t run and the doors wouldn’t open. In an older car with analog electronics, such faults would simply cause the dashboard lights to go crazy but not affect the ECU or the central locking because they’re different and independent systems.

>to a programmer, there is nothing as reusable as an Arduino library. You get it right once, and it’s solved.

That’s largely because you don’t have to solve the hardware interface. You just assume it’s there, and that you can plug widget X into port Y and that’s it. That’s a perfectly valid assumption in the software world, where what you’re interfacing with is also software in one sense or another. You abstract the hardware layer away, and it just works.

I had a recent experience in trying to make the Arduino stepper library function with unipolar motors and a simple controller: four signals to switch the coils at about 2 kHz max rate. It was supposed to be just that kind of “drop in” job to get it working and move on, but the library (a black box to me) just wouldn’t work right. It generated the correct patterns, but in a weird pin order that wasn’t documented anywhere, and the timing was off because it was built with the assumption that you can plug the four wires anywhere, for example any two wires in PORTD and two in PORTB, which means each bit is written to the registers independently, which obviously leads to the problem that the signal pattern doesn’t update at the same time. The library functions are also blocking, so you can’t do anything else while the motor turns, which is handled by turning the motor a small number of steps at a time, which leads to jittering.

The generic library “works” and affords more flexibility for the user, but it’s a bad compromise in general. So, I wrote my own interrupt-based handler that bangs the bits out, minding the hardware limitations. I could have used someone else’s different library, but again with the black box issue it would have taken me longer to figure out whether that library actually does what I want it to do, and nothing else. It’s quicker and less bug-prone to build the code from the ground up than try to whittle someone else’s “universal stepper library” down to the bits that I actually need, and only those bits so I’m not running some secret sauce that would interfere with something else.
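The timing problem with per-pin writes can be shown with a small host-side model. This is not the Arduino library's code or the handler described above; the coil-to-bit mapping (four coils on the low four bits of one port) and the function names are assumptions made for illustration:

```python
# Two-coils-on full-step sequence, one bit per coil (assumed wiring).
FULL_STEP = (0b0011, 0b0110, 0b1100, 0b1001)


def write_per_pin(port, pattern):
    """Update the four coil bits one at a time, as separate pin writes would.

    Returns every value the port passes through on the way.
    """
    states = []
    for bit in range(4):
        mask = 1 << bit
        port = (port & ~mask) | (pattern & mask)
        states.append(port)
    return states


def write_whole_port(port, pattern):
    """Update all four coil bits in one write, leaving the upper bits alone."""
    return [(port & 0xF0) | (pattern & 0x0F)]
```

Stepping from `0b0011` to `0b0110` one pin at a time passes through `0b0010`, a coil pattern that is not part of the sequence, while the single port write goes straight to the target. On real hardware the in-between state only lasts a few instructions, but that is the skew being described.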

If for example I would need the Timer1 for something else, I would have to use a different stepper motor control scheme. Maybe I can still use Timer1 for both purposes, but I have to make a special case where every fourth time it does X instead of Y… etc. etc. There is no “get it right once” here, because many cases are different and with contradicting requirements.

> it’s a bit of an arcane art, that can only be learned through long experience

There are literally community college courses on the subject that you can take, plus the plethora of white papers from manufacturers, a web full of tinkerers, or just picking up the datasheet of your favorite MCU and reading it for once.

You might find it interesting for example that your Arduino has an analog comparator in it, so you don’t have to waste time to analogRead() a value and compare it in software, but instead you can have the hardware generate you an interrupt or a status flag whenever the reference voltage level is met. Of course this interferes with the Arduino environment by blocking the serial port, so you can’t actually use it…

Oh, sorry, the AINx inputs are on PD6 and PD7 on the ATMega328 so you can use them with the Arduino Uno. But if you connect the reference voltage to the ADC mux internally, then analogRead() function stops working. It will probably do that anyways when you touch any of the relevant registers.

>Digital is predictable, repeatable, more able to monitor its own state

Except when it crashes and fails to monitor itself. Often times “digital” is just the inmates running the asylum, because there’s exactly one computer doing everything, one bus for everything, etc.

Even if you have a double redundant computer system, it is often not enough. I know of at least one case where the “safety” was a backup computer system that took over control if the first one failed, but then the same fault that tripped the first computer tripped the second one as well, and the factory burned a million dollar motor.

In the safety directives, you’ll find requirements for independent safety circuits and independent logic exactly because you can’t trust the computer to run right and monitor its own state. If you want the thing to have a CE marking for example, there are certain rules: It is not enough that your main controller can detect a fault condition, you also need an independent safety system, with double redundant switches, cables, etc. to check the main controller. In order to avoid liability, you must be able to show that in case of a fault the system goes into a safe state /regardless/ of what your computer is doing. The MCU can’t be monitoring itself to be safe.

This is a difference between active and passive safety. You want to talk about predictable and repeatable, you design with passive safety. Active safety assumes certain things, such as that you have power to the device, that your communications work, that your software is running… for example the safety buzzer: if your MCU just crashed, it won’t sound the buzzer when you press the button. If instead you built the 555 circuit that makes the sound on a press of the button and also sends the information down to the MCU, this is more passively safe because it doesn’t depend on what the MCU is doing. The warning is issued by the hardware whether the software notices it or not, so you can still sound the horn of your car even if the CAN bus controller has crashed.

Active safety is about reacting to failures, and what you would prefer to react to is the condition where the passive safety has already brought the system down to a safe state. This often means mechanical interlocks and switches that save you from stupid things. For example, if your washing machine door might be forced open because of a latch failure, it needs a switch that will physically cut the power to the motor. It’s not enough that the MCU can detect the door opening and turn the motor off, because eventually you’ll run into the case where the MCU crashes and the motor keeps spinning, and the user comes in and forces the door open.
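To make the washing machine case concrete, here is a minimal sketch in Python that models the two arrangements. Everything in it (the `Washer` fields, the function names) is made up for illustration and is not taken from any real appliance firmware. The only point is that a door switch wired in series with the motor supply cuts power even when the simulated MCU has crashed.

```python
from dataclasses import dataclass


@dataclass
class Washer:
    # All fields are hypothetical, chosen only for this illustration.
    door_closed: bool = True       # state of the physical door switch
    mcu_alive: bool = True         # False models a crashed controller
    mcu_wants_motor: bool = True   # last motor command latched by the firmware


def motor_powered_software_only(w: Washer) -> bool:
    """Active safety only: the MCU has to notice the door and react."""
    if w.mcu_alive and not w.door_closed:
        w.mcu_wants_motor = False  # a running firmware turns the motor off
    # A crashed MCU never updates its output, so the old command stays latched.
    return w.mcu_wants_motor


def motor_powered_with_interlock(w: Washer) -> bool:
    """Passive safety: the door switch is in series with the motor supply."""
    return w.door_closed and motor_powered_software_only(w)
```

With `Washer(door_closed=False, mcu_alive=False)` the software-only version still reports the motor as powered, while the interlocked version does not, whatever the MCU is doing.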

Analog often has the same issue though. The safety standards require triple redundancy lockstep logic and the like, but the more important point is that the digital controls are considered best practice at all.

“The digital way”, when properly implemented, requires a lot of complexity, that’s just the nature of it. If you try to do digital, but not actually follow digital best practices, you’re gonna have a bad time.

Analog systems also use all kinds of redundancy as part of their best practice. Switches wear out and sensors get clogged with old peanut butter. They also rely on extremely high quality parts.

You might have worked with a cam or a linkage that was more reliable than a digital control module, but it was probably a pretty nice one. Systems that aren’t life safety critical are usually very cost sensitive, and need to be operated by untrained users.

I have seen probably a dozen eBay knockoff Atmega328s in real systems, but I’ve yet to see a failure definitively caused by one. I’ve seen broken wires, plenty of bad microswitches, unknown faults in analog systems that nobody debugged because we were upgrading, people blowing stuff up with bad wiring connections, and the like.

I’ve also seen a lot of power supply and power handling failures, and lots of issues with anything hand-assembled, obviously more often with higher parts count.

I’ve also seen a lot of code bugs, but once something is working and tested, it rarely needs more than a power cycle and a bug report, and doesn’t often wear out or permanently fail.

Analog excels at completely hard cutting something, when you need to guarantee it doesn’t operate, as in a washing machine, and it does extremely well when the total system has a small and defined scope, and every part is high quality.

In your car horn example, you are probably right that the analog horn might be just a little more reliable, in a simple setup without dozens of other features, and where every part was very high quality.

But there’s a lot that can go wrong in a tangle of dozens of non-redundant individual wires, to control horns and lights and various things that all have their own failure rate that may be higher than the digital.
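A rough way to put numbers on that, as a sketch only: if every wire or part in a non-redundant chain has to work, and failures are assumed to be independent (a big assumption, given the common-fault story above), the chain's reliability is the product of the individual reliabilities. The figures below are invented for illustration, not measured failure rates.

```python
import math


def series_reliability(part_reliabilities):
    """Probability that every part in a non-redundant chain works.

    Assumes the parts fail independently of each other.
    """
    return math.prod(part_reliabilities)


def redundant_reliability(r, copies):
    """Probability that at least one of `copies` identical parallel parts works.

    Also assumes independent failures, so it says nothing about a single
    fault that takes out every copy at once.
    """
    return 1.0 - (1.0 - r) ** copies


# Hypothetical example: 50 connections, each 99.9% reliable.
harness = series_reliability([0.999] * 50)
```

Under those made-up numbers the 50-connection harness comes out at roughly 0.95, noticeably worse than any single connection in it.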

Analog shutoffs and passive safety aren’t going anywhere (Although passively harmless is one step better than passively safe, where possible), but I think there’s a really good reason the general direction is towards more computer control.

Getting that level of reliability with analog systems often means expensive parts and expert users, or it means giving up features, whereas digital can get to 99.9% very cheaply and easily, because all the tricks and redundancy are easier to manage than thousands of wires or hundreds of chips on a board.

Analog is great for getting that extra 0.01% of errors that can cause disasters out, but usually you still need a computer to tell the operator when he’s made a mistake anyway.

> it does extremely well when the total system has a small and defined scope, and every part is high quality.

Which is why the analog/hardware solution often excels, or is just as good, in the case of the modular design where each module IS small and definite in scope, and possibly designed by/for someone else as a part of a larger project.

I don’t think it generally needs every part to be “high quality”; that is merely down to your required tolerances and/or whether the part is subject to a lot of wear and tear. For example, if a switch is expected to open once within the lifespan of the machine, you might as well make it a strip of foil that breaks in two, essentially a mechanical fuse that is replaced every time, instead of putting in a special mercury wetted hermetically sealed reed switch designed for infinite cycle life.

> because all the tricks and redundancy are easier to manage than thousands of wires or hundreds of chips on a board.

I think that’s describing an attempt to replace a centralized computer controller with an equivalent analog computer, which isn’t what I’m talking about. I’m talking about each part of the machine doing its own thing, using suitable mechanical and electronic hardware means that are minimal, elegant, and fit for that particular task, so the central controller needs to be nothing more than a dumb sequencer, or the system can run without any central control at all, just one module passing information along to the next. Break down the problem into smaller pieces, and the complexity goes away into individually solvable easy problems.

Although if you really did go down the way to build an actual analog computer instead, the analog computer could be more elegant and simpler. They often were by the sheer necessity of having a minimal parts count in a time when a single transistor cost dollars and adding another valve would be another filament to burn out in X hours. A humble op-amp or two can do magic, but then we’re getting to the point that you’re making, which is turning the design into an esoteric exercise in analytical math and voodoo tricks, which is something that people with programming, product design, or systems automation background rarely have especially these days.

>Systems that aren’t life safety critical are usually very cost sensitive, and need to be operated by untrained users.

I’m trying to think of a situation where such down-to-cost systems would be the case, but I find when you go down that rabbit hole you instead get ASICs that are single purpose – essentially the analog hardware solution crammed onto a single silicon chip and hidden under a black glue blob. Their designers are again “analog wizards” who take the internals of a 555 and put them in the design to avoid throwing some CPU core in just to bling a LED.

The “Put an AVR in it” -solution exists in the medium volume, medium price, short lifespan product category like in a smart lightbulb, or a wireless SD card, where the company expects to turn out a product that remains on the market for a couple years until it either fails to attract attention, or it is replaced by cheaper clones.

Here’s my large format 3D-ish foam cutter. Ended up making an 11’ x 8’ one too https://adamblumhagen.net/foam-cutter/.

That’s awesome! And with the polargraph-style design, you can scale it up and down very easily.

I’ve seen some videos of a smaller dual-polargraph XYUV wire cutter like yours, but I don’t know how far that ended up. In principle, it should work fine because the wire is light. (Maybe with a third motor for tension on the bottom to control the swaying and hold it in plane?)

I honestly wanted to look into this, but was scared off by having to write my own motion control. I probably shouldn’t have been.
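For what it's worth, the geometric core of polargraph motion control is small. The sketch below is a generic illustration, not the code from the linked build: it assumes both motors sit at the same height, treats the pulleys as points, and puts the origin at the left motor with y pointing down. The names `cord_lengths`, `cord_steps`, `motor_spacing`, and `steps_per_mm` are all my own.

```python
import math


def cord_lengths(x, y, motor_spacing):
    """Return (left, right) cord lengths for the point (x, y).

    Origin is the left motor; x runs toward the right motor, y runs downward.
    Pulleys are idealized as points at the same height.
    """
    left = math.hypot(x, y)
    right = math.hypot(motor_spacing - x, y)
    return left, right


def cord_steps(x, y, motor_spacing, steps_per_mm):
    """Convert the two cord lengths to absolute step counts (rounded)."""
    left, right = cord_lengths(x, y, motor_spacing)
    return round(left * steps_per_mm), round(right * steps_per_mm)
```

A dual-polargraph XYUV cutter would presumably run this twice per move, once for each end of the wire. Acceleration planning and cord stretch are left out entirely.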

Generating the slicer that takes arbitrary 3D models and converts them into slices for this machine is a really cool (difficult!) problem, and it looks like that’s where you are now.

I wonder if you can’t first make a low-poly version of the model and then use the vertices to feed the cutter, improving resolution as necessary. Might need to find a low-poly algorithm that does trapezoids instead of triangles, so that each side of the plotter is always in motion.
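One simple way to keep both sides of the plotter moving, sketched here as an assumption rather than as the low-poly approach suggested above, is to resample the outline on each side to the same number of points by arc length and pair them up index by index. Each consecutive pair of targets then spans a four-sided patch between the two outlines. `resample` and `paired_moves` are hypothetical helper names, and each path needs at least two points and `n` at least 2.

```python
import math


def resample(path, n):
    """Return n points spaced evenly by arc length along a polyline."""
    # Cumulative distance travelled at each vertex of the input polyline.
    dists = [0.0]
    for (x0, y0), (x1, y1) in zip(path, path[1:]):
        dists.append(dists[-1] + math.hypot(x1 - x0, y1 - y0))
    total = dists[-1]
    out = []
    seg = 0
    for i in range(n):
        target = total * i / (n - 1)
        # Advance to the segment that contains the target distance.
        while seg < len(path) - 2 and dists[seg + 1] < target:
            seg += 1
        span = dists[seg + 1] - dists[seg]
        t = 0.0 if span == 0 else (target - dists[seg]) / span
        (x0, y0), (x1, y1) = path[seg], path[seg + 1]
        out.append((x0 + t * (x1 - x0), y0 + t * (y1 - y0)))
    return out


def paired_moves(side_a, side_b, n):
    """Pair resampled points as (x, y, u, v) targets for a 4-axis cutter."""
    a = resample(side_a, n)
    b = resample(side_b, n)
    return [(x, y, u, v) for (x, y), (u, v) in zip(a, b)]
```

Pairing by arc length is the crudest possible matching. For shapes with sharp features you would probably want to pin corresponding corners together first and resample between them.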

Between writing the newsletter and reading it again, I got an Arduino Mega / Ramps 1.4 setup running mine for four independent axes, cut a few demo pieces, and then burnt out the Mega clone by running too high a voltage on the motor shield. Back to eBay…

But I got my wing-generation code figured. It’s a much easier problem. :)

I usually try my best to reduce the actual physical parts count, weight, and size, but I never try to simplify the software, and I always plan for a fairly powerful computer and communication chip.

And there’s a few other parts, like self-protected drivers and H bridges instead of discrete MOSFETs, etc, that always seem pretty clear. Sensors and anything else that gives more flexibility and options for the controller can almost always be put to some kind of good use later.

The value of minimalism, outside of educational projects, as far as I’m concerned, is to reduce waste and weight. Something like a ball bearing instead of plain, that will make the thing last longer, or future-proof electronics that make everything super convenient and reduce the temptation to upgrade to a better one, can reduce the overall amount of material needed.

Code is free and light. I’m perfectly happy to use 1000 lines to save a spring or a chip, but I’ll definitely think twice every time I put a steel rod or something in a project.

Exact Constraint is a very interesting design method which is very useful in removing unnecessary material, while getting a better result that is easier to build.

Code is free, but programmer-hours are not, and a lot of the hours are spent just staring at the screen going “hmm…” which makes management very unhappy. Any bit of code you have, you also need to document and test for function if you were being serious about it.

Many people discount software as something you get on the side, because “it’s just a few lines of code, shouldn’t take long”, or “It’s just a minor change. Please add X, Y, Z”, which then requires a complete refactoring of how the program is built. This then results in products with c**p for software: bloated, generic, barely functional, ill-thought-out junk that cheapens an otherwise nice gadget. When you haven’t allocated any resources to do the software, the software guys just cram the whole toolbox in it and cover it up. This is the equivalent of installing a piece of machinery by a permanent application of locking pliers: no time to get the correct nuts and bolts, just fix it by any means and move on.

That’s another reason why I prefer to design things with their functionality built right into the hardware rather than being controlled by software from elsewhere, because I know the software will be neglected, done by someone who doesn’t understand the product, doesn’t have a deep insight to its function, and ultimately doesn’t care because they have a deadline and a cost target to meet. There’s no luxury of trying out different schemes and what-ifs: you only get so many billable hours to spend, so you make it “work” and the rest is someone else’s problem.

Sometimes that someone is the future me, which means I’m saving myself some work and bother by designing the part to already do the thing it’s supposed to do all by itself. It’s then easier for me to change this function or behavior, because I don’t have to go back to the big software blob somewhere out there and change that as well to make it co-operate with the changes.

Basically, suppose a customer wants to change the motion profile of an actuator. They want it to go zigzag instead of zugzug. Alright, well, where do I have to go to make that change? If the design is modular, then the change can be made within the context of the actuator itself, but if it’s centralized to a big program somewhere else, then you’re dealing with a game of Jenga where pulling one block might topple the whole tower, and you might not have the permission or access to make that change in the first place.

I’ve only worked for small companies, where there is no distinction between the hardware and software people, or even between the designers, field engineers, and business people.

If using software meant I had to trust someone else’s software, and they weren’t a vendor or programmer I specifically had a lot of respect for, I might well wind up thinking like you after a bit.

Because I have, in fact, seen large dump loads of crap software. Most of it actually written almost begrudgingly by people who prefer the analog way, and refuse to actually learn software development, because to them, all software sucks.

Or, sometimes it’s because they insist on reinventing everything and not using any standards, and writing everything from scratch in C++.

Or sometimes it’s because it’s a commercial product that has to be locked down and vendor lock-in-ified, and you can’t do things the easy way, so all your time is spent securing devices against your customers.

And, when proprietary cloud services and the like come into play, I can definitely see the appeal of analog.

But a typical project for me is far more in-house, and if I’m designing hardware, I’m probably writing the firmware myself too, without anything but FOSS libraries. The only people who will touch the code are part of the team.

For me, changing an actuator profile (something I actually did recently, albeit on a completely non-critical tiny system) would be more like:

Log into the webUI, mess with the cue list, jump to the start cue to test it out, hit save, use a script to pull all that cue data from the controller to my laptop, commit it to the Git repo.

I’m 15 so for me that means I always pick the cheapest option. Can’t afford to go overkill really. As the article says, this is not the easiest option, so it does not lead to me finishing many projects. I think I’ve developed enough skills now though that I can successfully take the harder path.

I am at a very different point in life and yet I am also doing the cheapest option. It is “easy” for a noob to “pay to win” the “look at me” game on HaD by buying lots of premade modules and/or expensive parts. It is fun to do something with a $0.20-$0.30 microcontroller over the usual overhyped Arduinos.

My projects might not work because I refuse to copy/paste some Arduino or other large bloated framework, but I might end up learning something on the way or from the failure. I feel it is more of a personal challenge to develop my own designs. If 100% of my projects get finished, then I am not challenging myself enough.

Yeah, that’s exactly what I do. I don’t use the 200kb ESP8266 to blink an LED, I learn about the non wifi version that’s a tenth of the size. Learning something matters more to me than finishing a project.

If you really need that project to work – like in the case of this hot wire cutter perhaps being required for your other projects – I’ve found it’s far better to do a little maths, and then overspec so it will definitely work. It’s far cheaper to buy slightly more expensive materials the first time round than to have to buy two lots.

I don’t finish a lot of projects, at least in any kind of timely fashion, as being broke does really slow one down at times. Definitely feel like it leads to learning more before I even try a project though – a great deal of background reading to find out if it’s really viable. But that is paper knowledge and doesn’t always mean much; till you try to apply it you just won’t know if you really understand it well enough.

So I love both of your ethos, where ‘learning matters more’ and you want to fail sometimes to be sure you are ‘challenging yourself enough’. I’d have to say I wouldn’t call any of my projects a failure though, just not finished yet… I don’t think I’ve ever properly abandoned an idea, yet at least, just found out I need more time to make the tooling to make the tool to make the…. But as I started some projects I’ve still to finish more than 20 years ago… Does that count as failure yet?

“Does that count as failure yet?” Not until you give up.

(But there’s no shame there, either, if you’ve learned that you’re chasing something impossible. Figuring out that you can’t do something is good too.)

In this case, I could have sunk at least $100 into precision rails, or maybe compromised on the travel and precision by using drawer slides, but half of the “fun” is working out a cheap and cheerful not-super-precise, but scalable axis. I’d had half of the work done from a previous drawbot, so there’s that too…

Relevant Akin’s laws:

33. A good plan violently executed now is better than a perfect plan next week.

34. Do what you can, where you are, with what you have.

35. A designer knows that they have achieved perfection not when there is nothing left to add, but when there is nothing left to take away.

36. Any run-of-the-mill engineer can design something which is elegant. A good engineer designs systems to be efficient. A great engineer designs them to be effective.

My #1 rule of thumb: every termination costs a dollar. Doesn’t matter whether it’s a 64-pin QFP ADC or a 0603 0.1uF cap. Most of that cost is time. Which is absolutely not free, not even for fun or educational stuff.
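As a back-of-the-envelope illustration of that rule, here is a tiny sketch that just counts terminations. It reads the rule as one dollar per pin or pad, which is my interpretation, and the parts list is made up.

```python
def termination_cost(parts, dollars_per_termination=1.0):
    """Estimate cost as (total terminations) x (dollars per termination).

    `parts` maps a part name to (terminations per part, quantity).
    """
    total_terminations = sum(pins * qty for pins, qty in parts.values())
    return total_terminations * dollars_per_termination


# Hypothetical board: one 64-pin QFP ADC plus four 0603 decoupling caps.
example_bom = {
    "64-pin QFP ADC": (64, 1),
    "0603 0.1uF cap": (2, 4),
}
estimate = termination_cost(example_bom)
```

By this count the four "free" decoupling caps add eight terminations on top of the ADC's 64.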

BTW some of my projects never finish to my original vision because they work well enough as is. I don’t want to take them offline for weeks just to add the extra features that I had provisioned for.

I’ll join you with that one – once it’s doing the job you needed it to do, it’s good enough – I’ll add the next feature when it stops working

I hear ya. I’ve been roasting coffee in the same ghetto coffee roaster setup (with a trivial popper replacement) for like 9 years now, even though it was meant to be a quick lashup. It just works…

I argued here that the power of “working” is a good reason to start underdesigned, but it’s probably also a good reason to finish up a project so that it’s nice looking. Because I’m not going to want to do that part later on either…

I kinda do both ends, or one extreme to the other. If I think I know the problem inside out, I cobble something that works just barely. If I think the problem is not well understood, and will reveal depths or even worse, get fractal, then I start with overkill hoping for lots of slack to get around new aspects as I make it up as I go along.

Now one thing in particular that’s a maximum time, minimalist materials exercise, is a thing I’ve been doing as “therapy” when the electrons won’t dance to my baton/wand/sonic-screwdriver (Or insert appropriate metaphor in your current operating paradigm). It’s… making device stands out of cereal box cardboard (Or whatever box, just that type of cardboard) .. right, just laminate 10 layers with epoxy and use it like plywood or plexi LOL… I guess I just stumbled into doing this and don’t really have superclear objectives, but it’s some notion of making a universal, rescalable, plan for a sturdy stand, that will hold anything from large laptops to phones and tablets to meters on your bench, using minimal amount of card, at various angles you want (at build time). Now it’s super darn simple to just make a phone stand or one for a light tablet like a kindle fire 7 that will last you a week, it’s yer old plate stand format, back to back Js or Ls.. but dogear a corner or nudge it a bit too hard and it’s gone topply. So, I’m trying to improve performance to hold heavier objects and take a few knocks, even maybe up to holding those cheap 24″ TVs you scored that were on wall mounts so had their pedestals thrown out. All this proceeds at a “when I’m fed up with something else” or “when I feel like dicking around” pace, so really it’s only got as far as testing easily assembled beam structures.. you guessed it, triangular is winning. However, arranging joints and interfaces between the beams in ways that spread stress so as not to crease or crumple them is harder than you might think. At least in ways that are not fiddly to assemble or require invention of an endoscopic stapler or something. I guess I might be aiming for fastener agnostic as well, trying not to make it matter if you tape, staple or glue.

Maybe it’s a stupid way to make a tablet stand after all, but it’s cheap, you can have one the right height, shape and angle beside the PC for doc handling, one on the bench at 45 degrees maybe and one to fit a narrow space where you charge it. Ultimately I guess, I’m a) just dicking around but b) hoping to come up with more universal insights into thin sheet material use, such that I could build sturdy and light, large structures out of aluminum eavestroughs (rain gutters) or drip edge flashing or something like that.

One thing you should definitely do with laminating your own multi-ply cardboard is preshape it on a wood/plastic form so the laminated blank already has the stiffening curves and beam shapes you wanted – Though I think you’d be better off wetforming the sheets and using woodglues for that. Don’t need to saturate the paper/card, just get it properly damp – I use a mist spray bottle and just give it a spritz more as needed.

Wetforming paper/card really is magic, turns some of the really angular origami blanks into such lovely curves and really does stiffen things up, it sticks to that formed shape really surprisingly well…. Though never ever get roped into making 200 roses for your best friend’s wedding tables – It puts you off folding for a while. The end results are astonishingly pretty, and very durable though. So I guess it was worth it.

Well, if a machine does what you want and you take a piece off and it still does what you want, it’s overbuilt.

Until you find a certain pattern it can’t cut well without the missing brace, or a heavy material it can’t handle with a crappy bearing, or an operator who leaves it unattended and it starts the house on fire, because you removed the safety shutoff, or you notice a subtle annoying buzz it makes without one part….

Or when you try to move it, and you realize the only reason the simpler version worked was because everything was adjusted *juuuust* right.