The 30th anniversary of the World Wide Web passed earlier this year. Naturally, this milestone was met with truckloads of nerdy fanfare and pining for those simpler times. In three decades, the Web has evolved from a promising niche experiment to being an irreplaceable component of global discourse. For all its many faults, the Web has become all but essential for billions around the world, and isn’t going anywhere soon.

As the mainstream media lauded the immense success of the Web, another Internet information system also celebrated thirty years – Gopher. A forgotten heavyweight of the early Internet, Gopher saw its popularity plummet during the late 90s, and it nearly disappeared entirely. Thankfully, like its plucky namesake, Gopher continued to tunnel across the Internet well into the 21st century, supported by a passionate community and with an increasing number of servers coming online.

What is Gopher?

In the northern summer of 1991, while the primordial Web was being bootstrapped at CERN, the first Gopher servers were coming online at the University of Minnesota (PDF). Spawned from the need for a campus-wide information system, the Gopher client and server software eventually escaped out onto the wider Internet, and into the hands of eager early adopters.

The name ‘Gopher’ is a play on ‘Go-fer’, usually an employee or volunteer that fetches and delivers item requests. In the same way, the Gopher client was designed to be able to retrieve information from a Gopher server, and present that information in a human-readable format. The gopher also happens to be the University of Minnesota’s mascot.

For a time, Gopher was the new hotness. Users could search for and find text files by way of a simple hierarchical menu structure, reminiscent of Web hyperlinks but more regimented in its implementation, and not unlike the file and folder structure of contemporary operating systems. Setting up a Gopher server was relatively easy and only required modest hardware – reportedly, the first Gopher servers used off-the-shelf Apple computers, including the Macintosh IIci and SE/30 running A/UX (Apple UNIX).

While a resource in its own right, Gopher acted as a jumping off point to other parts of the Internet. If something wasn’t available on Gopher, chances were you could tunnel from Gopherspace all the way to the Web, or to an FTP server, or to a newsgroup, to find the content you were looking for. Veronica (or “Very Easy Rodent-Oriented Net-wide Index to Computer Archives”) was a robust Gopher server search engine, and was constantly expanding its database of Gopher sites. Other services such as Wide Area Information Server (WAIS) were also available.

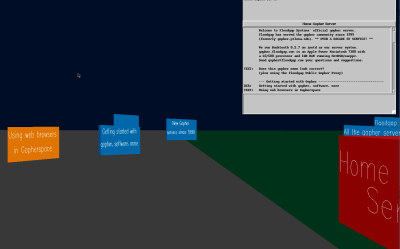

While Gopher had a promising start, the Web was destined to become the preferred method of accessing information on the Internet. Hypertext steadily grew in popularity among the masses, fueled by the likes of NCSA Mosaic, one of the first mega-successful Web browsers. The rigidity of Gopherspace was starting to look a little tired when compared with the colorful and illustrative Web, which was becoming increasingly ‘surf-able’, thanks to speedier modems and their ability to support a richer multimedia experience. Despite some truly fascinating experiments such as GopherVR, community concerns over licensing costs imposed by the University of Minnesota were the figurative straw that nearly saw the end of Gopher forever. By the late 1990s, Gopher servers had become an endangered species, while the Web reigned supreme as the premier Internet experience.

Why Gopher Today?

It’s been decades since Gopher was last in vogue. There is a desire, nay, an assumption today that accessing the Internet must be a bombastic multimedia experience, a characteristic that bolstered the early Web to where it is now. With its roots as a relatively pedestrian (if efficient) method to transfer research data, the Web is now central to how we consume media. It goes without saying that the Web has eclipsed Gopher in almost every respect, and it’s understandable that some would see Gopher as a peculiar, vestigial relic of the old Internet.

I first logged onto Gopher earlier this year, long past its prime, and was instantly met with a certain je ne sais quoi. Nostalgia and desiderium came in waves. Here was a service quite utterly different to the Web, but not for the reasons that I expected.

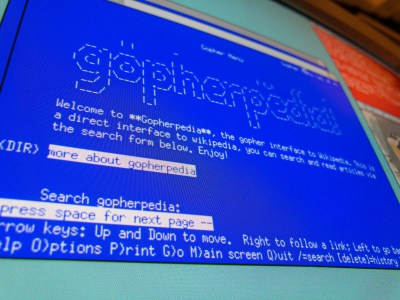

The first session started over at gopher://gopher.floodgap.com:70, but it didn’t take long to start traversing Gopherspace at warp speed. The text-only interface certainly made things speedier compared to the relatively bloated Web, but the real speed came from the simplicity of the layout – regimented menus, all alike but clearly labelled, meant that navigation through Gopherspace was effortless. Text-only formatting ensured that every single piece of content was as legible as the next. Weather and news were easily accessible, as was software (especially for vintage computers), phlogs (Gopher blogs) and more. Veronica-2, a revision of the original Veronica search engine, is Gopher’s answer to Google (although ‘I Veronicaed it’ isn’t as catchy). Further digging revealed modern proxies for reddit and Wikipedia, both welcome finds that further delayed my inevitable return to the Web.

Using Gopher was not only intuitive, it was fun. Framing Gopher as a vestigial relic of the old Internet doesn’t do it justice – using the service felt genuinely informative, and in many respects it bested the Web.

How to Gopher

Modern clients have made getting onto Gopher easier than ever – or at least as easy as it used to be, as today’s browsers have dropped support for Gopher. The Overbite project has solutions for using Gopher on older versions of Firefox, but there are several standalone GUI clients available for all major operating systems. Lynx naturally supports Gopher, and is a great choice for computers of any age. Clients are also available for modern smartphones.

If finding a client is too big a hurdle, there are proxy services available that allow access to Gopher content over HTTP, such as the Floodgap Public Gopher proxy. This allows Gopher sites to render in almost any modern HTTP browser, and is a great choice for first timers.

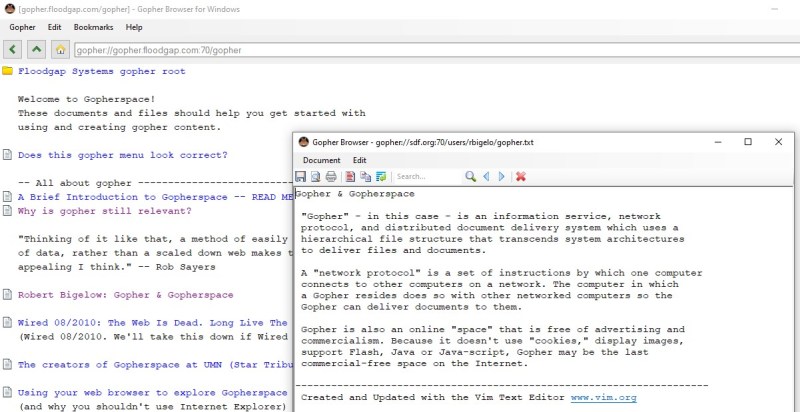

Speaking of Floodgap, users old and new will often find their Gopher sessions starting on the Floodgap server at gopher://gopher.floodgap.com:70/. This server has been accessible since 1999 and lists all the major services and sites that Gopher has to offer. Floodgap also has plenty of helpful tips and tricks for new Gopher users, and does a great job at explaining the Gopher philosophy.
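For the curious, here is a rough sketch of what a client does behind the scenes when it visits an address like the one above. As described in RFC 1436, the client opens a TCP connection, sends a selector string followed by a carriage return and line feed, and reads until the server closes the connection. The function names `build_request` and `fetch` are made up for this illustration, and decoding the reply as UTF-8 is an assumption rather than something the protocol promises.

```python
import socket


def build_request(selector: str = "") -> bytes:
    """A Gopher request is just the selector followed by CRLF.

    An empty selector asks the server for its top-level menu.
    """
    return selector.encode("utf-8") + b"\r\n"


def fetch(host: str, port: int = 70, selector: str = "", timeout: float = 10.0) -> bytes:
    """Send one request and read the reply until the server hangs up."""
    with socket.create_connection((host, port), timeout=timeout) as conn:
        conn.sendall(build_request(selector))
        chunks = []
        while True:
            data = conn.recv(4096)
            if not data:  # an empty read means the server closed the connection
                break
            chunks.append(data)
    return b"".join(chunks)
```

Calling `fetch("gopher.floodgap.com")` and printing the result with `.decode("utf-8", errors="replace")` should show the raw menu that a client would normally render as a list of links.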

Once you’ve leapt into Gopherspace, there’s not a lot more that needs to be said on ‘how’ to Gopher. The experience can feel very linear, and this can either be a good thing or a bad thing depending on your point of view. At times, navigating Gopher can feel like browsing a catalogue of files on a local disk, jumping from one folder to the next, examining files, then traveling backwards on the path just traveled, up to another jumping off point. A text-based browser like Lynx feels especially fast for this kind of hierarchical navigation.

Downloading files is similarly effortless, which is a good thing since most Gopher browsers don’t support in-line images (although more are adopting this functionality as time goes by).

Learning ‘how to Gopher’ is, at most, a five minute exercise.

Privacy? What Privacy?

Gopher is very much a product of its time. While this is somewhat charming, there are some concerns around individual privacy.

Encryption? Forget about it. This makes using Gopher an effortless experience for even the oldest of computers, but it’s worth noting the potential privacy issues. Much like the early WWW, your browsing history, form submissions and other information are all transmitted in plain text, meaning that it’s a trivial task to intercept this data. Currently, it’s not a good idea to use Gopher for anything vaguely private or personal. There are proposals and back-of-the-napkin concepts on how to deal with encryption on Gopher, but to date there has been precious little progress in this area. Projects like Gophernicus might be of interest to those looking into setting up a Gopher server with extra security.

In contrast to the modern Web, publicly accessible server logs are most definitely a thing on Gopher, even on relatively popular servers like Floodgap. It’s possible to track (or be tracked) all over Gopherspace through these logs, and it’s something to keep in mind if you’re at all conscious about security and privacy.

Take Your Next Vacation in Gopher Country

Let’s get this out of the way – there is some irony in advocating for Gopher on the World Wide Web. But here I am, doing just that.

It would be all too easy to compare Gopher’s ousting as a viable Internet protocol with many of the other ‘format wars’ from the previous decades — VHS over Betamax, Blu-Ray over HD-DVD. When framed this way, it would make sense to attribute any talk of a Gopher resurgence to technological neanderthals, perhaps those that still have a chip on their shoulder after the ‘war’ was lost in the 1990s.

For better or for worse, we will now always spend the majority of our online existence on the Web. The resounding success of the Web in capturing people’s imagination doesn’t mean that Gopher is a write-off, a relic. These days, it’s actually quite the opposite. Where it once competed for dominance, Gopher now exists in harmony with the Web. HTTP proxies allow access to Gopher content, blurring the lines between the two protocols. Those who decide to dig deeper will realise that Gopher was never really defeated after all – it exists now as it (pretty much) always did, a refuge for people and content that don’t quite fit onto the Web.

Cameron Kaiser, sysadmin for the Floodgap server, puts it nicely in a phlog:

It would be remiss to dismissively say Gopher was killed by the Web, when in fact the Web and Gopher can live in their distinct spheres and each contribute to the other. With the modern computing emphasis on interoperability, heterogeneity and economy, Gopher continues to offer much to the modern user, as well as in terms of content, accessibility and inexpensiveness. Even now clearly as second fiddle to the World Wide Web, Gopher still remains relevant.

My brief sojourn down into Gopherspace started as a nostalgic trip, but has genuinely left an impact. While the Web has long overtaken Gopher as our primary medium for online communication, webmasters would do well to take inspiration from Gopher.

Check out Where Have all the Gophers Gone for more fascinating insights about the rise and near-extinction of Gopher, as well as articles by Minnpost and TidBITS.

Well, you should make a >10 minutes Youtube video about how to gopher properly !

Nice article. Do FidoNet next? :-)

Kermit!

Could you imagine all servers being Apple today?

With zero privacy in life :D

… where Apple don’t allow you to have root access…

I think some lessons on unix user permissions are in order. Maybe you can explain to us how to administer a unix machine without root access.

I mean, folks seem to get by (for the most part) without root access to their unix-based Android phones

Please explain how to set up a gopher server on Android.

I don’t. Rooting is always the first thing I do with a new phone.

To X:

There’s actually an app in the Google Play store that lets you run a Gopher server. “Servers Ultimate”, I use it for git, FTP, and IRC in certain scenarios. Have fun.

Linux is not UNIX

Mr Rimmer misspelled ‘gnu’ . . . happens

Does anyone else remember when you used FTP way back when and you were warned that your download might cost thousands if not tens of thousands of dollars (or some such nonsense)? Maybe it wasn’t FTP, my memories of that distant past are somewhat foggy.

I used FTP way back, but haven’t heard of this. Who was said to have been charged this amount? Was it data transmission between servers routing the transfer that racked up charges?

I wish I remember, and it may have been a specific FTP client on sun workstations or something. It was of course trumped up nonsense all around. My searches have yet to turn anything up, but I remember seeing the warning countless times. Long before the WWW. Hearing about gopher stirred up this ancient memory.

It’s interesting to be reminded of old pricing models. I was watching a TV show from about 15 years ago and one of the characters made reference to “saving your weekend minutes”. Remember when we had a quota on how much time we could talk on the phone? Now phone conversation has all but vanished from our lives.

Absolutely. Cell phones used to be like that. Heck I still have my Panasonic DECT phone with toll-saver feature. It would send your call out the cheapest long-distance provider.

It may have been a specific FTP client (such as on a Sun workstation). It was all complete nonsense intended to inhibit some kind of activity. I have had no luck with searches finding the exact text (or any other mention of this), but I saw the warning countless times back in the day.

It was possible to download patches for software from the company’s BBS, which meant calling long distance with your modem and downloading the patch software, which in some cases could be several megabytes. At 2400 baud it was 58 minutes per megabyte. A 19,200 baud modem would download 1 megabyte in 7 minutes or so. Long-distance charges could build quite rapidly.
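Those figures can be checked with a bit of arithmetic. The sketch below assumes that "baud" here means bits per second, that a megabyte is 1,048,576 bytes, and that each byte costs exactly 8 bits on the wire; `minutes_per_megabyte` is just a name chosen for the illustration.

```python
def minutes_per_megabyte(bits_per_second: int, bits_per_byte: int = 8) -> float:
    """Minutes needed to move one megabyte at a given line speed."""
    megabyte = 1024 * 1024  # bytes
    seconds = megabyte * bits_per_byte / bits_per_second
    return seconds / 60


for speed in (2400, 19200):
    print(f"{speed} bit/s: {minutes_per_megabyte(speed):.1f} minutes per megabyte")
```

Under those assumptions the two speeds work out to roughly 58 and 7 minutes. A serial link that adds start and stop bits sends closer to 10 bits per byte, which would stretch the 2400 figure to about 73 minutes.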

Are you sure that wasn’t UUCP?

A lot of stuff was incredibly configurable. And since it was on some server, until it was on your own computer, administrators could control things.

Pine, for email and Usenet, could be set to not allow posting if your reply wasn’t more than you quoted. It was intended for you to trim the quoting, but I remember enough posts where someone said “I’m adding junk here because I can’t post until I add more”.

Once ppp became the norm, and people ran the software on their computers, nobody else was configuring for you.

I think you are thinking of modem dialers. I’m pretty sure most of those had a warning when you put in the number manually, because there were many 1-900 scams that would charge your phone bill $5-$10 a minute while connected.

Nope, not a modem. Never had anything to do with those from my office at the University, we were among the first folks on the internet. And it wasn’t UUCP either, although those warnings may have been inspired by UUCP related charges since there were UUCP links here and there in the internet in those days.

I was hoping there were some old grey-hairs that would remember the warning from my poor description.

And sorry about the double post above. Sometimes Hackaday either throws my posts away or delays them for 20 minutes like it did this time. Pretty infrequent, but fairly non-deterministic.

I vaguely remember this warning from the early 90s or maybe late ’80s, but I remember it as a joke warning, just to tell people not to get too carried away with a then-limited resource. I wish my memory were clearer.

I was concerned enough that I had one of those RJ-11 A-B switches in my phone line going to my modem so I could physically disconnect when I was not using my PC. Didn’t want my PC dialing a 900 number in the middle of the night.

Our caller ID device in the bedroom did have a in-use light, but all that did was alert us to our son viewing porn at 2 am.

Now kids have their own smart phones, so that particular ship has sailed.

That was Usenet, specifically the newsreader rn.

“This program posts news to thousands of machines throughout the entire civilized world. Your message will cost the net hundreds if not thousands of dollars to send everywhere. Please be sure you know what you are doing.”

It didn’t actually cost that much, but it got the point across.

It seems I do remember seeing that occasionally. It was definitely back in the days of toll dialup, expensive long distance, and charge-per-minute “ISPs” like CompuServe, so it was probably just a disclaimer for those “huge” 5MB files that you were trying to download at 4800baud

I’m beginning to see a pattern here, is it almost like the University of Minnesota trying to break things:

1993 – announced that it would charge licensing fees for the use of its implementation of the Gopher server.

(That Fear, Uncertainty, and Doubt mostly killed off the Gopher protocol. If they had picked GNU GPL 1.0 back then, the Internet would probably be different now).

2021 – Intentionally submitting poor quality and vulnerable patches to the Linux kernel, wasting the developers’ time.

(University of Minnesota is now banned from submitting patches to the Linux Kernel, at least if submitted from a umn.edu email account).

I need to see what they do between now and 2049 to be sure that there is a pattern though :)

Or fill in some of the dates between now and back then. I’m sure they’ve been caught doing similarly stupid stuff on more than one occasion. Minnesota is a boring place. People get up to mischief.

Yep, a mischievous comment that I made earlier has been deleted.

(I almost attended U of MN)

That’s not quite how the UofMN linux thing went down. The people there at the university were concerned that no one was actually looking at commits and that could be a vulnerability. That’s the thing about open source… anyone can look, but is anyone actually doing it? I mean think of those terrible SSH and DNS vulnerabilities that went on for YEARS.

The bogus patches they submitted were intentionally bad to see if they would be caught. Basically pen-testing the commit process. If they wouldn’t have been caught, they would have called back the patches and told everyone of the problems. This wasn’t a nefarious plot to weaken linux… this was a (admittedly misguided) attempt to see if the process was secure and bring the risk to light. The process caught the bad patches.

IMHO the ban was an overreaction. Basically, the maintainers saying “how dare you question us, get out of here forever”. Maybe it wasn’t the U of MN’s role to pen-test the linux commit process, but in the open source world – who’s is it? I was frankly glad that someone was thinking through these possibilities and making sure they got the attention they should have. But the neckbeards got butthurt over it.

While the conventional wisdom says that “licensing fees” killed off Gopher that’s not really the case. Check out my analysis at https://www.scribd.com/document/211995069/Internet-Gopher-Bridge-to-the-Web-Alberti . And think about it – licensing software is now ubiquitous. No, the situation with Gopher was more complex than that.

The Linux Kernel situation was truly a botch – rogue researchers violating what few agreements they’d made after being caught the first time, and a research oversight board that didn’t understand what they were supposedly overseeing. But those issues never made it to the security office at the University of Minnesota, so the damage was done before it could be forestalled or even well addressed by those who would have been, and eventually were, appalled by the whole situation. The University is doing its best to take the lessons learned by the situation to improve its processes so that similar situations are less likely to occur.

Bob Alberti, Internet Gopher co-author, RFC 1436

Not as a practical thing but just for fun I would love to see a gopher interface added to Hackaday. If not the main site perhaps it would just serve the retro.hackaday.com feed.

Imagine seeing someone in a coffee shop with a cyberdeck surfing HaD via GopherVR. That would make it all worth it right there!

https://github.com/LawnGnome/wp-gopher

This is dated, written for WordPress 3.0 and in Python 2 but how hard could it be? Just a little script to parse a request string, run a db query and return the content.

You know you want to do this HaD…..

Well, starting to keep retro.hackaday.com up to date would certainly be a first step.

I never understood why HaD did do so.

+1 on keeping the retro site up to date!

Gopher is such a simple protocol it’s child’s play to implement. I have been running a Gopher server for some time now which mirrors my blog (and some other sites) directly from the database. All with a few lines of PHP and no maintenance. If there were some Hackaday blog API I could easily hook that in to provide all the HaD content through Gopher no problem.
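To give a feel for how little is involved, here is a minimal sketch of such a server. It is written in Python rather than PHP, and a dictionary stands in for the blog database; the names `POSTS`, `menu_line`, `respond` and `GopherHandler`, along with the host and port, are all invented for the illustration and are not taken from any real setup.

```python
import socketserver

HOST, PORT = "localhost", 7070  # hypothetical address for local experiments

# Stand-in for a blog database: selector -> post text.
POSTS = {"/posts/hello": "Hello from Gopherspace.\n"}


def menu_line(item_type: str, display: str, selector: str,
              host: str = HOST, port: int = PORT) -> str:
    """One menu row: type character, then four tab-separated fields, then CRLF."""
    return f"{item_type}{display}\t{selector}\t{host}\t{port}\r\n"


def respond(selector: str) -> str:
    """Map a request selector to the text sent back to the client."""
    if selector == "":
        # Empty selector: list every post as a text file (item type '0').
        rows = "".join(menu_line("0", sel.rsplit("/", 1)[-1], sel) for sel in POSTS)
        return rows + ".\r\n"  # a lone full stop ends the menu
    if selector in POSTS:
        return POSTS[selector]
    return menu_line("3", "Not found", "") + ".\r\n"  # item type '3' is an error


class GopherHandler(socketserver.StreamRequestHandler):
    def handle(self) -> None:
        # The request is a single line; the connection closes after the reply.
        selector = self.rfile.readline().decode("utf-8", errors="replace").strip("\r\n")
        self.wfile.write(respond(selector).encode("utf-8"))
```

Running `socketserver.TCPServer((HOST, PORT), GopherHandler).serve_forever()` would start it listening. A real mirror would swap `POSTS` for database queries and probably needs more care with text encoding and long documents than this sketch takes.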

WWW is still fully transparent about what servers/domains you visit.

Sure, if you open up the dev tools and look at the network inspector. Though you might be surprised to find that a visit to hackaday.com takes you to supplyframe.com, parsely.com, and yt3.ggpht.com

Funny they show up in my router logs as possible DNS-rebind attacks.

You can get it by sniffing TLS traffic due to SNI, which sends the hostname in plaintext to the server during the initial TLS handshake. There is a TLS 1.3 extension that allows encrypted handshakes, but it requires the client to know the server’s cert already.

It’s not ideal, but it would otherwise be difficult to deploy TLS everywhere with IPv4 address exhaustion.

Some years ago, I wrote a Gopher server module for Apache2: https://metacpan.org/pod/Apache::GopherHandler

The night I met the woman who I eventually married, I mentioned this offhand, and she instantly knew what it was, since she grew up in Minnesota in the 90s. That’s right, I married the first woman I met who knew what Gopher was.

All that said, the protocol is terrible. Binary file transfers are finished by simply closing the connection, rather than having a length header. It constantly tears down and rebuilds TCP connections, which is inefficient. It’s protocol design amateur hour. To be clear, the oldest versions of HTTP had many of these same flaws, but it had the opportunity to fix most of them. The Gopher revival people could do the same, but tend to treat bringing up these issues as an attack, rather than as a chance to fix things. As a result, they’ve dithered around for about two decades now.

Since backwards compatibility isn’t that important for a protocol that’s mostly a novelty at this point, creating something new with the same philosophy, but fixing the flaws, would be feasible.

My dad was in the gopher team (the team that developed gopher, not one of the sports teams). I told him about either your module or a standalone server, can’t remember which, and he was pretty confused about why somebody would do that.

LOL, mostly because I could. It was an interesting exercise in Apache2’s server framework, which is flexible enough that you can implement non-HTTP servers in it.

Hah, say hi to him for me.

Oh the protocol is dreadful, but keep in mind at the time that those of us who coded Gopher had to first be introduced to the concept of “client-server communications” by Lindner and McCahill before we started writing: THAT’S how old gopher is! Client-server protocols were a complete paradigm shift after (for me) about 15 years of writing mainframe code. And using a blend of column-position demarkation (the first character indicates the item type) and character-delimited demarkation (using a whitespace ‘tab’ character no less!) is completely mental. But we were pioneers hacking our way through the brambly undergrowth, there were no conventions for such things yet.

Bob Alberti, Gopher co-author, RFC 1436
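The mixed format described above (a type character in the first column, then tab-delimited fields) is easy to see in code. This is a small sketch of a parser for a single menu line in the RFC 1436 layout of display string, selector, host and port. `MenuItem` and `parse_menu_line` are hypothetical names, and the sample line in the usage note is made up.

```python
from typing import NamedTuple


class MenuItem(NamedTuple):
    item_type: str  # e.g. '0' for a text file, '1' for a directory
    display: str
    selector: str
    host: str
    port: int


def parse_menu_line(line: str) -> MenuItem:
    """Split one Gopher menu line into its type character and four fields."""
    line = line.rstrip("\r\n")
    item_type, rest = line[0], line[1:]  # column-position part: first character
    display, selector, host, port = rest.split("\t")[:4]  # tab-delimited part
    return MenuItem(item_type, display, selector, host, int(port))
```

For example, `parse_menu_line("1Weather\t/weather\tgopher.example.org\t70\r\n")` yields a directory entry pointing at port 70 on `gopher.example.org`. Lines with fewer than four fields raise a `ValueError` in this sketch, so a real client would want to handle those more gracefully.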

Don’t forget the search engines, Archie, created here in Montreal at McGill, for FTP, and Veronica and Jughead, for Gopher.

McGill used a Gopher server into the 2000s. For information, but it included a classified ad section. I bought a used computer to run Linux from someone advertising there in June 2001.

I forget exactly when they turned off that server.

with the cost of VMs and cloud computing these days, it seems like something light-weight and low traffic like Gopher would cost a University less per year than staff coffee does for a day.

Archie, Gopher and FTP-Via-Email, there’s some memories from my wild and adventurous youth on the fancy new “internet connected computers” at high school.

Wow, I’d remembered Gopher, but until now I’d forgotten ftp by email. Back in the early 90s I wrote Usenet “server” that transferred articles via email from an internet connected site to a site that only had email connectivity, so that netnews could be used at sites that only had email as a connection to the outside world. More than one email admin cursed me for that when users got carried away with the number of newsgroups they tried to mirror. Obviously you only wanted to mirror a readable number of groups. This was a long time ago, of course, it spooled the articles into a news spool that was read directly from the Unix filesystem by a newsreader such as TIN. No nntp used at all on the email only end of the connection.

Years ago I contributed to the Gopher Manifesto and, when it appeared that was about to disappear, talked my brother into hosting it ad infinitum. You can read it, here: http://27.org/gopher-manifesto/

Come on… Gopher still is very hyped where I’m active in the nets…

And privacy? Just wrap it in stunnel.

Or…

We should de-bloat lots of stuff. Instead of messing around with crypting everything in 99.7% the same and a 0.3% incompatible way, we should start access by opening a VPN tunnel to the target location instead. Maybe add a kind of phone-book to TOR’s hidden services to get easier hostnames. Using TOR does not only fit the desire to hide from others, it is a world wide VPN. Use it!

There’s one gopher tie to U of Minnesota that no one’s pointed out yet. Their football team is called the Minnesota Golden Gophers.

From the article:

“The gopher also happens to be the University of Minnesota’s mascot.”

Um… It isn’t just the football team, it is the university’s mascot. In the article: “The gopher also happens to be the University of Minnesota’s mascot.”

I thought it was the fact that gophers live in tunnels and this is a tcp ip tunneling protocol. Just me?

> Gopher clients are still available for all major operating systems.

Yip:

https://www.youtube.com/watch?v=7VAoi0wFT-o

For those who fancy a taster, from a www browser,

https://gopher.floodgap.com/gopher/gw?gopher://gopher.floodgap.com:70/1/

I have a vague recollection of being able to search Amazon for book titles via gopher in the early 90’s at the university library

I remember trawling the internet back then. Wandering around military gopher servers trying to see what there was to see and I stumbled across a proposal from some General whose name I can’t remember warning that some country would eventually be the first one to send weapons into space in violation of the Space Weapon Ban Treaty, and since it was going to happen anyways that America should be the first one to do it to beat all the rest. Ah, those were the days when no one knew what the hell security was.

Pretty cool to know about the gopher protocol. I’ve heard about it a couple times, but didn’t actually try it because, at the same time, I found the gemini protocol which seemed more interesting to me.

Maybe Hackaday can take a look at gemini (https://gemini.circumlunar.space/ or https://fosdem.org/2021/schedule/event/retro_gemini/) and write an article about it.

Yeah, it’s like, how could an article be written about Gopher and fail to mention its modern successor, Gemini? Weird.

I’m a little surprised there’s no mention of Gemini so far, which is a new Gopher-like protocol supporting TLS developed by the same people that are into Gopher. The syntax for Gemini documents feels like stripped-down Markdown so it’s actually easier to make a Gemini page than 90s HTML. It’s a fun rabbit hole to go down (unlike, say the Fediverse where you may see things you don’t want to see). See the FAQ here: https://gemini.circumlunar.space/docs/faq.gmi

Yep, while reading this article, I’ve thought of Gemini. I like how simple it is while still mandating TLS for security.

“(although ‘I Veronicaed it’ isn’t as catchy)”

the obvious solution is to say “I Asked Veronica” instead, thereby avoiding the use of a proper name as a verb.

Gopher is nice; my server is a few lines of sh, because I’ve not had time to implement it in a decent language. It’s amusing to pretend the WWW and fake encryption such as TLS provides security and privacy. At least Gopher doesn’t lie. Here’s my Gopher Hole, which is a better version of my website:

gopher://verisimilitudes.net

https://gopher.floodgap.com/gopher/gw.lite?=verisimilitudes.net+70+31

It’s disgusting to see Gemini mentioned here. I’d told Solderpunk about its flaws, but he didn’t listen. Now all of the hipster idiots use it, because it’s different enough to be cool to them, of course not so different that they wouldn’t immediately understand it, but this also removes any reason it could have for existing.

I actually do like Gemini, but I can see where the haters are coming from. I came across a recent blog post that does a pretty good job of summarizing the issues with Gemtext: https://gerikson.com/blog/comm/Gemini-misaligned-incentives.html

And that’s only the issues with the markup language. There’s still the issue of a lack of length field in the header which forces one to link to an http server for large downloads.

I do wish Solderpunk et al. had been a bit less opinionated and gone with something like a flavor of Markdown over https (although I agree with the blog post about the omission of numbered links, they are one of the worst things about Markdown, and don’t make sense in a protocol that makes a point of leaving all formatting decisions to the client).

Fascinating read. And paradox, too. Here do I sit in the middle of the night and read about how Gopher was superseded by the www as a dominant medium, while simultaneously worrying that “good ol’ world wide web” as a medium might not survive the APP hype in the long run.

Nah. So many “apps” are nothing more than a custom browser to a website these days. Programmers are lazy. “App” programmers even more so.

Yes. My big tablet has a “google app”. I can’t figure out what it does that a browser doesn’t.

>I can’t figure out what it does that a browser doesn’t.

Leak more of your info?

Hi all, the screenshot of the Windows client is my Gopher Browser for Windows, a popular client for Windows users.

You can download it here: http://www.mattowen.co.uk/gopher/gopher-client-browser-for-windows

Thanks!

Thanks to my friend Ben Tomhave for letting me know about this very interesting post.

What Gopher remains good for to this day is when the last-mile communication is the bottleneck, when you’ve got very low bandwidth to the client. For example during the late 90s, early 2000s, you’d see flip-phone manufacturers trying to introduce web browsers with these horribly eviscerated little web browsers that couldn’t display images. Those were a mess, and if the phone manufacturers had done a little bit of thinking, putting gopher browsers on those phones would have made more sense.

Where gopher might make sense in the future would be interplanetary communications. If Mars is 15 light-seconds away, you’re going to want a lightweight transmission protocol and you’re only going to want to download big media files deliberately, not as part of someone else’s multimedia page. It would be efficient to use a gopher browser to select the Mars-based files you’d like to access from Earth.

Those interested in learning more about the history of Internet Gopher could read my document here https://www.scribd.com/document/211995069/Internet-Gopher-Bridge-to-the-Web-Alberti . There is a very interesting podcast at https://www.stayinaliveintech.com/podcast/2021/s4-e3/bob-alberti-you-can-go-your-own-way-part-1 , and Twin Cities Public Television (TPT) has also provided an excellent history of computers in Minnesota https://tpt.org/solid-state

Bob Alberti, Internet Gopher co-author, RFC 1436

As someone who was at university in the 90s and was working helpdesk when they turned off the Gopher server to run their first WWW site (they were running parallel for a bit) I wanted to say thank you for your work, even if it seems everyone here is ignoring your posts. I suddenly wonder what you guys thought of that upstart Andreessen and his fancy GUI browser. :) (Yeah I know there was Lynx/HTML/CERN etc…)

I can’t speak for other members of the team, but I didn’t hold any illusions about Gopher’s persistence over time. Text-only vs. integrated text and graphics seemed like a clear advancement, and we weren’t being funded by the University to grow Gopher at all. So the growth of the web etc. was not something that bothered me, and in fact I left the Gopher team to become the webmaster of network.com (CDC spinoff Network Systems, which was bought by StorageTek which was bought by Sun which was bought by Oracle, which is where the domain goes now).

No, the REAL stress was the shift from not-for-profit NSFnet culture to only-for-profit Internet culture that was emerging. Gopher received the brunt of that (to this day) as people involved with helping grow Gopher during the not-for-profit era objected to the idea of software licensing of Gopher by the University of Minnesota. Meanwhile of course nobody on the Gopher team was being paid anything to support it, and the burden of doing so increased daily. It was really stressful to be accused of avarice when we weren’t making any money but meanwhile early Silicon Valley adopters were positioning themselves to make fortunes.

Just try typical gopher media projects gopher://gopher.erb.pw/1/roman This is another side for you.

Years ago I too wrote a basic Gopher server implementation for Node. It was a surprisingly simple task, and required far fewer lines of code than I’d initially thought.

https://github.com/PastorGL/Gopher.js (not sure if it is still good for an actual Node version though)

People are building modern gopher holes now using Flask Gopher. Mozz.us

I use Gopher instead of the darkweb because nobody will look for me there. So what I write is a secret between me and the rest of the Gopher readers.