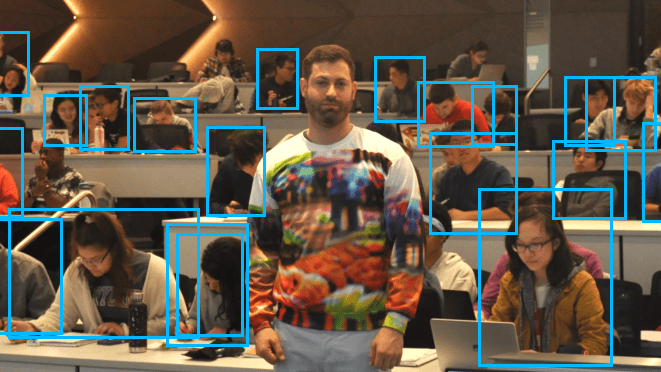

Ugly sweater season is rapidly approaching, at least here in the Northern Hemisphere. We’ve always been a bit baffled by the tradition of paying top dollar for a loud, obnoxious sweater that gets worn to exactly one social event a year. We don’t judge, of course, but that’s not to say we wouldn’t look a little more favorably on someone’s fashion choice if it were more like this AI-defeating adversarial ugly sweater.

The idea behind this research from the University of Maryland is not, of course, to inform fashion trends, nor is it to create a practical invisibility cloak. It’s really to probe machine learning systems for vulnerabilities by making small changes to the input while watching for changes in the output. In this case, the ML system was a YOLO-based vision system which has little trouble finding humans in an arbitrary image. The adversarial pattern was generated by using a large set of training images, some of which contain the objects of interest — in this case, humans. Each time a human is detected, a random pattern is rendered over the image, and the data is reassessed to see how much the pattern lowers the object’s score. The adversarial pattern eventually improves to the point where it mostly prevents humans from being recognized. Much more detail is available in the research paper (PDF) if you want to dig into the guts of this.
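The loop described above (render a candidate pattern, re-score, keep whatever lowers the score) can be sketched in a few lines. This is only a toy under loud assumptions: the "detector" is a made-up linear scorer rather than YOLO, the images are random noise, and every name here (`make_detector`, `apply_patch`, `optimize_patch`) is hypothetical. It uses plain random search to mirror the description; it is not the researchers' code, and real attacks on a neural detector would more likely follow gradients.

```python
import math
import random

SIZE = 8            # toy images are SIZE x SIZE grayscale, pixel values in [0, 1]
PATCH = 4           # the pattern is PATCH x PATCH, pasted at a fixed spot
TOP, LEFT = 2, 2    # where the pattern lands in every image


def make_detector(rng):
    """Stand-in 'person detector': a fixed random linear scorer with a sigmoid."""
    weights = [rng.uniform(-1.0, 1.0) for _ in range(SIZE * SIZE)]

    def score(image):
        z = sum(w * p for w, p in zip(weights, image))
        return 1.0 / (1.0 + math.exp(-z))   # pseudo "objectness" in (0, 1)

    return score


def apply_patch(image, patch):
    """Return a copy of the image with the pattern rendered over it."""
    out = list(image)
    for r in range(PATCH):
        for c in range(PATCH):
            out[(TOP + r) * SIZE + (LEFT + c)] = patch[r * PATCH + c]
    return out


def mean_score(score, images, patch):
    """Average detector score over all images once the pattern is applied."""
    return sum(score(apply_patch(img, patch)) for img in images) / len(images)


def optimize_patch(score, images, steps=2000, seed=0):
    """Random search: nudge one pattern pixel, keep the change if the score drops."""
    rng = random.Random(seed)
    patch = [rng.random() for _ in range(PATCH * PATCH)]
    best = mean_score(score, images, patch)
    for _ in range(steps):
        candidate = list(patch)
        i = rng.randrange(len(candidate))
        candidate[i] = min(1.0, max(0.0, candidate[i] + rng.gauss(0.0, 0.3)))
        s = mean_score(score, images, candidate)
        if s < best:                         # only accept improvements
            patch, best = candidate, s
    return patch, best


rng = random.Random(42)
detector = make_detector(rng)
images = [[rng.random() for _ in range(SIZE * SIZE)] for _ in range(20)]

gray_patch = [0.5] * (PATCH * PATCH)         # a neutral "plain sweater" baseline
baseline = mean_score(detector, images, gray_patch)
patch, final = optimize_patch(detector, images)

print(f"mean score with plain gray patch: {baseline:.3f}")
print(f"mean score with optimized patch:  {final:.3f}")
```

The pattern is scored against the whole image set rather than a single picture, which is what lets it generalize beyond one photo, at least for the one detector it was tuned against.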

The pattern, which looks a little like a bad impressionist painting of people buying pumpkins at a market and bears some resemblance to one we’ve seen before in similar work, is said to work better from different viewing angles. It also makes a spiffy pullover, especially if you’d rather blend in at that Christmas party.

“Vulnerabilities,” eh? And they are ultimately trying to find them so they can be “fixed,” no doubt.

I have my own views, both on the subject of using such technologies as well as on developing and improving them.

Some 600 USD safety goggles and a green high-powered DPSS laser to burn out those dystopian Orwellian AI tracking cameras are the only answer at this point.

The Slippery slope is not even a fallacy anymore: They are taking away our freedom step by step.

You forgot “with our taxpayer money” :)

By “they” you mean “my fellow humans.” Don’t pretend you are not a member of this club.

Actually no they are the 1% who are born psychopaths and if you are not 100% humane are you really 100% human?

For the party! After 54 hours and 38 minutes of excruciating torture I can say with certainty that I, of my own volition, love big brother with all my heart and soul, more than anything.

You really think the guy saying “You will own nothing and you will be happy” is in club humanity? I’m not so sure. There’s a good reason they don’t call it the “Earth Economic Forum”…this isn’t their first world.

Does that laser thing actually work? Is there evidence of that?

https://youtu.be/pPKapHixxew?t=490

I think it does degrade a CCD quite a bit!

I was at a fast food place and I saw my picture on a tv screen behind the counter and found the lipstick camera peeking at me overhead and I happened to have a high powered green laser pointer in my pocket, and the effect is not instant, or I should say the instant effect is the AGC, but after nailing it for a minute or two I was able to make a pretty good dent in it as far as taking pixels out. The sensors are more resilient than you might think, but look at the number of youtube videos where they record welding.

So you’re fine damaging someone else’s property, while inside their building?

I don’t like the loss of privacy that is becoming more prevalent in public spaces, but this is just vandalism.

As Morse would say: Instant is the coffee

Absolutely. Burn it completely out. Antifa and BLM didn’t mind burning our cities down… Anyone who would track me with facial recognition will happily get a green laser to the lens.

Freedom to do what? Pick one’s nose in public?

Exist without being watched all the time. We are evolved organisms. Part of our evolved nature is that believing we may be secretly watched stresses us. That’s a biological reality, and it’s dumb to ignore it.

… and it’s actually true that people who find you with face detection/recognition may make decisions to your detriment, including decisions that may be either correct or incorrect from their point of view. For example, you can easily end up as a false positive in somebody’s Evil Detection algorithm. A few false positives mean nothing to them, but being one can mean a lot to you, depending on what reactions are available to them.

There’s also the matter of inflexible enforcement of rules. The thing about being in charge of enforcing rules is that you end up being absolutist, because (1) any exceptions are chances to be unfair, and you don’t want to be seen as being unfair; and (2) you usually end up feeling a personal investment in the rules, and losing a lot of your ability to see when they’re not helping. At the same time, it’s almost impossible to make rules that *always* make sense, and if you did have such a set of rules it would probably be too complicated to remember or enforce reliably.

One historical check on the damage from inflexibility has been that enforcers didn’t tend to actually know about violations unless they were complained about… which tended to select for violations that were actually hurting somebody. Enforcing the same set of rules absolutely and infallibly can easily be intolerable.

I don’t think this is entirely correct. For the vast stretch of human history we lived in small groups where you knew everyone and what they were doing, essentially all the time. And they knew what you were doing.

Our notions of privacy really only date to relatively recent times – even the larger cities of early historical and medieval times had limited anonymity. So notions of privacy are an aberration in our history and our evolution, perhaps two to three hundred years out of our hundreds of thousands.

Don’t get me wrong, I like my privacy, and the difference now is that some toad in his mother’s basement in Tomsk can know as much about you as your neighbors do. But the notion that we had privacy until recently is incorrect.

@AI

We had total “global privacy” before, and actions weren’t recorded forever. So if you said something bad about someone, the record would be oral, and oral history is gossip. So if you and the other party recall differently how something happened, it’s not possible to say who is right. People forget things, and if you did something shameful, there was always the possibility of moving somewhere else with a clean slate.

We are moving to a world where everything you say or do can be recorded essentially forever. There is no moving anywhere because everything is global. And if AI says you are a terrorist because someone who looks like you did something, you are tagged essentially forever.

The inflexibility pointed out by Sok Puppette reinforces this: lawmakers will be less inclined to consider that the AI made a mistake, because that would let everyone tagged by the AI claim “AI mistake” when convicted (remember the case of the exploding Galaxy batteries: a dozen or so exploding phones killed an entire phone model), and the makers of the AI would try to secretly deal with the false positives. After a while, the general population believes the AI cannot fail, so nobody would believe you if the AI tagged you as a thief.

A quick Google search for people thrown in prison because of facial recognition mistakes is scary. Here in Brazil, a musician from an orchestra spent 363 days in prison because he had been “recognized” as part of the group that stole a car. Even with videos from his workplace showing him working at the time of the theft, he was convicted and spent almost a year in jail until his appeal was heard by a higher court and he was freed. And still it was a close vote: 4 to free him, 3 to keep him. Because the AI said it was him, and AI cannot be bribed, is impartial, and is not biased. Right?

>But the notion that we had privacy until recently is incorrect.

For the vast majority of human history, you could simply wander off alone into the bushes because there wouldn’t be anyone around for miles to watch you. Until a couple hundred years ago, there weren’t enough people for you to always run into someone. The population of the USA or the EU would be literally the entire world back in the 14th century, and back in hunter-gatherer times, if you saw a chip of wood floating down the river, your neighbor was too close and you’d go kill them with a hatchet for invading your tribe’s territory.

There were simply not enough people to police your behavior. That’s basically why we invented or evolved the notion of gods to keep people in line while nobody’s watching.

Freedom to not be watched while picking the nose.

It’s enough

Indeed they are. It is going on around you every day silently but relentlessly. Look at the camera systems in our cities, the overwatch of the AI in almost every building under the auspice of “loss prevention” or “better customer service”. Always watching, recording and dissecting our lives and us. Soon we will be no more than slaves of those who control the AI. And soon after we will all be slaves of it.

BTW, I hear the Apple augmented reality glasses are rumored to use an iris scan for identification.

Makes me fear they (the industry/researchers) are busy everywhere to develop a damn DNA scanner that can be mass produced and fitted into smartphones, as the ultimate closing of the trap.

Mass produced DNA scanner would enable a LOT of very useful things. I´m impatient !

” I´m impatient !”

You could end up being “in patient” at a government gulag as they continually improve citizen monitoring.

Government cameras aren’t the biggest concern. Since they’re so cheap and ubiquitous, one is more likely to be caught on a business’s or citizen’s camera.

I understand that humans use an extensive facial and clothing scan for identification. Humans print up identification cards to assist the recognition process and they even use verbal cues to assist in the recognition process. It’s all very shocking, the total lack of autonomy. It’s as if they depend on each other.

Bah! It’s the same for your aliens. Just look at yourselves for a minute. You’re not that different you know.

Meh, sure it would be harder to spoof than some other biometric scanners like fingerprint or iris scanners, but, the movie Gattaca showed that even a DNA sensor could theoretically be spoofed.

But, with the cheapness of fingerprint scanning tech and with face unlock tech in every phone, why would they need to go to the extremes of scanning DNA in real time?

Remember not so long ago we used to spit into a test tube to have it tested for COVID?

LMAO. You mean where we believed that a non diagnostic test was diagnosing us for a set of symptoms that became called covid? Yeah sure do.

I notice that it also missed three other unobscured people – the girl in the back right wearing the grey sweater is inexplicable. But the two guys on the left in the loud-patterned shirts – time for us all to start wearing camo and/or plaid shirts, I think. And the one in the hoodie with his face down – I almost missed him/her myself; old school tactic. Time for plaid hoodies?

Take this picture and a similar stock photo of a lecture hall, and squint your eyes.

It becomes immediately apparent why the computer is fooled – the shirt appears like there are people just adjacent but nobody exactly at that spot. It’s like there’s a hole through the guy’s abdomen that resembles the irrelevant background information. The computer is looking for the tell-tale shape of a person, but finds what appears to be like a gap between people.

1. Looked for link to buy. Isn’t for sale. :(

2. Project sponsored by Facebook AI.

3. Guess I’ll stick with my tinfoil hat thankyouverymuch

That tin foil hat would be spotted by AI a mile away…

Not if it is lining the inside of another cap.

Anti-AI stuff sponsored by Facebook-AI? Sounds like the purple anti-robot camouflage in Robert Asprin’s Phule’s Company series. IIRC the company that made the robots also made the purple camo and designed the robots to react as though anyone wearing the purple camo was invisible.

You see a pumpkin market, I see people milling about on the sort of hideous carpet they install in large hotel conference centers. With that in mind, the reason the AI vision system fails to identify (or make eye contact with, you might say) the wearer of this sweater becomes obvious: what happens in Vegas stays in Vegas.

*Invisible to one extremely specific AI

There’s probably no way to make this apply to image recognition universally. It’s mapping out one VERY specific accidental wrinkle in the neural net.

Yeah my thoughts too. Only, I don’t think I’d want to be the one to test it in front of a self-driving car lol

You could probably paint camo on your face, or get a weird looking tattoo, but ultimately, if a human can identify you as a person then, eventually the AI will be able to as well.

What sweater? What guy?

I don’t see it.

Are you self-aware?

Just out of curiosity I pointed my camera at the photo and, sure enough, a box appeared around the guy’s face. So not invisible to AI… Just unnoticed by the one flawed model they trained.

The camera is probably tuned to look only for faces, while the more general AI is trying to spot the overall shape of a person in case the face is occluded.

Your camera possibly doesn’t even use any AI model, just a simple Haar Cascade.

I can draw two circles and a line on paper and my camera will sometimes pick it up as a face.

Late Faking News December 4th 2022: Carnage at the stores, pedestrians wearing ugly Christmas sweaters targeted by Grinchy Autonomous vehicles…

IIRC this shirt was a plot point in William Gibson’s Zero History.

Kind of, wasn’t it implied though that it was a pattern that was put in deliberately as a back door?

O.B.I.T.

https://en.wikipedia.org/wiki/O.B.I.T.

This works the other way, btw, I put a jacket on a chair with a soccerball on top – boom, instant person.

Yes and no. I’ve personally seen the damage a high-power laser can do to an image sensor. It burnt a neat line in moments, but only a line. If you want to actually prevent the camera from seeing and the AI from being able to recognise anything, you’d need to take out a reasonable amount of the sensor. The nature of the lens and sensor makes this difficult: you need to move around the field of view, which is hard without getting up close and personal. Also, I don’t know what the lower limit for laser power would be to do this; the one I saw was a 5W show laser.

Dammit, that was supposed to be a reply to Sok Puppette

We never truly had privacy, just the presumption of privacy, and that others would respect it. Past few decades, there has been a lot of movement for disrespecting others, not just privacy.

I like cameras, and own a bunch of them. My security cameras are a great deterrent; people are on their better behavior. My dashcam is a great witness in court. Cameras can be useful tools. But any tool can also be misused. Out in public, there is no right to privacy; you can only hope that others respect your presumption of privacy. Likewise, you shouldn’t be doing things in public that are best done in private…

I agree, but the problem is that these “tools” are not in the hands of the people. The tools are wielded by the likes of Facebook and Google, who aggregate the output of millions of cameras/photos so they can gain massive benefit from the tools, to the detriment of the public at large.

TURN OFF THE ELECTRICITY AND BOOM ! AI GONE FOREVER : )

If AI can’t see you…they might have temperature monitors…which can still basically see you…if you’re warm-bodied.