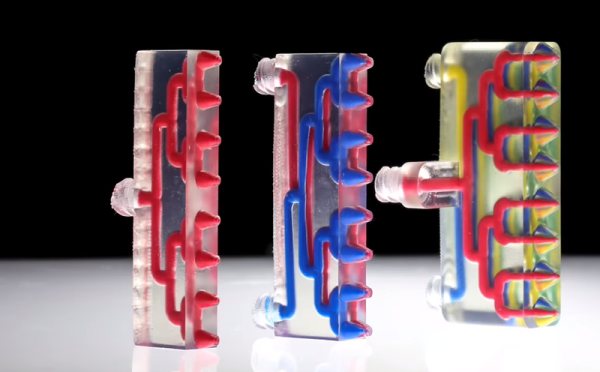

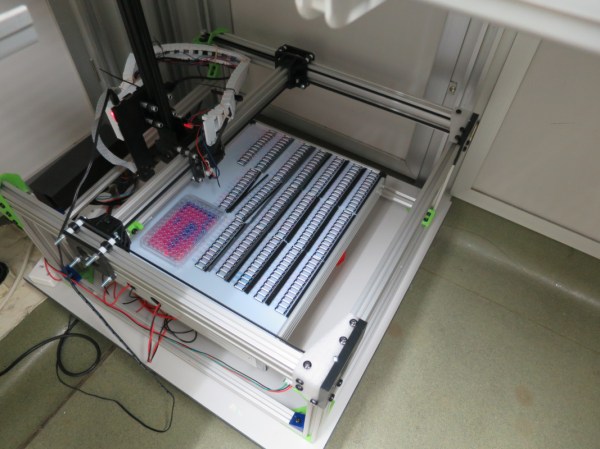

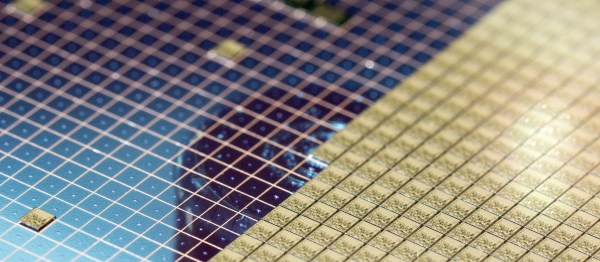

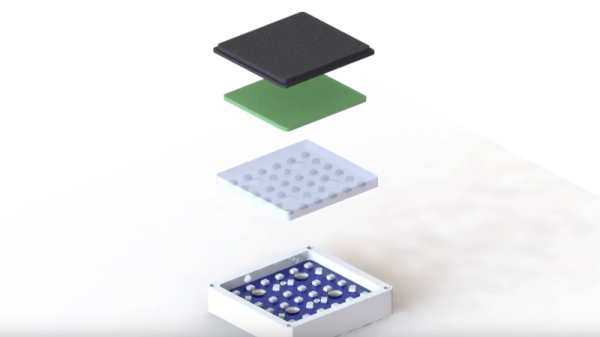

When you zoom in on a fractal, you find it is made of more fractals. Perhaps that idea helped inspire Harvard's 3D printers, which use arrays of mixing nozzles. In the video below you can see some of the interesting things you can do with an array of mixing nozzles. The coolest, we think, is a little multi-legged robot that uses vacuum to ambulate across the bench. The paper, however, is behind a paywall.

There are really two ideas here. Mixing nozzles are nothing new. Usually, you use them to mimic a printer with two hot ends. That is, you print one material at a time and purge the old filament out when switching to the new one. This is often simpler than using two heads. With a two-head arrangement, both heads must sit at the same height, you must know the precise offset between them, and you generally lose some print area because neither head can travel past the other. Add more heads, and you multiply those problems. We've also seen mixing nozzles used to blend filaments into different colors.
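To make the "purge the old filament" step concrete, here is a minimal sketch of what a material switch on a single mixing nozzle might look like. It assumes a hypothetical purge volume (the nozzle's internal volume plus some margin), 1.75 mm filament, and firmware that maps each filament input to a tool number; the helper names `purge_length_mm` and `switch_material_gcode` are illustrative, not from any particular slicer. The only real arithmetic is converting a volume to a filament length, L = V / (π (d/2)²).

```python
import math


def purge_length_mm(purge_volume_mm3: float, filament_diameter_mm: float = 1.75) -> float:
    """Length of filament whose volume equals the (assumed) purge volume."""
    radius = filament_diameter_mm / 2.0
    area = math.pi * radius ** 2  # filament cross-section in mm^2
    return purge_volume_mm3 / area


def switch_material_gcode(tool: int, purge_volume_mm3: float,
                          filament_diameter_mm: float = 1.75,
                          feed_mm_per_min: float = 300.0) -> list[str]:
    """Hypothetical G-code for switching inputs on one mixing nozzle."""
    length = purge_length_mm(purge_volume_mm3, filament_diameter_mm)
    return [
        f"T{tool}",  # select the new input (assumes firmware maps inputs to tools)
        "M83",       # relative extrusion, so E below is a length, not a position
        f"G1 E{length:.2f} F{feed_mm_per_min:.0f}",  # push the old material out
    ]


if __name__ == "__main__":
    for line in switch_material_gcode(1, purge_volume_mm3=40.0):
        print(line)
```

Note what is absent compared with a two-head setup: there is no X/Y tool offset to apply and no second nozzle height to match. The cost is the purged filament, which a real slicer would typically dump into a wipe tower or purge block.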

Continue reading “Multi Material 3D Printing Makes Soft Robot”