What it is:

Some would argue that replicating the human brain in silicon is impossible. However, the folks over at Brains in Silicon of Stanford University might disagree. They’ve created a circuit board capable of simulating one million neurons and up to 6 billion synapses in real-time. Yes, that’s billion with a “B”. They call their new type of computer The Neurogrid.

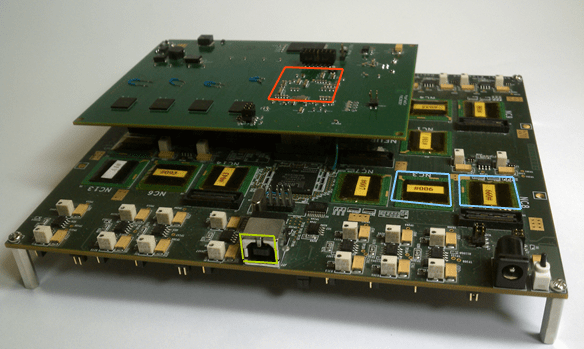

The Neurogrid board boasts 16 of their Neurocore chips, with each one holding 256 x 256 “neurons”. It attempts to function like a brain by using analog signals for computations and digital signals for communication. “Soft-wires” can run between the silicon neurons, mimicking the brain’s synapses.
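As a quick sanity check on those figures, the arithmetic can be sketched in a few lines of Python. The chip count, array size and synapse total are the ones quoted above; the variable names are only illustrative.

```python
# Figures quoted in the article; the names are illustrative only.
CHIPS = 16                   # Neurocore chips on one Neurogrid board
NEURONS_PER_CHIP = 256 * 256
SYNAPSES = 6_000_000_000     # "up to 6 billion synapses"

total_neurons = CHIPS * NEURONS_PER_CHIP
synapses_per_neuron = SYNAPSES / total_neurons

print(total_neurons)               # 1048576, i.e. roughly one million
print(round(synapses_per_neuron))  # 5722 synapses per neuron on average
```

So "one million neurons" is really 2^20 of them, and the synapse figure works out to a few thousand connections per silicon neuron.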

Be sure to stick around after the break, where we discuss the limitations of the Neurogrid, along with a video from its creators.

What it is not:

Though very neat and impressive, The Neurogrid fails at addressing a key component of Artificial Intelligence – the software architecture. Developing the hardware to replicate the vast interconnections of neurons is a great start, but we must also develop an understanding of how the brain is intelligent from a software side in order to use the hardware to its fullest potential.

Consider the problem with computers and pattern recognition. The human brain does this almost effortlessly. But this is a very difficult thing for a computer to do. Typically, we would start off by making some sort of template. Then we would compare the incoming data to the template, and make the appropriate decisions based on the comparison. What does it matter if you use an x86 machine, an FPGA or a Neurogrid board to carry out this task? The idea of comparing incoming data to a template is not how the brain recognizes patterns. There are no templates in your head.
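To make the template approach concrete, here is a minimal sketch of it, assuming tiny 3x3 bitmaps and a Hamming-distance comparison. The bitmaps, labels and function names are hypothetical and chosen only for illustration; real recognition systems are far more elaborate.

```python
def hamming(a, b):
    """Count the positions where two equal-length bit strings differ."""
    return sum(x != y for x, y in zip(a, b))

def classify(sample, templates):
    """Return the label of the stored template closest to the sample."""
    return min(templates, key=lambda label: hamming(sample, templates[label]))

# Hypothetical 3x3 bitmaps, flattened row by row.
TEMPLATES = {
    "vertical bar":   "010010010",
    "horizontal bar": "000111000",
}

noisy = "010010110"  # a vertical bar with one flipped pixel
print(classify(noisy, TEMPLATES))  # vertical bar
```

The same compare-and-decide loop would run on an x86 machine, an FPGA or anything else, which is the point being made: the hardware changes, the method does not.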

So you argue “Well, the brain is a massively parallel analog computer, so once we can replicate this parallel structure in hardware, our current software models will work much better.”

Maybe. But consider the following analogy. You have one hundred stones that you need to move to the other side of a desert. And it takes one million steps to cross the desert. So you hire one hundred people to carry the stones across the desert. Hiring more than 100 people will not get the stones across the desert any faster. You still have to walk one million steps. *

There comes a point when simply adding more parallel processes will no longer equate to increased efficiency. We should ask ourselves if there are other ways to get across the desert, rather than trying to make the old way (walking) more efficient.
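The analogy can be put into numbers with a small sketch. It assumes each carrier moves one stone per trip and ignores return trips, which is a simplification the analogy does not spell out; the function name is made up for this example.

```python
import math

def crossing_time(stones, carriers, steps_per_crossing):
    """Steps until the last stone arrives, one stone per carrier per trip.

    Simplifying assumption: return trips are ignored, so every extra
    round of carrying costs one more full crossing.
    """
    trips = math.ceil(stones / carriers)
    return trips * steps_per_crossing

for carriers in (1, 10, 100, 1000):
    print(carriers, crossing_time(100, carriers, 1_000_000))
```

Going from 1 to 100 carriers cuts the total from 100 million steps to 1 million, but 1000 carriers still need the same 1 million steps. The remaining cost is the length of a single crossing, which extra workers cannot shorten.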

*Parallel analogy borrowed from “On Intelligence” by Jeff Hawkins.

[youtube http://www.youtube.com/watch?v=D3T1tiVcRDs&w=560&h=315]

Mehh... We do now know the high- and medium-level architecture of the brain. The problem is not replicating it; the problem is growing it. We all started out as a simplified fetal nervous system, and with experience and genes we grew into our current selves.

Replicating that could be the answer to your architecture problem…

Maybe a higher-level system could clean, rewire and reprogram the current circuit in a cyclic activity… We could call that “sleep”…

I remain skeptical that we can simulate the brain in silico without invoking quantum mechanical interactions and far more precise atomic scale precision than we currently can wield. But nothing seems to rule it out as impossible either.

Check out Drexler, specifically Chapter 5 of his book, Engines of Creation: The Coming Era of Nanotechnology, which speaks about this in far more detail.

http://e-drexler.com/d/06/00/EOC/EOC_Chapter_5.html

The brain is not quantum!

[Citation Needed]

The brain is simply too hot for macroscopic quantum effects. Besides, the QM hypothesis smells like a case of incredulity. People can’t understand how the brain works without magic, so they add some QM. Of course, they still don’t understand how the brain works, but at least they can invoke the magic, and that makes it easier to handle.

As far as precision, the brain seems pretty resilient in the face of vibrations, electro-magnetic fields, G-forces, temperature fluctuations, different levels of chemicals (alcohol). Some of these things have an effect, of course, but moderate levels of noise and distortion can be handled just fine. That means that incredibly good precision isn’t needed.

To be honest, I think the brain is quantum. I draw this conclusion from the idea that many of the processes found in the biochemistry of photosynthesis (not the exact reactions per se, but the general reaction types: proton-coupled electron transport, proton translocation, electric field interactions, etc.) also take place in the brain.

There have been papers submitted that have concluded that the types of chemistry and conditions that exist within the human brain highly suggest that it is impossible for quantum mechanical effects to affect the brain (1). However, I think this is incorrect.

Quantum mechanical effects have been observed in a multitude of biological systems at ambient temperatures (1) and have been characterized in the electron excitation that occurs in Photosystems II and I at the P680 and P700 chlorophyll pairs (2).

I am currently finishing my Master’s Thesis in Plant Biology, though my work has been in the automated collection and analysis of photosynthetic parameters that describe the measured results. Part of this work has been modeling proton coupled electron transfer, electron electrostatic interference within proteins, and electric field interactions. I have had to account for several quantum effects in my calculations and models.

Citation 1: http://www.bss.phy.cam.ac.uk/~mjd1014/qtab.pdf

Citation 2: http://phys.org/news/2014-01-quantum-mechanics-efficiency-photosynthesis.html

Citation 3: http://scitation.aip.org/content/aip/journal/jcp/140/3/10.1063/1.4856795

It’s possible there are quantum effects at the scale of protons and electrons, that play a role in the brain, of course. Just not on a macroscopic scale needed to produce a noticeable “quantum” effect on a person’s behavior.

“People can’t understand how the brain works without magic, so they add some QM.”

I think it’s fair to say that people can’t understand how QM works without magic either :P

Maybe the brain isn’t “magic quantum” but controlled by quantum effects like electron tunneling, e.g. a single electron tunneling through a cell membrane decides whether a neuron sends a signal. Such things make the brain IMHO very different from a digital computer, in which signal propagation is deterministic and only disturbed by outside factors, like an alpha particle erasing a memory cell.

+1 MadTux

Signals don’t spread through the brain based on some distinct clock. There must be at least some consideration of the non-deterministic nature of signal propagation (“quantum timing” of reaction/ionization/electrical events).

This most likely is a bad appeal to intuition… but I have seen so many observed phenomena in nature later explained with some quantum effect that it seems unlikely to me that there aren’t many things happening in the brain that involve quantum effects.

Having said that: In the end it could simply be noise that is rejected in most cases–having written some synthetic neural networks myself, what they seem to be good at is a general way to match patterns that aren’t as precisely defined as a simple program would be able to grok. Think of random _quantum_ noise in an imaging device and then the subsequent OCR of text from it.

I’ll just leave this here:

http://www.physicscentral.com/explore/action/pia-entanglement.cfm

@Trui

You realize you just moved the goalpost by adding an assertion, right? Specifically, you’ve asserted that despite being both present and relevant for modeling the effects of QM on actions of individual connections they don’t have an effect on the behavior of a person. I don’t think this is something you can take for granted on a system where *the whole point* is determining how complex behavior emerges from the combined effects of a bunch of tiny cells doing their thing.

I mean that if QM mechanisms are present, it’s only on a molecular scale, perhaps to optimize certain reactions to do the same thing with less energy, for example. Of course, the brain/individual benefits from those optimizations, but that’s not the same as using, for instance, quantum superposition as a high-level device for decision making or consciousness. Compare to a regular computer with a flash memory. The flash memory uses quantum tunnelling to write data to the memory, but these quantum effects are localized, and do not turn your PC into a “quantum computer”. They do, however, make your PC run faster overall. If the brain uses QM effects somewhere, it will be similar.

You might read some of the non-scientific literature floating around about “the quantum brain”. It ranges from “the soul is a quantum entity” to “QM is free will, because observation changes QM so QM affects observation!”. Little of it is about understanding chemical QM action in the brain, but is a way to keep old superstitions and avoid seeing the possible deterministic parts of neurons; because “a deterministic neuron would make the brain deterministic and people would have no free-will/soul.” (their assertions, not mine)

So even the rational discussions of QM effects in the brain provoke a defensive attitude from people who do study the brain. Something about dealing with loonies too often.

@Quin

Not siding either way here, but the notion (predetermined or not) of a purely deterministic universe always makes me feel like we’re all doing a very complicated and very intricate dance.

Taking a reductionist approach, would you concede that it should be possible to understand a single neuron well enough that we can predict what it will do? Is there a certain point on the sliding scale between a single human neuron and an entire human brain where it becomes impossible (rather than just slower) to model? If so, why does this point occur?

Also, how precisely do we need to replicate a neuron to get useful brain-like behaviour? It’s entirely possible that neurons are so complicated only because they’re built out of squishy stuff, and that an equally capable brain could be built out of digital neurons even if they don’t precisely replicate in-vivo human neurons?

http://phenomena.nationalgeographic.com/2014/05/29/now-this-is-a-synapse/

Having the hardware makes it *possible* to write the software. I mean, sure, you could possibly emulate this extremely slowly… given enough resources. And that’s not very effective.

Also, terrible, terrible analogy. I mean, really? Simplifying “the brain” to parallel computation and then using what is basically a *latency* problem to handwave the Neurogrid as being ineffective? Even if this is merely poorly worded and simply addressing software… obviously, software needs written! I think this is quite possibly the worst article I’ve read on this site by a wide margin (most are quite good).

Additionally, you make the claim that “[t]here are no templates in your head.” Do you have the evidence to back up this claim? Simply looking at the fact EEGs show activity in common brain regions for various tasks makes this a faulty claim.

Speechless.

I mean, sure, you could possibly emulate this extremely slowly… given enough resources.

Like emulating the MOS 6502 in Java?

http://www.visual6502.org/JSSim/index.html

Although that site is awesome…

Javascript != Java.

Heh.. this is Hack-A-Day. No substantiated claims or cited resources. Hardly ever.

Quite possibly one of my biggest complaints..

Fair point. My information comes from “On Intelligence” by Jeff Hawkins.

More and varied sources that show the depth and breadth of your research would be much appreciated. I think it helps the readers (like myself) get a better idea of your logic behind the explanation (obviously) and perhaps leads us away from the conclusion that this wasn’t well thought out merely to get another post up.

;)

I’d like to add also that your sources should be more science and research based rather than just some guy’s website or a link to gizmodo or livescience (in this case anyway). I usually try to find the original article (if possible) to get the original story, then work my way backwards. Sometimes, the reposts are terrible and get it completely wrong.

I’m not entirely sure I understand the reason we’re looking at the brain as hardware and software. The way I see it, certain areas of the brain have affinity for certain processes because their very structure dictates that, function follows form so to speak. I’m picturing, as a poor analogy, magnetic core memory.

Kinda like cardiac cells – they don’t have to be programmed to beat, they just do it because that’s what they do.

I’m not sure I’m being clear, but I can’t think of a better way to explain it. Stupid brain.

As far as I understand it, this Neurogrid doesn’t have software either. It’s programmable in a sense, but only by modifying parameters and connections, not by writing software as you’d do for a CPU.

Well… there you have the software ;)

The distinction between “Software” and “Hardware” is merely a helpful concept which is probably very difficult to apply to the brain.

I don’t know that I’d describe the configuration data for an FPGA or CPLD as software. Firmware would be a better description; and as an abstraction for a ‘general neuron simulator’ to make it behave as other neuron types, it might work. Just as an abstraction; I don’t think it would, alone, lead to better understanding of the brain.

Then again, lots of abstractions in chemistry have led to a new hypothesis, which led to new theories and later to new abstractions. The atom models, for instance; acid/base reactions too. Who knows, maybe this will do the same eventually.

“I think this is quite possibly the worst article I’ve read on this site by a wide margin (most are quite good).”

(I don’t use inappropriate language on this site, and I believe that the following comment is entirely warranted.)

ARE YOU FUCKING KIDDING ME? Have you been on this site for the last year?

This, THIS is the worst article you’ve read? Did you find this site yesterday?

You’ve been commenting for 5 years so I doubt it.

Of all the articles you’ve read in those 5+ years, THIS is the worst because they used an analogy which eventually completes a goal to describe a problem of people working in a way that will not actually resolve a situation?

Regarding the desert, you would have to consider it as a napkin, which you would pinch and wave to project the stones to the other side… There must be such a mechanism inside the brain’s neural processes which flips patterns to their corresponding zones by some still unknown means, be it quantum or anything…

I hate to be a pedant, but 100 people carrying stones half the distance and then handing them to a different 100 people who have been walking with no load will probably get them there quicker. But that’s just me picking holes in the analogy rather than disagreeing with the conclusion.

Concur… With a lower duty cycle, the heat will stay less. But also, I’m thinking of the construction industry now, so what if you can get 100 guys, each throwing the stone say 10 steps to the next guy (depending on stone size) and so forth. Millions of guys, job done quicker than walking. Or, just get one big guy (say a truck) and move all at once. Sometimes you just need to change your mode of transport.

Meh, I figure there’ll be stones on the other side already. I mean, it’s a desert. Y’all have fun with your rocks, I’m headed to the beach…

But that’s the point entirely. A different transportation method in the analogy is a different computational method than we are currently using.

You just described pipelining.

Lal.

I really do not understand the point of things like this. We already have CPLDs/FPGAs/ASICs for complicated and high speed digital design. We have ever increasing processing power with CPUs and GPUs, parallel computing etc.

We already have what we need now for “AI”.

This design uses a lot less power, just like our own brain.

The point of this is to study the brain, not make another computing system for AI. Nowhere in the original article (from Live Science) does it mention anything about artificial intelligence.

Normal hardware is not efficient for simulating highly interconnected spiking neural networks with Hebbian learning.

FPGAs can’t handle dynamic reconfiguration. It is usually not necessary performance-wise, but obviously crucial in simulation of a brain.

We don’t have the software now, but tools like this are going to help us develop it.

Clearly the person who wrote the summary does not have much knowledge of neural networks, and the linked article does not help much either because it’s full of marketing propaganda. You do not need any sort of template for the neural chip; you simply start by mapping one set of those neurons to inputs and another set to outputs. Then, using a learning algorithm, you feed data into the inputs and update the neurons (in this case the synapses) according to the expected output. The easiest way to train a neural network is probably the backpropagation algorithm (http://en.wikipedia.org/wiki/Backpropagation), but this assumes we are using the weighted model (where you have weights at each synapse to mimic the strong and weak connections between neurons). However, it is not really clear what kind of model the neural network in the chip uses.
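To illustrate the "feed inputs, compare with the expected output, update the weights" loop, here is a minimal sketch in plain Python. It trains a single sigmoid neuron with an error-driven weight update, which is the one-layer case that backpropagation generalizes to deeper networks. It is not a model of how the Neurogrid chip learns (as noted, that is unclear); the AND-gate data, learning rate and function names are assumptions made for this example.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def predict(weights, bias, inputs):
    """Weighted sum of the inputs pushed through a sigmoid."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)

def train(samples, epochs=2000, rate=0.5):
    """Nudge each weight in proportion to the output error and its input."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for inputs, expected in samples:
            error = expected - predict(weights, bias, inputs)
            weights = [w + rate * error * x for w, x in zip(weights, inputs)]
            bias += rate * error
    return weights, bias

# Hypothetical training set: a two-input AND gate.
AND_GATE = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train(AND_GATE)
for inputs, expected in AND_GATE:
    print(inputs, expected, round(predict(weights, bias, inputs), 2))
```

No template is stored anywhere; the "knowledge" ends up in two weights and a bias. A multi-layer network needs the full backpropagation step to pass the error back through hidden layers, which this sketch leaves out.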

MLP and backpropagation are interesting from a historical perspective, but I question their practical relevance … MLPs are very fragile and require a secondary optimization algorithm just to determine the correct topology. Even then I have never been truly impressed about what has been done with them the way I was with Berger-Liaw’s work on spiking neural networks and Hebbian learning.

Practically, they are interesting because they are very simple to understand and with a bit of tuning (the non-training part, like the number of neurons, layers ..) they can be trained to accomplish “simple tasks”. For example, I saw some efficient applications of MLPs for machine vision (which is a hard task for conventional decision algorithms).

Regarding the philosophical aspect of neural networks. If, one day, we actually manage to model a “baby” human brain into silicon, will that clone count as a human being?

What if you train that model with non-real-world (virtual) stimuli: does it count as a human being, or as being self-aware? What if you feed the model real-world stimuli?

These are just some questions which I’m really curious to see answered in the future…

So right now we have the hardware to emulate a honey bee in real time.

https://en.wikipedia.org/wiki/List_of_animals_by_number_of_neurons

That is only a factor of 85,000 away from a human brain in neurons and 10,000 in number of synapses. We will be there soon, for large values of “soon”. Still, that does not mean we can replicate the human brain, only something that is about as complex, even though that probably wouldn’t make much sense. Simulated evolution should give interesting results, though.

Before getting carried away with the idea of building a ‘brain’, it is always good to remember that the mind-body problem remains intractable.

Why is that good to remember ? It’s not even a real problem. When people have solved the body-body problem, philosophers can argue pointlessly about the mind.

The nature of consciousness is not a ‘real problem’ ? Your confidence impresses me.

I’ve made up my mind about dualism and now mostly reject it.

Let’s build an a.i. and ask what it thinks about the nature of consciousness. If it feels as confused as we are, we can call it a day.

Memory research shows we do indeed use templates. There’s evidence to suggest that your memory works very much like JPEG compression. You store faces, for example, as the closest match you have in memory. Or an amalgam thereof, along with the new pieces. That’s why eyewitnesses remember the thief looking like their Uncle Joey or President Reagan. It’s also why the unique bits work so much better, like identifying tattoos and scars.

The brain does at least 2 things that hardware cannot do: it programs itself (I suspect that this can be overcome eventually, but you still need an initial program to get it started) and it rewires itself (I suspect that this is impossible in silicon). It is a hugely complex device that no one has more than even a simplistic understanding of. I highly doubt mankind will ever truly duplicate it in silicon.

@DainBramage1991 You don’t need to replicate the way the brain works, just the result. Like comparing an analog computer to a digital one. They work differently inside, but can achieve the same results.

I doubt we would ever replicate a brain; it has way too many mechanisms that we don’t know about yet, and of the mechanisms we do know, a lot of them we don’t understand.

There’s a huge difference between making a machine that works like a human brain and one that imitates some of the functions of a human brain. As for FPGAs, I thought about those before writing my comment. They don’t rewire themselves. In fact, they don’t do anything themselves. They rely on an external program to tell them which internal connections to use, much like any other piece of silicon.

We’re a huge distance from even understanding the human brain, much less creating a silicon based version of one.

A friend of mine finished his CompEng degree by working with self-reprogrammable FPGAs. A small micro and software on the FPGA looked at incoming data, at instructions for the main CPU in the FPGA, and added or removed other bits (FPU, graphics, I2C, etc.) as needed. It was mostly a way to keep everything on a device with a low gate count, but the technique could be used other ways.

What about two (or more) FPGAs reprogramming each other and acting as a single unit? The brain does have different parts.

You don’t need to modify the hardware to get a self modifiable system. And if you’re not concerned with speed that much, you can just simulate a piece of self modifiable hardware inside non modifiable hardware.

Actually, rewiring itself is possible to a degree even in fixed structure silicon – look at an FPGA – all the wiring is fixed, but the actual connections are set by software. In analog, imagine it as setting different gain coefficients for each input. You can easily route 1 signal through the whole “brain” and rewire it elsewhere.
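The gain-coefficient idea can be sketched in a few lines. This is only an illustration, assuming every output is physically connected to every input and that "rewiring" means changing the numbers in a gain table; the `route` function and the values are made up for the example.

```python
def route(gains, inputs):
    """Each output is a gain-weighted sum of every input (a fixed crossbar)."""
    return [sum(g * x for g, x in zip(row, inputs)) for row in gains]

signals = [1.0, 2.0, 3.0]

# Output 0 listens to input 0, output 1 listens to input 1.
gains = [[1.0, 0.0, 0.0],
         [0.0, 1.0, 0.0]]
before = route(gains, signals)
print(before)  # [1.0, 2.0]

# "Rewire" by changing coefficients only: output 0 now listens to input 2.
gains[0] = [0.0, 0.0, 1.0]
after = route(gains, signals)
print(after)   # [3.0, 2.0]
```

The wiring itself never changes between the two calls. Only the stored coefficients do, which is why fixed silicon can still behave as if it were rewired.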

Have any AI studies done the swarm analysis? Wherein there are small AI components, each with identical capability, that then specialize for a function? Be that function standard recall or pattern recognition, object manipulation or calculation…

Do our silicon creations need to think like us to be successful? In “The Fly” Seth Brundle says that computers only know what you tell them. So perhaps the failure isn’t the hardware, but how we envision it needs to interact to make a stab at conscious thought.

Reblogged this on Carpet Bomberz Inc. and commented:

More info on the Neurogrid massively parallel computer. Comparing it to other AI experiments in modeling individual neurons is apt. I compare it to Danny Hillis’s The Connection Machine (TMC) which used ~65k individual 1 bit processors to model neurons. It was a great idea, and experiment, but it never quite got very far into the commercial market.

While Hillis’ Connection Machine was used in such a regard, it was closer to a general purpose massively parallel processing (MPP) computer, rather than one optimized for artificial neural network (ANN) simulation.

A more apt comparison might be with Hugo De Garis’ CAM-Brain Machine (CBM) – which used a special FPGA architecture (a particular Xilinx device that is no longer manufactured) and a genetic algorithm system for evolving the ANN. Ultimately, various issues (political, economic, and other) stalled that effort (De Garis’ eccentricity didn’t help). The system also seemed destined to not reach its main goal – that of building an artificial “brain” that could control a robot cat (Robonoko, which was like a feline shaped Aibo).

The CAM-Brain Machine (CBM) sounds like an interesting project: http://en.wikipedia.org/wiki/Hugo_de_Garis. I’m always interested in anyone who has attempted to get a large-scale neural network up and running and then tried to program it to do things.

“Neurogrid”, which can simulate the activity of one million neurons in real time.

That’s Million with an “M”, not Billion with a “B” as stated above in the article.

From the article (emphasis mine):

“They’ve created a circuit board capable of simulating one million *neurons* and up to 6 billion *synapses* in real-time.”

Quotes from the Neurogrid website ( http://www.stanford.edu/group/brainsinsilicon/neurogrid.html ):

“Neurogrid simulates a million neurons…” & ” Neurogrid simulates six billion synapses…”

Maybe try re-reading the sentence next time before accusing somebody else of incompetence.

21/2/18 for the Singularity just got a whole lot more likely.

That’s been in application for years. I recently heard a talk from my professor about Cellular Nonlinear Networks (CNN). They are used in cameras for industrial applications to evaluate a product in real time.

They were also used to give a robot something like rudimentary observation abilities.

The project was called SPARK and afaik the robot finally showed rudimentary attack and defense behaviour.

I can’t wait for a small scale version (and a nice API), would like to try my hand at creating an insect like behavior system to get a small hexapod scurrying about.

I actually just finished writing a paper on this very same technology. The primary advantage this type of architecture has is not simple parallel processing, but hardware-based signal recognition. So, to borrow the rocks in the desert analogy, if you have groups of people whose only job is to identify what is a rock and–say–the keys to that dump truck over there, you do actually have the ability to speed up the process because you DON’T have to walk a million steps. The trick is that the entire system is built and designed to do exactly that, so the desert has motorized walkways and air conditioning, because the desert was specifically built to perform these functions quickly.

The drawback is that it’s not flexible. You couldn’t run a PC off this.

The mind is a terrible thing. It must be stopped in our lifetime!

A few comments were made on programming or software. A real brain does have something comparable to both software and an operating system. A new born knows to cry when it’s hungry or something is wrong. Fight or flight response, breathing, and many other things are built in.

It’s not as simple as writing a line of code for a human, but it is very similar. We also have something of a software framework for learning, memory, and movement. Somewhere in there is also software or firmware to handle the sensors. You could consider our brain to contain drivers for movement, sound production, and input devices. Once we learn to use these things we can shut them off to some extent or use them at will.

I am obviously not a neuroscientist or anything, but on an overview I think many of the functions of the brain align quite well with software systems. The big difference is that we do have to learn to use them. We write the drivers for the most part from when we are born.

The problem is that the brain is very much not like software or computers we use.

It’s a huge parallel bunch of self modifiable simple threads that are only active a little of the time but always on the verge of running. Memory and programs are not separate. The hardware and software are mixed. It’s more like a river and a landscape, both influencing each other on different time scales. There’s a lot of mixing between input and output too, as what your sensors perceive is largely affected by what you think you should perceive. There are no drivers or firmware. Everything can change just by being active.

But, although the brain and our pc’s are very different, it doesn’t mean we can’t simulate one on the other.

“Hiring more than 100 people will not get the stones across the desert any faster.” – Not quite true: https://www.youtube.com/watch?v=kbFMkXTMucA

Though I don’t think that particular example can be directly applied to parallel computing, it probably doesn’t work well in the face of dependencies.

Only one realistic question. Do we now look for Charles Forbin or Sarah Connor?

Why not Zoidberg?

History is full of supposedly impossible boundaries being crossed. The bow and arrow was welcome while the bomb was most disconcerting. This one… many authors have written about… and here, it seems, is the first step, just waiting for someone like Alan Turing to master.

My response was flippant… and for that I apologize. It was at best a stumbling attempt to provoke thought.

Who will be the first to run 6502 or Windows 3.11 on a neural soft core?

The best way to program this thing would be to stick it into a robot, sending sensor input to the board and the board’s output to servos and motor controllers. For it to learn anything, it needs to be able to sense its environment and move about in it. You can simulate feeding behavior by sticking inductive charging stations in the floor and signalling the robot with an LED while one is on. You would also need to simulate pain to give it an incentive to ‘eat’.

We do indeed have template matching in our brains, templates we make ourselves based on past memories created by our sensory systems.

All that needs to be said about this concept: Skynet. I can’t believe it didn’t come up already.

Even if the brain hardware is achieved, people won’t have enough resources to develop that brain to complete human behaviour. Think about humans: it takes a lot of resources until one becomes reliable in terms of being useful to society. It would have to be much, much better than a human brain to develop skills fast enough to meet this demand, which is really dangerous but pretty far off.

Hire more people. Make them stand in several lines in the desert. Make them pass over the stones. Not a single step taken. Now all we have to do is to cover the whole desert with people… or go to Egypt.