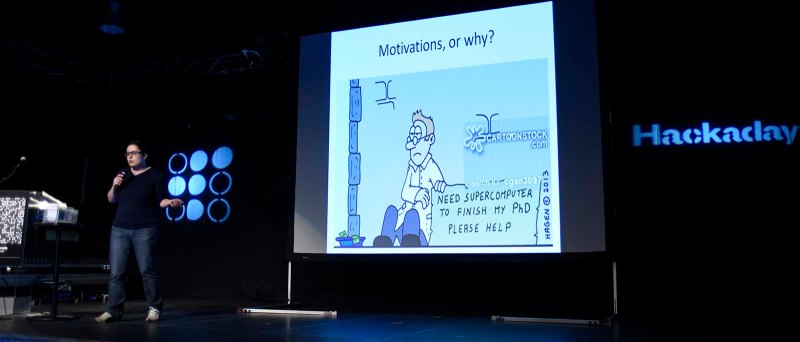

Kristina Kapanova is a PhD student at the Bulgarian Academy of Sciences. Her research is taking her to simulations of quantum effects in semiconductor devices, but this field of study requires a supercomputer for billions of calculations. The college had a proper supercomputer, and was getting a new one, but for a while, Kristina and her fellow ramen-eating colleagues were without a big box of computing. To solve this problem, Kristina built her own supercomputer from off-the-shelf ARM boards.

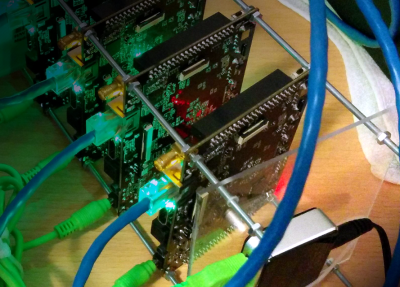

Because of the demands of a supercomputer – namely the amount of RAM and pure processing speed – Raspberry Pis and BeagleBones were out of the question. This supercomputer just needed more speed. This left Kristina to choose between the Odroid X2 and the Radxa Rock. Both of these single board computers have a quad-core ARM Cortex A9, 2GB of RAM, and can be easily powered from a 5V power supply. Only the Radxa Rock was available in Bulgaria at the time, making it the only choice.

Personal clusters have been around since before the Beowulf joke on Slashdot was new, but in recent years cheap ARM boards like the Raspberry Pi have given cluster computing a resurgence. There are 64-node clusters, 120-node clusters with displays on each machine, and a few built out of Lego. Kristina’s cluster isn’t terribly different from any of these Pi Clusters – all she needed was eight boards, an Ethernet switch, a big USB hub, a few cables, and an enclosure. With a few scripts to detect hot-plugged boards, everything just works and only cost about €500.

Building a cluster isn’t about making a nice enclosure and wiring up all the boards. The biggest problem is getting the software running. For this, Kristina removed the Android installation found on the Radxa boards and replaced it with Linaro, with a few additional packages including MPI to pass messages around the cluster, OpenMP and Fortran. Yes, Fortran. This is scientific computing, after all.

With the cluster completed, it was time to actually use it. While this cluster isn’t a supercomputer, it is significantly faster at computationally demanding problems than a single, fast desktop processor. Kristina is using this cluster for natural language processing, deep neural networks, and simulations of quantum physics. Using this cluster, Kristina was able to run a simulation of the Pauli Exclusion Principle, resulting in a publication. That’s not bad for about €500 in off-the-shelf electronics and a few weekends of tinkering with a few boards.

That line, “While this cluster isn’t a supercomputer, it is significantly faster at computationally demanding problems than a single, fast desktop processor”, is simply not true! At least not the “faster than a desktop CPU” part. The Rockchip RK3188 (that’s the CPU of the Radxa Rock) is so slow. A 300 USD Core i7 is at least 10 times faster than this. And if you get the code right (use the new SIMD instructions, or just a small portion of the Intel GPU for computing), you are at least 100 times faster than a single RK3188. Also, desktop CPUs nowadays are 64-bit; they are not in the same league as a low-budget 32-bit ARM.

If you need single-thread or few-thread processing, yes. If you need massively threaded processing, a bucket of ARM boards, 64-bit or no, is likely a much better way to do that.

Sorry, but that’s also not true. These ARM boards are so underpowered in computing performance. Just one more factor: the RK3188 has 512K of L2 cache, while a Core i7 has 6M or 8M of L3, 4x256K of L2, and 8x32K of L1 I and D cache. That’s a huge difference in computing. Also, if you want to do parallel computing, use a GPU.

Is your “sorry, untrue, a desktop PC is better” statement based on any hard performance tests? Because this student clearly has done the tests, and came to the opposite conclusion.

EE is right. Even nicer are the ex-datacenter Xeon E5-2670 you can pick up for $100. 32 cores, and many many more iops and flops than these ARMs. Unless the goal is learning networking technology, a single motherboard is going to leave 1Gbe in the dust for bandwidth and latency.

First the article stated that this build “is significantly faster at computationally demanding problems” without any data. But to be honest this is a ridiculous statement. Even you, if not working with computers (not writing computing software), can easily verify, with some google benchmark search, that this is simply not true.

We work with ARM, Intel and PowerPC CPU boards (some of the boards are our own design). I really like and prefer ARM CPUs in some applications, but performance computing is not one of them. We have never used the RK3188, but we use stronger ARM CPUs. There are several factors to weigh when choosing a platform for computing: is the problem compute-bound or memory-bound, do you need fast response times, and so on. And by “several factors” I mean more than 100, and even a small one, like whether the CPU has a VIPT cache or not, will hit performance really hard. (A task switch can take 10us vs 100us, just to name one thing. You’ll have to flush the TLB or the cache or both.)

So yes, maybe I did not make my comment clear or give any data, or yes, maybe a 3-year-old (older in design), half-word-size, 1/16th-cache, less-than-half-clock-rate, less-than-1/10th-network-speed computer (even 8 in parallel) etc. etc. outperforms a 300 USD Core i7 desktop computer. Muhahahaha. NO!

You simply cannot even move the data in or out of the computing node over the 10/100 Mb network for processing, and a single node has 2 GByte. And for parallel computing there is one thing: the GPU. You cannot beat the GPU in parallel computing (or you can, with a custom chip made for parallel computing). A single GTX 970 (300 USD) video card will eat this “cluster” for breakfast and will stay hungry.

Not to mention Xeon dual processor Mobos are available on used servers and 4 lane RAM access at much higher bandwidth than the 800MHz of the ARM. Ridiculous. Then again, with a little more money they could have bought an Intel Phi card. Hmmm. They might be export restricted. I have done the ARM cluster performance calculations (I have hundreds of them available at any given time so it has sounded like a cool idea) and it doesn’t pencil out against the heavy iron. If the GPUs in ARMs were open and supported, that helps. A little. But they are not.

Think Horsepower vs RPMs in vehicles. Xeon will smoke it without producing smoke ;)

@Required, where do you find these mysterious $100 32 core servers? I’m sure we would all love a stack of them.

*nods understandingly* [EE] You make some interesting points. However, come back when you build out a cluster of Intel Edison boards. Might make a good Hackaday.io project. And you would be more valid in what you are attempting to say. ~fin

People have been making these clusters for decades, and things sure have got cheaper since the year 2000! http://www.clustercompute.com/

And I almost forgot, http://lcamtuf.coredump.cx/edison_fuzz/ :-)

[Dan] Missing the point… Not only that. I don’t see a mineral oil bath in that link you provided. Can you provide us with any optimizations, enhancements or alternatives or perhaps a website where you built something similar to Kristina? Without ebay or amazon or customs restrictions? Bonus points if you have a PS3 Cluster in a forgotten underground tram substation. Otherwise -10.

I use every system on my LAN as a rendering cluster, is that what you mean? What has it got to do with me or anyone else in particular when everyone who has ever needed to in the last 20 years has had no trouble making a Beowulf cluster? It is a very old running joke on /. whenever some new computing device is announced. If you don’t get why so many people are so “meh” about it that would just be your sub-cultural ignorance and it doesn’t reflect badly on anyone else.

Jim P, drop it. You couldn’t get those sparc stations running a parallel cluster, let alone pull it off with a few ARM chips. The comment factor you emanate smells something like… like you work shilling Tivoli or CA Unicenter, after selling out the user group to take a job at the factory.

Now that is a bit harsh, given even a 10th grader could do it, https://mimibeowolfcluster.com/about/

“That was harsh.” Perhaps in a PC/SJW world that is what truth is.

A 10th grade girl in Eastern Europe (or Poland) wouldn’t have access to things we take for granted.

Hilarious.

You use PC/SJW as a slur, and then speak out for eastern european School kids?

In the US, it’s the PC/SJW-types who might actually care. The people who rant and rage and despise PC/SJW’s don’t care about Polish or eastern european teenagers, at least not until they get ripped off by one that was smuggled into the US as a sex slave.

Eight rk3188s are not faster at MP workloads than a single i7-6700k. Any benchmark you can get to run on both systems is going to show the i7 achieving an order of magnitude greater performance.

I built a simple i5-6600 desktop last week from normal parts for about USD875. So, I challenge you – build something better (complete, of course) that runs Fortran and MPI for less than EUR500 (USD570) and donate it to Kristina in Bulgaria; she’ll happily take it until her new “true” supercomputer gets installed. Otherwise, just enjoy seeing her skills but stop the “google benchmark” whining ….

I don’t get it. The article states some real high BS, and you come with this. Anyway https://www.google.hu/search?q=ebay+e5-2670

Sure – $60 for a used Xeon, and it comes without the required cooler …. But that’s not a system. It needs an LGA2011 board for about $120 (ebay, more if you want 4 RAM slots), a power supply of a few hundred watts for about $50, loads of DDR3 or DDR4 RAM for something like $50, a disk to boot from for about $50, a case with a fan to get the 140 Watts of heat away, etc. Easily gets to the EUR500 she spent, and doesn’t even do your fancy GPU number crunching stuff… look – it’s simple: she was happy with her results and proud of what she did. No point in criticizing that, is it?

Precisely! She solved a problem inexpensively. Then she used the tool to get publishable results. She also showed all work. Now I know about Radxa boards and FreeBasic as well!

She says in the video she only used 4 boards, so 16 cores. The recommended PSU is 5V @ 2A, so 10 watts max each or 40 watts total. An 8 port 10/100 hub is about 5 watts, and a USB hub about the same, so around 50 watts in total. Just ONE Xeon is 115 watts!! That is with no peripherals and no fans!!!!!!!! So I say BS to the max to EE.
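The power estimate in the comment above can be written out as a quick calculation. This is only a sketch of the commenter's own arithmetic: the 5 V at 2 A supply rating, the rough 5 W guesses for the Ethernet hub and the USB hub, and the 115 W figure for one Xeon are all taken from the comment rather than measured, and the variable names are made up for illustration.

```python
# Back-of-the-envelope power budget, using only the figures quoted in the comment.
BOARDS = 4                  # boards used, per the video
SUPPLY_VOLTS = 5.0          # recommended PSU voltage
SUPPLY_AMPS = 2.0           # recommended PSU current
ETHERNET_HUB_WATTS = 5.0    # commenter's rough guess
USB_HUB_WATTS = 5.0         # commenter's rough guess
XEON_WATTS = 115.0          # quoted figure for one Xeon, no peripherals or fans

# Worst case per board is the full rating of its supply.
board_watts = SUPPLY_VOLTS * SUPPLY_AMPS
cluster_watts = BOARDS * board_watts + ETHERNET_HUB_WATTS + USB_HUB_WATTS

print(f"per board: {board_watts:.0f} W")
print(f"cluster:   {cluster_watts:.0f} W")
print(f"one Xeon:  {XEON_WATTS:.0f} W ({XEON_WATTS / cluster_watts:.1f}x the cluster)")
```

Note that this compares power draw only; it says nothing about how much work each system gets done per watt.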

You can buy a decent PC for EUR500 that should be able to piss all over a cluster of Ethernetted ARM microcontrollers. Sure it might run a bit hotter, but they have air in Bulgaria. You can’t beat the performance of a load of cores, on one chip, with a load of slower cores connected by Ethernet. All sorts of latencies and inefficiencies are gonna creep in and kill performance. I know supercomputing is usually batch-mode stuff, but for her trouble she’d have been better with PCs. Possibly using Ethernet if she wanted to hook a couple of them together, only GB Ethernet.

The basic principle is that x86 are made for performance, ARM units like that are made for limited performance, low power use, low heat, cheapness, etc. Tool for the job.

She’d also be able to use the PC to play games and write assignments on, when she wasn’t supercomputing.

I don’t think the girl ever tried to beat Cray on this one …

She beat the clock, beat the budget and beat a path to the publisher…and that’s usually the winning combination in academic research.

Personally I’m tickled about freeBasic running on a Pi/ARM/etc too (I won’t use the “A”-word here).

60 usd e5-2670

http://www.ebay.com/itm/SR0KX-INTEL-XEON-E5-2670-8-CORE-2-60GHz-20M-8GT-s-115W-PROCESSOR-CPU-/281889330127

Just don’t be a lamer

https://www.reddit.com/r/homelab/comments/4798eo/dual_socket_motherboard_for_e52670/

https://www.youtube.com/watch?v=6b-01q09bLU

etc.

Sure – reddit, the junkyard of the internet. But there is a nice example from JakeRadden.

Costs close to $1000 as well, for some “old hardware” from ebay (shipping costs probably not included). And don’t forget – many US vendors on ebay can’t ship computing hardware to countries such as Bulgaria …

Did you read the two most important bits? She is in Bulgaria! And she is a PhD student at a university with a supercomputer. Do you think she had no access to advice? What is all this “this article is BS” stuff? How much do you think your solution would cost in Bulgaria? Did you make any attempt to find out?

Not only did she make this, at a reasonable cost, but she actually used it for real work, and got a paper out of it. What is it with you people? Did you not read the article? Did you watch the video? Did you read any of the background stuff? I think your way is only better if you are in the US. To paraphrase one of the idiots who were vying for the Republican nomination, “The USA is not the planet”.

+1

I just don’t get the vitriol against the project and paper. It was a neat solution to a problem with multiple ways of solving. There is no single best way to solve academic problems like this, and half the innovation comes from how you solve that problem and come to the conclusion. Not necessarily what that conclusion was.

This is ‘Hack A Day’, not “Buy-stuff-and-easily-assemble-it-like-Ikea A Day”

Well, you can buy a used quad socket IBM server with 4x 6 core Xeons and 32 GB of RAM for 550€; being in another country, you can probably claim the VAT back, so it is effectively under 500€.

https://www.itsco.de/server-ibm-system-x3850-m2-4x-intel-six-core-xeon-x7460-6x-2-66ghz-7233-18855.html

I think this server packs quite a punch compared to some budget class ARM cpus tied together with a slow, high latency network.

Of course, for a student’s curriculum vitae it looks way cooler to build some contraption with several ARM boards and manage to make it work. So I think the decision to do it this way is smart, but smart as a way of learning and of giving your curriculum vitae some “cool factor” (she’s at the conference and on hackaday; nobody would have talked about the initiative had she used a quad socket server, boring stuff!!!).

So congratulations to her for the clever initiative and the visibility gained from it.

If you need processing power, why ARM?

I think that may not be the goal of this particular build.

Indeed the stated aim is to get compute for research, but the research is in fact a tinker with some cluster stuff ‘cos they had a spare 500 to blow on some cool gear. Fair enough, but please, Hackaday, don’t say it was for quantum mechanics or neural networks, because they would have been better off with a big Xeon/GPU or something like that if they had a real problem to solve.

That’s one way to blow money on rapidly depreciating assets. I have a 4x 7970 GPU “not-quite-supercomputer” with a 8 core AMD 4ghz CPU. It’s powered off 90% of the time, and I would have been better off selling it after I was done GPU mining and buying Amazon Giftcards. It’s nice to have but I would happily sell it for half of what I paid for it.

And the people buying the cards off of you would have ended up with cards so horrifically overworked from GPU mining that they’d have failed within months of purchase. But that doesn’t matter to you libertarian “captains of industry”, because caveat emptor, amirite?

How did we get from a discussion of parallel processing to the throwing of personal insults? How do Amazon gift cards go bad from being “horrifically overworked”??? When did believing in personal liberty become a bad thing on a hacking website? How does wanting to sell his computer make him a “Captain of Industry”? Your post is so confusing on so many levels. Maybe you could clarify, else just go have a beer and have a few laughs.

By cards he means the GPU’s -.-

And yes, gpu mining kills the chips, too much thermal cycling.

In the bitcoin world, Amazon gift cards are one of many ways to transition in and out of something of value. I buy you a 947 dollar gift card and you give me 4 bitcoins, for example. There is no involvement of any chips on these cards and GPU mining is simply a way to earn new bitcoins by monitoring and documenting the worldwide trade in them. No Amazon cards are involved in the actual GPU mining.

OK, I get it – he was talking about the hard disks. Overworked hard disks – this is first time I have heard of that being an issue and it is no reason to imply that the guy is personally unethical or dishonest.

He was talking about the GPUs on the graphics cards, not the hard disks!

WTH? They aren’t gears? Silicon doesn’t degrade from usage. Face Palm…… This comment of yours is the height of hubris and ignorance at the same time.

I have a 48 core Dell PowerEdge R815 I’d gladly donate to her cause.

Why not hire compute time in the cloud https://aws.amazon.com/hpc/, and fund it via https://www.patreon.com/ ?

Absolutely. It’s possible to see from 10,000ft that this isn’t cost- (or anything-) effective. I do wonder how far that €500 would have got her on aws (or better google cloud)

That is why some of us work/ed in management and others at the coalface. :-) I’d have tried to pitch a PR benefit angle to Jeff Bezos and have all the compute dollars she could ever need. On CPUs that are underutilised the only cost is the energy to use them, everything else is pretty much already paid for. So is the cloud the most energy efficient way to crunch a large pile of numbers? I’d assume so, given their profits are in being able to do just that, maximise computations (and or storage) per unit cost. The scales of economy also suggest it is the most efficient way to go.

Looking at the numbers for EC2, I’d wager a guess that paying EUR500 to Bezos would get her around 5 weeks of 24/7 computing power equivalent to her own build. Unlikely it would allow her to finish her PhD work in time …

Looking at what I actually suggested would get you a very different result. See the original comment.

Wow, everyone seems to overlook a big benefit of the mini-arm cluster versus min-maxing a commodity PC. Presumably she’s programming the mini-cluster with the same tools that are used on the university super-computer. In that case, code tested and de-bugged on the mini-cluster can port to the super-computer with hardly any new work.

Ah no, the architecture change does matter a lot, the same code may give different results.

As an embedded engineer with 15 years of experience. No. It does not matter at all. Unless you do stupid shit in C that people have stopped doing 10 years ago.

And in this case it matters even less, it’s about the tools used, the point isn’t that this is faster or not now. It’s that if it needs to be faster x100, it can scale up to that. Good luck with the single machine solution.

Well as a person who has been used Google for at least 5 minutes I have to point out that I have fact backing up my claim. https://science.slashdot.org/story/13/07/28/137209/same-programs–different-computers–different-weather-forecasts

So people do that sort of “stupid shit”, all the time, even, highly intelligent and well educated people.

*has used, or *has been using.

Neither is a great achievement, really.

Lame troll bro.

While I agree in concept, if she wanted to pretend she has a cluster she could run a bunch of lxc instances on her desktop, and she would have a cluster environment that would be cheaper and, as already pointed out, a hell of a lot faster than a bunch of budget Rockchip CPUs with 100mbit ethernet (the real test of a proper cluster is the interconnect fabric).

slower than ordinary desktop at same price point, but hey LOOK AT ME I BUILD A SUPERCOMPUTA!11

:(

Sorry but I must disagree, a ~$2k (new) bare dual Xeon system with a s–tload of RAM is not an ordinary desktop computa…

This lady’s machine may not be your ideal (and that’s okay) but it’s

[x] simple, quick to build

[x] low power

[x] cheap, yet

[x] scalable, and

[x] code transparent, runs Fortran and openMP, just like her real one

[x] well documented

[x] suits her needs

[x] makes her happy

I don’t have a problem with that, really.

I recall back in about 1993/4 in the middle of winter with snow on the ground seeing a dude at a train station in sneakers, shorts and tee-shirt (the dude, not the train station) that read “My other computer is a Cray”. This student kind-of fits that image.

your list misses main reason for building a cluster, speed

A cluster of comparable hardware always has lower speed on a single-threaded task. You may want to throw a cluster at problems that take hours or days to solve. Bandwidth of calculation is more important than raw speed. Desktop architecture is more suited to bursts of computation, not prolonged number crunching.

and another one missing the point

ONE i7, even 2 generations old, is _10 times_ faster than quad core ARM used here _in embarrassingly parallel problems_. Obviously any comparisons in single threaded ones are pointless.

You can tell she is talking out of her ass when she mentions using the GPU for computation :/ Mali-400 = no OpenCL; good luck writing shaders to extract the whole, purely-on-paper 20 Gflops (think >10 year old Xbox/GeForce FX). Meanwhile Intel has proper OpenCL support and, surprise surprise, ~20x the performance.

Agreed.

Also If the tasks require deep parallelism (heavy per-node computation/light inter-node communication) this approach is better. Again, at the end it boils down to the algorithms themselves.
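The "heavy computation, light communication" point can be illustrated with a toy model. This is a sketch under loud assumptions, not a benchmark: it assumes the compute splits perfectly across nodes, that communication does not overlap with compute, and that the link is 100 Mbit/s Ethernet as mentioned elsewhere in the thread. The workload sizes and the function names `cluster_time` and `speedup` are hypothetical.

```python
def cluster_time(compute_s: float, nodes: int, comm_bytes: float, link_bytes_per_s: float) -> float:
    """Toy model: compute divides evenly across nodes, communication is added on top."""
    return compute_s / nodes + comm_bytes / link_bytes_per_s


def speedup(compute_s: float, nodes: int, comm_bytes: float, link_bytes_per_s: float) -> float:
    """Speedup relative to running the whole job on one node with no communication."""
    return compute_s / cluster_time(compute_s, nodes, comm_bytes, link_bytes_per_s)


LINK_BYTES_PER_S = 12.5e6  # 100 Mbit/s Ethernet, ignoring protocol overhead

# Hypothetical job: 1000 s of single-node compute, spread over 8 nodes.
light_comm = speedup(1000.0, 8, 12.5e6, LINK_BYTES_PER_S)   # about 1 s of traffic
heavy_comm = speedup(1000.0, 8, 12.5e9, LINK_BYTES_PER_S)   # about 1000 s of traffic

print(f"light communication: {light_comm:.2f}x")
print(f"heavy communication: {heavy_comm:.2f}x")
```

With little traffic the eight nodes get close to an 8x speedup in this model; with heavy traffic the cluster ends up slower than a single node, which is the sense in which it "boils down to the algorithms".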

Ok so a lot of people debating whether or not it’s a bad idea. Most of the things coming out are “she’s dumb, I could do better”.

I call for freaking sexism. You people are jerking off when you see a stupid Pi cluster which has the same power as a 15-year-old PC, yet nobody got impressed by this cluster, which looks way better and more useful than any cluster I’ve seen. Don’t mind the number of ARM boards; the overall power and its usage make this project awesome.

Nobody said the PERSON was dumb, because of their sex, or even implied it, well other than you.

Sure, no one said it openly; there is just this much fury to prove her wrong. If you are a PhD student, getting published is pretty much the definition of being right.

Apparently she did the right thing for her budget, availability and computational needs; computational needs that change drastically from one problem to another: remember, not everything is bitcoin.

The paper was published because, I presume, she was right about the quantum whatevers. Whatever the subject was. You could work that out on a ZX Spectrum if you had enough time, and a big swap file. Any computer can do any other computer’s job, Alan Turing said that.

She could’ve gone with x86 CPUs and she’d have got the results faster. Maybe it doesn’t matter, maybe she had the time, maybe she learned something useful from dicking with ARM boards. But the technically best solution wouldn’t use ARM boards like that.

It is itself sexist, to accuse someone of sexism, just because they criticised something a woman did. I’m sure her hormones and X chromosomes had nothing to do with her bad choice of computer hardware.

False, there are two types of arguments here and neither have anything to do with her as a person, or her sex, one against the HAD author’s choice of words and more technically detailed ones about what can be achieved for the same budget using different hardware options. Getting published is actually proof of nothing these days, even the output of Markov chains have managed to get published, it is also irrelevant to the points people have made which have nothing to do with academic credibility and are purely applied electrical engineering arguments.

Are you going to also call me sexist for pointing out the entire thing could have been done using donations and leased computer time? That isn’t even a technical argument, it is economics. So perhaps the need to write a certain type of paper did skew her choices?

Personally I don’t even consider it a hack as it is nothing more than what many people have been doing for the last two decades, or more. I would have been seriously impressed by a project that turned the GPIO bus on these cheap boards into a direct high speed interconnect, if it turned out to be faster than the Ethernet IO etc.

If you have to defend your actions by saying anything along the lines of “You have no right to question what I have done because I am a [whatever]”, that is an appeal to authority fallacy. The same goes for any indirect forms of the argument, including the SJW crap along the lines of “I don’t care about the facts of your argument, you are just being sexist and have no right to voice an opinion...”.

You are crazy. They are piling shit because the justification given in the article is WRONG. 500€ on these silly ARM computers is not a cost effective way to do scientific calculations. It doesn’t take a PhD candidate to figure this out. Building an ARM cluster for fun is one thing; pretending it’s a good solution meeting the given criteria is delusional. Meanwhile, you’re the only SJW clown on the board talking about sex.

There are no hard feelings about this project or anybody involved in it.

A computer system, cluster, desktop, portable you name it, is the combination of various independent components connected to each other. There is no single magic component which will make your computer fast, it is the combination of the components that creates a fast computing system. The first thing to understand is that the system will be as fast as the slowest component being used. The second thing to understand is that there is always one slow component, that’s the bottleneck of the system. It can be the CPU, memory, storage, network etc. 8 little 2Gbyte ram arm nodes connected to each other over a 100mbit ethernet (maximum ~ 12Mbyte/sec) interconnection, isn’t going to be as fast as a fast desktop computer.

Someone may say that it’s the node’s internal bandwidth that counts. First, that’s not the case in computer clusters, and second, the rk3188 has 4.224GByte/s maximum memory bandwidth ( https://mikecanex.wordpress.com/2013/08/16/sdk-2-0-cant-fix-chinese-retina-class-tablet-hardware-limits/ ), but that’s the limit; real-life performance is much lower ( http://browser.primatelabs.com/geekbench2/2529494 ), where the stdlib write is around 2.2 Gbyte/s.

Even a not very fast desktop computer has 25 Gbyte/s memory bandwidth. A 100 usd GPU nowadays has at least 100 Gbyte/s memory bandwidth.
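To put the bandwidth figures quoted in these comments side by side, here is a small sketch that computes how long it would take to move one node's worth of RAM (2 GB) at each rate. All bandwidth numbers come from the comments themselves; treating units as decimal (1 GB = 1e9 bytes), ignoring protocol overhead, and the helper names are assumptions made for illustration.

```python
NODE_RAM_BYTES = 2e9  # 2 GB per node, decimal units assumed


def megabits_to_bytes_per_s(mbit_per_s: float) -> float:
    """Convert a link speed in Mbit/s to bytes/s, ignoring protocol overhead."""
    return mbit_per_s * 1e6 / 8


def transfer_seconds(num_bytes: float, bytes_per_s: float) -> float:
    """Time to move num_bytes at a sustained rate of bytes_per_s."""
    return num_bytes / bytes_per_s


# Bandwidths as quoted in the comments.
bandwidths_bytes_per_s = {
    "100 Mbit Ethernet": megabits_to_bytes_per_s(100),  # 12.5 MB/s
    "rk3188 memory (stdlib write)": 2.2e9,
    "modest desktop memory": 25e9,
    "100 USD GPU memory": 100e9,
}

for name, bandwidth in bandwidths_bytes_per_s.items():
    seconds = transfer_seconds(NODE_RAM_BYTES, bandwidth)
    print(f"{name:30s} {seconds:10.3f} s")
```

On these numbers the Ethernet link needs 160 seconds to ship what the node's own memory can write in under a second, which is the gap the commenter is pointing at.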

Here is the comparison ( http://browser.primatelabs.com/geekbench3/compare/6497221?baseline=6504035 ) with a not too fast core i5 6500 (200 usd new) cpu. You can clearly see that nearly every multicore performance result is more than 10x.

Also here is comparison with e5-2670 ( http://browser.primatelabs.com/geekbench3/compare/6509647?baseline=6504035 ), these are the chips you can get about 60-100 usd (used).

But don’t forget the 12 Mbyte interconnection bandwidth! In a cluster that’s what is important.

So the article statement “this cluster isn’t a supercomputer, it is significantly faster at computationally demanding problems than a single, fast desktop processor.” is a huge pile of BS! Anybody saying that is clearly not up to the mark! That’s it. No hard feelings or whatever.

If you want to play around clusters just fire up some virtual machines, or as someone recommended run some lxc instances, if you don’t want the VM overhead.

The claim may have been stated poorly. However, this article highlights the difference between computer science and SE, HE and EE. Although raw power does help on the pragmatic side, it is not the accurate picture: we want faster algorithms.

Just get an old nvidia graphics card with a decent amount of CUDA cores (GTX 750’s are less than $100 for over 500 GPUs) and this will absolutely obliterate that little cluster. To be honest, I am not sure, but neither are they as they have not included any comparison with any desktop solution. Lame article. HaD is becoming really lame.

Common misconception – CUDA cores can’t run their own programs. A more accurate statement would be that the GTX 750 has 2-4 streaming multiprocessors, each with a number of CUDA cores.

It’s like the comparison between gates and LUTs in an FPGA – can’t program a gate.

Parsimonious Praise for Parallel Processing near Poland (OK, in Bulgaria)

Having spent only about $650 USD to assemble 16 processors to run simulations that lend themselves to parallel calculations, this young woman can’t seem to catch a break here. She used available, inexpensive hardware and open software to meet an actual need. Perhaps further comments could begin with “Yes, and … ” then go on to describe penny-pinching projects that helped them accomplish a non-trivial outcome.

Contrary commentators could consider alliteration, rhyme or mnemonics to cushion their criticisms.

Sincerely spoken and well written … :-) +1

In the presentation she states she really just wanted to play with some single board computers. Seems the “journalism” took over and HAD decided to claim that ” it is significantly faster at computationally demanding problems than a single, fast desktop processor”. Of course, even the talk is titled “High Performance” which is deceptive in itself.

God !! That presentation is a shame.

I have only a few words to say: GPU, FPGA, ASICs (or even a Core i7) can beat that thing. Well, to be serious, I would prefer a Parallella Board (or even 4…each one has 16 cores…16 x 4…).

Try and do some big NAG number crunching with 16 coprocessor cores on a total of 1Gb of slow shared SDRAM … yeah, right …

16 x 4 !

4 times a bit of a disaster is still a disaster …

So here’s a challenge: go build one, and show it off, here on this forum. Complete system, less than $600us, running openMP, and show us your LINPACK results …

Already did ! for 400$ = 64 cores (not a challenge…30 min was enough). Now, lets do a LINPACK test.

Cool. Details ? Link to build-log ? Partslist with cost breakdown? RAM? Disk size ,swap? I/O throughput ? I’d be sincerely keen to see details and how these actually compare with the build presented here by OP.

I noted that on eembc.org/coremark the Adapteva 64 core unit looks alright (10 out of 25), but I think the rank is artificially inflated by using the coremark/MHz ranking, rather than the raw speed. In the end, no one other than energy optimisers care for the /MHz values, most people would like to see how fast the thing is as a whole.

If your build is cool (hot) enough, it might “qualify” for a HAD post and we might actually see some real contribution, rather than just blunt criticism.

OK, it is nice, but I also made a small compact cluster of 5x RoPI 3 and Odroid XU4s (which are much stronger) as part of a project that has to support Big Data (as a small-node cluster) for my Ph.D. dissertation research, and did not make so much fuss about it.

So let’s make it clear: a Ph.D. candidate should have the required technical knowledge, and that is OK, but clustering small nodes with ARM Cortex A9 (with 4x cores) is NOT HPC any more. Nowadays you need to implement some form of massive parallelization using heterogeneous platforms to move from the domain of clustered servers to high speed computing. I use OpenCL and Renderscript on an Odroid XU4 cluster to boost the speed of its Heterogeneous Multi-Processing octa-core ARMs, and even that is not too innovative :)

Maybe the builder had access to the boards – so cost was minimal?

We have been building personal clusters for a while now at BigBoards. I know, it is a company, and yes, we ask money for the things we build, but this is not meant as a sales pitch, but more about sharing our experiences.

We started out with ARM A9’s on the wandboard (4 cores, 2Gb RAM + SATA). For us the SATA connection was pretty important since we use these clusters mainly for BigData purposes, not something you can put on an SD card. Thing is that a lot of people told us these things were good for doing little experiments, but the limited memory was really an issue. We have been looking around for other boards, but could not find any boards with more than 2Gb RAM (remember, 2 years ago). We even thought about designing one ourselves with the help of Freescale, but even they were very sceptical about putting more than 2Gb RAM on an ARM, mostly because of the gain/cost ratio. I truly believe there is something to say about ARM64, which if I remember correctly goes theoretically up to 128Gb, but those chips are really hard to come by. Pine64 seems all right but it lacks that SATA connector we need so badly.

Long story short, we started building Intel versions as well, based on the Intel NUC. That way we could go up to 16Gb RAM on each node, have a decent CPU as well as SATA and M.2 SATA possibilities. Right now I can safely say that almost all of the devices we sell are Intel versions.

To give you an idea of the costs and what you get in return:

ARM version:

6x Wandboard QUAD (4 cores, ARM Cortex A9, 2GB RAM)

6x SDCard 16GB (OS)

6x 1TB HDD (DATA)

1x Gbit networking

1x case

List price: 3500 EUR, What it costs us: approx. 1800 EUR excl assembly time

Intel version:

6x Intel core i5 (dual core)

6x 16Gb RAM

6x 1Tb HDD (DATA)

6x 30Gb SSD (OS)

1x Gbit networking

1x case

List price: 6500 EUR, What it costs us: approx. 4500 EUR excl assembly time

Of course BigData and HPC are not the same thing, and the requirements are quite different, but it gives some indication. We have been looking into building a version of our cluster specifically for HPC based on the Parallella boards or one of the great NVidia boards (also ARM based) but that is still a work in progress.

Lots of replies, and many years later, and me, no scientist, can silence the mockers by this very simple equation:

Core i7, 4Ghz, 4cores 8threads, 65Watt = 4 * 8 = 32 GHz / 65Watt (not including PSU efficiencies, and other motherboard consumptions).

ARM, 4 cores, 2Ghz, 3 Watts (under full load) = 8Ghz / 3 watt (not including HDMI or keyboard/mouse peripherals).

While a single i7 core can do more per cycle, a 1-core, 2-thread processor does about the same work as 2 ARM processors running at the same frequency.

So if the desktop performance equals 32 Ghz and it’s using 100Watt,

A comparable arm cluster would be either:

– 4x quadcore arm CPUs at 2 GHz, using <20W

Or

– 20x quadcore arm CPUs at 2 GHz doing 160Ghz at 100Watt.

The math doesn't lie, even if an x86 CPU is faster than an arm CPU, arm beats it by a lot, through the use of parallel processing.
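The arithmetic in this last comment can be reproduced in a few lines. This sketch only follows the commenter's own method, which multiplies clock speed by thread or core count and divides by power; that "aggregate GHz" figure is a crude proxy and not a measure of real throughput, and several earlier comments dispute it precisely because work per cycle differs between the chips. The `Chip` class and its field names are hypothetical, and the wattages are the ones quoted in the comment.

```python
from dataclasses import dataclass


@dataclass
class Chip:
    name: str
    clock_ghz: float
    parallel_units: int  # threads for the i7, cores for the ARM, as in the comment
    watts: float         # CPU power only, as quoted

    @property
    def aggregate_ghz(self) -> float:
        """Clock times parallel units: the commenter's proxy, not real performance."""
        return self.clock_ghz * self.parallel_units


i7 = Chip("Core i7", clock_ghz=4.0, parallel_units=8, watts=65.0)
arm = Chip("quad-core ARM", clock_ghz=2.0, parallel_units=4, watts=3.0)

for chip in (i7, arm):
    print(f"{chip.name}: {chip.aggregate_ghz:.0f} GHz at {chip.watts:.0f} W "
          f"= {chip.aggregate_ghz / chip.watts:.2f} GHz/W")

# The two ARM cluster sizes mentioned in the comment.
for count in (4, 20):
    total_ghz = count * arm.aggregate_ghz
    total_watts = count * arm.watts
    print(f"{count}x {arm.name}: {total_ghz:.0f} GHz at {total_watts:.0f} W (CPUs only)")
```

The CPU-only total for 20 boards comes to 60 W here; the comment's 100 W figure presumably leaves room for networking and other overhead, but that is a guess rather than something the comment states.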

That being said, you want performance, go GPU.