Multispectral imaging can be a useful tool, revealing all manner of secrets hidden from the human eye. [elad orbach] built a rig to perform such imaging using the humble Raspberry Pi.

The project is built inside a dark box, which keeps outside light from polluting the results. A camera mounted at the top images specimens placed below, and the Pi uses it to take photos under various lighting conditions. The build relies on a wide variety of colored LEDs for clean, accurate light output. The LEDs are all installed on a large aluminium heatsink, and can be turned on and off via the Raspberry Pi to capture images under different illumination settings. A sheath is placed around the camera to ensure only light reflected from the specimen reaches it, cutting out bleed from the LEDs themselves.
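The capture routine described above, lighting one LED color at a time and taking a photo under each, can be sketched roughly as follows. This is only an illustration and not the builder's actual code: `capture_stack`, `set_led`, `capture`, and the channel names are all hypothetical, and the hardware access is passed in as plain callables so the loop can be tried without a Pi attached.

```python
import time
from typing import Callable, Dict, Iterable, TypeVar

Frame = TypeVar("Frame")


def capture_stack(
    channels: Iterable[str],
    set_led: Callable[[str, bool], None],  # hypothetical: switch one LED channel on/off
    capture: Callable[[], Frame],          # hypothetical: grab one frame from the camera
    settle_s: float = 0.0,                 # optional pause so the LED output can stabilize
) -> Dict[str, Frame]:
    """Capture one frame per LED channel, with only that channel lit."""
    stack: Dict[str, Frame] = {}
    for name in channels:
        set_led(name, True)
        try:
            if settle_s > 0:
                time.sleep(settle_s)
            stack[name] = capture()
        finally:
            # Always switch the channel off again, even if the capture fails,
            # so the next frame is not polluted by the previous color.
            set_led(name, False)
    return stack
```

On real hardware, `set_led` would wrap whatever drives the LEDs and `capture` would wrap the camera library in use. The result is a dictionary with one frame per channel name, such as `"blue"`, `"red"`, or `"infrared"`.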

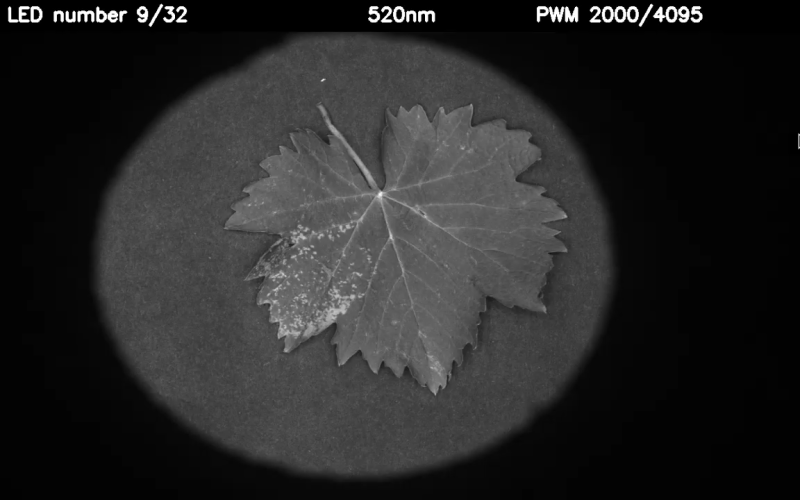

Multispectral imaging is particularly useful when imaging botanical material. Taking photos under different lights can reveal diseases, nutrient deficiencies, and other abnormalities affecting plants. We’ve even seen it used to investigate paintings. Video after the break.
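As one illustration of how frames taken under different lights might be combined for plant work, here is a minimal sketch of NDVI, a widely used vegetation index computed per pixel as (NIR - Red) / (NIR + Red). The project write-up does not say this index is used; the function name `ndvi` and the nested-list image format are assumptions made for the example, and real frames would first need calibrating against a white reference before the numbers mean much.

```python
from typing import List

Image = List[List[float]]  # assumed format: rows of per-pixel intensities


def ndvi(nir: Image, red: Image) -> Image:
    """Per-pixel (NIR - Red) / (NIR + Red); results lie in [-1, 1]."""
    if len(nir) != len(red) or any(len(a) != len(b) for a, b in zip(nir, red)):
        raise ValueError("frames must have the same shape")
    return [
        [
            # Pixels that are dark in both frames carry no information: report 0.
            0.0 if (n + r) == 0 else (n - r) / (n + r)
            for n, r in zip(nir_row, red_row)
        ]
        for nir_row, red_row in zip(nir, red)
    ]
```

The two inputs would be the frames captured under the infrared and red LEDs, scaled to the same range.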

I wonder if this technique should have a name like Multi-Spectral Illumination Imaging? It isn’t the same as spectral slices of something illuminated by a continuous source.

(Dear headline writer: IIRC there are no optical components in an RPi.)

It is actually called active multispectral imaging.

The results will not be the same for any material with fluorescence (ie: blue light channel will show up bright, even though the surface is glowing red).

okay… cool… but… They should take a couple different objects like plastic, stone, powder and a leaf to show off the benefits and prove to themselves that they are actually capturing what they think they are capturing.

True, but it looks like the LED wavelengths, at least, have been verified with a spectrometer. Surprisingly, their FWHM is much smaller than I thought.

Looks like what I'd expect to get from the specific LED wavelengths (for example, in the infrared the dead spots on the leaf reflect more light, and there is more absorption of blue and red and more reflectance of green). Plus, the wavelengths have been tested against a spectrometer (and, at least to me, their spectral width is much narrower than I thought), so from there it should be by the book.

With machine learning you could have a robot bug hunter/tracker in your greenhouse

Try active multi-spectral imaging :-) It has a couple of advantages over a multi-spectral camera and a couple of disadvantages.

It’s so great to see a DIY version of this! A company called Megavision makes very high-end systems like this (50 megapixel resolution, spectral coverage from near-IR to near-UV). They’re used by museums, historians, archaeologists, etc. to extract hidden data from artwork and artifacts. They’re cool systems, and I always thought it would be a great project for DIY hacking: https://mega-vision.com/cultural_heritage.html

Big props on the build!

Great development, but a solution already exists that has gone commercial exploiting this technology:

https://xloora.com/en/

It is nice to see that there is interest in this technology from the development community.

The applications are vast!