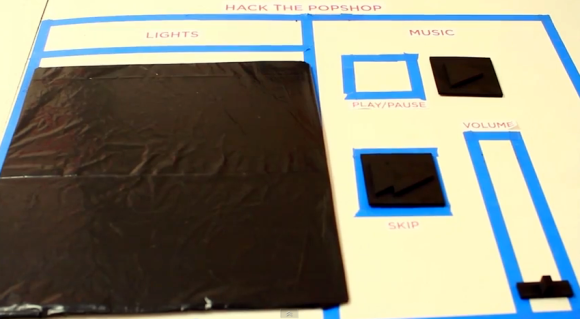

[Jeremy Blum], [Jason Wright], and [Sam Sinensky] combined forces for twenty-four hours to automate the entertainment and lighting controls at their hackerspace. They commandeered the whiteboard and used a webcam that was already in place as part of their project. You can see the black tokens, which can be moved around the blue tape outline to actuate the controls.

MATLAB is fed images from the webcam that monitors the space. A frame is received once every second and parsed for changes in the tokens. Small black squares either skip to the next music track or toggle play/pause: simply move one off its designated spot and the image processing does the rest. The same goes for the volume slider. We think the huge token for the lights is there to ensure that the camera can sense a change in a darkened room.
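The change detection described above can be sketched in a few lines. This is a hypothetical Python illustration, not the team's MATLAB code: it assumes grayscale frames stored as lists of rows with pixel values from 0 (black) to 255 (white), and the region coordinates, `DARK_THRESHOLD`, and every function name below are made up for the example. A control fires when its region was dark (token present) in the previous frame and is bright (token moved away) in the current one; the slider maps the darkest column of its strip to a 0 to 100 volume.

```python
# Hypothetical sketch, not the original MATLAB code.
# Frames are grayscale images stored as lists of rows, pixel values 0 (black) to 255 (white).

# Assumed regions of interest: (row_start, row_end, col_start, col_end), end-exclusive.
REGIONS = {
    "next_track": (10, 20, 10, 20),
    "play_pause": (10, 20, 30, 40),
}
SLIDER_REGION = (40, 45, 0, 50)  # assumed horizontal strip for the volume token
DARK_THRESHOLD = 80  # assumed: a mean below this means a black token covers the area


def region_mean(frame, region):
    """Average pixel intensity inside a rectangular region."""
    r0, r1, c0, c1 = region
    pixels = [px for row in frame[r0:r1] for px in row[c0:c1]]
    return sum(pixels) / len(pixels)


def token_present(frame, region, threshold=DARK_THRESHOLD):
    """A region counts as covered when it is darker than the threshold."""
    return region_mean(frame, region) < threshold


def detect_events(prev_frame, frame, regions=REGIONS):
    """Names of controls whose token was moved off its spot since the previous frame."""
    return [
        name
        for name, region in regions.items()
        if token_present(prev_frame, region) and not token_present(frame, region)
    ]


def slider_value(frame, region=SLIDER_REGION, threshold=DARK_THRESHOLD):
    """Map the darkest column of the slider strip to a 0-100 volume, or None if no token."""
    r0, r1, c0, c1 = region
    best_col, best_mean = None, None
    for c in range(c0, c1):
        col_mean = sum(frame[r][c] for r in range(r0, r1)) / (r1 - r0)
        if best_mean is None or col_mean < best_mean:
            best_col, best_mean = c, col_mean
    if best_mean >= threshold:
        return None
    return round(100 * (best_col - c0) / (c1 - 1 - c0))


def make_frame(height, width, fill=255):
    """Blank (white) test frame."""
    return [[fill] * width for _ in range(height)]


def paint(frame, region, value=0):
    """Draw a solid token into a test frame."""
    r0, r1, c0, c1 = region
    for r in range(r0, r1):
        for c in range(c0, c1):
            frame[r][c] = value


if __name__ == "__main__":
    prev = make_frame(50, 50)
    paint(prev, REGIONS["next_track"])
    paint(prev, REGIONS["play_pause"])
    cur = make_frame(50, 50)
    paint(cur, REGIONS["play_pause"])  # the next-track token has been moved away
    paint(cur, (40, 45, 24, 27))       # volume token roughly mid-strip
    print(detect_events(prev, cur))    # prints ['next_track']
    print(slider_value(cur))           # prints 49
```

In a loop that grabs one frame per second, each event name would be handed to whatever drives the music player or lights. Comparing against the previous frame means an action fires once when a token leaves its spot rather than on every frame. A fixed mean-intensity threshold is the simplest possible test and would need tuning as room lighting changes.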

If image processing isn’t your thing, you can still control your audio entertainment with a frickin’ laser.

Nice! They could also have used http://lasertraq.googlecode.com (and maybe contributed to it) to achieve that with FOSS. They probably didn’t know…

I wonder... If the room is dark, I understand the need for the larger black square for lighting control. But what if the color were changed to something light, like neon green, or a reflective strip of some sort?