The Internet of Things is fast approaching, and although no one can tell us what that actually is, we do know it has something to do with being able to control appliances and lights or something. Being able to control something is nice, but being able to tell if a mains-connected appliance is on or not is just as valuable. [Shane] has a really simple circuit he’s been working on to do just that: tell if something connected to mains is on or not, and relay that information over a wireless link.

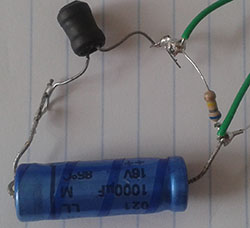

There are two basic parts of [Shane]’s circuit – an RLC circuit that detects current flowing through a wire, and an instrumentation amplifier constructed from three op-amps that the RLC circuit feeds. The output of the amplifier goes through a diode and straight to the ADC of a microcontroller, ready for transmission to whatever radio setup your local thingnet will have.
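The write-up does not say how the microcontroller turns the ADC reading into an on/off answer. One common approach, sketched here as an assumption rather than [Shane]’s actual firmware, is a threshold with hysteresis so that noise near the switching point does not make the state flicker. The function name, thresholds, and readings are all hypothetical.

```python
# Hypothetical on/off decision from ADC readings, using hysteresis.
# Thresholds and sample values are illustrative, not from the project.

def detect_states(samples, on_threshold, off_threshold, initial=False):
    """Return the on/off state after each ADC sample."""
    state = initial
    states = []
    for sample in samples:
        if not state and sample >= on_threshold:
            state = True    # reading rose to the upper threshold
        elif state and sample <= off_threshold:
            state = False   # reading fell to the lower threshold
        states.append(state)
    return states


readings = [100, 250, 350, 320, 280, 240, 100]  # made-up ADC counts
print(detect_states(readings, on_threshold=300, off_threshold=250))
```

Keeping the two thresholds apart means a reading that hovers between them leaves the last reported state unchanged.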

It’s an extremely simple circuit and something that could probably be made with less than a dollar’s worth of parts you could find in a component drawer. [Shane] has a great demo of this circuit connected to a microcontroller; you can check that out below.

Neon light and photo resistor seems up to the task, safety and most bird kill per shot.

Wouldn’t that just detect that mains is on, but not if the actual load draws some current and therefore is really working?

How about using the third opamp in the package as a comparator thus eliminating the need for an ADC Input?

Rectify/chop the output from a pickup coil with a zener – feed straight into ADC. Job done, no?

No – because the output of the tank circuit used as a detector in this case is only around 20 mV (off) to 40 mV (on) – unless you have a very sensitive ADC (which would likely cost much more to implement or purchase than the example) – you won’t be able to sense that change.

This is why the LM324-based operational amplifier is needed, to boost the output up to a level that the ADC on the microcontroller can sense.
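To put rough numbers on that, here is a small sketch of how the 20 mV and 40 mV levels would land on an ADC. The 10-bit resolution, the 5 V reference, and the gain of 50 are assumptions chosen for illustration; the thread does not give the actual ADC or amplifier gain.

```python
# Rough ADC arithmetic for the 20 mV (off) and 40 mV (on) levels.
# ASSUMPTIONS: 10-bit ADC, 5 V reference, amplifier gain of 50.

def adc_counts(v_in, v_ref=5.0, bits=10):
    """Ideal ADC code for an input voltage, clamped to the valid range."""
    full_scale = 2 ** bits
    code = int(v_in / v_ref * full_scale)
    return max(0, min(code, full_scale - 1))


V_OFF, V_ON = 0.020, 0.040   # volts, from the comment above
GAIN = 50                    # hypothetical amplifier gain

print("raw:      ", adc_counts(V_OFF), adc_counts(V_ON))
print("amplified:", adc_counts(V_OFF * GAIN), adc_counts(V_ON * GAIN))
```

Under these assumptions the raw levels are only about four counts apart, which is easy to lose in noise, while the amplified levels are about two hundred counts apart.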

Also – if you are wondering why there seems to be a quiescent 20 mV on the coil, even with the current to the lamp off, it is likely because it is still picking up other induced voltages in the room – likely from other AC powered devices (the o-scope and maybe the power supply, perhaps other lighting in the room).

35 cent optoisolator and a resistor?

ding ding ding! we have a winner!

That requires splicing an optoisolator in line with the power. This is a non contact method of determining if there is current flowing and safer for the average person to implement.

if by splicing you mean plug in the cord?

Oh you get optocouplers in packages that accept 240v plugs now? Do tell where I can find one of these “plug in the cord types”.

idiot.

big resistor, 20mA at 230V, you need 5W.

And it’s going to be hot

It’s better to use a capacitor
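The arithmetic behind the resistor and capacitor suggestions can be sketched as below. It assumes 230 V RMS mains at 50 Hz and ignores the small forward drop of the optoisolator LED; the capacitor figure is only the ideal reactance needed to limit the current, not a complete or safe mains design.

```python
import math

# ASSUMPTIONS: 230 V RMS, 50 Hz mains, LED forward drop ignored.
V_MAINS = 230.0   # volts RMS
I_LED = 0.020     # amps, the 20 mA from the comment above
FREQ = 50.0       # hertz (assumed)

# Series resistor: drops nearly the whole mains voltage as heat.
r_series = V_MAINS / I_LED
p_resistor = V_MAINS * I_LED

# Series capacitor: limits current by reactance Xc = 1 / (2*pi*f*C),
# so ideally it dissipates no real power.
c_series = I_LED / (2 * math.pi * FREQ * V_MAINS)

print(f"resistor: {r_series:.0f} ohm, dissipating {p_resistor:.1f} W")
print(f"capacitor: {c_series * 1e6:.2f} uF")
```

The 4.6 W result is why a 5 W resistor is suggested, and why it runs hot.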

The Internet of Things is just like the Cloud. The reason no one will explain either is because anyone who uses those blanket terms in serious conversation either

A) Thinks you are a moron not worthy of the time it would take to explain the concept they are trying to convey so they give you a BS answer to shut you up.

B) Genuinely has no clue what they are talking about in the first place but has heard other people say it.

Frequently both :D

Cloud computing is nothing more than the modern version of distributed mainframe computing. You do not have to do maintenance and invest in redundant servers. The trade off is you will pay more for something than it costs to do yourself and you give up direct control over your own data and services. Basically it is digital outsourcing. Pay more, lose security, gain flexibility and reliability. There. Just explained what ‘The Cloud’ is. Simple.

The Internet of Things is just a marketing term for the next significant change on the internet. The previous change was called Convergence. The magical mingling of digital data, voice, and video communications in one medium for the first time in history. It came to be as VoIP, Youtube, and Web 2.0. Internet of Things will likely make a similar appearance. Gentle clunky improvement at first (right now) leading to things that are ubiquitous, convenient, but not of vital need. Some bad things will happen, some good. There will be many people adamant that they cannot live without their twitter enabled iDoorbell or firmware updates for their couch. Others will see it as the end of the world with everything tracking your life and being security holes. In short, ‘The Internet of Things’ is just making more stuff internet capable.

An internet enabled couch that notifies mommy on Twitter when the daughter starts making out? That’s money in the bank right there.

Of course, one must offer the optional FUFME upgrade to be of any real success.

Actually, cloud computing is a bit more than that.

Basically – a “cloud” is a collection of servers (generally blade-type or something similarly high-density) in one or more datacenters; these servers start up in an “unallocated” state. Each server has a variety of resources – cpu, disk, ram, etc – available to it.

Clients can then order one or more servers, assign IPs, etc; this order goes out to a system which will then attempt to allocate a free server that matches as closely as possible the configuration the client needs, for however long the client needs it. If the client needs to spin up one server for an hour, no problem; if the client needs 1000 servers for 5 seconds, also no problem. All this, of course, assumes the servers with those resources exist. A good system will only spin the system up when everything is available, otherwise alerting the client as needed about the problem (perhaps allowing them to select to use what can be allocated – it all depends on what the client’s needs are).

Now – that is the basic idea – a pool of servers that can be allocated and de-allocated from as a client’s needs change. I’m sure you can see how this could be useful for certain tasks, and less useful for others (for instance, a cloud system for the front-end of a website could – in theory – allow a client to avoid a “slashdot-effect” by spinning up more front-end web servers to deal with the extra load – and then spin them down as the load decreases – overall a cheaper solution than trying to deal with it using a single machine that may sit mostly idle at times).

The real power of cloud computing, though, comes when – instead of running directly on the hardware – you run one or more virtual machines (VMs) on a hypervisor hosted on each server (essentially making a single server look like many). You can then let the client choose to spin up/down custom images, allow the client to take “snapshots” and “backups” of the images at points in time, allow the migration of images as needed (to servers with more -or- less resources), allow migration of data without needing to shut down servers, and a whole host of other potential options. Because multiple VMs can be on a single server, the hypervisor can do such things as balance the load across the server for the VMs, or even migrate VMs to a different server (maybe in a different datacenter on the network) which has more resources. In other words, the server isn’t a fixed quantity any longer – heck, if the system is set up to take snapshots automatically, should the server the VM is on break, the snapshot could automagically be brought up in its last state fairly quickly, leading to only minutes (ideally) worth of downtime. Or – if you have a slashdot-effect or other DoS-like issue going on, the VM could be migrated to a less congested area of the system, while the main issue is taken care of otherwise.

As you can see – cloud computing is way more complex, but more flexible than a single hosted and/or managed server. Ideally it is cheaper to run as well. Security should be the same or better as a regular server (provided that the cloud provider did their homework, and the client also practiced proper precautions as well). Cloud computing isn’t difficult to understand, the problem tends to be that it is always poorly explained.

@cr0sh well, yes, but that was not the question scuffles addressed. Mostly it just means something something in the Internet that will go away in not that distant future. Also lack of privacy and security.

Yep. Like I said. Distributed mainframe computing.

Everything becomes more complex as the description becomes closer to reality. But for everyone that isn’t an admin, a cloud is just a modern version of distributed mainframe computing.

oh but this: “if the client needs 1000 servers for 5 seconds, also no problem.” Yeah, no problem, except people get charged for vm load time too. Good luck loading an image and doing something useful in five seconds. Doesn’t really matter, nobody charges time like that. If someone needs a thousand servers for five seconds they will be charged a thousand hours. Anyone that is in the industry knows that. Makes me think you just looked up a wikipedia page.

Yep. I finally gave up googling “internet of things” with the feeling i’m missing something. As long as i don’t see any concrete applications, it’s just blabbering around with fancy terms for me.

Well, i like the “sensing current” approach, but “extremely simple circuit” if i have to chip in a µC and Opamps? meh…

Hey! This Thingternet is on its way, very soon! It’s been coming since some point back in the late 1990s, so by now it must be imminent. I dunno whether to hold my breath or automate my ironing board!

Eight and half minute video instead of a simple schematic, three paragraph writeup, and a 30 second video showing it working.

Are people really so ADHD riddled that they can’t read anymore?

I’m guessing this is the result of people using microcontrollers for even the simplest of things instead of a few bits of simple electronics.

Ditto… videos don’t work well as technical writeups. I wish most of these pill popping morons figure it out sooner rather than later. My favorite was finding a code sample for a specific microcontroller as a video.

Rather than including the source code as a download or some separate snippet on the webpage, the asshole points the camera at the screen and scrolls down expecting you to read it while he talks about it.

I don’t mind retyping code from print. Used to do it all the time during my Atari days. But trying to decipher a : from a ; on a blurry screen is enough to make anyone shove the “programmer’s” head through the monitor.

I often compare IoT mania to masturbation, that is if God didn’t want us to masturbate he would’ve given us shorter hands.

Couldn’t this be done with a current transformer? Pass the mains line through a split ferrite and some windings, measure the voltage across the burden resistor.

Using a standard inductor placed close to the mains wires doesn’t have as consistent of a magnetic coupling and leaves a lot to chance.

Isn’t that how those off the shelf AC meters work? There’s an inductor in the clamp?

The cheap clamp current meters are exactly that, a current transformer that can open up. I bought a Chinese one for 6€ and can confirm it works fine to get some basic values of higher AC currents (at least a couple of 100W, the lowest setting is 20A).

The better ones use a hall sensor built into the iron core as a source of negative feedback driving a second coil, that compensates the field generated by the conductor you’re measuring. The feedback mechanism with the compensation coil ensures the total flux in the transformer core is 0, you then just measure the voltage over the compensation coil. This has the benefit of not relying on induction so you can use it to measure DC as well.

I am no expert by any means but I believe the method you mention would be great if you want to know how much current is flowing as opposed to whether it is on or not. An appropriate transformer is hard to source for the price of a scrapped coil, a cap and a resistor. Kind of like those current sensing pens vs a current measuring clamp, you don’t care how much current is passing you just care that some reasonable amount is passing.

In fact… he *is* already using current transformer… just kinda cheap/DIY one :)

I assume the idea is to avoid having to cut the cable to the desk lamp. And you couldn’t just clamp one on the cable, because the field of the live wire is cancelled by the field from the neutral wire. You’d have to separate the two wires, and only measure one of them. The little pick up coil in the demo works, because it’s so small and asymmetric.

I think having to wire up an instrumentation amplifier (and presumably configure and test it) is quite a lot more complicated than just soldering 3 passive components together. That’s the less simple bit you left out.

Also just to join in, links to videos are absolutely terrible and I almost never follow them. If it’s something I’d like to learn from or copy, a few actual words is worth a thousand Youtube videos. Could HAD possibly implement a policy whereby video-only links are, at least, less preferred than proper text ones? If something’s really desperately important and there’s only a video, maybe there’s cause for an exception, but videos teach almost nothing anyway. Youtube ones in particular.

Pretty much pointless to have the instrumentation amp when

– you are grounding one of its inputs and the fact that the input is not differential

– the difference amp doesn’t have equal resistors for the two inputs. One has a 3M feedback resistor on the side that has grounded input. If that’s not the case, *change* the schematic!

So basically the “differential” inputs can’t quite subtract off the common mode noise.

The 20mV could easily be the DC offset of the *crap* LM324 amplified a few times.

Would have been as easy to have an inverting amplifier, AC coupled to get rid of DC offset, then rectified and use part of the opamp as a comparator. Not like you can’t set 20mV as a threshold.

Wouldn’t it be easier to just go to the desk lamp’s web page and check the status ?

This is not meant to be critical of Shane’s approach. Any solution that works, works.

If the objective is to just measure whether the mains voltage is present on the line (current flowing or not), capacitive coupling might offer a simpler solution. Do a quick image search for “AC coupled audio input circuit” for an illustration of what I’m about to describe.

Take a simple length of wire (say 4-6″), and tape it alongside the hot wire. Connect your small length of wire to a diode, then to your ADC/comparator (high impedance input!) and a medium/high-value resistance to ground. Adjust the length of the wire or your resistor value to adjust the voltage you will get when voltage is present. From my experience, you should get an output in the volts range, rather than the millivolts you’re currently trying to sense with inductive coupling.

On the other hand, if you need to detect current flow, you’ll need the inductive setup that you have. You can probably eliminate the need for an instrumentation amp if you used a ferrite ring and made a little transformer out of the hot line and your sense wires.

this is how I’ve done it (this detects current) http://openenergymonitor.org/emon/buildingblocks/ct-sensors-interface

and using this current transformer: http://uk.farnell.com/murata/56050c/transformer-10a-1-50-current/dp/2291968?Ntt=2291968 which costs £1.10.

@kendall14> “An appropriate transformer is hard to source”

Only perhaps if you want a core that opens, otherwise any transformer core will do. I did a Current Transformer monitor for a fridge using a common as dirt 2155 tranny with a one turn loop of wire threaded through the vacant “window space” in the core as the primary, and this was for a current *measurement*, not just off/on. Any tranny should do as long as the current through the single turn doesn’t saturate the core. The output voltage-per-amp is determined by the (essential) “burden” resistor on the secondary. This does however mean breaking into the measured circuit; I boxed mine up with a mains lead and socket so it could be used to monitor any appliance.
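The relationship between the burden resistor and the output can be sketched for an ideal current transformer as follows. The turns count, burden value, and function name are hypothetical examples, and a real transformer will deviate from this because of core losses and saturation.

```python
# Ideal current-transformer output across a burden resistor.
# ASSUMPTIONS: lossless core, no saturation; all values are examples.

def ct_output_voltage(i_primary, n_secondary, r_burden, n_primary=1):
    """Voltage across the burden resistor for a given primary current."""
    i_secondary = i_primary * n_primary / n_secondary  # current scales by turns ratio
    return i_secondary * r_burden                      # Ohm's law on the burden


# Example: one turn through the core, 50-turn secondary, 10 ohm burden.
print(ct_output_voltage(i_primary=10.0, n_secondary=50, r_burden=10.0))
```

Doubling the burden resistance doubles the volts-per-amp figure, at the cost of pushing the core closer to saturation at high currents.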

@tekkieneet – I also question the use of an instrumentation amp when one input is grounded. The object of an instrumentation amp is to obtain high input resistance on the inverting input but there doesn’t seem to be any need of that here, a single op-amp should suffice.

*Sucking in the negativity*

I liked the video, learned something from it and saw someone having a solution for a problem he had. And then there were the comments. Criticism doesn’t have to be delivered that angrily and snobbily. He never said “it’s the best, cheapest, smartest Idea ever”. It’s another way to do something and it may get someone else inspired to do something similar or very different, this is by all means a hack for me and deserves to be on here. Share your shit and stop the hating… and do it for science, bitches!

Yeah great going with the criticism.. meh.

Regardless, the other way I’ve read to do this is by sawing toroid ferrite rings in half, and wrapping a bunch of small gauge enamelled wire on that. And I guess some op-amp or comparator trickery from there.

In-line there are some other options, e.g. the neon bulb/LDR approach. But who wants to hook their weekend experiments up to mains?

You could do this much easier with an arduino.

Great project Shane… thanks for sharing. I have already ordered the components I don’t already have to try this out for hobby use. Actually, I have been looking for a solution like this for quite some time & wanted to avoid current clamps, power meters and hot connections. As I am only interested in detecting AC current on/off status & not amps or live AC, your non-contact approach may be a runner.

Having visited your website as well, I spent some time searching for similar circuits but couldn’t find any – so if you have any other references let me know.

Keep up the good work! I for one really appreciate your contribution….Thanks.