The late 1990s saw the widespread introduction of solid-state storage based around NAND Flash. Ranging from memory cards for portable devices to storage for desktops and laptops, this data storage future was prophesied to rid us of the shackles of the magnetic storage that had held us down until then. As solid-state drives (SSDs) took off in the consumer market, there were those who confidently predicted that before long everyone would be using SSDs and that hard-disk drives (HDDs) would be relegated to the dustbin of history, as the price per gigabyte and general performance of SSDs would simply be too competitive.

Fast-forward a number of years, and we are now in a timeline where people are modifying SSDs to have less storage space, just so that their performance and lifespan are less terrible. The reason for this is that NAND Flash has by now hit a number of limits that prevent it from scaling further density-wise, mostly in terms of its feature size. Workarounds include stacking more layers on top of each other (3D NAND) and increasing the number of voltage levels – and thus bits – within an individual cell. Although this has boosted storage capacity, the transition from single-level cell (SLC) to multi-level cell (MLC) and today’s TLC and QLC NAND Flash has come at a severe cost, mostly in the form of limited write cycles and much reduced transfer speeds.

So how did we get here, and is there life beyond QLC NAND Flash?

Floating Gates

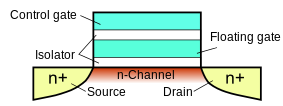

At the core of NAND Flash lies the concept of floating gates, as first pioneered in the 1960s with the floating-gate MOSFET (FGMOS). As an FGMOS allows for the retention of a charge in the floating gate, it enabled the development of non-volatile semiconductor storage technologies like EPROM, EEPROM and flash memory. With EPROM, each cell consists of a single FET with a floating gate and a control gate. By inducing hot carrier injection (HCI) with a programming voltage on the control gate, electrons are injected into the floating gate, which raises the FET’s threshold voltage so that it no longer switches on at the normal read voltage. This allows the state of the transistor to be read out and interpreted as the stored bit value.

Naturally, only being able to program an EPROM once before having to erase it by exposing the entire die to UV radiation (which induces ionization within the silicon oxide and discharges the floating gate) is a bit of a bother, even if it allowed the chip to be rewritten thousands of times. To make them rewritable in-circuit, EEPROMs extend the basic FET-only structure of the EPROM with two additional transistors. Originally EEPROMs used the same HCI principle for erasing a cell, but later they would switch to using Fowler-Nordheim tunneling (FNT, the wave-mechanical form of field electron emission) for both erasing and writing a cell, which removes the damaging impact of hot carrier degradation (HCD). Both HCD and FNT are major sources of the physical damage that ultimately makes a cell ‘leaky’ and renders it useless.

Combined with charge trap flash (CTF), which replaces the original polycrystalline silicon floating gate with a more durable and capable silicon nitride material, modern EEPROMs can support around a million write/erase cycles before they wear out.

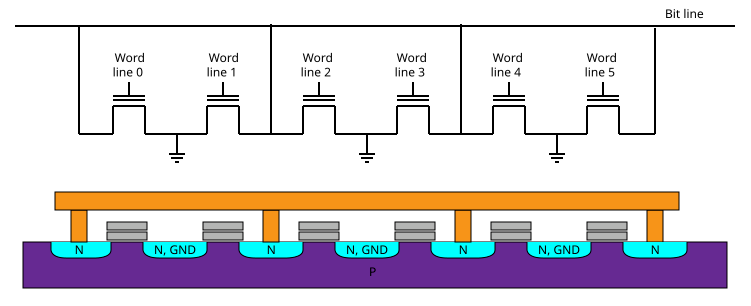

Flash memory is a further evolution of the EEPROM, the main distinctions being a focus on speed and high storage density, as well as the use of HCI for writes in NOR Flash due to the speed benefits this provides. The difference between NOR and NAND Flash comes from the way in which the cells are connected, with NOR Flash being named for the way it resembles a NOR gate in its behavior:

To write a NOR Flash cell (set it to logical ‘0’), an elevated voltage is applied to the control gate, inducing HCI. To erase a cell (reset to logical ‘1’), a large voltage of opposite polarity is applied to the control gate and the source terminal, which draws electrons out of the floating gate due to FNT.

Reading a cell is then performed by pulling the target word line high. Since all of the storage FETs are connected to both ground and the bit line, an erased cell (one without charge on its floating gate) will conduct and pull the bit line low, which is read as a logical ‘1’, while a programmed cell stays off and leaves the bit line high, which is read as a logical ‘0’. NOR Flash is set up to allow for bit-wise erasing and writing, although modern NOR Flash is moving to a model in which erasing is done in blocks, much like with NAND Flash:

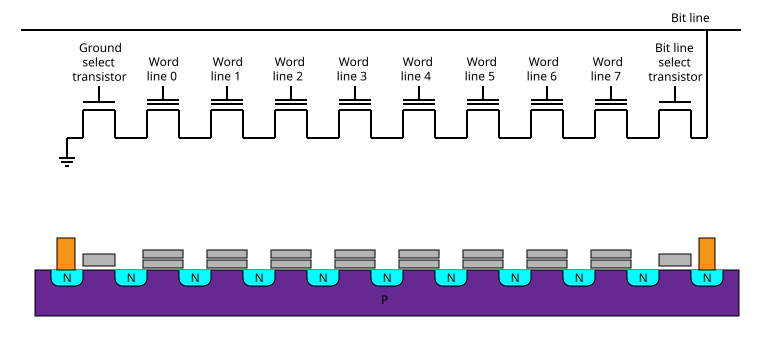

The reason for NAND Flash’s name is readily apparent from the way its cells are connected, with a number of cells wired in series (a string) between the bit line and ground. NAND Flash uses FNT for both writing and erasing cells. Due to this layout, it always has to be written (set to ‘0’) and read in pages (the cells sharing a word line across a set of strings), while erasing is performed at the block level (a collection of pages).
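To make the page/block asymmetry concrete, here is a toy Python model of a single NAND block. The geometry (4 pages of 8 bytes) and the `NandBlock` class are hypothetical illustrations, not any real controller interface; real pages are several kilobytes and blocks hold hundreds of pages. It assumes the convention described above: programming can only clear bits to ‘0’, and only a block-wide erase sets them back to ‘1’.

```python
ERASED = 0xFF  # an erased NAND cell reads as all 1s


class NandBlock:
    """Toy model of one NAND block (hypothetical geometry, not a real device)."""

    def __init__(self, pages: int = 4, page_size: int = 8) -> None:
        self.page_size = page_size
        self.pages = [bytearray([ERASED] * page_size) for _ in range(pages)]
        self.erase_count = 0  # P/E cycles consumed by this block

    def program_page(self, index: int, data: bytes) -> None:
        """Write a whole page. Programming can only move bits from 1 to 0."""
        if len(data) != self.page_size:
            raise ValueError("NAND is programmed one full page at a time")
        for old, new in zip(self.pages[index], data):
            if new & ~old & 0xFF:  # a bit that would have to go from 0 back to 1
                raise ValueError("cannot set a bit back to 1 without erasing the block")
        self.pages[index] = bytearray(data)

    def read_page(self, index: int) -> bytes:
        return bytes(self.pages[index])

    def erase(self) -> None:
        """Reset every page in the block to all 1s, costing one P/E cycle."""
        for page in self.pages:
            page[:] = bytes([ERASED] * self.page_size)
        self.erase_count += 1


if __name__ == "__main__":
    blk = NandBlock()
    blk.program_page(0, bytes([0x0F] * 8))
    try:
        blk.program_page(0, bytes([0xF0] * 8))  # an in-place update needs 0 -> 1
    except ValueError as err:
        print("rejected:", err)
    blk.erase()  # the only way back: erase the whole block, then reprogram
    blk.program_page(0, bytes([0xF0] * 8))
    print(blk.read_page(0).hex(), "erases:", blk.erase_count)
```

Real controllers go a step further and avoid rewriting a page in place at all: updated data is written to a fresh page elsewhere, and whole blocks are erased later, which is where the P/E cycles are spent.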

Unlike with NOR Flash and (E)EPROM, reading out a value is significantly more complicated than toggling a control gate and checking the level of the bit line. Instead, a read voltage is applied to the control gate of the target cell, while a much higher (>6V) voltage is put on the control gates of the other cells in the string, which turns them on no matter what they store. Depending on the charge inside the target cell’s floating gate, the bit line voltage will reach a certain level, which can then be interpreted as a certain bit value. This is also how NAND Flash can store multiple bits per cell, by relying on precise measurements of the charge level of the floating gate.
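The multi-level read can be sketched as a series of comparisons: each comparison stands in for one sensing step with the target word line at a particular reference voltage, and n bits per cell need 2^n - 1 such references. The 0 to 4 V window, the evenly spaced references and the Gray-code bit mapping below are simplifying assumptions for illustration only; real devices use vendor-specific read levels that are adjusted for wear and temperature.

```python
WINDOW_V = (0.0, 4.0)  # hypothetical threshold-voltage window of a cell


def reference_levels(bits_per_cell: int) -> list[float]:
    """Return the 2**n - 1 read thresholds that split the window into 2**n states."""
    v_min, v_max = WINDOW_V
    states = 2 ** bits_per_cell
    step = (v_max - v_min) / states
    return [v_min + step * i for i in range(1, states)]


def gray(n: int) -> int:
    """Binary-reflected Gray code, so neighbouring states differ in one bit."""
    return n ^ (n >> 1)


def read_cell(v_threshold: float, bits_per_cell: int) -> int:
    """Count how many reference levels the cell's threshold exceeds, then map to bits."""
    state = sum(v_threshold > ref for ref in reference_levels(bits_per_cell))
    return gray(state)


if __name__ == "__main__":
    for bits, name in [(1, "SLC"), (2, "MLC"), (3, "TLC"), (4, "QLC")]:
        states = 2 ** bits
        spacing = (WINDOW_V[1] - WINDOW_V[0]) / states
        print(f"{name}: {states} states, {states - 1} read levels, "
              f"{spacing:.2f} V per state")
```

In this model the voltage spacing per state halves with every extra bit (2.00 V for SLC down to 0.25 V for QLC), so the same amount of leaked charge is far more likely to push a QLC cell into a neighbouring state.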

All of this means that while NOR Flash supports random (byte-level) access and erase and thus eXecute in Place (XiP, allows for running applications directly off ROM), NAND Flash is much faster with (block-wise) writing and erasing, which together with the higher densities possible has led to NAND Flash becoming the favorite for desktop and mobile data storage applications.

Scaling Pains

With the demand for ever more bytes per square millimeter of Flash storage always present, manufacturers have done their utmost to shrink down the transistors and other structures that make up a NAND Flash die. This has led to issues such as reduced data retention due to electron leakage and increased wear due to thinner structures. The quick-and-easy way to bump up total storage size by storing more bits per cell has not only exacerbated these issues, but also introduced significant complexity.

The increased wear can be easily observed when looking at the endurance rating (program/erase (P/E) cycles per block) for NAND Flash, with SLC NAND Flash rated for up to 100,000 P/E cycles, MLC below 10,000, TLC around a thousand and QLC dropping down to hundreds of P/E cycles. Meanwhile the smaller feature sizes have made NAND Flash more susceptible to electron leakage through electron mobility, for example at high ambient temperatures. Data retention also decreases with wear, making data loss increasingly likely with high-density, multiple-bits-per-cell NAND Flash.

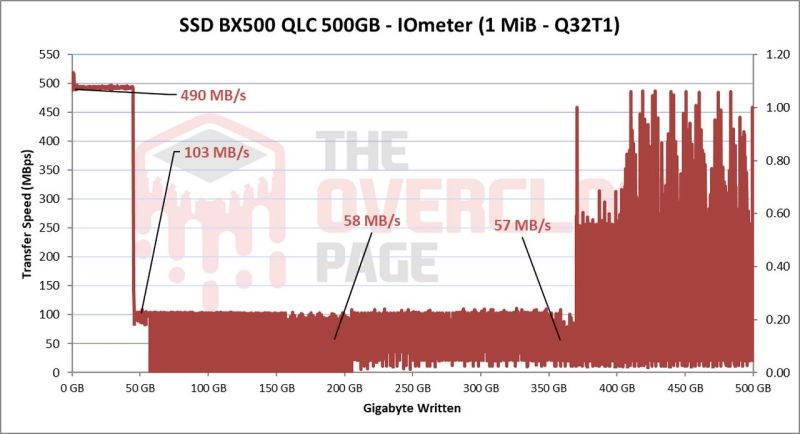

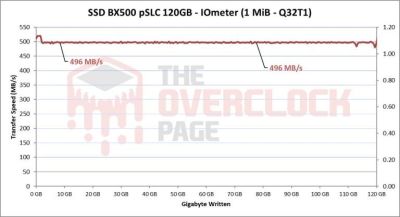

Because of the complexity of QLC NAND Flash with four bits (and thus 16 voltage levels) per cell, the write and read speeds have plummeted compared to TLC and especially SLC. This is why QLC (and TLC) SSDs use a pseudo-SLC (pSLC) cache, which allocates part of the SSD’s Flash to be only used with the much faster SLC access pattern. In the earlier referenced tutorial by Gabriel Ferraz this is painfully illustrated by writing beyond the size of the pSLC cache of the target SSD (a Crucial BX500):

Although the writes to the target SSD initially run at nearly 500 MB/s, the moment the ~45 GB pSLC cache fills up, the write speed drops to that of the underlying Micron 3D QLC NAND, which is around 50 MB/s. Effectively, QLC NAND Flash is no faster than a mechanical HDD, and has worse data retention and endurance characteristics. Clearly this is the point where the prophesied solid-state storage future comes crumbling down, as even relatively cheap NAND Flash still hasn’t caught up with the price/performance of HDDs.
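A simplified model makes this cliff easy to quantify. It plugs in the figures quoted above (a ~45 GB cache, ~500 MB/s into the cache, ~50 MB/s once it is full) and assumes a fixed-size cache that starts empty and is not flushed to QLC during the write. Real drives size the pSLC cache dynamically and fold data into QLC in the background, so treat this as a sketch. Sizes use decimal units (1 GB = 1000 MB).

```python
def write_time_s(total_gb: float, cache_gb: float = 45.0,
                 cache_mb_s: float = 500.0, native_mb_s: float = 50.0) -> float:
    """Seconds to write total_gb, assuming a fixed-size pSLC cache that starts empty."""
    cached_gb = min(total_gb, cache_gb)          # absorbed at pSLC speed
    overflow_gb = max(total_gb - cache_gb, 0.0)  # lands directly in QLC
    return cached_gb * 1000 / cache_mb_s + overflow_gb * 1000 / native_mb_s


if __name__ == "__main__":
    for size_gb in (10, 45, 100, 200):
        t = write_time_s(size_gb)
        print(f"{size_gb:>4} GB: {t:7.0f} s, average {size_gb * 1000 / t:5.0f} MB/s")
```

Under these assumptions a 100 GB transfer takes 1,190 seconds, averaging about 84 MB/s, even though the first 45 GB went in at full speed.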

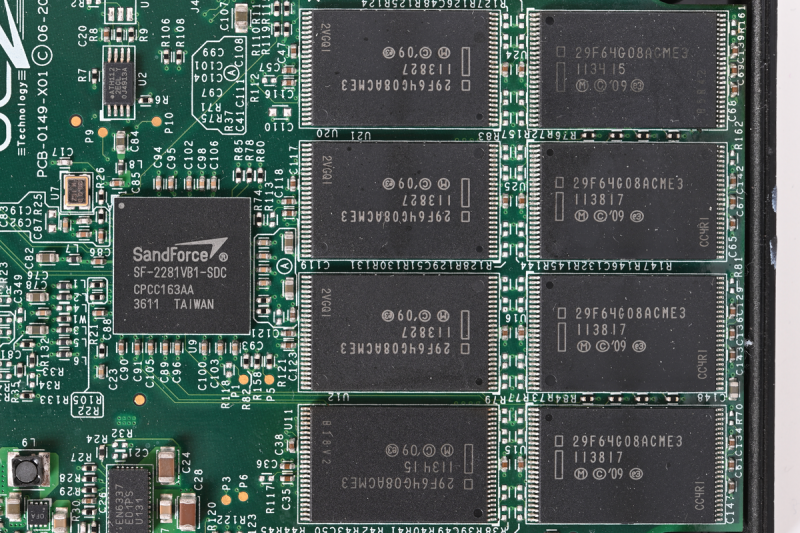

The modification performed by Gabriel Ferraz on the BX500 SSD involves reprogramming its Silicon Motion SM2259XT2 NAND Flash controller using the MPTools software, which is not provided to consumers but has been leaked onto the internet. While not as simple as toggling on a ‘use whole SSD as pSLC’ option, this is ultimately what it comes down to after flashing modified firmware to the drive.

With the BX500 SSD now running in pSLC mode, its storage capacity is knocked down from 500 GB to 120 GB, but the P/E rating goes up from a rated 900 cycles in QLC mode to 60,000 cycles in pSLC mode, a roughly 66-fold increase. The write performance is a sustained 496 MB/s with none of the spikes seen in QLC mode, leading to about double the score in the PCMark 10 Full System Drive test.
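As a back-of-the-envelope check on what the modification buys, the total bytes written (TBW) a drive can absorb can be approximated as capacity times rated P/E cycles. This sketch uses the article’s figures and ignores write amplification, wear-leveling overhead and overprovisioning, so it yields idealised upper bounds rather than a vendor TBW rating.

```python
def tbw_tb(capacity_gb: float, pe_cycles: int) -> float:
    """Idealised total bytes written, in decimal TB: every cell worn out exactly once."""
    return capacity_gb * pe_cycles / 1000


qlc_tb = tbw_tb(500, 900)       # stock QLC mode
pslc_tb = tbw_tb(120, 60_000)   # modified pSLC mode

print(f"QLC:  {qlc_tb:,.0f} TB")   # 450 TB
print(f"pSLC: {pslc_tb:,.0f} TB")  # 7,200 TB
print(f"P/E cycles: {60_000 / 900:.1f}x, TBW: {pslc_tb / qlc_tb:.1f}x")  # 66.7x, 16.0x
print(f"One bit per cell instead of four: {500 / 4:.0f} GB")  # 125 GB
```

Because the capacity shrinks as well, total endurance in this idealised model grows about 16-fold rather than the ~66-fold of the per-cell P/E rating. Storing one bit per cell instead of four also gives 125 GB, in the same ballpark as the 120 GB the modified drive reports.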

With all of this in mind, it’s not easy to see a path forward for NAND Flash which will not make these existing issues even worse. Perhaps Intel and Micron will come out of left field before long with a new take on the 3D XPoint phase-change memory, or perhaps we’ll just keep muddling on for the foreseeable future with ever worse SSDs and seemingly immortal HDDs.

Clearly one should never believe prophets, especially not those for shiny futuristic technologies.

Featured image: “OCZ Agility 3 PCB” by [Ordercrazy]

But the growth in HDD capacity is also slowing down. For consumer-grade CMR disks at around 200 bucks, it was 1TB in 2010, 4TB in 2015 and 8TB in 2018, and from 2021 to now it has only reached 16TB.

That’s because HDDs have hit many of the physical limits. There were “easy” gains to be had by pushing track separation, etc., but those are done now.

I’m amazed at the new >20TB drives and how they achieve their density. It’s really pushing the physics of how data is stored.

Shingled recording (SMR) is something people don’t like, probably because it seems a bit iffy in terms of long-term reliability.

So basically HDDs have the same issue as SSDs did when they reached QLC: the technology might be pushed too far, embracing too many concessions.

Perhaps they will be forced to go for those holographic storage cubes that have been around for decades but were never commercialised, and jump to a storage system with 100-fold the capacity, or was it 1000-fold?

I suppose the issue there is that they fear they’d only sell one per person… ever (unless there is a fire/war/earthquake/etc.). But in reality people will find ways to fill it up; they’ll come up with a 32K (per eye) VR 3D uncompressed video standard or something. Or maybe a holographic video system that needs storage equal to 8K cubed, and no amount of storage will ever be enough.

24TB is readily available for around $500.

20TB is down to around $200, so…

I really respect how early SSD solutions performed compared to modern ones. An early Intel drive might only have, say, 180GB of storage space, but with astronomical TBW figures and much less compromised performance.

You can get somewhat reliable SSDs from Swissbit. They sell pSLC as well as true SLC drives. Not exactly cheap though.

I can also recommend Cactus Technologies. https://www.cactus-tech.com/

We used to get a lot of PC Card format memory from them for weird embedded computers.

They make SLC and pSLC products, but they have the same cost issues as Swissbit.

Buying NAND to only use 1/4 of it makes the economics kinda poor.

It’s not using a quarter of it, it’s using all of it but just at a quarter of the storage density.

The ability to ‘select’ whether you use pSLC or QLC should be a consumer option (and a marketing ploy): high endurance with low capacity, or high capacity with low endurance. You, the customer, can decide!

It’s entirely different management firmware, often a different architecture as well (in terms of caching and supply hold-up), and in many cases requires a different verification/calibration process. Not really something you can swap between easily without putting quite a lot of design effort into it.

It’s obviously no fun to pay more, but for embedded systems there’s a reasonably compelling case to be made for drive-level redundancy. If I’m running a server or something it’s probably not worth the trouble: support for 2x M.2 drives for boot, plus whatever drive redundancy arrangement you want across the data drives, is ubiquitous, and commodity drives are cheaper than specialty ones.

If you’ve got power, space, or interface constraints, though, you may not have the luxury of just slapping another drive in there; and getting your redundancy within the single drive (while not perfect: it won’t save you from a controller failure or something getting unseated mechanically) looks better than being at the mercy of a QLC chip giving up and dying on you.

They ARE very robust. And they thoroughly test, age and then calibrate every single storage device that leaves their production line.

Yes, not cheap. Pay them a visit and you will understand why.

It’s not obvious to me that density is a big problem for NAND flash. I can currently put 8TB on a PCIe NVMe carrier and it’s not obvious that we have a short-term need for more than that. Those drives are still considered huge and difficult to get. They’re also hardly filling up all the space with silicon; both speed and capacity worries could be addressed by just stacking more ICs on a module, or by making motherboards with more M.2 slots. My current laptop came with two M.2 slots; who really needs 16TB of storage on their laptop?

People have always said that over the years: “Who really needs more than ____?” Things keep getting bigger and bigger; some games are into the hundreds of GB. For a single game.

A baseline Windows 11 install is 20-27 GB alone. Linux used to fit in less than 4GB with room to spare… now most distros need more (with a WM and DE).

Just my opinion, but software optimization in the consumer software market is long gone. Instead unoptimized software (in terms of both storage and performance) is the norm.

“What are you going to do with all that space, Ed?”

“Better leave a trail of breadcrumbs or you will get lost!”

Comments when I bought a Micropolis 1GB in ’94

In the late 80’s, I knew someone who had a state-of-the-art 286 machine with a 300MB drive. He was using DOS, so there was a size limit per partition. He had a lot of drive letters, and maintained a table of contents on paper to keep track of which programs were where.

I remember three of us and our MD looking at a full height, 20MB Rodime drive and wondering why any PC user would need that much DOS file storage.

Hey, I still have one of those! :D still works too

The Ami Pro WYSIWYG word processor was extremely full-featured, with almost everything anyone uses that’s currently in MS Word.

The Ami Pro install size is ten times larger than an EMPTY .docx file.

What the heck are you doing with Word??

An empty .docx file for me is 13,118 bytes. Ami Pro’s install size was like 15-20 MB. Even just the installer download is 8 MB.

docx files are just zip files renamed: even uncompressed, they take up only 100K with most of that being the style definitions. And yes, 100 kB might seem a bit much for an empty file, but it’s all documented XML: that’s why it’s compressed in the first place.

Linux used to fit on a single HD diskette (1.44MB).

MenuetOS still does.

Focusing on the “pure computer-side” section isn’t the right place to be thinking, though – the size of the OS is pretty much driven by the size of typical drives. There’s no size pressure to keep installs small.

There are places where the increase in storage capacity is fundamental: video and photo sizes scale with the resolution (well, faster than), so the higher resolution the photos/videos get, the bigger the space requirements become.

Heck, several of the scientific experiments I’m on are fundamentally limited by storage space – at some point you have to say “okay I just can’t physically cram more data storage here” and that’s it. There’s only so many petabytes you can fit within a fixed volume and power envelope.

But there’s an interesting point here: those things have different usage patterns! They’re closer to tape than they are to random-access drives: you’d be mostly fine with program/erase cycles in the single digits, especially if you could avoid read-disturb issues (and sometimes that’s not even important!).

Read-disturb is the real issue with multi-level flash: in a lot of cases you’d be fine with effectively write-once storage. But read-disturb means that it’s not write-once, read-many, which is the real problem.

You’ve missed the point, though. Yes, at some point in the future we will be up against the density limit. But my point was not that no-one needs bigger M.2 modules but that M.2 modules can easily be made bigger (and faster) without needing higher-density flash.

The problem isn’t that they’re unoptimized. They optimize for things like the fewest number of versions to distribute (like games installing voiceovers for every supported language worldwide) and ease of development (like web devs cobbling together huge JavaScript libraries). In the specific case of operating systems, there are huge piles of abstraction and virtualization to, e.g., keep vendors’ shitty drivers from crashing systems, because that’s easier than forcing the people at Realtek to do their goddamn jobs.

In other words, they’re actively optimizing against size, not just leaving things huge through indifference. In some ways that’s worse. But it’s more importantly a very different problem.

I agree. In my old laptop that we take along on trips, I have a 2TB SSD for the OS drive and a 1TB SSD for the data drive… so I’m swimming in disk space. The reason it seems backwards is that when SSDs were ‘cheap’, I bought a 2TB SSD to replace the 128G that was in it from the factory. Spread those writes around :)

Also I think SSDs are ‘reliable’ enough (in most cases) to outlast the system they are running on. I’ve yet to experience an SSD ‘failure’. Knock on wood. That said, I do keep good and many backups — just in case.

It’s not quite that simple for multi-level cell stuff: it doesn’t really matter how much “available space” there is, it’s really the total bandwidth into/out of the flash that matters, because read-disturb errors are going to cause P/E cycles no matter what.

I’ve used SSDs since the beginning and burnt through many of them on simple, somewhat obvious tasks like syncing ~10GB maildir folders. Those disks failed one after another; the size didn’t matter, the technology didn’t matter. It was simply the software doing millions of dumb “random accesses” to check a file, change some metadata to mark it as syncing and then revert it to its previous status. In the end, the software assumed that ABA writes were free and didn’t cost a cycle (they wouldn’t if everything fit completely in the RAM cache). So it matters: you never know what the software is doing. Also, a failing SSD isn’t like a failing HDD. On a failing HDD, you can usually continue reading the safe sectors and recover what hasn’t been damaged. On an SSD, once the spare area reserved for bad blocks is used up, the drive becomes unreadable. And, up until recently, drives never told you how much of that spare area was used, so you can’t really anticipate it: you’ll have to back up the drive more often, which increases the cost and also the number of cycles used on the backup drive…

The issue with MLC/TLC/QLC is that reading a bit can shift a cell’s voltage level enough to alter one of the other bits stored there, so even reading can cause P/E cycles; but this also means that once a disk fails, the software on the SSD doesn’t have an easy way to read it out, because it needs a place to “cache” the other bits stored there while it restores it.

So you don’t even need to alter any metadata: constantly reading is bad, too. Technically even SLC flash has read disturb, but its tolerance is orders of magnitude higher than that of MLC/TLC/QLC.

A lot of the “quality” issues with flash come from the management software. “Higher quality” flash often times just means the software understands the wear patterns better.

Many applications these days are dozens or hundreds of megabytes, but there is no reason why they couldn’t be 1 megabyte.

People forget how much 1 megabyte is. The entire Bible is only a few megabytes and can be compressed to around 1 megabyte. Imagine how much source code that is (and as compiled code, a megabyte holds even more).

And many applications just need the business logic as operating systems supply many necessary libraries for GUI and IO. But applications come with a lot of bloat.

Not just applications. Operating systems come with a lot of bloat. Often applications that cannot be uninstalled.

The solution is to write more elegant software. Hire better programmers. Software quality is a major issue. This is why software gets slower and bigger every year. It’s like companies stopped caring when they no longer had to fit things on a CD and download speeds improved enough for people to quickly download software.

Multimedia takes up a lot of space.

Wider adoption of JPEG XL would help a lot (it supports lossless, reversible recompression of JPEG files with about a 20% reduction in file size).

Movies take up a lot more space. But with the decline of optical media people want all of it on their drives. I would love optical media to stay.

I still remember my first hard disk on a PC-XT clone. 20 megabytes! Looking at the free space reported by CHKDSK… can it really have so many digits! All my software, tools and utilities took much less than half of the space.

Later, when the free space started to get low, I installed an RLL controller and got 30MB. As disks got cheaper, I added another 60MB disk, for a total of 90MB, and was able to install Slackware Linux alongside MS-DOS. Oh the happiness.

Actually, I have a similar XT still in use here.

Works like a charm.

I occasionally run GrafTrak II satellite tracker, Turbo Pascal 4, QB 4.5 and some chess programs (Psion Chess, Battle Chess).

The HDD is a 20 MB MFM/RLL type. It still works fine, except for the worn stepper motor, which needs some lubrication.

Considering that it was made in ~1985, it still does fine.

Maybe I’ll install a new stepper one day.

I also use DriveSpace and MS-DOS 6.22 because I have larger software installed.

PC-Tools 7, for example. And the aforementioned programming languages.

For file transfers I use a serial null-modem cable.

So I can easily transfer data between my modern PC and the XT.

A Gotek floppy emulator is also installed, as a secondary floppy drive.

I’m positively surprised how usable this dinosaur still is.

There’s DOS software available out there for about anything. 😃

How were you able to run Linux on an 8088? Linux required a 386 minimum, even in the beginning.

There was a little fast-forward in hardware. I replaced the 1987 XT with an AT clone in 1989, and later that with a 386. But I still had the original Seagate drives, until I got an IDE controller and a huge fast 105MB drive.

“The solution is to write more elegant software. Hire better programmers. Software quality is a major issue”: Got to remember that ‘time’ is money. So ‘good enough’ is the motto in most cases. Let the consumer find the edge-case bugs. That is, unless you are writing mission-critical software, where money is (relatively) no object when it comes to writing tight, efficient and correct code.

Yep, cheap Indian and Turkish programmers result in the bloatware we have now, but look at those quarterly earnings! Execs gotta have those numbers go up so they can buy yachts and private islands. (Facepalm)

Seriously? I’m pretty sure the west invented and perfected bloat and are still the top coders of bloat and crappy software.

Depends, I think. Writing bad code wastes the time of the poor fool who has to suffer from the original programmer’s laziness.

Just think of all the bad, undocumented code that’s been carried over through all the years.

The new programmer has to pay for the sins of the lazy predecessors.

He has to work through mountains of decade old code that was badly written and not documented.

I’d rather go by the motto “if you do something, do it right straightaway”.

Of course, there are temporary solutions that outlive their original lifetime.

But those are kind of being proven by time and can’t be that bad, actually.

They had been accidentally good, despite being rushed.

Multimedia takes up so much space that software size on disk is less relevant, except for the bandwidth to update it, but that can be fixed with deduplication.

80MB for an app isn’t that big of a deal; most people probably still use under 100GB or so for apps, while media can easily take many times that, even for those of us who don’t torrent or pr0n.

Yeah, mostly this. The main drivers of storage space are things that can’t easily be reduced in size. Code size just isn’t a driver. I mean, a lot of high-level games are less than 1% code: the vast majority’s the audio/video. Kind of surprising how large the audio can get, but if you think about it, the visuals are in some sense programmatic, whereas the audio’s usually recorded.

Yes, assets take up most of the space in games.

But there are new developments in terms of texture (de)compression supported by GPU.

You can procedurally generate landscapes and cities at runtime. You can procedurally generate textures using Perlin noise at runtime.

You can generate audio and textures using fractals at runtime.

The 64k demoscene shows what is possible.

With generative neural networks a lot is possible. A model can be used to generate content at runtime and the model can take a fraction of the space of the game.

But rclark is right in the sense that it costs time and money. And my point was that there is no hard limit anymore (CD-ROM/DVD size, download speeds, etc.).

But it can be done. And a MegaByte is still big today for many things (even short videos: codecs are getting better and better).

You can’t generate real-life audio at runtime. Not with the amount of variation that modern games have. Procedurally generated audio can be interesting, but it’s just one style. Someone broke down the disk usage for GTA IV a long while ago: it’s like 40% audio. There’s more audio data than actual graphics!

You’re also trading off power for storage space when doing fully procedural stuff: it takes cycles to actually generate data, which means higher power consumption. That’s just the way it works.

There’s just no reason to save storage space. We’re nowhere near the end of storage scaling, whereas we’re totally and completely at the end of chip scaling. Dealing with P/E cycle limitations is a solvable problem, even if it results in physical media being a thing again.

“A model can be used to generate content at runtime and the model can take a fraction of the space of the game.”

You and I have different experiences with generative models.

@Pat

“You can’t generate real-life audio at runtime.”

Of course you can. It doesn’t work well with all types of audio and it does have limitations, but it does work. Even recorded speech can be reproduced by a speech model that’s trained on a speaker. I’m not talking about text to speech but machine learning-based compression.

In San Andreas audio took up a lot of space, about half. But due to better compression and higher resolution textures that is no longer the case.

Developers can prioritize reducing the size of assets. With diminishing returns of course. But it is possible. It’s simply not prioritized.

“You’re also trading off power for storage space when doing fully procedural stuff: it takes cycles to actually generate data, which means higher power consumption. That’s just the way it works.”

That’s true. And memory usage and loading times. But since PCs nowadays have more RAM, faster SSDs, more CPU cores and more advanced GPUs, we do have more resources available to do more (de)compression of assets.

“You and I have different experiences with generative models.”

I’m not talking about generative models, but of model-based compression.

“I’m not talking about text to speech but machine learning-based compression.”

I’m sorry, you’re not going to get multiplicative factor levels of compression from machine learning stuff. Just not going to happen. You can’t magically make information appear from nothing: the entropy’s gotta come from somewhere. You could get percents, maybe, but not factors (as in, you could reduce sizes by like, 20-30% maybe, but not like, factors of 3 or 4).

And yes, I’ve looked into machine learning models for compression: one of the experiments I work on is fundamentally storage limited – if it were possible to double or triple effective data size, we would. It’s just not possible – the existing compression techniques are already close to entropy limits.

What you’re talking about is fundamentally lossy compression, and that’s a style choice. You have an actor come in and build a voice model and use it for synthesis. That’s a style choice. That’s not the same thing as using an actor to actually perform the lines. You might think it sounds good enough, but again, that’s a style choice.

“But since PC’s nowadays have more RAM, faster SSDs, more CPU cores”

And higher resolution displays and higher demands. Again: CPU performance scaling is finished, storage isn’t. Trading storage for CPU makes zero sense.
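The entropy argument in the reply above can be demonstrated with any general-purpose lossless compressor. In this sketch, Python’s standard `zlib` stands in for “existing compression techniques”, and both inputs are synthetic: seeded pseudo-random bytes (close to maximum entropy) and a highly repetitive string. It shows the principle only and says nothing about how any particular audio codec or learned model would perform.

```python
import random
import zlib

rng = random.Random(0)                      # seeded so the run is reproducible
noise = rng.randbytes(100_000)              # ~8 bits of entropy per byte
text = b"the quick brown fox jumps over the lazy dog " * 2_000  # very redundant

for name, data in (("noise", noise), ("text", text)):
    packed = zlib.compress(data, 9)
    print(f"{name}: {len(data):,} -> {len(packed):,} bytes "
          f"({len(packed) / len(data):.1%} of original)")
```

The random input comes out slightly larger than it went in, because there is no redundancy left to remove and the format adds its own overhead, while the repetitive text shrinks to a small fraction of its size.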

@Pat

“You could get percents, maybe, but not factors (as in, you could reduce sizes by like, 20-30% maybe, but not like, factors of 3 or 4).”

I agree. I was probably overstating the effects. I’ve seen some work on tuned/tailored compression for a specific voice, combining machine learning and traditional audio compression methods, that looks promising. But perhaps these early reports were too optimistic or the implementation has other downsides.

“CPU performance scaling is finished, storage isn’t. Trading storage for CPU makes zero sense.”

This is simply wrong. Single core performance is still increasing, though no longer exponentially. Performance per watt is also still increasing. The number of cores is also increasing and that is something you ignored. You could devote an entire core to compression or decompression of audio and not lose any frames in a game. There is enough RAM and SSD space to cache/temporarily store decompressed audio assets. Since you are only running one game at a time, that shouldn’t affect drive space.

“Single core performance is still increasing, though no longer exponentially.”

Sorry, when I said “scaling’s done” I figured “exponential scaling” was implied?

Storage capacity is still increasing exponentially: in fact, it’s been increasing so fast that the only reason it doesn’t necessarily seem exponential is because drives have sacrificed total storage for size/transfer/power reasons. Half the reason for this article is that the combination of “storage+bandwidth” (well, plus low latency, but not sure how to describe that) pressure from big data has been so strong that endurance has been progressively abandoned in SSDs.

(Plus also mobile storage pressure, where endurance doesn’t matter because everyone frighteningly throws away phones after a year or two).

If you’ve got one corner of performance space that’s scaling exponentially and another that’s clearly off that pace, functionally, the non-exponential scale might as well be static. Magnetic media capacity scaling is still crazy.

“There is enough RAM and SSD space to cache/temporary store decompressed audio assets.”

Okay, now I’m completely lost? You’re acknowledging that storage space is huge so you might as well just cache the uncompressed data. Which is what I’m saying!

The only difference seems to be that you’re suggesting you just discard that data each time you run, and that doesn’t make any sense. You might as well just give an option in the game to “clear cache” or “clear temporary data” for a user to gain space if they need it.

@pat

“The only difference seems to be that you’re suggesting you just discard that data each time you run, and that doesn’t make any sense.”

It makes perfect sense. If you have 10 games, each with 1GB of cached decompressed assets, that takes up 10GB on your drive, which is still a lot today. It would only take a few seconds to decompress the assets when booting the game and you can do this in parallel, so boot time isn’t increased.

“You might as well just give an option in the game to “clear cache” or “clear temporary data” for a user to gain space if they need it.”

I suggest the exact opposite. Automatically clear the cache after closing the program by default, and give the user the ability to not clear it automatically to slightly reduce loading times or save a tiny bit of energy at the cost of a few GB.

“It would only take a few seconds to decompress the assets when booting the game and you can do this in parallel”

If you uncompress 1 GB to 10 GB (good luck with that, by the way) it’s going to take several seconds no matter what. You don’t have 10 GB/s throughput, and your storage throughput doesn’t increase with the number of CPUs you throw at it.
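The point about CPUs can be made concrete with a back-of-the-envelope model: decompression work can be split across cores, but every decompressed byte still has to be written to storage, so the write path sets a floor that extra cores can't lower. A minimal Python sketch, assuming a hypothetical per-core decompression rate and sustained write speed (neither figure comes from the thread):

```python
def decompress_time_lower_bound(output_bytes: float, write_bytes_per_s: float,
                                cores: int, per_core_bytes_per_s: float) -> float:
    """Rough lower bound (seconds) for decompressing assets to disk.

    Assumes decompression parallelises perfectly across cores and that the
    decompressed output must be written at a fixed sustained rate. The slower
    of the two stages bounds the total time.
    """
    cpu_seconds = output_bytes / (cores * per_core_bytes_per_s)  # shrinks with more cores
    io_seconds = output_bytes / write_bytes_per_s                # unaffected by core count
    return max(cpu_seconds, io_seconds)


GB = 1e9
# Hypothetical figures for illustration only: 10 GB of decompressed output,
# ~3 GB/s sustained writes, ~1 GB/s of decompression output per core.
for cores in (1, 8, 64):
    t = decompress_time_lower_bound(10 * GB, 3 * GB, cores, 1 * GB)
    print(f"{cores:>2} cores: at least {t:.2f} s")
```

Past a handful of cores the bound stops improving, because the write term dominates; that is the commenter's point in numeric form.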

“I suggest the exact opposite. Automatically clear the cache after closing the program by default”

So you’re killing storage devices by default pointlessly without letting the user know? Huh?

The “right” solution is to have a system where generated data can be cleared on-demand as needed, but will be retained as long as needed. There’s not really a good setup for that at the moment at the OS level, but there probably should be.

This isn’t just a game issue either, mind you: in both large-scale software and hardware development (as in SoC, silicon or FPGA development), there are often huge amounts of generated data that it’d be absurd to clear every time, but which become absolutely gigantic if kept around – and here we’re talking more like tens of gigabytes, not single digits.

So yeah, like I said, it’d be awesome if OSes had a system more like mobile OSes do where they can inform an (inactive) application “hey you’re taking too much space, clear it if you can.” But we have what we have.

@Pat

“So you’re killing storage devices by default pointlessly without letting the user know? Huh?”

I suggest using RAM. But you can use disk too and let the OS delete the oldest cache. The best is, like you suggested, a user option.
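Letting the OS “delete the oldest cache” amounts to least-recently-used (LRU) eviction under a space budget. The thread notes there isn't a good OS-level mechanism for this today, so the following is only a minimal Python sketch of the policy itself; `CacheEntry` and `evict_oldest` are hypothetical names, and the 10 × 1 GB example mirrors the games scenario from earlier in the thread.

```python
from dataclasses import dataclass


@dataclass
class CacheEntry:
    name: str          # hypothetical identifier, e.g. one game's decompressed assets
    size_bytes: int
    last_used: float   # timestamp of last use (e.g. from time.time())


def evict_oldest(entries: list[CacheEntry],
                 budget_bytes: int) -> tuple[list[CacheEntry], list[CacheEntry]]:
    """Drop least recently used entries until the total fits in budget_bytes.

    Returns (kept, evicted). Recently used caches survive; stale ones go first.
    """
    kept = sorted(entries, key=lambda e: e.last_used)  # oldest first
    total = sum(e.size_bytes for e in kept)
    evicted: list[CacheEntry] = []
    while kept and total > budget_bytes:
        victim = kept.pop(0)          # least recently used entry
        total -= victim.size_bytes
        evicted.append(victim)
    return kept, evicted


# Ten games with 1 GB of cached assets each, played in order game0..game9,
# squeezed into a hypothetical 4 GB cache budget.
GB = 10**9
caches = [CacheEntry(f"game{i}", GB, last_used=float(i)) for i in range(10)]
kept, evicted = evict_oldest(caches, budget_bytes=4 * GB)
print("evicted:", [e.name for e in evicted])
print("kept:   ", [e.name for e in kept])
```

The budget could be fixed by the user or derived from free disk space; either way, caches are only regenerated when they have actually been evicted, rather than on every launch.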

If you have 100 apps that are 80MB each, that’s 8GB. It adds up. I like to archive installers of specific versions of tools, but if you save installers too, they take up even more space. I easily have 100s of GB of apps (not counting games). Adoption of new video compression standards is slow. Many videos are still H264 while we now have H265 and H266. And many photos are still in the old JPEG format. I think it is doable to cut things in half or more, which would be relevant when you use an SSD that’s modified to be SLC.

Understandable. But back in the day, you didn’t have to have all software on your local hard drive.

Little-used software was stored on floppies or, rather, streamer tapes such as QIC.

The latter could hold 100s of MBs way back in the late 80s.

In a similar way, we can use large-capacity HDDs (USB HDD, eSATA HDD, FireWire HDD or NAS) these days.

Streamer tapes still exist, too, albeit rarely being used by private users anymore.

So it’s not as if development of high-capacity/low-quality NAND technology is an absolute requirement.

There are alternatives, still.

We could wait until NAND technology has matured enough.

I hope clean SLC technology will be on the rise again, one day.

It’s not “SLC vs MLC/TLC/QLC,” it’s just a generic NAND flash issue. P/E cycles for SLC drop into the thousands at high densities as well.

i couldn’t remember if /usr was on my ‘small’ SSD or my ‘big’ mirrored spinning rust. so i ran “df -m /usr/.” and it said “Available 291733”. i stared at that for a long time and couldn’t figure out if that was ‘small’ or ‘big’.

“The entire Bible is only a few MegaBytes and can be compressed to be around 1 MegaByte.”

The text version or the comic? 😂

ASCII text file, English, without commentary.

The version with commentaries is from Martin Luther, I presume :-D

That program size comes from the tradeoff of ease of development. Look at the bloat in a lot of apps and it’s from their underlying framework that wraps the core functionality: Electron, for example, adds ~80 MB. It’s used because they can repurpose the overabundance of JavaScript programmers from the webapp boom to also develop desktop/mobile apps. Most companies aren’t going to change that.

We’ve always known there was a long-term role for both magnetic and solid-state storage, even when there was more optimism about progress in SSDs.

Yet somehow “we” decided to summarily ditch spinning disks from consumer devices, even though it meant smaller and more expensive disks, and in many cases non-replaceable storage and dependence on cloud services. Only one of those things constitutes progress, and only if you’re Amazon or Google – as for users, it looks like we’ve been plain old hoodwinked.

It’s true there are performance gains from SSDs, but a hybrid arrangement can give you all those gains *and* abundant cheap local storage. Except that option was quickly discarded in favor of everyone renting hard disk space (in the cloud) instead of buying it.

I make monthly backups of all my SSD drives to spinning USB drives. So far, no problems with SSD (totalling 2.5 TB) but one 500 GB USB HD has failed. I trust that the probability of both the SSD and (otherwise unused) backup HD failing during same month is sufficiently low.

Of course I also follow the SSD error statistics with smartd and smartctl.

Keeping your data in multiple places is the key. I avoid cloud storage however.

How do you back up 2.5TB on a 500GB drive? Mechanical drives don’t like being moved around, so a removable mechanical USB drive is nonsense in terms of longevity. As free advice, you should consider buying a NAS and putting the HDDs in a static place. They’ll last more or less forever.

Mechanical drives don’t like being moved around while on. They’re fine if you handle them correctly. You can’t just throw a box of NAS drives and have a true backup strategy if you really care about your data.

Assuming it’s very important data, you would want to protect against everything from oopsies all the way to catastrophes (local natural disasters). So you’d have the first layer be a real-time backup like RAID or software RAID (like ZFS) w/ file history (to protect against oopsy edits), a delayed hot backup (always attached, packaged incrementals), a cold backup (not always attached), and an off-site rotation (either encrypted cloud or a backed-up drive rotated to another location or a safety deposit box). Not all backups should be online at the same time.

“and dependence on cloud services”: I never bought into the cloud-for-storage mantra, for several reasons of my own. That is why I also use cheap 4TB and 8TB external HDD backup drives for on-site and off-site backups. Speed isn’t a concern when backing up data… Works well, and my data stays relatively private and safe. FYI, 1TB and 2TB SSDs are not that pricey and do work well for local storage. I have five 2TB 2.5″ SATA SSDs and one HDD (for quick backups) in my local file server. And all desktops and laptops have at least one 2TB SSD in them. Works for me.

That works when you don’t have much data – but when you start going over 100TB, it becomes a huge pain in the butt.

My home server has 24x 8TB HDDs, and is currently at 112TB filled, backed up to DAS with the same storage, and to Backblaze.

Before I got the JBOD box, Backblaze saved me three times when one of my drives died – each time, they just sent the backup via UPS, I restored it to a spare, mailed it back, no cost (well, $200 deposit for the drive, but you get it back when they get it back). You really can’t beat that for what they charge, especially since you get one-year version history.

Am I happy that it’s no longer my only backup? Sure. But it definitely cost a pretty penny, and if my house gets hit by a stray meteor, Backblaze still has all my data.

That’s the whole reason I use 8TB drives – it’s the size of their restore drives.

8TB drives are also quite cheap if you buy retired units from reputable second-hand storage retailers that offer long warranties.

Heck, 14TB drives routinely pop up for ~$80 these days.

“when you start going over 100TB”

Tape backup?

I have been seeing the issues with SSD life degradation for the past few years, but personally I see all of my small devices as temporary…

I am moving ever closer to having no single copies of data, pushing toward smaller drives in my laptops and SD cards, and syncing all of the data up to my NAS, and once I figure out wireguard, to be replicated to offsite storage and also up to the cloud.

I have just seen too many memory cards fail, things get dropped or lost, etc., to have any trust in any single storage medium.

Personally, I really hope that we get significant investment in MRAM to overcome some of these endurance hurdles.

Everspin has been working on this tech for ages now and it seems quite capable and mature, and the low latency like SRAM and 3Dxpoint would be a good fit with the bus-connected CXL technologies we are starting to see.

yeah everything i actually care about is laughably small compared to the available storage, so i keep it in git and ‘git push’ onto my vps all the time. and then on top of that, spinning rust is so cheap i keep a backup in usually-detached usb external hdd in the basement. and active storage is mirrored disks in the headless basement pc. but my laptops and cellphones just store copies and working files.

it’s kind of surreal to know that what i think of as my post-modern information management strategy is laughably obsolete…i’m pretty sure almost every end-user just trusts stuff to various cloud providers and it’s ‘always there’ and they never think about it once

“i’m pretty sure almost every end-user just trusts stuff to various cloud providers and it’s ‘always there’ and they never think about it once”

This doesn’t work in all countries or cities, though.

If we have a 6 Mbps DSL internet connection and merely ~250 kbps upload speed, the whole cloud concept becomes pie in the sky.

That’s what often seems to be forgotten, I think.

There are situations in which a local backup on a physical medium simply is more practical and less stressful.

Let’s imagine how long it would take to restore a backup of a whole Windows installation of an ordinary PC.

Downloading 120GB or more from the cloud via a 6 Mbps connection takes a while, especially if the servers limit the transfer speed for whatever reason.
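For scale, a minimal Python sketch of the ideal transfer time, using the 120 GB, 6 Mbps and ~250 kbps figures from the comments above and assuming the full line rate is sustained with no protocol overhead or throttling (real transfers would be slower). `transfer_hours` is a hypothetical helper, not part of any tool.

```python
def transfer_hours(size_gb: float, link_mbps: float) -> float:
    """Hours to move size_gb gigabytes (10**9 bytes) over a link_mbps link.

    Assumes decimal units (1 Mbps = 10**6 bit/s) and that the full line rate
    is sustained for the whole transfer, with no overhead or throttling.
    """
    bits = size_gb * 1e9 * 8
    seconds = bits / (link_mbps * 1e6)
    return seconds / 3600


print(f"Restore 120 GB at 6 Mbps:   {transfer_hours(120, 6):.1f} h")            # 44.4 h
print(f"Upload 120 GB at 0.25 Mbps: {transfer_hours(120, 0.25) / 24:.1f} days")  # 44.4 days
```

Even in this best case, a full restore takes almost two days and the initial upload about six weeks, before any server-side limits are applied.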

Or worse, imagine we haven’t paid the monthly fee for the cloud service.

Access denied.

Or, what if the cloud service decides that our personal files violate their beliefs/conditions?

Considering how prudish some nations are, it might be possible that harmless family photos, such as a mom breastfeeding her baby, could be considered nudity or something.

Then, without warning, the user is either in deep trouble or simply denied access.

I know it seems far-fetched, but reality has often proved to be crazier than imagination. 🥲

“A beginner’s guide to programming an ESP32 microcontroller”

Flashing upload instructions to ESP-32?

“and seemingly immortal HDDs.”

Anyone who says this may own a lot of old and vintage HDDs but clearly hasn’t run them very much. They may LOOK immortal, but they are also slowly degrading from a variety of issues, including degradation of the surfaces and moving parts. Oh, it’s really great how many of my 25-year-old HDDs can still be used, but there are an increasing number that just don’t work. It’s almost certain that none of them have reached the TBW levels that SSDs can now get to, simply by nature of their massively slower speeds. We’re just so used to throwing bits around that no one thinks twice about moving 10s or 100s of GB at a time for a simple process.

More data efficiency and methods are probably one solution for the future in the constant oscillation between hardware and software solutions.

I just accessed a couple of old drives from the 90s a few years back, to take a look back at what I was doing as a kid. One 2GB, one 5GB, one 20GB – they survived the move to the US on a boat from Germany, half a dozen moves across the US, and four years sitting in a hot attic at my mom’s while I was in undergrad.

All ran perfectly, zero degradation.

No SSD would have its data intact after over a quarter century of being unpowered and treated like that.

Nothing is immortal, but HDDs are a lot less delicate than SSDs when it comes to pretty much everything but physical impact from dropping onto a hard surface, and the SSDs only survive because of their lower mass.

Having just done the same thing, trying to look at old drives from the 90s or early 00s, I had the opposite experience. The old drives were toast. They were stored in a dresser in a relatively humid environment and I think the moisture got to them. Or rough handling prior to their replacement led to their demise.

My homebuilt NAS uses 3.5″ HDDs but everything else is set up with SSDs that get mirrored to the NAS.

I recently sorted through and dumped a box of HDDs that had not been handled with care for years (banged around, stored in sheds and garages without climate control), and only one of the ~30 was totally dead; the rest had a handful of unreadable blocks, but I was able to pull all the data back.

I also have a couple of mirrored OS drives in some old servers with, at this point, 15+ years of spin time and no issues.

Anecdotal data is such a cluster.

I’ve also had basically brand new SSDs from reputable manufacturers up and die after a few months of light use.

shrugs

i love that it’s all expectations. if you’re using the largest single HDD that you can afford in 1996 (i still have that 3.2GB quantum fireball sitting in my basement), then the fact that there’s a good chance it dies is extremely terrifying. but if you have a pile of 10 old HDDs in your basement that you can mine for old files in the case of a failure, and 7 of them still work…dang that’s pretty good!

True. On top of that, solid state storage in the mid 1990s, when it first debuted, was horrendously expensive. I vividly remember a catalog for hardware components that advertised SS storage modules (in full-sized drive slots of 5.25″ x something – I forget what a full-sized slot height was… 6″ maybe?) priced at $10k for far less space than the average spinning rust drive. Course, what they had going for them is that they were vibration, acceleration, and magnetic field resistant. Conventional hard drives at the time were decidedly not. It’s also likely that those early drives would not have retained charge without a periodic refresh from then to today, while there’s a good chance a hard drive does, or at least the drive data would be recoverable with due care – thank you ddrescue.

A big reason to go SSD, though, is simply that access times are so small. You can question the point of an SSD vs an HDD based on speed and throughput, but SSDs still have astronomical IOPS compared to HDDs, with access times in the sub-millisecond range.

The big failure of flash manufacturing is continuously trying to make chips denser instead of spreading out horizontally. Most SATA SSDs, for instance, only have two chips on a board less than 25% the size of the case, but we were able to get 500GB on those two chips. Expand the board and you could easily fit 16 SLC ICs on one single board, roughly 8TB. In fact, a majority of enterprise SLC drives did this exact thing.

Tbh for average consumer levels of use, it definitely does make sense to manicure the size of the drives and their write endurance to such a small factor, a majority of people won’t use more than 256gb of space, and even then they wouldn’t use more than 5 TBW before buying a new computer tbh. Especially those older than 35.

i still think this way, in terms of the very fast random access to my SSD. i have a 7GB source tree i have to work with every day, and i’m super glad that’s on SSD. really speeds up my builds and “grep foo `find . -type f`” sort of operations. but i recently learned, actually, it’s all in RAM cache! and if anything happens that flushes the RAM cache, then suddenly i’m shocked by how slow my computer is, whether it’s SSD or HDD! i don’t know what the situation will be in a decade but it turns out there was only about a 5 year window where my RAM was so small that i cared how fast my SSD was. (and during that time, I did not think “only” 4GB of RAM was small!)

This is actually pretty related to the SSD reliability issue: I don’t actually understand why modern computers haven’t just exploded their RAM usage over the past few years, and likewise I don’t understand why the internal caches in SSDs aren’t just absurdly large. Yes, obviously you’ve got the nonvolatility aspect, but that’s manageable: I mean, there’s no reason you couldn’t have lithium ion batteries for desktops, too.

SSDs became super-popular because of the responsiveness improvement: the same thing happens when you’ve got so much freaking RAM that the computer just caches everything. And the more you cache, the smarter you can be about write cycles.

Obviously memory prices spiked for a while, but they’re back on a continual drop and 128 GB of RAM should be pretty much standard on a workstation by this point.

I feel as though that’s an issue as well. You have server solutions with batteries, but a good reason why you don’t see them in consumer products is both complexity and potential volatility.

It would make sense to just have an integrated supercapacitor in these cases, especially if you wanted to add like 32GB of DDR4. But perhaps the controllers have some sort of limitations in dealing with more RAM, which leads manufacturers to ship less capacity. Or, simply put, there could just be no benefit to a bigger cache: most files aren’t going to be bigger than 4GB, and even fewer bigger than 16GB.

Another possibility is that more RAM could lead to more corruption of the data it handles, since it wouldn’t be ECC.

The late 90s? It was so small and expensive back then that it was only practical for a few niche applications.

It’s still pretty shit, the way they tend to die: rather than doing SOMETHING to hint that they’re on their last legs, many SSDs, even “pro” ones, simply cease to exist, sometimes between reboots, and that’s that.

Sure, SSDs are nice for a lot of things, and they are exceptional as index disks and for operating system and ephemeral data, but they still have some way to go before they’re a good replacement for long-term storage of massive datasets. (Yes, I know data-center scale deployments can sometimes make this financially sound, but even there, not always.)

I currently use piles of HDDs in ZFS pools for storage, and SSDs are used for read cache etc. Even if SSDs dropped to the same price point as HDDs, I wouldn’t switch my setup. I’ve been burned way, way more often by failing flash than by magnetic HDDs. It’s kind of infuriating.

Of all the hard drives our family has bought since 1990, only 3 have failed; however, EVERY SSD I have bought since 2010 has died! And not only that, they started silently corrupting data, causing all my backups (grandfather-father-son) to be affected!

I’m sorry to hear that. I’ve had several HDDs and one SSD crash on me. I even accidentally deleted an online backup once (I set a folder to sync instead of backup and deleting a folder on my PC deleted the backup), the same day my hard drive with a second backup also failed. Luckily not much was lost. But in the past I lost emails and documents. I have nothing digital from my childhood anymore. Only analog photos survived.

I see this as less of a fundamental problem with QLC and more of a software/firmware/hardware design problem. Instead of using QLC for EVERYTHING on the SSD, they could have multiple types of memory chips with different tradeoffs. All you need then is to determine what should be stored where, based on the likelihood of it being modified or erased. Alternatively, you can be like me and use a small SLC SSD for stuff I keep in /home (which thus changes often), while I have larger TLC SSDs for archiving data.

If all you have is a hammer, then you’re a really bad builder. ;)

I feel the real issue with NAND must be the actual fab costs. We’re talking about rather small incredibly dense chips even with SLC.

I mean we have tiny multi-TB sticks that, even if you quadrupled the size, could easily fit with room for more in a 3.5″ drive.

The only reason we don’t get super giant SSDs for consumers at lower prices must be because they haven’t been able to bring down chip prices outside of MLC tech.

Wow Maya! You are truly raising the bar height for technical writing excellence! Hackaday should be proud to have you as a resource. Hmm… Time to write that book you always dreamed of?

Agreed. I appreciate having such an explanation in such a compact form.

This is part of the reason I still have a 1 TB Optane drive in my PC despite it being PCIe 3. It’s perfect as an OS drive, since you don’t have to worry about the write wear issue, and its random access still beats modern PCIe 4 NVMe drives. Of course, I have a fast NVMe drive too and put things on it that make better use of its strengths.