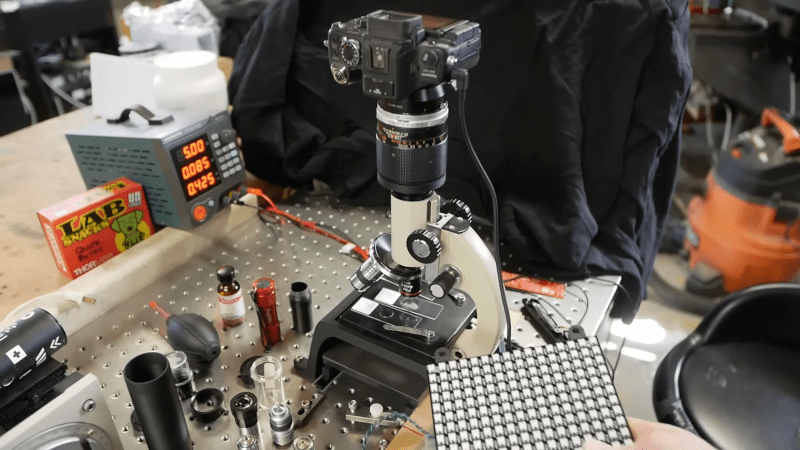

Nowadays, if you have a microscope, you probably have a camera of some sort attached. [Applied Science] shows how you can add an array of tiny LEDs and some compute power to produce high-resolution images, higher than you can get with the microscope on its own. The idea is to light each LED in the array individually and take a picture for each one. Then, an algorithm constructs a higher-resolution image from the collected images. You can see the results and an explanation in the video below.
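To make the capture step concrete, here is a minimal Python sketch of the "one LED, one picture" loop. It is not the project's code. The function names, the flat centred array geometry, and the `set_led` and `capture` callbacks are all hypothetical stand-ins for whatever LED driver and camera API you actually have.

```python
import math

def led_scan_plan(rows, cols, pitch_mm, height_mm):
    """Return one (row, col, angle_x_deg, angle_y_deg) entry per LED.

    Assumed geometry (hypothetical): a flat rows x cols array with spacing
    pitch_mm, centred on the optical axis, height_mm below the sample.
    """
    plan = []
    for r in range(rows):
        for c in range(cols):
            # Offset of this LED from the centre of the array, in mm.
            x = (c - (cols - 1) / 2) * pitch_mm
            y = (r - (rows - 1) / 2) * pitch_mm
            # Tilt of the illumination as seen from the sample.
            ax = math.degrees(math.atan2(x, height_mm))
            ay = math.degrees(math.atan2(y, height_mm))
            plan.append((r, c, ax, ay))
    return plan

def acquire(plan, set_led, capture):
    """Light one LED at a time and grab one frame for each.

    set_led(row, col, on) and capture() are placeholders you supply.
    """
    frames = []
    for r, c, ax, ay in plan:
        set_led(r, c, True)
        frames.append(((ax, ay), capture()))
        set_led(r, c, False)
    return frames
```

Each frame is stored with its illumination angle, since a reconstruction step would presumably need to know which direction the light came from for every image.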

You’d think you could use this to enhance a cheap microscope, but the truth is you need a high-quality microscope to start with. In addition, color cameras may not be usable, so you may have to find or create a monochrome camera.

The code for the project is on GitHub. The LEDs need to be close to a point source, so smaller is better, and that determines what kind of LEDs are usable. Of course, the light from the LEDs passes through the sample, so this is suitable for transmissive microscopes, not metallurgical ones, at least in the current incarnation.

You can pull the same stunt with electrons. Or blood.

Could this be used with the OpenFlexure microscope?

It should be possible. See this paper for the use of an LED grid for illumination with the OFM: https://opg.optica.org/oe/fulltext.cfm?uri=oe-30-15-26377&id=477856

I think maybe an OLED screen could do the job. Its dots are small and easy to control.

OLED or even microLED sounds perfect for it.

I was wondering when I watched the video whether it might actually make sense to have a very low density grid and a gantry that can shift everything up to one LED in X and one LED in Y and cover the entire area.

But realistically, looking at the results he got with the matrix he used, the density of LEDs might not actually be that important compared to the optics and the camera sensor.
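For anyone who wants to play with the sparse-grid-plus-gantry idea, the bookkeeping is simple. This is a rough Python sketch and nothing from the project: `gantry_offsets` is a made-up helper, and it assumes the LED spacing is a whole multiple of the spacing you want to emulate.

```python
def gantry_offsets(led_pitch_mm, target_pitch_mm):
    """Offsets (dx, dy) in mm that a sparse LED array must visit so the
    combined light positions form a grid with spacing target_pitch_mm.

    Hypothetical helper: assumes led_pitch_mm is a whole multiple of
    target_pitch_mm, so every shift stays below one LED spacing.
    """
    steps = round(led_pitch_mm / target_pitch_mm)
    if steps < 1 or abs(steps * target_pitch_mm - led_pitch_mm) > 1e-9:
        raise ValueError("LED pitch must be a whole multiple of the target pitch")
    return [(ix * target_pitch_mm, iy * target_pitch_mm)
            for iy in range(steps) for ix in range(steps)]
```

With LEDs 8 mm apart and a 2 mm target spacing, the gantry visits 16 positions, none further than 6 mm from home in either axis. The catch is that every gantry position is a mechanical move, so the scan gets slower as the grid gets sparser.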

It might not be bright enough, so the signal-to-noise ratio would drop a lot. You could use longer exposures, but that would slow down acquisition by a lot, I guess.
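To put rough numbers on that brightness trade-off, here is a back-of-the-envelope Python sketch. It assumes the camera is shot-noise limited (signal-to-noise ratio goes as the square root of the photons collected) and that collected photons scale with brightness times exposure. The function names and all figures are illustrative, not measurements.

```python
import math

def shot_noise_snr(photons):
    """SNR of a shot-noise-limited measurement: sqrt of the photon count."""
    return math.sqrt(photons)

def exposure_for_same_snr(base_exposure_s, brightness_ratio):
    """Exposure needed to collect the same photons from a dimmer source.

    brightness_ratio is new brightness / old brightness (0.1 = ten times dimmer).
    """
    return base_exposure_s / brightness_ratio

def scan_time_s(n_leds, exposure_s, overhead_s=0.0):
    """Total time for one frame per LED, plus optional per-frame overhead."""
    return n_leds * (exposure_s + overhead_s)
```

For example, a source ten times dimmer turns a 10 ms exposure into 100 ms for the same signal-to-noise ratio, and a hypothetical 256-LED scan then takes about 25.6 seconds instead of about 2.6.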

I wonder if this is similar to getting phase information with a polarisation filter at different angles, the way they can extract micro 3D detail from objects. That’s probably only phase info and little detail to work with but still, it reminded me of it.

Wondering if this could scan quickly enough to integrate the images on the retina and avoid the need for a camera. Essentially a POV image?

Sure it could. To make it real time you just need your retina to operate at a few thousand frames per second, and have a high-end GPU-equivalent processor implanted in your optic nerve to process the data into images.

But maybe you want to make the ‘real time’ prototype outside your brain cavity first.