Unless you have been hiding out in a cave for the last week or so, you have heard about this year’s April Fools’ joke from Google. Gmail Motion was purported to be an action-driven interface for Gmail, complete with goofy poses and gestures for completing everyday email tasks. Unfortunately it was all an elaborate joke and no gesture-based Gmail interface is forthcoming…at least not from Google.

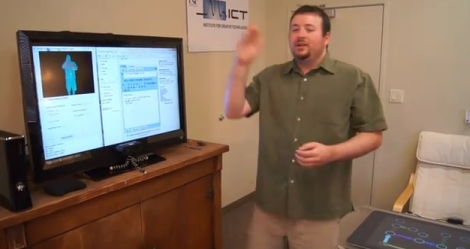

The team over at the USC Institute for Creative Technologies has stepped up and made Google’s hoax a reality. You might remember these guys from their Kinect-based World of Warcraft interface, which used body motions to emulate in-game keyboard actions. Using their Flexible Action and Articulated Skeleton Toolkit (FAAST), they developed a Kinect interface for Gmail, which they have dubbed the Software Library Optimizing Obligatory Waving (SLOOW).

Their skeleton tracking software allows them to use all of the faux gestures Google dreamed up for controlling your inbox, however impractical they might be. We love a good April Fools’ joke, but we really enjoy when they become reality via some clever thinking.
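For a rough idea of how a skeleton tracker can turn poses into commands, here is a minimal Python sketch. It is not FAAST's code or configuration format, and it does not talk to a real Kinect. The joint names, the thresholds, the coordinate convention (y up, z as distance from the sensor, in metres), and the action names are all assumptions made up for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

# Assumed joint format: (x, y, z) in metres, y pointing up,
# z being the distance from the sensor.
Joint = Tuple[float, float, float]
Skeleton = Dict[str, Joint]


@dataclass
class GestureRule:
    name: str                         # human-readable label for the pose
    test: Callable[[Skeleton], bool]  # returns True while the pose is held
    action: str                       # hypothetical email action to trigger


def hand_above_head(side: str) -> Callable[[Skeleton], bool]:
    """Pose test: the given hand is higher than the head."""
    def test(skeleton: Skeleton) -> bool:
        return skeleton[f"{side}_hand"][1] > skeleton["head"][1]
    return test


def hand_forward(side: str, reach: float) -> Callable[[Skeleton], bool]:
    """Pose test: the given hand is at least `reach` metres closer
    to the sensor than the torso."""
    def test(skeleton: Skeleton) -> bool:
        return skeleton["torso"][2] - skeleton[f"{side}_hand"][2] > reach
    return test


# Made-up pose-to-action table; the first matching rule wins.
RULES: List[GestureRule] = [
    GestureRule("right hand forward", hand_forward("right", 0.4), "open_message"),
    GestureRule("left hand above head", hand_above_head("left"), "reply"),
]


def classify(skeleton: Skeleton) -> Optional[str]:
    """Return the action for the first rule whose pose is held, else None."""
    for rule in RULES:
        if rule.test(skeleton):
            return rule.action
    return None


if __name__ == "__main__":
    frame = {
        "head": (0.0, 1.7, 2.0),
        "torso": (0.0, 1.2, 2.0),
        "left_hand": (-0.3, 1.9, 2.0),   # raised above the head
        "right_hand": (0.3, 1.0, 2.0),   # resting at the side
    }
    print(classify(frame))
```

A real interface would also need to debounce poses across frames so that a single held gesture does not fire the same action thirty times a second.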

Stick around for a video demo of the SLOOW interface in action.

[via Adafruit]

[youtube=http://www.youtube.com/watch?v=Lfso7_i9Ko8&w=470]

It would be really cool if somebody could get the Kinect to read sign language.

I call BS.

Awesome, I like it! Now I want the “Magic Smoke Refilling Kit” from SparkFun to become real!

Interpreting sign language is a laudable idea.

ASL (American Sign Language) does not have fixed rules for the signs. Anything that “looks close” to the sign you want and won’t be misinterpreted *in context* is considered OK.

I’ve even seen variation on the finger-spelling alphabet.

A fluent friend of mine tells me that there are a large number of nuances, modifiers, and jargon. For example, saying “I like” is one nuance, but saying it while leaning forward puts emphasis on the phrase, turning it into “really like it” or the colloquial “love it” depending.

OTOH, a fixed set of ASL Kinect gestures might popularize a more formal and strict definition of the signs.

To really do this, you will need feedback from someone who actually speaks the language.

FAAST and SLOOW… ;)

I’d love to try this out and probably code my own applications for the Kinect, but I don’t have one because I don’t even own an Xbox ;)

4-4-2+0+1+1

I don’t have an Xbox either… Didn’t stop me buying a Kinect cheap at the local car boot sale (:

This is a good way to get hired by Google…and have your project buried in the desert in Saudi Arabia.

Screw that. Boring.

Now… the StarCraft 2 Kinect interface, that would be amazing.

I wondered how long it would be before someone did this.

OK, that’s all well and good, but when is someone going to hack together a real spaghetti tree?

http://www.youtube.com/watch?v=27ugSKW4-QQ

http://en.wikipedia.org/wiki/Spaghetti_tree_hoax

Gordon

@Drew and @Okian Warrior

Several groups are working on the ASL support as we speak. Also, ASL support was included in the patent application Microsoft filed for the Kinect.

http://www.joystiq.com/2010/12/20/kinect-hacks-american-sign-language-recognition/

http://www.gamespot.com/news/6272656.html

http://www.google.com/google-d-s/promos/motion.html

Best of the Gmail Motion options.

@cde: Speech recognition has a ways to go yet to make that reasonable. While it could make manipulation of troops easier, that is specifically the role held out for the mouse. Keyboard macros would require something more complex, and voiced commands would fill that role nicely, but they must work 100% of the time and fucking fast. If it can’t fill the role of a keyboard, it will fail against a keyboard and mouse.

(gestures are just out of the question…for roughly the same reason)