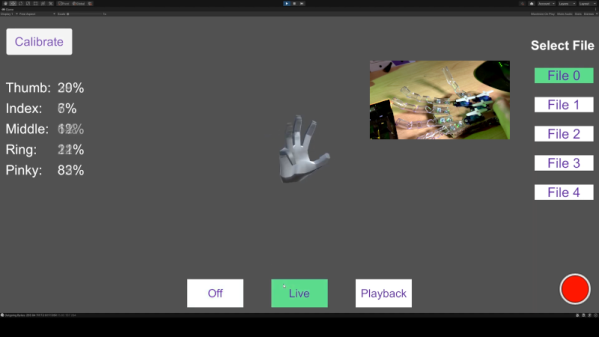

The concept of a 3D scanner can seem rather simple in theory: point a camera at the physical object you wish to scan, rotate around the object to capture all angles, and stitch the photos together into a 3D model, along with textures created from those same photos. This photogrammetry approach is definitely viable, but it is also limited, since it relies on inferring three-dimensional parameters from a set of 2D images and depends on suitable lighting.

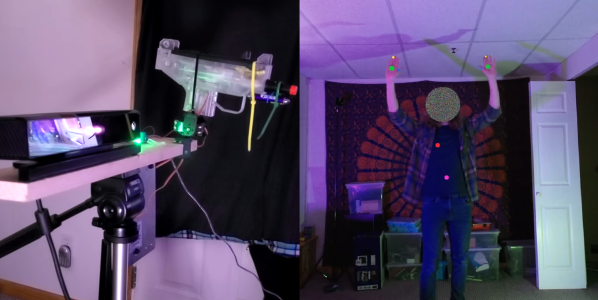

To get more detailed depth information from a scene you’d need to perform direct measurements, which can be done through physical contact or through e.g. time-of-flight (ToF) measurements. Since contact-free measurement methods tend to be preferred, ToF makes a lot of sense, but it comes with the disadvantage of measuring only a single spot at a time. When the target is actively moving, you can fall back on photogrammetry or use an approach called structured-light (SL) scanning.
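To put rough numbers on the ToF idea, here is a minimal Python sketch. It assumes an idealized sensor that reports the round-trip time of a light pulse, and the function names and the sample rate in the usage note are purely illustrative rather than taken from any real device.

```python
# Speed of light in vacuum; close enough for measurements through air.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Convert a measured round-trip time (seconds) into a distance (metres).

    The pulse travels to the target and back, hence the division by two.
    """
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_s / 2.0


def scan_time_s(width: int, height: int, points_per_second: float) -> float:
    """Time to sweep a width x height grid when measuring one spot at a time.

    points_per_second is a hypothetical sample rate, not a real device spec.
    """
    if points_per_second <= 0:
        raise ValueError("sample rate must be positive")
    return (width * height) / points_per_second
```

A round trip of 10 nanoseconds works out to roughly 1.5 meters with `tof_distance_m(10e-9)`, which hints at the timing precision needed for fine detail. Under the assumed rate of 10,000 points per second, `scan_time_s(640, 480, 10_000)` comes to about half a minute for a single 640 by 480 depth frame, which is why a single-spot approach struggles with anything that moves.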

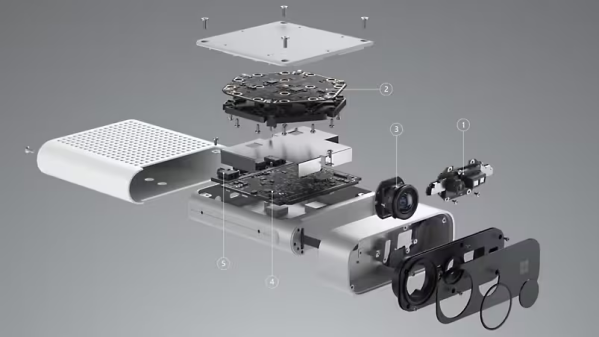

SL is what consumer electronics like the Microsoft Kinect popularized, using the combination of a visible-light and a near-infrared (NIR) camera to record a pattern projected onto the subject, which is similar to how face-based login systems like Apple’s Face ID work. Considering how often Kinects have been used as general-purpose 3D scanners, this raises many questions regarding today’s crop of consumer 3D scanners, such as whether they’re all basically just Kinect clones.

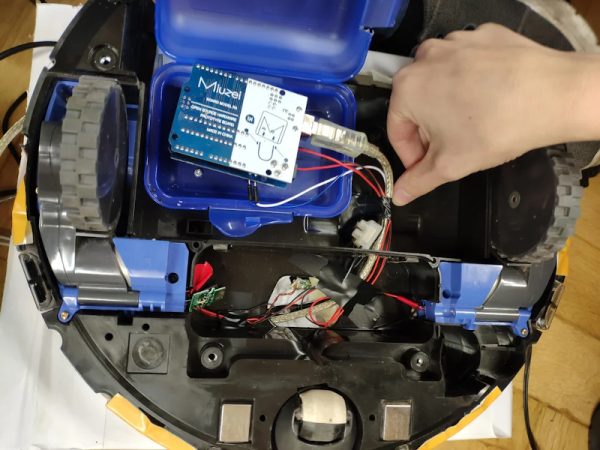

Continue reading “On 3D Scanners And Giving Kinects A New Purpose In Life”