If you ever need to manipulate images really fast, or just want to make some pretty fractals, [Reuben] has just what you need. He developed a neat command line tool to send code to a graphics card and generate images using pixel shaders. Unlike a CPU, which works through an image a few pixels at a time, a GPU processes many pixels in parallel, which can make image processing much faster.

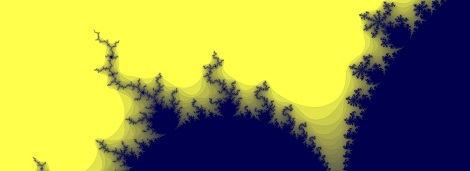

All the GPU coding is done by writing a bit of code in GLSL. [Reuben]’s command line utility takes that code, sends it to the graphics card, and returns the image calculated by the GPU. It’s very simple to make pretty Mandelbrot set images and sine wave interference patterns this way, but [Reuben]’s project can do much more than that. By sending an image to the GPU and performing a few operations, [Reuben] can do very fast edge detection and other algorithmic processing on pre-existing images.
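To give a rough idea of the kind of per-pixel function a pixel shader computes, here is a CPU-side Python sketch of a Mandelbrot image. It is not [Reuben]’s code and it is not GLSL; the names `mandelbrot_pixel` and `render`, the coordinate range, and the iteration limit are all assumptions made for illustration. On a GPU, the body of `mandelbrot_pixel` would be the shader, run once per pixel in parallel.

```python
def mandelbrot_pixel(x, y, width, height, max_iter=50):
    """Return a 0-255 grey level for pixel (x, y), the way a pixel shader would."""
    # Map the pixel onto the complex plane (assumed view: real -2.5..1, imag -1.25..1.25)
    c = complex(-2.5 + 3.5 * x / width, -1.25 + 2.5 * y / height)
    z = 0j
    for i in range(max_iter):
        z = z * z + c
        if abs(z) > 2.0:
            # The point escaped: shade it by how quickly it did so
            return 255 * (i + 1) // max_iter
    # Never escaped within max_iter steps: treat as inside the set (black)
    return 0


def render(width, height):
    """Evaluate every pixel in turn; a GPU would evaluate them in parallel."""
    return [[mandelbrot_pixel(x, y, width, height) for x in range(width)]
            for y in range(height)]
```

Calling `render(70, 25)` returns a list of 25 rows of 70 grey levels, which could then be written out with any image library.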

So far, [Reuben] has tested his software with a few NVIDIA graphics cards under Windows and Linux, although it should work with any graphics card with pixel shaders.

Although [Reuben] is sending code to his GPU, it’s not quite on the level of the NVIDIA CUDA parallel computing platform; [Reuben] is only working with images. Cleverly written software could get around that, though, by encoding other data as pixels. Even if [Reuben]’s project is only used for image processing, it should still be much faster than a comparable CPU-bound method.

You can grab a copy of [Reuben]’s work over on GitHub.

I saw a code fragment once that claimed to be a bit-slice DES cracker for GPU. There was no support code, so I had no idea how to try it out. I wonder if this could be a good starting point.

So if I’m reading this correctly as a rendering engineer, and stripping away the buzzwords, all [Reuben] did was write a program that loads a .FSH file and draws a full-screen quad?

I think your missing the part about using the gfx card doing the edge detection rather then the cpu

No, it’s more like “your” not a rendering engineer, and “your” just being taken in by buzzwords that are rather trivial to implement in practice.

You can implement a simple Sobel-style edge filter using around 8 lines of GLSL: just perform four additional texture2D calls to fetch the neighboring texels around the central texel, subtract the texels’ values from the central texel, and then use a ternary operator comparison to set the output pixel to white when the difference is over a given threshold. The “framework” to render a full-screen quad, and maybe bind an image to a sampler, takes about 30 minutes to write with some cursory Googling, and the relevant GLSL fragment shader about 5 minutes.
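For readers who want to see the idea spelled out, the neighbor-difference filter described above can be sketched on the CPU in Python. This is only an illustration, not the commenter’s shader and not a textbook Sobel operator (which uses two 3x3 kernels); the names `edge_pixel` and `detect_edges`, the greyscale list-of-rows image format, and the default threshold are assumptions.

```python
def edge_pixel(img, x, y, threshold=32):
    """Return 255 (white) if pixel (x, y) differs strongly from its 4 neighbors, else 0."""
    h, w = len(img), len(img[0])

    def fetch(px, py):
        # Clamp coordinates to the image border, like a clamp-to-edge texture sampler
        return img[min(max(py, 0), h - 1)][min(max(px, 0), w - 1)]

    centre = fetch(x, y)
    # Four extra fetches: left, right, up, down; sum the absolute differences
    diff = sum(abs(centre - fetch(x + dx, y + dy))
               for dx, dy in ((-1, 0), (1, 0), (0, -1), (0, 1)))
    # The "ternary operator" step: white above the threshold, black otherwise
    return 255 if diff > threshold else 0


def detect_edges(img, threshold=32):
    """Apply edge_pixel to every pixel; a GPU would do this in parallel."""
    return [[edge_pixel(img, x, y, threshold) for x in range(len(img[0]))]
            for y in range(len(img))]
```

In a fragment shader, `fetch` would be a texture lookup and `edge_pixel` would be the shader’s main function.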

How on earth is this even remotely noteworthy?

@SFRH

I’m interested in AI and was not aware that you could do, say, edge detection with a GPU. That is the newsworthy bit for me.

Your comment is also worthy because it does point out that it is probably not very hard to do so.

Interdisciplinary information sharing is very important.

@SFRH

>>How on earth is this even remotely noteworthy?

Because I have not done this in this fashion before, ergo neither has someone else, ergo it opens doors for others.

Yore pointing out of how quickly this can be done makes it an interesting point that even non image data can be processed as an image. Heck I may even take yore advice and render a waveform to an image via the graphics card with some built in filters and shader manipulation you can do many things.

I think I will take yore advice about the filters and use a separate pinching algorithm to do beet detection

@Kris Lee

>>Interdisciplinary information sharing is very important

Agreed

Can you point me to the command line tool you wrote and published please?

This isn’t terrifically new, but I’m glad to see people interested in it. OpenGL is more portable, but there are DirectX versions for those of us oriented on Windows.

Here is a chapter on image processing in the 2005 book GPU Gems 2.

OK, editing isn’t allowed and I couldn’t preview my post to see that the href formatting was correct. Here is the URL to the GPU Gems 2 book. Maybe. If the posting mechanism doesn’t eat it.

http://http.developer.nvidia.com/GPUGems2/gpugems2_chapter27.html

Yes that’s basically it. Look at the comments in the header of fragment.h in the github repo. It’s a neat tool to have for experimenting, not some massively useful new approach or idea.

I’ll just leave this here

http://imgur.com/8P6Iq

There are tools that do this:

GeexLab

http://www.geeks3d.com/geexlab/

Shader Maker

http://cg.in.tu-clausthal.de/teaching/shader_maker/index.shtml

They do it in realtime, allowing you to fiddle about with your shader until things are right.

But, I guess the news here is that GLSL programming is becoming more mainstream.

This really isn’t terribly interesting. All it does is load an image and draw a full-screen quad.

Literally like five lines of (terribly common and mundane) code.

puredata/gem can be used for the same kind of things

and much more