Ever wanted to make some seriously trippy retro graphics to go along with your lo-fi hip hop beats? Now you can, with [teafella]’s aptly named Hypno Video Synthesizer, a Raspberry Pi-based video synthesizer that digitally emulates and extends analog video workflows through colorization, shape generation, and feedback, all patchable from a compact interface. The device lets music creators perform with live visuals, or serve as a unique visual source in a video setup. Once the CV input is plugged in, all it needs to start working is a composite display and power.

Hypno takes input through a control voltage (CV) jack, read by an MCP3008 ADC over SPI, with voltages scaled from the -5 V to +5 V range down to 0-5 V. The device attaches on top of a Raspberry Pi Zero, using Raspbian for the operating system and the Pi's GPIO to interface with an OpenGL engine. The input parameters are taken from knobs through a multiplexer into a single channel of the ADC, with the values offset in software based on the CV inputs.
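To make the input path concrete, here is a minimal Python sketch of the CV scaling and the MCP3008 read. The 3-byte SPI framing follows the MCP3008's standard single-ended read, but the linear scaling, the 5 V reference, and every function name here are assumptions for illustration, not details taken from [teafella]'s C++ code.

```python
# Sketch only: linear scaling and a 5 V reference are assumptions, not
# confirmed details of the Hypno circuit.
ADC_BITS = 10                  # the MCP3008 is a 10-bit converter
ADC_MAX = (1 << ADC_BITS) - 1  # 1023
V_REF = 5.0                    # assumed ADC reference voltage


def scale_cv(v_cv):
    """Map a bipolar -5..+5 V control voltage onto 0..5 V (assumed linear)."""
    v_cv = max(-5.0, min(5.0, v_cv))  # clamp out-of-range input
    return (v_cv + 5.0) / 2.0


def mcp3008_request(channel):
    """Build the 3-byte SPI request for a single-ended read of one channel."""
    if not 0 <= channel <= 7:
        raise ValueError("MCP3008 has channels 0-7")
    # start bit, then single-ended flag plus channel number in the high nibble
    return [0x01, 0x80 | (channel << 4), 0x00]


def mcp3008_decode(response):
    """Extract the 10-bit result from the 3-byte SPI response."""
    return ((response[1] & 0x03) << 8) | response[2]


def code_to_cv(code):
    """Convert a 10-bit ADC code back to the original -5..+5 V value."""
    v_adc = code / ADC_MAX * V_REF
    return v_adc * 2.0 - 5.0
```

On real hardware the request bytes would be sent through whichever SPI library is in use, and the three bytes that come back would be passed to `mcp3008_decode`.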

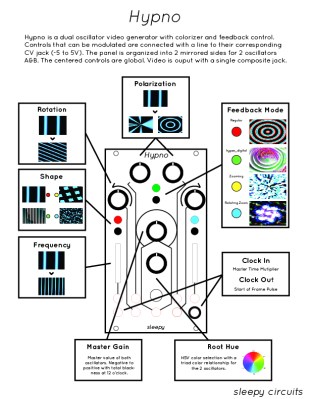

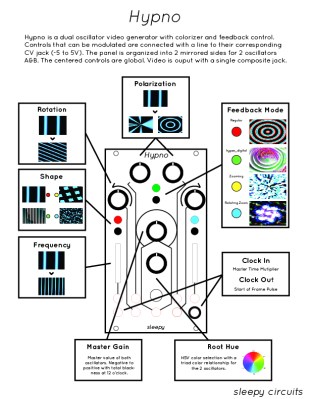

Using the Hypno ends up being fairly straightforward, as the controls are organized onto two mirrored sides for the two oscillators A & B, with global controls in the center. There are knobs that control polarization, rotation, shape, feedback modes (regular, hyper digital, zooming, rotating zoom), clock in/clock out, frequency, root hue, and master gain, as well as RGB LEDs that provide visual feedback.

A single jack outputs the composite result, although a micro-HDMI plug can also be used on the back. For advanced functionality, Hypno allows for patching, which mixes effects on top of one another and allows for shapes such as oscillator cross-modulation. There are also alt-controls that open up self-modulation and other shapes. Examples include bipolar drift (smoothly scrolls the oscillator) and mirroring (mirrors the oscillator’s shape in different patterns for a kaleidoscope-esque tiled madness).

The software is written in C++ and GLSL, with the main engine running with one plane in OpenGL, drawing the output of a GLSL shader. The CV and knob inputs are fed into shader uniforms that are used to change the visuals in the engine.
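As a rough illustration of how knob and CV readings might be merged before they reach the shader, the Python sketch below normalises 10-bit ADC codes, offsets each knob by its CV, and collects the results under uniform names. The `u_` names, the bipolar offset rule, and the clamping are all hypothetical; the real engine is written in C++ and may combine the inputs differently.

```python
ADC_MAX = 1023  # full-scale code of a 10-bit ADC such as the MCP3008


def to_uniform(knob_code, cv_code=None):
    """Combine a knob reading with an optional CV reading into 0.0..1.0.

    Assumption: the CV is bipolar, so a mid-scale code means "no offset"
    and the extremes shift the knob by up to +/-1.0.
    """
    knob = knob_code / ADC_MAX
    offset = 0.0
    if cv_code is not None:
        offset = (cv_code / ADC_MAX) * 2.0 - 1.0
    # clamp so the shader always sees a value in range
    return max(0.0, min(1.0, knob + offset))


def build_uniforms(knobs, cvs):
    """Return a dict mapping hypothetical uniform names to normalised values."""
    return {
        f"u_{name}": to_uniform(code, cvs.get(name))
        for name, code in knobs.items()
    }
```

For example, `build_uniforms({"frequency": 512, "hue": 100}, {"frequency": 900})` yields one value per knob, with only `u_frequency` shifted by a CV. Each entry would then be uploaded to the matching uniform once per frame.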

[teafella], a self-professed Arduino user, uses WiringPi for the GPIO interactions. The shader system is inspired by analog video synthesis: every shape has a simulated “scan” over the screen and a function mapped to it, which can be transformed into polar coordinates.
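The scan idea can be sketched on the CPU in a few lines of Python. This is not the project's GLSL: it assumes a sine oscillator as the mapped function, and it uses only the radius of the polar transform as the scan position, so the same waveform draws vertical bars in Cartesian mode and concentric rings in polar mode. All names are made up for the example.

```python
import math


def scan(x, y, polar=False):
    """Return a scan position in 0..1 for a pixel at normalised (x, y).

    Cartesian mode sweeps left to right like a raster line; polar mode
    uses the distance from the screen centre instead.
    """
    if not polar:
        return x
    dx, dy = x - 0.5, y - 0.5
    # the centre-to-corner distance is sqrt(0.5); normalise corners to 1.0
    return math.hypot(dx, dy) / math.sqrt(0.5)


def oscillator(t, frequency):
    """Sine oscillator over the scan position, returned as brightness 0..1."""
    return 0.5 + 0.5 * math.sin(2.0 * math.pi * frequency * t)


def render(width, height, frequency, polar=False):
    """Render a small ASCII frame so the pattern is visible in a terminal."""
    ramp = " .:-=+*#%@"
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            # sample at pixel centres in normalised coordinates
            x = (i + 0.5) / width
            y = (j + 0.5) / height
            level = oscillator(scan(x, y, polar), frequency)
            row += ramp[min(len(ramp) - 1, int(level * len(ramp)))]
        rows.append(row)
    return rows


if __name__ == "__main__":
    print("\n".join(render(32, 12, frequency=3.0)))
    print()
    print("\n".join(render(32, 12, frequency=3.0, polar=True)))
```

In a fragment shader the same per-pixel logic would run on the GPU, with `frequency` and the polar switch arriving as uniforms.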

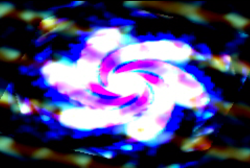

The setup for Hypno is fully compatible with analog CV equipment such as Eurorack synthesizers, which makes it easy for music creators to plug and play. Here are a couple of sample outputs from soundtracks fed into Hypno:

Too many combinations to even imagine? Check out a demo of Hypno in action!
