In 1975, [D. L. Slotnick], a CS professor at the University of Illinois at Urbana-Champaign, faced a problem: meteorologists were collecting far more data than current weather simulations could handle. [Slotnick]’s solution was to build a faster computer to run these atmospheric circulation simulations. The catch was that the computer needed to be built quickly and cheaply, which meant using off-the-shelf hardware; in 1975, that meant TTL logic chips. [Ivan] found the technical report for this project (a massive PDF, you have been warned), and we’re in awe of the scale of this new computer.

One requirement of this computer was roughly 100 times the computing power of the IBM 360/95 at the Goddard Institute for Space Studies, which was devoted to the same atmospheric computation tasks. In addition, the computer needed to be programmable in the “high-level” FORTRAN-like language used for this atmospheric research.

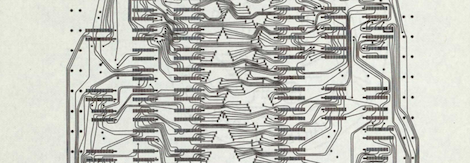

The result (not to overlook the amazing amount of work that went into the design of this machine) was a computer built out of 210,000 individual logic chips at a total cost of $2.7 million, or about $10 million in 2012 dollars. The power consumption of this computer would be crazy: about 90 kilowatts, or enough to power two dozen American houses.
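Dividing the headline figures by the chip count and by the two dozen houses gives a feel for the scale. This is only a rough sketch: the averages lump boards, power supplies, cooling, and labor in with the chips themselves, so they are not per-part prices or datasheet power figures.

```python
# Figures quoted in the post.
CHIP_COUNT = 210_000
TOTAL_COST_USD = 2.7e6      # 1975 dollars
TOTAL_POWER_W = 90_000      # about 90 kW
HOUSES = 24                 # "two dozen American houses"

def per_unit(total: float, count: float) -> float:
    """Spread a total evenly over `count` units (a crude average)."""
    return total / count

cost_per_chip = per_unit(TOTAL_COST_USD, CHIP_COUNT)
watts_per_chip = per_unit(TOTAL_POWER_W, CHIP_COUNT)
kw_per_house = per_unit(TOTAL_POWER_W, HOUSES) / 1000

print(f"~${cost_per_chip:.2f} per chip")    # ~$12.86 per chip
print(f"~{watts_per_chip:.2f} W per chip")  # ~0.43 W per chip
print(f"~{kw_per_house:.2f} kW per house")  # ~3.75 kW per house
```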

We couldn’t find much information on whether this computer was actually built, but all the work is right there in the report, ready for any properly funded agency to build an amazingly powerful computer out of logic chips.

That is beautiful!

Nowadays you could probably run it on an FPGA powered by batteries :)

Nowadays you could DEFINITELY run it on an FPGA powered by batteries :)

Most definitely

A few Cray XE cabinets will top 90 kW.

As a former meteorologist, this interests me a great deal.

The problem will probably always be having (and needing) more raw weather data than the numerical models can handle. I remember as a student writing a simple 2-layer model with US data from 1 time zone. At single precision and making a LOT of assumptions on several variables, it took 72 hours for my old overclocked XT to come up with a 24-hour forecast. (Later, I ran the same model on a 386, upped the calculations to double-precision, and adjusted the gravity, inertia, and surface level variables to more accurately reflect real-world values, and it still took several hours to run).

Ever thought of running it in cache? Old DOS programs can run in cache these days (many modern processors have far more than 1 MB of cache and run at the speed of light compared to your turbo XT of yore), and while it isn’t an efficient use of HW, if your models were good, I’ll bet it would be much closer to real time.

Haven’t had the floppy out in years. There’s quite a bit I want to do with that old program now that I know how.

In 1992 I was doing weather calculations as an intern for the Air Force. Our 36-hour forecast for 100 square miles (data points every 100 feet in 3 dimensions, up to 50,000 feet) took 12 hours to run on a Cray Y-MP at $1500/hour. At that price you certainly made sure that the dataset was correct before you started the job! We later started to run on a SPARC Ultra 10, which took 48 hours but cost only $15,000 for the whole unit. Since we were just testing to make sure the model was accurate, it didn’t matter that it took so long.
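A quick cost comparison makes the trade-off concrete. It assumes the $1500/hour rate applied to the whole 12-hour run and ignores the workstation’s own running costs (power, upkeep), so treat it as a rough sketch.

```python
# Figures from the comment above.
CRAY_RATE_USD_PER_HOUR = 1500
CRAY_RUN_HOURS = 12
ULTRA10_PRICE_USD = 15_000
ULTRA10_RUN_HOURS = 48

# One forecast on the Cray, billed by the hour.
cray_cost_per_run = CRAY_RATE_USD_PER_HOUR * CRAY_RUN_HOURS
# How much longer the same job took on the workstation.
slowdown = ULTRA10_RUN_HOURS / CRAY_RUN_HOURS

print(f"Cray: ${cray_cost_per_run:,} per run")  # Cray: $18,000 per run
print(f"Workstation: {slowdown:.0f}x slower")   # Workstation: 4x slower
print(cray_cost_per_run > ULTRA10_PRICE_USD)    # True
```

In other words, a single Cray run cost more than buying the whole Ultra 10 outright.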

Now, if only the models were accurate… I swear that it’s secretly a random walk. It also seems like the long-range models are becoming less accurate with time… so something is still missing.

I do like the more recent aviation weather systems.

It’s nice to be able to see the interpretations in dumb-pilot accessible formats.

The models are accurate; they haven’t changed much since Lewis Fry Richardson came up with them in the 1920s. It is the data that goes into them that is suspect: lacking, far too quantized for its own good, a few decimal points off, etc.

A modern, synoptic-scale, 100-level model with vorticity, inertia, pi, and local gravity out to 20+ significant digits will give a reasonably accurate forecast up to 36 hours. By 48 hours, climatology takes over. By 72 hours, gravity waves and chaos make the model so bad that a monkey throwing darts would do better.

Yet this is enough to keep several Crays running full bore and still get forecasts out with reasonable latency. Any more data, or any more accuracy in the constant values, would slow things down significantly.

Jesus, American houses must be really inefficient.

90kW x 24 hours (it’s going to be running constantly, let’s face it. You don’t spend $10M on a computer and run it only during business hours) = 2160 kWh of energy per day. Divide that in turn by 24 houses, and we’re back to 90kWh of energy used per house per day.
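The kW-versus-kWh point comes down to energy = power × time. A small sketch of both directions, assuming each house’s share is a constant, round-the-clock load, which is the assumption the following reply disputes; the helper names are illustrative.

```python
def energy_kwh(power_kw: float, hours: float) -> float:
    """Energy drawn by a constant load of `power_kw` kilowatts over `hours`."""
    return power_kw * hours

def average_power_kw(energy: float, hours: float) -> float:
    """Inverse: the constant load that would use `energy` kWh in `hours`."""
    return energy / hours

machine_per_day = energy_kwh(90, 24)       # 2160 kWh for the whole machine
per_house_per_day = machine_per_day / 24   # 90 kWh if split over 24 houses
# A 40 kWh/day household, spread evenly over the day:
household_avg_kw = average_power_kw(40, 24)  # about 1.67 kW

print(machine_per_day, per_house_per_day, round(household_avg_kw, 2))
```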

I have a high energy requirement (pool, reef-tank, pond, air-con, baby [=lots of washing/drying]) and my house peaks at 40kWh/day (brought down to ~3kWh/day because I have a large number of solar panels).

I’d really hate to have a 90 kWh/day energy bill. Before the solar panels were installed, I was being charged (topping out at) ~$1k/month in California; I shudder to think what more than twice that would cost (it’s a sliding scale: the more you use, the more each subsequent kWh costs).

What are you talking about? The post just said 90 kW is enough to power 24 houses; no one said anything about kWh. That’s 90/24 kW per house of PEAK POWER, which has nothing to do with energy consumption.

According to Wolfram Alpha, 15 to 30 American homes. And if we were to split it across two dozen homes at peak power, it’s only 3.7 kW per house, or about two 15 A, 120 V circuits. So that’s like a stove, or a dryer and fridge, or the AC unit. Not that unbelievable. But no, not 90 kW continuously.
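The “about two circuits” comparison follows from P = V × I. A quick check, assuming nominal 120 V and both breakers loaded to their full 15 A rating; `circuit_watts` is a hypothetical helper.

```python
def circuit_watts(volts: float, amps: float) -> float:
    """Power available from one circuit: P = V * I."""
    return volts * amps

per_house_w = 90_000 / 24                    # each house's share of 90 kW
two_circuits_w = 2 * circuit_watts(120, 15)  # two 15 A, 120 V circuits

# How far the per-house share exceeds two fully loaded circuits.
ratio = per_house_w / two_circuits_w
print(f"{per_house_w:.0f} W vs {two_circuits_w:.0f} W ({ratio:.2f}x)")
# 3750 W vs 3600 W (1.04x)
```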

Jeri Ellsworth could put it on an FPGA, AND fit the whole thing nicely into a C64 keyboard!!!

…and she’d record a video tutorial on how to do it in layman’s terms…

…and upload it to OpenCores.

BTW, how come meteorology isn’t about meteors?

or meters?

That’s because you North Americans spell “metre” wrong.

The people who study the metre with dedication know this and thus call themselves “metrologists” rather than “meterologists”, the science being metrology.

@anon, no, we spell it as it is pronounced.

@belg4mit doesn’t make it less wrong :)

@Eirinn

Spelling wasn’t standardized on either side of the pond until after the colonies gained independence.

@anon,

A “metrologist” is NOT a weather forecaster! A metrologist studies “metrics”, i.e., measurements; therefore, a calibration technician is a metrologist.

A meteorologist is a weather forecaster; they study stuff that falls out of the sky, sort of like meteors, but water-based: snow, rain, hail.

b bu but then who’s left to study the poor Nash or Geo “metros”?

90 kilowatts! Need to invoke the Heisenberg uncertainty principle– or a variation thereof:

You can’t predict the weather without affecting it with the added heat!

Sure you can. You just use a computer that’s not on Earth.

But then you would just have a dead cat…or would you?

Anyone willing to take this on as a massive project? Maybe a long-term, cross-country hackerspace project? (Just to educate. Use current tech, SMDs, etc., and have each hackerspace work on one board at a time.)

Back of the envelope… IBM 360/95 = 330M multiplications/min = 5.5M/second. 100x that for this design gives 550M/s (not megaflops, but close enough…). Assuming going from 100 MHz (TTL max speed) to 400 MHz (FPGA), that’s 2.2 GFLOPS, which, with an i7 running ~100 GFLOPS, is not too shabby. If the system used 10 MHz TTL chips, the speedup could be even higher. Though switching from integer MUL to floating point carries a major penalty, and the 360/95 was only 14 bit to begin with.
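The chain of estimates above can be written out as a tiny calculator. Like the comment, it assumes throughput scales linearly with clock rate, and it ignores the integer-versus-floating-point penalty; `scaled_rate` is a hypothetical helper name.

```python
def scaled_rate(base_per_minute: float, speedup: float,
                old_clock_hz: float, new_clock_hz: float) -> float:
    """Operations/second after a design speedup and a clock change.

    Assumes throughput scales linearly with clock frequency.
    """
    per_second = base_per_minute / 60
    return per_second * speedup * (new_clock_hz / old_clock_hz)

# 360/95: 330M multiplications/min; design target: 100x that.
fpga_from_100mhz_ttl = scaled_rate(330e6, 100, 100e6, 400e6)
fpga_from_10mhz_ttl = scaled_rate(330e6, 100, 10e6, 400e6)

print(f"{fpga_from_100mhz_ttl / 1e9:.1f} G/s")  # 2.2 G/s
print(f"{fpga_from_10mhz_ttl / 1e9:.1f} G/s")   # 22.0 G/s
```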

Sorry, replied to the wrong comment.

Before porting this design to an FPGA, do a software simulation or emulation.

It would be interesting to see how this design stacks up vs. current weather systems at its 1970s design speed, then cranked up to run at current speeds.

So many suggestions for the FPGA version. Fantastic.

Papilio weather wing

FTW :-)

It would be cool if it ends up on OpenCores.

Given the sheer number of ICs, the anticipated temperature due to all that power dissipation, and the best failure rate vs. temperature stats they were able to get from Signetics, they estimated:

“…we should expect the system to operate for twenty-six to forty-five hours between failures…”

Troubleshooting a 210,000 IC computer every day or two? Sounds like fun. ;)
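Running that estimate backwards shows what it implies per part. This sketch assumes the textbook constant-failure-rate (exponential) model with every chip in series, so any single failure halts the machine; the report may have modelled it differently, and the helper names are hypothetical.

```python
import math

CHIPS = 210_000

def per_chip_rate(system_mtbf_hours: float, n: int = CHIPS) -> float:
    """Per-chip failure rate (per hour) implied by a system MTBF.

    Series model: any chip failing stops the machine, so the chip
    rates add up (lambda_sys = n * lambda_chip) and MTBF = 1 / lambda_sys.
    """
    return 1.0 / (n * system_mtbf_hours)

def p_survive(hours: float, system_mtbf_hours: float) -> float:
    """Chance of running `hours` with no failure, exponential model."""
    return math.exp(-hours / system_mtbf_hours)

for mtbf in (26, 45):
    fit = per_chip_rate(mtbf) * 1e9  # FIT = failures per 10^9 device-hours
    print(f"MTBF {mtbf} h: ~{fit:.0f} FIT per chip, "
          f"P(24 h without failure) = {p_survive(24, mtbf):.2f}")
```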

It’s all the Signetics 25120s that do it.

Did anyone manage to grab a copy of that PDF before they blocked it? (I’m getting a 403 forbidden response).

yup… first thing I did.

I would be willing to host the copy (assuming it’s legal to do so) if someone would provide it to me.

Nothing blocked here.

Same here, no block at all.

Maybe it was just contention caused by a couple dozen H.A.D. readers trying to pull the file all at once. Space Program cutbacks, you see; NASA can’t afford fast web servers now.

I ended up downloading my copy via Curiosity’s high gain antenna, seemed easiest. :D