Imagine you’ve got a bunch of people sitting around a table with their various mobile display devices, and you want these devices to act together. Maybe you’d like them to be peepholes into a single larger display, revealing different sections of the display as you move them around the table. Or maybe you want to be able to drag and drop across these devices with finger gestures. HuddleLamp lets you do all this.

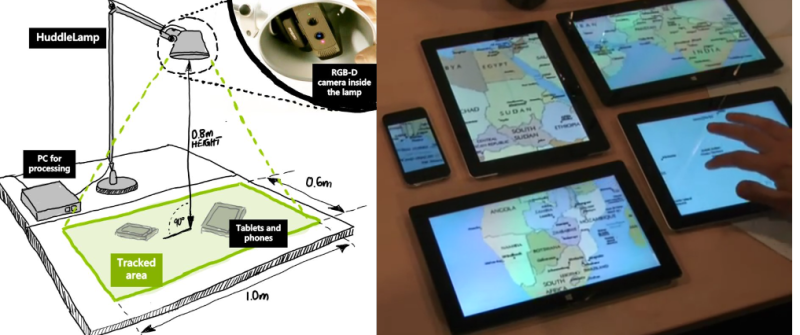

How does it work? Basically, a 3D camera sits above the tabletop, and watches for your mobile displays and your hands. Through the magic of machine vision, a server sends the right images to each screen in the group. (The “lamp” in HuddleLamp is a table lamp arranged above the space with a 3D camera built into it.)
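To make the "peephole" idea concrete, here is a minimal sketch of the geometry involved, assuming the tracker reports each screen's center, rotation, and physical size on the table. The `TrackedDevice` fields and both function names are hypothetical and are not taken from the HuddleLamp code; the sketch only shows how a point on a device's screen could be mapped into the shared display.

```python
import math
from dataclasses import dataclass


@dataclass
class TrackedDevice:
    # Hypothetical tracker output; all lengths in millimetres on the table.
    cx: float         # x of the screen center
    cy: float         # y of the screen center
    angle_deg: float  # counterclockwise rotation of the device
    width: float      # physical screen width
    height: float     # physical screen height


def screen_to_table(dev, u, v):
    """Map a point (u, v), measured from the screen center, to table coordinates."""
    t = math.radians(dev.angle_deg)
    x = dev.cx + u * math.cos(t) - v * math.sin(t)
    y = dev.cy + u * math.sin(t) + v * math.cos(t)
    return (x, y)


def viewport_corners(dev):
    """Return the four corners of the region of the shared display this device shows."""
    hw, hh = dev.width / 2, dev.height / 2
    local = [(-hw, -hh), (hw, -hh), (hw, hh), (-hw, hh)]
    return [screen_to_table(dev, u, v) for u, v in local]
```

A server could recompute these corners whenever the camera reports that a device has moved, and send that device the matching slice of the larger image.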

A really nice touch is that the authors also provide JavaScript objects that you can embed into web apps to enable devices to join the group without downloading special software. A new device will flash an identifying pattern that the computer vision routine will recognize. Once that’s done, the server starts sending the correct parts of the overall display to the new device.
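The joining step could be sketched roughly as follows. The post only says that a new device flashes an identifying pattern, so the encoding here (one bright or dark frame per bit, matched against patterns the server has handed out) is purely an assumption for illustration, and `decode_flashes` and `identify_device` are hypothetical names.

```python
def decode_flashes(brightness, threshold=0.5):
    """Turn per-frame brightness samples (0.0 to 1.0) into a tuple of bits."""
    return tuple(1 if b > threshold else 0 for b in brightness)


def identify_device(brightness, pending):
    """Return the id of the pending device whose pattern matches, or None.

    `pending` maps a device id to the bit pattern that device was told to flash.
    """
    bits = decode_flashes(brightness)
    for device_id, pattern in pending.items():
        if tuple(pattern) == bits:
            return device_id
    return None
```

For example, with `pending = {"tablet-a": (1, 0, 1, 1)}`, brightness samples of `[0.9, 0.1, 0.8, 0.7]` taken from one screen-shaped region would identify that region as `"tablet-a"`.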

The video, below the break, demonstrates the possible interactions.

If you want to dig deeper into how it all works together, download their paper (in PDF) and give it a read. It goes into detail about some of the design choices needed for screen detection and how the depth data from the 3D camera can be integrated with the normal image stream.

That’s very nice, but I fail to see a real use for it.

Anyone?

What about the stuff they’ve done? Quick sharing of tasks?

Use all your what-ever-platform-it-may-be devices and embiggen your working area :D

I don’t see a real use either. You lose big parts of the picture (and so information) to the bezels and the gaps between the devices. You can overlook something because of this.

BUT: It looks really nice and the initialisation of new devices looks really easy.

I agree that a lot of info is lost between the devices. I think the more interesting part of this is the easy, ad hoc joining of devices. Being able to share, say, a presentation or report with everyone at a table is the functionality that I like most about this.

Did you watch the video? I think the “continuous screen” over multiple devices is the least interesting feature.

I’ve never tried this, but I think it is similar to multi-screen PCs. It is often hard to see a real use if you’ve never tried it, but it is actually extremely useful. You’ll understand what I mean if you’ve ever worked on a multi-screen PC; it’s addictive.

So, a similar concept to this one presented last week in London :)

http://on.aol.com/video/extendernote-at-techcrunch-disrupt–london-2014-hackathon-518470061

Or this, which I wrote years ago:

http://extenderconcept.wikidot.com/english-version

Or to the demo of Li and Kobbelt presented at the MUM 2012 conference… Original paper here: http://dl.acm.org/citation.cfm?id=2406397&CFID=589227444&CFTOKEN=98602572

Or the Microsoft Surface multimedia coffee table…

https://www.youtube.com/watch?v=SRU3NemA95k#t=105

At around 1:45; it doesn’t link to the exact time :C

Actually, at 4:02 it’s shown better.

Nice. I wonder if they could make a sheet of paper into a “storage device”. Say I’m at the desk and have a file I want to leave for somebody. I “hook” a piece of paper into the device, write “Here’s your file, Bob.” on it, drop the file onto the paper (it’s stored on the huddle’s drive; the paper is just a token, whatever) and leave. When Bob comes by, he hooks his tab into the huddle, grabs the file from the paper and drops it onto his tab. Hey, it should work.

Or you could drop the file into an email.

What fun is that? Besides, the file only goes to the person or people who go to the table.

It looks like a 2D take on augmented reality, but really what needs to be demonstrated is 3D. For that, castAR is a much more optimized approach.

Sad that BlackBerry showed something very similar a few years back with their PlayBook, but never capitalized on it.

https://www.youtube.com/watch?v=CQMTqN1jTMg

I can’t tell you how many times I’ve been in a meeting and needed something like this – wait I can – ZERO

Those letters at 0:50 aren’t moving because they are animated; it’s jitter from the depth camera (a Creative Senz3D).

Wow, this is terrible: higher education (postdoc), magical math abilities… and they couldn’t figure out that their system has a shitton of JITTER that makes it unusable, and that simple filtering could remove it?

It’s not rocket science that items don’t shake constantly while lying on the table. How could anyone not notice that?!

Otherwise it’s a neat system (flicking content between devices, not the rest), but it could only work if someone (Google/Apple) built it into their ecosystem somehow, and definitely without outside sensors like that depth camera. Devices would have to estimate their orientation relative to one another on their own somehow. GPS sucks for that; maybe echolocation would work with three cooperating devices in the room? :)

Hey, chill, this is not intended to be a finished product. You’re right that jitter is not a very difficult problem to solve, which is probably why they decided to invest the bulk of their research efforts elsewhere.

It’s not hate, just critique.
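For reference, the kind of “simple filtering” discussed in this thread could be as small as an exponential moving average over each tracked position. This is only a sketch of that general technique, not something taken from the HuddleLamp code; `PositionSmoother` and its `alpha` parameter are hypothetical names, and a real system would have to trade smoothness against lag when a device is actually being moved.

```python
class PositionSmoother:
    """Exponential moving average over a stream of (x, y) positions."""

    def __init__(self, alpha=0.2):
        # alpha near 0 smooths heavily but lags; alpha = 1 disables smoothing.
        if not 0 < alpha <= 1:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self.state = None

    def update(self, x, y):
        """Feed one raw measurement and return the smoothed position."""
        if self.state is None:
            # The first sample initializes the filter.
            self.state = (x, y)
        else:
            sx, sy = self.state
            a = self.alpha
            self.state = (sx + a * (x - sx), sy + a * (y - sy))
        return self.state
```

Each tracked device would get its own smoother, fed once per camera frame, and the smoothed position would be used for rendering instead of the raw one.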
