If you’ve been keeping up with augmented and virtual reality news, you’ll remember that spatial haptic feedback devices aren’t groundbreaking new technology. You’ll also remember, however, that a professional system is notoriously expensive, on the order of several thousand dollars. Grad students [Jonas], [Michael], and [Jordi] and their professor [Eva-Lotta] form the design team aiming to bridge that hefty price gap by providing a design that you can build at home.

A quick terminology dive: a spatial haptic device is a physical manipulator that enables exploration of a virtual space through force feedback. A user grips the “manipulandum” (the handle) and moves it within the work area defined by the physical design of the device. Spatial haptic devices have been around for years and serve as excellent tools for telling their users (surgeons, for example) what something (such as a tumor) “feels like.”

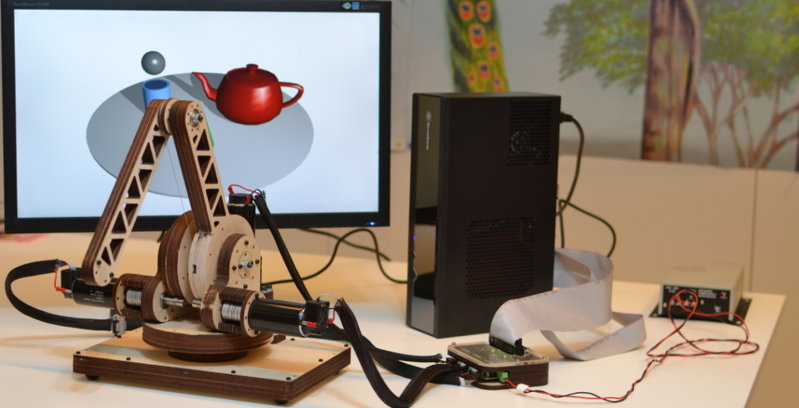

In this case, the haptic device is a two-link, two-joint system grounded on a base station that provides force feedback with servo motors and tensioned wire ropes. The manipulator supports three-degree-of-freedom movement of the end-effector (translations, but no rotations), which is tracked with encoders placed on all joints. To deliver the feedback, each joint is driven through a cable-drive transmission.
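Encoder readings only become a handle position after passing through the arm's forward kinematics, and the motors can only render a force once it has been mapped back to joint torques. The sketch below assumes a generic yaw-pitch-pitch arm (a rotating base plus two links) with hypothetical 15 cm links; the real geometry and kinematics are in the team's paper, and the function names are illustrative. The torque mapping uses the standard Jacobian-transpose relation τ = JᵀF.

```python
import math

def forward_kinematics(q, l1=0.15, l2=0.15):
    """End-effector position (x, y, z) in metres for joint angles q (radians).

    Assumed (hypothetical) geometry: q[0] yaws the whole arm about the
    vertical z-axis, q[1] pitches the first link up from horizontal, and
    q[2] is the elbow angle of the second link relative to the first.
    """
    t0, t1, t2 = q
    # Horizontal reach (r) and height (z) within the arm's vertical plane
    r = l1 * math.cos(t1) + l2 * math.cos(t1 + t2)
    z = l1 * math.sin(t1) + l2 * math.sin(t1 + t2)
    # Swing that plane around the vertical axis by the base yaw
    return (r * math.cos(t0), r * math.sin(t0), z)

def joint_torques(q, force, l1=0.15, l2=0.15):
    """Joint torques (N*m) that render a Cartesian force (N) at the handle,
    using tau = J^T F with the analytic Jacobian of forward_kinematics."""
    t0, t1, t2 = q
    r = l1 * math.cos(t1) + l2 * math.cos(t1 + t2)
    # Partial derivatives of reach and height with respect to q[1] and q[2]
    dr1 = -l1 * math.sin(t1) - l2 * math.sin(t1 + t2)
    dr2 = -l2 * math.sin(t1 + t2)
    dz1 = l1 * math.cos(t1) + l2 * math.cos(t1 + t2)
    dz2 = l2 * math.cos(t1 + t2)
    c0, s0 = math.cos(t0), math.sin(t0)
    # Columns of the 3x3 Jacobian: d(x, y, z)/dq_i
    columns = [
        (-r * s0, r * c0, 0.0),
        (dr1 * c0, dr1 * s0, dz1),
        (dr2 * c0, dr2 * s0, dz2),
    ]
    fx, fy, fz = force
    # tau_i = (column i of J) . F, i.e. row i of J^T times F
    return tuple(jx * fx + jy * fy + jz * fz for jx, jy, jz in columns)

# Arm stretched out horizontally, handle pushed straight up with 1 N
print(forward_kinematics((0.0, 0.0, 0.0)))
print(joint_torques((0.0, 0.0, 0.0), (0.0, 0.0, 1.0)))
```

Using the transpose rather than inverting the Jacobian is the usual choice for impedance-style haptic devices: it needs no matrix inversion and stays well defined near singular poses.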

The design team isn’t new to iterative prototyping. Hailing from CS235, a Stanford course that teaches prototyping techniques to otherwise non-tinkerers, the designers have drawn on numerous techniques from the course to deliver a fully functional and reproducible setup. It’s clear that they have a strong understanding of their system’s physics, and they capitalize on a few tricks that aren’t immediately intuitive. For instance, rather than rigidly fixing the cable to the motor shaft, they simply wrap it around the shaft five times, so that friction comfortably exceeds the force that would otherwise make it slip. They also chose plywood, not so much for its price as for its structure: it’s a stiff, layered composite, which makes it an ideal “lever arm material” for rigidly transferring forces.
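Why five turns are enough comes down to the capstan equation: the tension a wrapped cable can hold against grows exponentially with the wrap angle, T_load = T_hold·e^(μθ). Here is a minimal sketch, assuming a hypothetical friction coefficient of μ = 0.2 (the real value depends on the cable and shaft surfaces, and the article doesn't give one):

```python
import math

def capstan_ratio(turns, mu):
    """Maximum ratio of load-side to hold-side tension before the cable slips,
    from the capstan equation T_load = T_hold * exp(mu * theta)."""
    theta = 2 * math.pi * turns  # total wrap angle in radians
    return math.exp(mu * theta)

MU = 0.2  # hypothetical cable-on-shaft friction coefficient
for turns in (1, 2, 5):
    print(f"{turns} turn(s): load can exceed hold tension {capstan_ratio(turns, MU):.0f}x")
```

With this assumed μ, each extra turn multiplies the holding ratio by roughly 3.5, so five turns put it in the hundreds. That exponential growth is the sense in which friction "greatly exceeds" the slip threshold.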

For a full breakdown of their design, take a look at their conference paper (PDF), where they evaluate their design techniques and outline the forward kinematics. They’ve also provided a staggeringly comprehensive bill of materials (Google Spreadsheet). Finally, fittingly for open source hardware, they’ve packaged their control software and CAD models into a GitHub repository, so that you too can jump into the world of quality force feedback simulation without shelling out twenty thousand dollars for a professional system.

[via the 2015 Tangible, Embedded and Embodied Interaction Conference]

Yes. Yes. Yes. Yes. Yes. Yes. Yes. Yes. Yes. Yes. Yes. Yes. Yes. Yes. Yes. Yes. Yes. Yes………Yes.

I'm not trying to be negative, but I don't see it taking off anywhere… It’s not that they’re “notoriously expensive”: Novint broke that wall and made an affordable one years ago. The problem with 3D haptic devices is that no one develops for them.

And here I was going to lament my having had to choose between the Chinese knockoff Makerbot and the cheap Chinese laser cutter earlier today, and choosing the former… Then I looked at the price on this thing’s BOM. Three times the cost of both pieces of equipment combined! So I guess it’s pretty moot. ┐(-。ー )┌

You can probably find some cheaper parts to bring the cost down. They list a 48V@5-9A power supply at $150; you could probably find a cheaper import or use a couple of smaller power supplies instead. (http://www.aliexpress.com/item/Free-Shipping-400W-48V-8-3A-Regulated-Switching-Power-Supply-For-LED-Light-Surveillance-Camera-AC/1872692643.html)

Democratize? Artspeak. Ugh.

+1

“The components mentioned here carry a cost of about 3000 USD.”

I can give you about three-fiddy for it…

And here is a robot wielding a chainsaw:

https://www.youtube.com/watch?v=Haz7rilcjHA

Also, I have invented a machine which turns gold into lead. The process is not reversible and requires both a nuclear power plant and a particle accelerator. :P

On a more serious note, wood is one of those materials which humans know really well. It might be difficult to fake it in virtual reality haptics. Rapid prototyping of actual wooden objects, like oar handles for a rowing boat simulator, might be the simplest way to accomplish this.

I think you might be confusing “haptic” with “tactile”. This project (AFAIK) doesn’t attempt to simulate the surface texture of an object, but rather the weight and inertia of holding and moving said object. To do tactile feedback, I’ve heard of covering a glove with tiny piezo actuators, and vibrating them at different rates to simulate the surface texture of objects. A “poor-man’s” version could use surplus cell-phone vibration motors.

The chainsaw robot is cool, though. I wonder how many custom log carvings you’d have to sell before such a setup paid for itself…

Didn’t know there was a difference. Thanks.

Pressing one of my more calloused fingertips against wood and holding it motionless, I feel only pressure, and that the material does not readily conduct heat away from my finger. That is characteristic of wood, but of other materials as well.

Rubbing the fingertip against the wood, I cannot directly feel the texture through the callus, unless there’s a major flaw. But at this point, I can be fairly certain it’s wood. Why? Because I recognize the characteristic vibrations produced by rubbing against the surface, which are transmitted through the callus. It’s almost as if the nerves in the fingertip are “hearing”.

Now, as you said, I too have heard of “covering a glove with tiny piezo actuators, and vibrating them at different rates to simulate the surface texture of objects”. But AFAIK, this has only been done with simple frequencies, typically with a single frequency assigned to each material: 10 Hz might be plastic, 5 Hz wood, and so on. It doesn’t really feel anything like the material in question, just a buzzing, and you have to learn to recognize which frequency represents which material.

What if you were to play back a complex waveform instead? Mount a piezo on a piece of wood, rub a fingertip against it, and record the vibrations produced. Would playing this back through a fingertip piezo give a more convincing illusion of touching wood? Again, AFAIK, it hasn’t been done. If it works, then it should be possible to design an algorithm that accepts a surface roughness parameter, as well as pressure and motion, and generates complex and appropriate vibrations dynamically. Expanding the algorithm with other parameters, like stiction, could allow simulation of more materials.
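The recorded-waveform idea needs real recordings, but the parametric half of the proposal can be sketched. The model here is a hypothetical one, not a published method: a texture is treated as a grating with spatial period `wavelength`, so sliding at speed v produces a vibration at frequency v/wavelength; pressure scales the amplitude; and a `roughness` parameter adds odd harmonics that sharpen the waveform. A motionless finger produces no vibration, matching the observation that pressing without rubbing registers only pressure.

```python
import math

def texture_vibration(speed, pressure, roughness, wavelength=0.001,
                      sample_rate=10_000, duration=0.05):
    """Actuator drive samples for a simulated sliding contact (hypothetical model).

    speed:      fingertip sliding speed (m/s)
    pressure:   normal force (N); scales the amplitude linearly
    roughness:  0..1; weight of the odd harmonics (0 = pure sine)
    wavelength: assumed spatial period of the surface texture (m)
    """
    freq = speed / wavelength  # temporal frequency of the grating (Hz)
    n_samples = int(round(sample_rate * duration))
    out = []
    for n in range(n_samples):
        phase = 2 * math.pi * freq * n / sample_rate
        # Fundamental plus 3rd and 5th harmonics: rougher means squarer edges
        wave = math.sin(phase) + roughness * (math.sin(3 * phase) / 3
                                              + math.sin(5 * phase) / 5)
        out.append(pressure * wave)
    return out

# Sliding at 5 cm/s over a 1 mm texture gives a 50 Hz buzz
samples = texture_vibration(speed=0.05, pressure=2.0, roughness=1.0)
print(len(samples), max(samples))
```

Swapping the harmonic sum for a stored recording indexed by sliding distance would move this closer to the playback experiment described above.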

And try adding a Peltier element to it, so you can feel heat being drawn away from the finger (or added, if the material is hotter).

Acoustic properties of many materials change with temperature too… Interesting.

http://factorial.hu/plugins/lv2/ir is a program that takes a recorded impulse response (IR) and convolves audio inputs with it. One example of an IR is the rolling thunder that a lightning strike produces: shape and material information from the landscape is encoded in the thunder.
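Convolution is the operation that "plays" a signal through a recorded response: each input sample launches a scaled, delayed copy of the impulse response, and the copies are summed, y[n] = Σₖ h[k]·x[n−k]. A minimal direct-form sketch follows. Applying it to haptics (convolving a contact excitation with a response recorded from a material) is only the hypothesis floated in this thread, not something the linked plugin does, and practical convolvers typically use FFT-based methods for long responses.

```python
def convolve(signal, impulse_response):
    """Direct-form discrete convolution: y[n] = sum_k h[k] * x[n - k].

    Each input sample launches a scaled, delayed copy of the impulse
    response; the output is the sum of all copies (length len(x)+len(h)-1).
    """
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, x in enumerate(signal):
        for k, h in enumerate(impulse_response):
            out[i + k] += x * h
    return out

# A single click excites the whole response; two clicks overlap and add
response = [0.5, 0.25, 0.125]  # hypothetical decaying "material" response
print(convolve([1.0, 0.0, 0.0], response))
print(convolve([1.0, 1.0], response))
```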

http://en.wikipedia.org/wiki/Joint_(audio_engineering)#M.2FS_stereo_coding describes a way to extract position information from a stereo recording.
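Mid/side coding re-expresses a stereo pair as its sum and difference. With the convention M = (L+R)/2 and S = (L−R)/2 (some references scale by 1/√2 instead, or not at all), a source panned dead center has S = 0, and the magnitude of S relative to M grows as the source moves toward one side, which is how the transform exposes position information. A minimal sketch:

```python
def ms_encode(left, right):
    """Convert left/right samples to mid (half-sum) and side (half-difference)."""
    mid = [(l_s + r_s) / 2 for l_s, r_s in zip(left, right)]
    side = [(l_s - r_s) / 2 for l_s, r_s in zip(left, right)]
    return mid, side

def ms_decode(mid, side):
    """Invert ms_encode: L = M + S, R = M - S."""
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right

# A centered source (identical channels) has no side component
mid, side = ms_encode([0.5, -0.25], [0.5, -0.25])
print(mid, side)
```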

The topic of http://en.wikipedia.org/wiki/Dimensionality_reduction seems to categorize my rant. So, some data mining stuff while we’re groping in the dark: https://code.google.com/p/semanticvectors/wiki/RandomProjection
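Random projection, the technique on the linked Semantic Vectors page, reduces dimensionality by multiplying each vector by a random matrix; the Johnson-Lindenstrauss lemma guarantees that pairwise distances are approximately preserved with high probability. What follows is a minimal dense Gaussian sketch; the linked project may use a different random construction, and the `distance` helper is just for illustration.

```python
import math
import random

def random_projection(vectors, k, seed=0):
    """Map d-dimensional vectors to k dimensions with a random Gaussian matrix.

    Entries are drawn from N(0, 1) and scaled by 1/sqrt(k) so that squared
    lengths (and hence pairwise distances) are preserved in expectation.
    """
    d = len(vectors[0])
    rng = random.Random(seed)  # fixed seed: the same matrix on every call
    scale = 1.0 / math.sqrt(k)
    matrix = [[rng.gauss(0.0, 1.0) * scale for _ in range(d)] for _ in range(k)]
    return [[sum(w * x for w, x in zip(row, v)) for row in matrix] for v in vectors]

def distance(a, b):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Two random 1000-dimensional points squeezed into 300 dimensions
point_rng = random.Random(1)
points = [[point_rng.uniform(-1.0, 1.0) for _ in range(1000)] for _ in range(2)]
low = random_projection(points, k=300)
print(distance(*points), distance(*low))
```

Both vectors must be projected with the same matrix for distances to be comparable, which is why the function takes a batch and a fixed seed.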

Reminds me, blind people might want VR too.

Very interesting ideas, there… I would think the actual recording of the vibrations would have to be more involved than that, though. I’m thinking some kind of prosthetic finger, with a surface very much like actual flesh, including some kind of fingerprint, and a sensor inside.

BTW, your comment reminded me of this.

UPenn has an open-source project (http://haptics.seas.upenn.edu/index.php/Research/Haptography) where they do some crazy math to replicate the feel of textures using a modified stylus and touchscreen. The demos I saw used a heavily weighted LRA (linear resonant actuator, basically a speaker coil with a weight instead of a cone) on a stylus for 2D, and something like a Novint Falcon for rendering the surface on a simulated 3D sphere. I’ve also played a bit with texture and inertia rendering using piezo haptic actuators sourced from my day job :-) (http://tim.cexx.org/?p=1129)

Hey everyone, thanks for the comments.

I realized that there are a lot of great points brought up here, many of which I neglected to bring up in the first post.

Yes, I agree that $3000 is still above the price I’d drop into a whimsical project. Before posting, I thought: “Unless haptics are your thing, most readers wouldn’t even consider buying the setup. In that sense, what can a casual reader actually get from this project?”

The magic behind this project is twofold. First, their build demonstrates some quality engineering techniques with conventional components, parts that any of us could source. Without building their setup, we can still download their 3D model, view their parts list, and think to ourselves: “Oh, that’s how they did it.” The techniques here (press-fits, cable transmissions) don’t come up every day and could be adopted by any of us in a future project. Second, this project is well-documented. With other open source hardware designs, it often takes an experienced hardware hobbyist or hacker to recreate the setup from schematics, BOMs, and Eagle files. In this case, the designers put serious effort into ensuring that most people without hardware experience could still build this setup from its parts.

I also suspect that we don’t see many developers of haptic interfaces because a quality setup is so costly, not because it isn’t interesting, and I’m convinced that the designers are on the right path to bring this technology to more labs and serious enthusiasts. That said, I also admit that it isn’t quite as easy to perceive the “cool” factor in the promises made by a haptic setup (“experience what a virtual object feels like”) as in, let’s say, those made by a 3D printer (“duplicate a design as a model that exists in the real world”). If you have a chance to try one out, I’d highly encourage it. With a haptic setup, yes, we can feel the outline of a virtual object, but we can also perceive different textures, and even experience forces that don’t exist in our universe.
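Feeling "the outline of a virtual object" usually comes down to a servo loop that reads the handle position, computes a force, and commands it, over and over at a high rate. The classic first example is a virtual wall modeled as a stiff spring (often with some damping) that pushes back only while the handle is inside it. Here's a minimal one-axis sketch; the stiffness and damping values are hypothetical, and on a real device the resulting Cartesian force would still have to be converted into joint torques.

```python
def virtual_wall_force(z, vz, wall_z=0.0, stiffness=1000.0, damping=2.0):
    """Upward force (N) pushing the handle back out of a horizontal virtual wall.

    z, vz:     handle height (m) and vertical velocity (m/s)
    wall_z:    height of the wall surface (m); free space is above it
    stiffness: hypothetical spring constant (N/m)
    damping:   hypothetical damping coefficient (N*s/m)
    """
    penetration = wall_z - z
    if penetration <= 0.0:
        return 0.0  # above the wall: no contact, no force
    force = stiffness * penetration - damping * vz
    return max(force, 0.0)  # a wall can push but never pull

# Handle moving down through the wall surface
for z in (0.005, 0.0, -0.002, -0.01):
    print(z, virtual_wall_force(z, vz=-0.1))
```

Clamping the force at zero keeps the damping term from making the wall "sticky" when the handle is pulled back out quickly.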

Lastly, I had a chance to catch up with some of the designers at TEI last weekend, and they agree that the price is still too high. On that note, they’re currently working to reduce the price further. In fact, at the conference, they were demonstrating a newer version with a custom control board that cut out the expensive DAQ entirely.

Neat build, really. You can quibble about whether it’s canonical haptic/tactile or cheap enough, but it’s really well made.

Going down the parts list, a lot of the expense is for tools and things that can be had more cheaply. For example, their €92.06 arbor press can be had for about $40 full price at Harbor Freight (HF).

Good to see quality design and assembly from these kids.

Well over half the cost is in the motors (€406 each, three required) and the I/O card for the PC (€793). Get rid of those and you’re at more like €1000.

The I/O card may well be replaceable with one of the cheap ST microcontroller dev boards. The STM32F3-Discovery is more like €10, and it’s got 16-bit ADCs (and a bunch of 12-bit ADCs), DACs, timers, etc. Just needs someone to write code to use it.

The motors are harder; does anyone know where to get good motors with decent encoders for a low price? I gather that the Maxon motors are fairly fancy (nice torque characteristics, low friction). Perhaps a brushless gimbal motor/controller and a cheap magnetic or optical 1000 PPR encoder would work? US Digital sells a nice encoder (the MA3) for about $60 – $70 (depending on options), and while that’s expensive it’s still way cheaper than the Maxon ones.

I can’t speak for motors, but for encoders, I’ve used CUI’s capacitive encoders (the AMT103, 2048 CPR and up to 7500 RPM) without issue, and they sell for about $24 on Digikey.

Paul Stoffregen uses one in his encoder library test video:

https://www.youtube.com/watch?x-yt-ts=1421782837&x-yt-cl=84359240&v=2puhIong-cs

AMT103 Datasheet:

http://www.cui.com/product/resource/amt10-v.pdf
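Whichever encoder ends up on the joints, an incremental encoder like the AMT103 outputs two square waves (A and B) 90° out of phase, and the DAQ or microcontroller has to decode them into a position count. Below is a minimal software sketch using the common lookup-table approach: one count per edge on either channel ("4x" decoding), with simultaneous changes on both channels flagged as errors. `counts_per_rev` is an assumption to take from the datasheet: if resolution is quoted per channel (pulses per revolution), full 4x decoding yields four times that many counts. Many microcontrollers, the STM32 family included, can do the same job in hardware with a timer in encoder mode.

```python
# Count change for each (previous, current) pair of 2-bit states,
# where state = (A << 1) | B. The forward Gray sequence is 0 -> 1 -> 3 -> 2 -> 0.
# Index = (previous << 2) | current; 0 means no change or an invalid jump.
_STEP = [
     0, +1, -1,  0,   # previous 0b00
    -1,  0,  0, +1,   # previous 0b01
    +1,  0,  0, -1,   # previous 0b10
     0, -1, +1,  0,   # previous 0b11
]

class QuadratureDecoder:
    def __init__(self, counts_per_rev, a=0, b=0):
        self.counts_per_rev = counts_per_rev  # quadrature edges per revolution
        self.state = (a << 1) | b
        self.count = 0
        self.errors = 0  # both channels changed at once: a step was missed

    def update(self, a, b):
        """Feed one sample of the A and B channels (0 or 1 each)."""
        current = (a << 1) | b
        step = _STEP[(self.state << 2) | current]
        if step == 0 and current != self.state:
            self.errors += 1
        self.count += step
        self.state = current

    def angle_degrees(self):
        return 360.0 * self.count / self.counts_per_rev

# One full forward Gray-code cycle (4 edges) on a hypothetical 8-count encoder
dec = QuadratureDecoder(counts_per_rev=8)
for a, b in [(0, 1), (1, 1), (1, 0), (0, 0)]:
    dec.update(a, b)
print(dec.count, dec.angle_degrees())
```

In software this only works if the channels are sampled faster than edges arrive; at high shaft speeds, hardware decoding is the safer bet.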

those are neat, thanks

https://www.youtube.com/watch?v=cdiZUszYLiA

This documents a method to make do without an encoder. It doesn’t work for 0 RPM speeds, but with planetary gears you could possibly keep motors always running…

Mike (co-author) here. You, sir, get a tip of the hat. I have been searching for at least two years for a cheap but small encoder with a center bore. I never thought to try non-optical encoders, since I didn’t realize that capacitive encoders could be so high-res and stable. I’ll pick up a few of these. I’d also point out the lower-resolution options from andymark.com, which would probably still work fine for this application ($40 each).

” a spacial haptic device is a physical manipulator that enables exploration of a virtual space through force feedback. A user grips the “manipulandum” (the handle) and moves it within the work area defined by the physical design of the device.”

Is this something thats done to Eva-Lotta or does a Lotts-Eva get in the way?

Hi, Jonas here, one of the authors of this kit. Thank you so much, Joshua, for the article, and thanks to everyone for the nice comments! It’s awesome that you are already scrutinizing the BOM for ways to cut costs; I’m happy to learn where we could find more cost-effective parts, and we’ll update the list (after testing) as we go along. The idea has been to start with high-quality, expensive components and then cut costs wherever we can do so without compromising quality. That certainly applies to the DAQ, which, as mentioned, we are already working on replacing with an mbed LPC1768 (though we have to test this carefully to avoid potential USB delay issues).

Speaking of expensive stuff, what could be a good alternative to SolidWorks? Even student edition is €120/year (real one is €6000+) and mine is running out in 2 days – could be a good opportunity to try something else…

I don’t know much about the closed source alternatives… The open source ones are not very good but development is rapid. For example, the various ways to extrapolate from existing geometry are largely missing.

The community behind the 3D printed guns are the most active in this field and they seem to be creating a large directory of open parts which are not gun-related. This directory could become a go-to resource for makers one day.

For the price of a single SolidWorks license you could hire a programmer to work on one of the existing open source CAD packages. Organizing this through https://www.bountysource.com/ could prove lucrative. What Bountysource sorely needs is a big-budget precedent that makes the rounds in the media, the way Kickstarter did.

A bounty is an interesting idea. With FreeCAD (or similar) as the base, it could be worth exploring.

FreeCAD is a workable alternative. The more I work with it, the more useful it becomes. When a design creation doesn’t work exactly as I expect it to, the forum always comes through promptly and usefully. Can’t ask for more! Having used ProE (but not Solidworks), I know even professional (read: $$$$$) packages have quirks – and support isn’t nearly as easy to get.

Really nice project!

I would go for FreeCAD as well: it’s usable for most development work, and the FreeCAD forum is very active and helpful. It would also fit well with the idea of open source hardware, and I am sure the FreeCAD folks would be interested to see this project developed further on their software…