Hardware wallets are devices used exclusively to store the highly sensitive cryptographic information that authenticates cryptocurrency transactions. They are useful if one is worried about the compromise of a general purpose computer leading to the loss of such secrets (and thus loss of the funds the secrets identify). The idea is to move the critical data away from a more vulnerable network-connected machine and onto a device without a network connection that is unable to run other software. When designing a security-focused hardware device like a hardware wallet, it’s important to consider what threats need to be protected against. More sophisticated threats warrant more sophisticated defenses, and at the extreme end these precautions can become highly involved. In 2015, when [Jochen] took a look at his TREZOR hardware wallet, he discovered that perhaps not all the precautions had been considered.

Let’s start with a discussion of some common threats to a microcontroller and how they might be mitigated. When developing firmware it’s pretty common to use a serial console to print debug messages. If that console is still enabled when the final product ships, you obviously shouldn’t print out sensitive information (like private keys) during operation, lest someone connect a serial adapter and see the messages! Of course you can try hiding the physical pins the serial console is connected to, but the common wisdom about such “security by obscurity” is that it is ineffective and thus insufficient. What about wherever you physically store the data? If it’s in an external device (SD card, NAND/NOR flash, EEPROM, etc.), can someone tap the lines between that device and the microprocessor and watch the key-related traffic pass by? Can they just remove the external storage and read it themselves? They probably can, in which case you can encrypt the data, but then where do you put that key? Some microcontrollers have “one time programmable” (OTP) flash onboard for saving such secrets. For many products, storing keys in OTP, disabling debug access, and turning off the debug console is sufficient. But for a product like a hardware wallet, whose primary feature is security, more stringent measures are required.

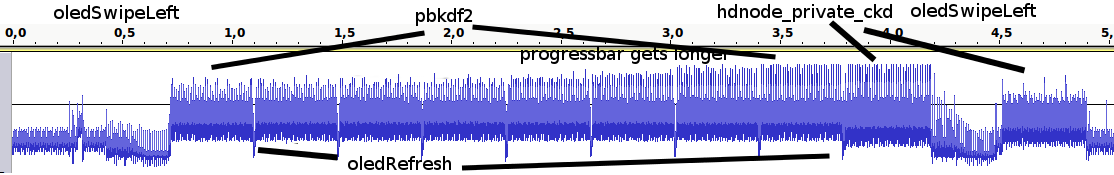

Side channel attacks exploit a system’s physical behavior (its power consumption, timing, electromagnetic emissions, and so on) rather than flaws in the software running on it. The full scope of possible side channels is quite wide, but in this case we’re interested in a technique called power analysis, which uses the power draw to infer what code a microcontroller is running and what data it is handling. When a CPU is executing code, its power consumption changes minutely depending on what instructions it’s running and on the data those instructions process. With sufficiently sensitive tools (an oscilloscope and shunt resistor can be good enough) you can measure these fluctuations over time and capture a detailed picture of what the CPU is up to. There are both software and hardware countermeasures to protect against power analysis, but those are somewhat out of scope for the moment.
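To make "what data it is handling" concrete, here is a minimal simulation of correlation power analysis. It assumes a textbook leakage model in which each power sample is proportional to the Hamming weight (number of set bits) of a value the CPU handles, plus Gaussian noise. The device, the `SECRET_KEY_BYTE` it XORs with each input, and the noise level are all hypothetical; real attacks usually target a nonlinear step such as an S-box output, and none of this reproduces the TREZOR analysis.

```python
import random

SECRET_KEY_BYTE = 0x3C  # hypothetical secret standing in for key material inside the device


def hamming_weight(x: int) -> int:
    """Number of set bits: a common first-order model of data-dependent power."""
    return bin(x).count("1")


def measure(plaintext: int, rng: random.Random) -> float:
    """Simulated power sample while the device computes plaintext ^ key.

    Assumption: consumption is proportional to the Hamming weight of the
    intermediate value, plus Gaussian measurement noise."""
    return hamming_weight(plaintext ^ SECRET_KEY_BYTE) + rng.gauss(0.0, 1.0)


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5


def recover_key_byte(plaintexts: list[int], traces: list[float]) -> int:
    """Correlate each key guess's predicted leakage with the measured traces
    and return the guess whose prediction matches best."""
    scores = {}
    for guess in range(256):
        predicted = [hamming_weight(p ^ guess) for p in plaintexts]
        scores[guess] = pearson(predicted, traces)
    return max(scores, key=scores.get)


rng = random.Random(2015)
plaintexts = [rng.randrange(256) for _ in range(500)]
traces = [measure(p, rng) for p in plaintexts]
print(hex(recover_key_byte(plaintexts, traces)))
```

Each key guess predicts a different leakage pattern, and only the correct guess lines up with the measurements well enough to win; with no countermeasures in the model, 500 simulated traces are plenty.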

Back to [Jochen’s] TREZOR. At the time of their post, the device worked by storing a seed value which was used to compute public and private keys. When it was connected to a computer it could be asked to provide public keys to participate in transactions. [Jochen] noticed that if they monitored the device’s power consumption with a cheap scope and shunt resistor, the algorithms that generated the keys were easy to discern. The display on the device obscured the CPU’s activity somewhat, but during certain phases of the process it was possible to observe power spikes caused by the algorithms used to compute the public and private keys from the original seed. By counting the timing of these fluctuations and using a reference power signature from another device, it was possible to recover the series of 1s and 0s which comprised the private key, at which point the wallet’s protection is defeated. [Jochen’s] concern was that an attacker could snag a TREZOR, watch while it quickly generated keys, then return it without the owner noticing. Take a read through [Jochen’s] post for a much more thorough explanation of what’s going on.
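The post has the details of what [Jochen] actually measured. As a generic illustration of why a key-dependent sequence of operations is dangerous, here is a sketch of simple power analysis against textbook left-to-right double-and-add scalar multiplication. Assumptions: integers modulo `n` stand in for elliptic-curve points, and the attacker can tell a double from an add by its power signature; the `ops` list plays the role of that trace. This is not the TREZOR firmware’s code.

```python
import random


def double_and_add(k: int, p: int, n: int, ops: list[str]) -> int:
    """Left-to-right double-and-add computing k*p in the additive group Z_n.

    Integers mod n stand in for elliptic-curve points. Each operation is
    logged to `ops`, modelling an attacker who can tell a double ('D') from
    an add ('A') by its power signature."""
    r = 0
    for bit in bin(k)[2:]:
        r = (r + r) % n
        ops.append("D")
        if bit == "1":  # key-dependent branch: the add only happens for 1 bits
            r = (r + p) % n
            ops.append("A")
    return r


def recover_scalar(ops: list[str]) -> int:
    """Rebuild the scalar from the operation sequence: a double followed by
    an add encodes a 1 bit, a lone double encodes a 0 bit."""
    bits = []
    i = 0
    while i < len(ops):
        if ops[i] != "D":
            raise ValueError("every bit should begin with a double")
        if i + 1 < len(ops) and ops[i + 1] == "A":
            bits.append("1")
            i += 2
        else:
            bits.append("0")
            i += 1
    return int("".join(bits), 2)


rng = random.Random(0)
n = 2**61 - 1  # toy modulus; any modulus works for this stand-in group
secret = rng.getrandbits(256) | (1 << 255)  # hypothetical private scalar
ops = []
double_and_add(secret, 9, n, ops)
print(recover_scalar(ops) == secret)
```

Because the add only runs for 1 bits, the shape of the trace spells out the key.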

“By counting the timing of these fluctuations and using reference a power signature from another device it was possible to recover the series of 1s and 0s which comprised the private key, at which point the device is ineffective. [Jochen’s] concern was that an attacker could snag a TREZOR, watch while it quickly generated keys, then return it without the owner noticing. ”

Timing attack in more ways than one.

Are you trying to encrypt currency?

Eww. “Secretes” means to produce and discharge (a substance). I think you meant “secrets.” Unless you meant the hardware wallet secretes secrets.

Haha, I guess it _did_ sort of secrete them…

Fixed. Thanks!

Some of these products are better thought of as secretions anyway. :-)

I believe you mean excretions…..

My wallet doesn’t secrete anything, it takes a crowbar to get me to open it…

B^)

So this is an article from 2015, and the issue was resolved in a firmware update before the post in question went live:

https://github.com/trezor/trezor-mcu/commits/master?after=3185eb0fd444701e2bc976555545fe08d572c957+769

It’s almost as if things are just being reblogged here because they appeared somewhere else (this was reposted on Hacker News a few days ago, again…)

They employed the guy btw

With the recent censorship and undisclosed edits happening around here, things are becoming concerning.

Yep! He seems to have done a great job following responsible disclosure guidelines (and TREZOR did a great job taking him seriously and actually fixing it!).

You got me: I definitely found this on Hacker News and thought it was a decent intro to using power analysis. Though the content is older, it seemed like a good excuse to introduce some HaD readers to a class of vulnerability they might not have known about.

If you consider yourself a journalist, you ought to do some research before publishing.

Otherwise it is just clickbait, but maybe that’s your job description.

Totally disagree with you.

The article is a very good intro into what a side channel analysis is. And whether the example is three years old or not is totally irrelevant towards its pedagogical value (I think this case is an excellent choice for Hackaday).

Besides, your “criticism” style is borderline trollish. Disgusting.

Totally agree with you.

I had never heard of power analysis as an angle of attack, and yes, this post introduced me to the concept. I was under the impression that TREZOR has this vulnerability. Only after reading the comments did I learn that TREZOR had patched it. So yes, this is in fact poor journalism: a reposted article with no additional research of his own. This is basically equivalent to copy-paste.

You don’t seem to understand journalism or blogs, in general, or this blog in particular. So I don’t understand why you think your assertions deserve serious consideration.

You also, apparently, don’t understand that you aren’t the center of anyone else’s universe (your mom excepted, possibly). It is your responsibility to deal with duplicates among the sites you choose to read. The authors on those sites can’t and shouldn’t be worrying about what you, in particular, have seen. If you find that some sites have too much overlap with other sites, it’s your responsibility to cull your reading list.

The article is a very good write up and was definitely link worthy. He demonstrated a very low budget side channel attack very clearly.

I liked the article, information like this never gets old ;)

In the linked article, the author says he disclosed the vulnerability to the Trezor makers and they fixed the issue by adding a PIN for generating private keys. The idea of the article is to show how side channel attacks work, not how to steal someone’s bitcoins, so I think it’s perfectly OK to feature it on HaD.

> This idea of the article is to show how side-channel attacks work, not how to steal someone’s bitcoins, so I think it’s perfectly ok to feature it on HaD.

There are better ways of demoing side channel attacks. The Hacker News comments from people who wrote the tools used show the issues with this particular investigation. You’re only seeing this here because it was reposted elsewhere.

You make the assumption that everyone patched their firmware.

I play Hacker News bingo, with points for the links reposted on HaD a suitably discreet time later ;-)

Couldn’t this be worked-around by providing sufficient low-ESR capacitance to de-couple this noise from the external supply? Maybe disconnect from the USB voltage rail and run from a supercapacitor temporarily during sensitive operations so all the spies see is a capacitor charging and discharging?

A properly designed power supply costs money. Also, the power supply needs to be inside the tamper-detection envelope. (BTW, I didn’t look at the design of this device to know whether it even has any sort of tamper detection.)

The worst case for defense against power analysis attacks is the smart card: the attacker can probe the chip pins directly and there’s very limited scope for on-chip filtering. It’s important to design the logic so that the observable power consumption or timing does not depend on the bits in the key.
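One textbook way to make the sequence of operations independent of the key bits is the Montgomery ladder. The sketch below uses a toy setting: integers modulo `n` stand in for elliptic-curve points, and the `ops` list records the sequence of operations a power trace might reveal. It illustrates the principle the comment describes and is not a hardened implementation.

```python
import random


def montgomery_ladder(k: int, p: int, n: int, bits: int, ops: list[str]) -> int:
    """Compute k*p in Z_n with a Montgomery ladder over a fixed bit length.

    Every bit performs one add followed by one double, whichever value the
    bit has, so the logged sequence depends only on `bits`. Caveat: the
    Python `if` is still a key-dependent branch; hardened code uses a
    branch-free conditional swap and constant-time arithmetic instead."""
    r0, r1 = 0, p % n  # invariant: r1 == r0 + p (mod n)
    for i in reversed(range(bits)):
        if (k >> i) & 1:
            r0 = (r0 + r1) % n  # add
            r1 = (r1 + r1) % n  # double
        else:
            r1 = (r0 + r1) % n  # add
            r0 = (r0 + r0) % n  # double
        ops.extend(["A", "D"])  # one add, one double on either branch
    return r0


n = 2**61 - 1  # toy modulus
rng = random.Random(1)
k1, k2 = rng.getrandbits(64), rng.getrandbits(64)  # two hypothetical keys
ops1, ops2 = [], []
montgomery_ladder(k1, 9, n, 64, ops1)
montgomery_ladder(k2, 9, n, 64, ops2)
print(ops1 == ops2)  # True: the sequence reveals nothing about which key ran
```

Iterating over a fixed bit length (leading zeros included) also keeps the trace length from leaking the key’s size.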

IIRC, RAMBUS holds / held many of the patents regarding defenses against power analysis attacks.

Smartcard attack and defense got a lot of interesting workout during the period when using smartcards for cable TV conditional access was a big thing.

Those had particularly tricky power supply side channel problems because their EEPROMs either needed an external high voltage (trivial for an attacker to deny just by covering the appropriate contact, thus preventing the device under attack from zeroing itself) or an onboard charge pump. Charge pumps can’t easily be tampered with without decapping and some tools, but they mean a suspicious spike in current shortly before an EEPROM state change. If you are trying to avoid tripping a bad-retry counter or the like, that spike is your cue to shut the thing down before it can finish.

Really quite a challenge unless the attacker’s options are carefully limited.

The problem with that is power in, power out: added capacitance helps supply a gulp of current, but it also takes a gulp of current to recharge.

A smart move would be something like filtering: either RC, to limit that capacitor’s ability to recharge, or, more realistically, LC, using an inductor to help filter out spikes.
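For a rough feel of these filters, the corner frequency of a simple RC low-pass is 1/(2πRC), and that of a second-order LC low-pass is 1/(2π√(LC)). The sketch below just evaluates those formulas; the component values are hypothetical, and it ignores source and load impedance, ESR, and the recharge transients discussed above.

```python
import math


def rc_cutoff_hz(resistance_ohm: float, capacitance_f: float) -> float:
    """-3 dB corner of a first-order RC low-pass: 1 / (2*pi*R*C)."""
    return 1.0 / (2 * math.pi * resistance_ohm * capacitance_f)


def lc_cutoff_hz(inductance_h: float, capacitance_f: float) -> float:
    """Corner (resonant) frequency of a second-order LC low-pass: 1 / (2*pi*sqrt(L*C))."""
    return 1.0 / (2 * math.pi * math.sqrt(inductance_h * capacitance_f))


# Hypothetical component values, chosen only for illustration
print(round(rc_cutoff_hz(1.0, 100e-6)))    # 1 ohm, 100 uF -> 1592
print(round(lc_cutoff_hz(10e-6, 100e-6)))  # 10 uH, 100 uF -> 5033
```

Above its corner, an ideal LC stage rolls off at 40 dB per decade versus 20 dB per decade for RC, which is part of why the inductor is attractive despite the board space.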

The problem is that it takes up space.

I think the fix that was done was to let the processor process junk while also pulling the key

Another option is to use a MOSFET and pulse it randomly to gobble up current and generate current noise.

Or, like you said, use a supercap to isolate power (not uncommon in some DC isolation applications), or just give the thing a friggen battery.

Depends on the threat model, since that controls how much you can count as ‘trusted’ or discount as impractical:

If the attacker needs to keep the device absolutely pristine looking (brief covert access, or an evil maid without time and tools), you probably can bring some or all of the power supply into the ‘trusted’ area; attacking a suitably potted lump, or even a glued or welded plastic case, isn’t terribly quick and can be kind of messy. You still have to keep an eye out for things like thermally reducing the capacitance, but you can definitely frustrate someone with nothing but a USB passthrough cable and instruments.

If the attacker has the luxury of partially reworking the device after their attack, or of flashing an extracted private key to an otherwise unmodified equivalent device (shady vendor, supply chain attack of some sort), they’ll be in a much better position to bypass the sorts of measures you describe: either just affix the probes on the informative side of the decoupling capacitor, or short it out or the like, then clean up when they are finished.

You can move those functions on-die (you won’t get a supercap into reasonable die space, but decoupling might be an option), which makes things yet trickier for the attacker: with appropriate apparatus they probably can get the private key out, but likely not without roughing things up enough that the extracted key will need to be embedded in a new device to escape suspicion.

This class of countermeasures would be more effective and economical if they were incorporated into security oriented SoCs & MCUs, and even then, it would probably only be one layer of defense.

What the makers of the TREZOR wallet should have done is add a large bypass capacitor, a small inductor, and another capacitor to make a low-pass filter. Then, on top of this, they would likely also want a noise source to bury the data that manages to slip through the low-pass filter.

This would likely not fully fix the problem, but it would make a power line attack harder to exploit.

Simple linear filtering just decreases the SNR.

Attackers know that they can average a lot of readings to improve the signal to noise ratio. (Given certain conditions) the variance of the estimate is inversely proportional to the number of samples, or something like that. Attackers probably listened to their statistics professors (better than I did, at any rate).
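The averaging argument is easy to check numerically. The sketch below assumes independent Gaussian noise and a hypothetical tiny leakage value. Averaging N captures shrinks the variance of the estimate by a factor of N, so its standard deviation falls as 1/√N.

```python
import random
import statistics


def spread_of_average(n_traces: int, trials: int, noise_std: float, seed: int) -> float:
    """Empirical standard deviation of the mean of n_traces noisy samples.

    Each sample is a hypothetical tiny leakage (0.01) plus Gaussian noise;
    the attacker's estimate is the average across n_traces captures."""
    rng = random.Random(seed)
    leak = 0.01
    estimates = [
        statistics.fmean(leak + rng.gauss(0.0, noise_std) for _ in range(n_traces))
        for _ in range(trials)
    ]
    return statistics.stdev(estimates)


for n in (1, 100, 2500):
    measured = spread_of_average(n, trials=200, noise_std=1.0, seed=n)
    predicted = 1.0 / n ** 0.5  # sigma / sqrt(N)
    print(f"N={n:5d}  measured={measured:.4f}  predicted={predicted:.4f}")
```

With 2,500 captures the noise on the estimate is about 0.02, small enough that a 0.01 leak starts to stand out; a few hundred thousand captures are cheap for an attacker with automated equipment.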

In turn, the defenders can work on those certain conditions. One of them is to add noise that doesn’t average out – there are some PDFs (e.g. Cauchy distribution) or some PSDs (e.g. pink or brown noise) that don’t have nice statistical properties.
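Here is a quick demonstration of noise that does not average out. The mean of N independent standard Cauchy samples is itself standard Cauchy, so averaging buys the attacker nothing, while Gaussian noise shrinks as expected. The sketch measures spread with the interquartile range because the Cauchy distribution has no finite variance; the trial counts and seeds are arbitrary choices.

```python
import math
import random
import statistics


def cauchy(rng: random.Random) -> float:
    """Standard Cauchy sample via the inverse CDF: tan(pi * (u - 1/2))."""
    return math.tan(math.pi * (rng.random() - 0.5))


def gaussian(rng: random.Random) -> float:
    """Standard normal sample, for comparison."""
    return rng.gauss(0.0, 1.0)


def iqr_of_means(sampler, n: int, trials: int, seed: int) -> float:
    """Interquartile range of the mean of n samples, over many trials.

    IQR is used instead of standard deviation because the Cauchy
    distribution has no finite variance (or mean)."""
    rng = random.Random(seed)
    means = [statistics.fmean(sampler(rng) for _ in range(n)) for _ in range(trials)]
    q1, _, q3 = statistics.quantiles(means, n=4)
    return q3 - q1


for name, sampler in (("gaussian", gaussian), ("cauchy", cauchy)):
    print(name, [round(iqr_of_means(sampler, n, 1000, seed=7), 2) for n in (1, 100)])
```

Expect the Gaussian IQR to drop by roughly a factor of ten between N=1 and N=100, while the Cauchy IQR stays near 2.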

Another is to put random delays into the inner loops of the crypto calculations.
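A minimal sketch of why random delays help: if the leaky operation lands at a random offset in each capture, the spike smears across many samples when traces are averaged. The trace length, spike size, noise level, and delay range are all hypothetical. Attackers can often realign traces with pattern matching, so this raises the cost rather than closing the channel.

```python
import random


def capture(length: int, leak_at: int, max_delay: int, rng: random.Random) -> list[float]:
    """One simulated trace: Gaussian noise plus a unit spike from a leaky
    operation, shifted by a random number of dummy cycles in [0, max_delay]."""
    trace = [rng.gauss(0.0, 0.2) for _ in range(length)]
    trace[leak_at + rng.randrange(max_delay + 1)] += 1.0
    return trace


def averaged_peak(n_traces: int, max_delay: int, seed: int) -> float:
    """Height of the tallest point in the attacker's averaged trace."""
    rng = random.Random(seed)
    traces = [capture(100, 20, max_delay, rng) for _ in range(n_traces)]
    averaged = [sum(column) / n_traces for column in zip(*traces)]
    return max(averaged)


print("no jitter:     ", round(averaged_peak(500, 0, seed=1), 2))
print("random delays: ", round(averaged_peak(500, 49, seed=1), 2))
```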

> Simple linear filtering just decreases the SNR.

It does more than that, but it is unreasonable to assume that the attacker doesn’t understand deconvolution. That brings the problem back to one of SNR as you said.

Yes. This is true.

I was also thinking of maybe building a system that relies on charging up a large storage capacitor: disconnect it from the main power line and have the capacitor power the chip for a few hundred cycles, then connect it back, charge it up again, and disconnect it to power the chip once more.

This would mean that we average out the power consumed during those cycles. Yes, we can still measure a difference from one charge to the next, but since we now have far less to go on, it should be drastically harder. This, too, would likely not fully fix the problem.
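To size the storage capacitor in this scheme, assume the chip draws a constant current I while disconnected; the capacitor voltage then drops linearly, so it can carry the load for t = C·ΔV/I seconds. The sketch converts that into clock cycles. All the numbers are hypothetical, chosen only to land near the “few hundred cycles” mentioned.

```python
import math


def holdup_cycles(capacitance_f: float, allowed_droop_v: float,
                  load_current_a: float, clock_hz: float) -> int:
    """Clock cycles a storage capacitor can supply on its own.

    Assumes a constant load current, so the voltage falls linearly:
    t = C * dV / I. The real current varies with the code being run
    (that variation is what the scheme hides from the supply), so treat
    this as a rough budget only."""
    seconds = capacitance_f * allowed_droop_v / load_current_a
    return math.floor(seconds * clock_hz)


# Hypothetical numbers: 1 uF, 0.1 V of allowed droop, 30 mA load, 100 MHz clock
print(holdup_cycles(1e-6, 0.1, 30e-3, 100e6))  # 333
```

Scaling the capacitor up by ten buys ten times as many cycles per disconnect, at the cost of a bigger recharge gulp each time it reconnects.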

And nothing stops us from just putting an antenna close to the device and using something like a real-time spectrum analyzer to look at things instead. So an RF shield would likely be needed as well. But yet again, this would still leak.

Another way of implementing your storage capacitor idea would be to cascade two DC/DC converters, e.g. boost followed by buck. The boost charges the storage capacitor. Connect the noise source into boost converter’s feedback network somehow, which will cause the cap voltage (and the input current) to vary.

The buck converter takes its input from the storage capacitor and produces regulated rails for the logic, as usual.

… and in those times when the boost converter is being told (by the injected noise) to decrease the storage cap voltage faster than the load is discharging it, the input current will drop to zero, completely decoupling it from the load current.

This requires a boost converter with a diode rather than a synchronous rectifier FET. The ones with synchronous rectification will work in reverse mode and suck energy out of the storage cap and feed it out the input.

Hindsight is a wonderful thing – I very much doubt the Trezor engineers considered this kind of side channel attack a realistic possibility during the design process. Software mitigation of the defect is much more appropriate than a partial hardware fix/product recall.

I was thinking more of the next hardware version.

It would not be logical to send one’s hardware wallet back to the manufacturer, since, after all, can we honestly trust that they didn’t think of their own way to extract the information? (Yes, this is paranoia, though.)

Whilst an excellent hack and analysis of side-channels, I’m not sure this is a flaw in the design of the device.

The device is intended to protect your keys from a potentially compromised PC, not from a guy with a scope nicking it and analysing it. If you need to protect against a guy with a scope, you either need a device designed for that, or a safe.

Why the axiom that anyone with physical access can do anything? It can usually be dealt with by those with secrets, and budgets, greater than ours.

Are there any FPGA based crypto wallets?

There was an article here a while back that talked about how side channel attacks could be prevented by FPGAs using spare power for noise calculations. If there were some way of implementing the crypto algorithm in multiple different ways, and switching between the different implementations while the unused ones just ran random crypto, it might help prevent side channel attacks on mobile devices. I’m a noob to FPGA stuff and I’m guessing all these things have been tried before by someone smarter and wiser than I.

I recall that around the ’08 crash, or maybe after, BTC was a thing only dorks like me saw on tech sites.

I CPU-mined maybe 20-30 and grabbed a few more from the free bitcoin faucets some sites had to bump interest. I forgot about them, as the concept of wasted cycles = value seemed stupid; almost as stupid as the feds bailing out the too-big-to-fails and screwing my well planned default contracts. FWIW the things were worth less than a dollar per coin at the time. Now people suggest I hire a team to root through the dump like they did for the ET carts. Problem is, even if they find it, I have no idea what the password even is.

Real security: hide the HDD in a random dump in Asia (at the time) and don’t store the password anywhere, even in your brain!