If you have your ear even slightly to the ground of the software community, you’ll have heard of Docker. Having recently enjoyed a tremendous rise in popularity, it continues to attract users at a rapid pace, including many global firms whose infrastructure depends on it. Part of Docker’s rise to fame can be attributed to its users becoming instant fans with evangelical tendencies.

But what’s behind the popularity, and how does it work? Let’s go through a conceptual introduction and then explore Docker with a bit of hands-on playing around.

What is Docker?

Docker allows you to run software in an isolated environment called a container. A container is similar to a virtual machine (VM) but operates in a completely different way (which we’ll go into soon). Whilst providing most of the isolation that a VM does, containers use just a fraction of the resources.

Why it’s great

Before we dive into technical details, why should you care?

Consistency

Let’s say you’re coding a web app. You’re developing it on your local machine, where you test it. You sometimes run it on a staging server, and soon you’re going to put it on a big production server for the world to see.

Wouldn’t it be great if you could consistently spin up the exact same environment on all of your devices? If your web app runs correctly inside a Docker container on your local box, it will run the same way on your test server, on the production server, and on any other machine running Docker with a compatible kernel and CPU architecture.

This makes managing project dependencies incredibly easy. Not only is it simple to deal with external libraries and modules called directly by your code, but the whole system can be configured to your liking. If you have an open source project which a new user wants to download and run, it’s as simple as starting up a container.

It’s not just running code that benefits from a reproducible environment — building code in containers is commonplace as well; we wrote about using Docker to cross compile for Raspberry Pi.

Scalability

If you use Docker to create services which have varying demand (such as websites or APIs), it’s incredibly easy to scale your provisioning by simply firing up more containers (providing everything is correctly architected to do so). There are a number of frameworks for orchestrating container clusters, such as Kubernetes and Docker Swarm, but that’s a story for another day.

How it works

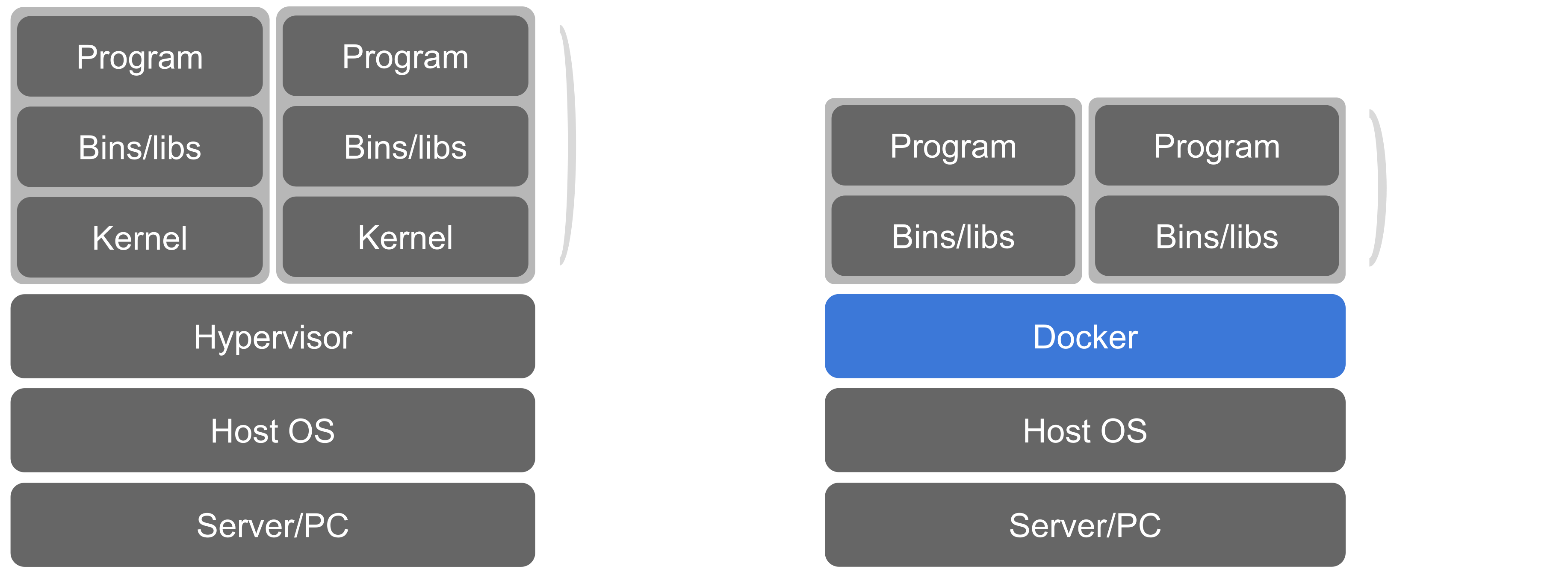

Containers are pretty clever. A virtual machine runs on simulated hardware and is an entirely self-contained OS. Containers, however, natively share the kernel of the host. You can see the differences in the diagram above.

This means that containers carry far less overhead than virtual machines. When we talk about Docker, we’re talking about how many Linux processes we can run, not how many OSes we can keep afloat at the same time. Depending on what they’re doing, it would be possible to spin up hundreds if not thousands of containers on your PC. Furthermore, starting a container typically takes seconds or less, compared to minutes for many VMs. Since containers are so lightweight, it’s common practice to run each part of an application in its own container, which makes the whole thing far more maintainable and modular. For example, you might have separate containers for your database server, Redis, nginx and so on.

But if containers share the host kernel, then how are they separated? There’s some pretty neat low-level trickery going on, but all you need to know is that Linux namespaces are heavily leveraged, resulting in what appears to be a fully independent container complete with its own network interfaces and more. However, the barrier between containers and hosts is still weaker than when using VMs, so for security-critical applications, some would advise steering clear of containers.
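If you want to see the namespace trick for yourself, Linux lets you ask which namespaces a process belongs to: each entry under `/proc/<pid>/ns` is a symlink whose target (for example `net:[4026531992]`) identifies one namespace. The sketch below is a minimal, Linux-only illustration; the function name `namespace_ids` is our own, and on systems without `/proc` it simply returns an empty dict. Run it on the host and then inside a container and the `net`, `pid` and `mnt` identifiers should differ, which is the separation described above.

```python
import os


def namespace_ids(pid="self"):
    """Return {namespace_type: identifier} for a process, read from /proc.

    Linux-only: returns an empty dict if /proc/<pid>/ns does not exist.
    """
    ns_dir = f"/proc/{pid}/ns"
    if not os.path.isdir(ns_dir):
        return {}
    ids = {}
    for name in sorted(os.listdir(ns_dir)):
        try:
            # Each entry is a symlink whose target looks like "net:[4026531992]"
            ids[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            # Some entries may be unreadable depending on permissions
            continue
    return ids


if __name__ == "__main__":
    for kind, ident in namespace_ids().items():
        print(f"{kind:20} {ident}")
```

For instance, save it as `ns.py` (a hypothetical file name) and compare the output of `python3 ns.py` with `docker run --rm -v "$PWD":/src python:3.7-stretch python /src/ns.py`.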

How do I use it?

As an example, we’re going to build a minimal web server in a Docker container. In the interests of keeping it simple we’ll use Flask, a Python web microframework. This is the program that we want to run, main.py:

```python
from flask import Flask

app = Flask(__name__)


@app.route('/')
def home():
    return 'Hello from inside a Docker container'


if __name__ == '__main__':
    app.run(host='0.0.0.0', port=80)
```

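Don’t worry if you’re not familiar with Flask; all you need to know is that this code serves up a string on port 80. Note the host='0.0.0.0': it makes Flask listen on all network interfaces, which matters inside a container, because a server listening only on the container’s own localhost would be unreachable through the port forwarding we’ll set up later.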

Running a Process Inside a Container

So how do we run this inside of a container? Containers are defined by an image, which is like a recipe for a container. A container is just a running instance of an image (this means you can have multiple running containers of the same image).

How do we acquire an image? The Docker Hub is a public collection of images, which holds official contributions but also allows anyone to push their own. We can take an image from the Docker Hub and extend it so that it does what we want. To do this, we need to write a Dockerfile — a list of instructions for building an image.
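Because a Dockerfile really is just a list of instructions, roughly one per line, it’s easy to pull apart. As a rough illustration (this is not Docker’s own parser, and it ignores parser directives, heredocs and custom escape characters), the Python sketch below splits a Dockerfile into `(INSTRUCTION, arguments)` pairs, skipping comments and joining lines continued with a trailing backslash. The function names are our own.

```python
def _split_instruction(line):
    """Split 'KEYWORD args...' into (KEYWORD, args); keywords are case-insensitive."""
    parts = line.split(None, 1)
    keyword = parts[0].upper()
    args = parts[1].strip() if len(parts) > 1 else ""
    return keyword, args


def parse_dockerfile(text):
    """Return a list of (INSTRUCTION, arguments) tuples for a Dockerfile's text.

    Simplified: skips blank lines and '#' comments and joins lines ending in a
    backslash. Parser directives, heredocs and custom escape characters are ignored.
    """
    instructions = []
    pending = ""  # text accumulated from backslash-continued lines
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # Docker also drops comment lines inside continuations
        if line.endswith("\\"):
            pending += line[:-1].rstrip() + " "
            continue
        instructions.append(_split_instruction(pending + line))
        pending = ""
    if pending.strip():
        # The file ended on a continuation line; keep what we have
        instructions.append(_split_instruction(pending))
    return instructions
```

Feeding it the text of a Dockerfile (say, `parse_dockerfile(open("Dockerfile").read())`) gives one tuple per instruction, in the order Docker would process them.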

The first thing we’ll do in our Dockerfile is specify which image we want to use/extend. As a starting point, it would make sense to pick an image which has Python already installed. Thankfully, there’s a well-maintained Python image, which comes in many flavours. We’ll use one with Python 3.7 running on Debian stretch.

Here’s our Dockerfile:

```dockerfile
FROM python:3.7-stretch
COPY app/ /app
WORKDIR /app
RUN pip install Flask
CMD ["python", "main.py"]
```

After the first line, which we discussed above, the rest of the Dockerfile is pretty self-explanatory. Our project is laid out like so:

```
.
├── app
│   └── main.py
└── Dockerfile
```

So our app/ directory gets copied into the container, and we run the rest of the commands using that as the working directory. We use pip to install Flask, before finally specifying the command to run when the container starts. Note that the process that this command starts will be inherently tied to the container: if the process exits, the container will die. This is (usually) a good thing if you’re using Docker properly, with only a single process/chunk of a project running inside each container.
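If you’d like to follow along, here’s a small convenience sketch that writes out the layout above (`app/main.py` plus a `Dockerfile` next to the `app/` directory) into a folder of your choice, using the same file contents as this article. It assumes you’re happy to create the files from Python rather than by hand; `create_project` and the default folder name `flask-hello` are our own inventions.

```python
from pathlib import Path

# Same contents as main.py above
MAIN_PY = '''from flask import Flask

app = Flask(__name__)


@app.route('/')
def home():
    return 'Hello from inside a Docker container'


if __name__ == '__main__':
    app.run(host='0.0.0.0', port=80)
'''

# Same contents as the Dockerfile above
DOCKERFILE = '''FROM python:3.7-stretch
COPY app/ /app
WORKDIR /app
RUN pip install Flask
CMD ["python", "main.py"]
'''


def create_project(root="flask-hello"):
    """Create root/app/main.py and root/Dockerfile, returning the root Path."""
    root = Path(root)
    (root / "app").mkdir(parents=True, exist_ok=True)
    (root / "app" / "main.py").write_text(MAIN_PY)
    (root / "Dockerfile").write_text(DOCKERFILE)
    return root
```

After calling `create_project()`, change into the new folder and the build commands in the next section should work as written.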

Building an Image

Ok, we’ve written some instructions on how to build our image, so let’s build it.

$ docker build .

This tells Docker to look for a Dockerfile in the current directory (.). But to make it a bit easier to run, let’s give our built image a name, called a tag.

$ docker build -t flask-hello .

Since this is the first time we’ve used this Python image from the hub, it takes a minute or two to download, but in future, it will be available locally.

Now we have a shiny new image, which we can run to produce a container! It’s as simple as this:

$ docker run flask-hello

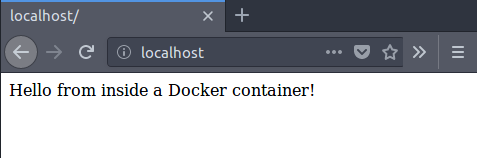

Our container runs successfully, and we get some output from Flask saying that it’s ready to serve our page. But if we open a browser and visit localhost, there’s nothing to be seen. The reason is of course that our server is running inside the container, which has an isolated network stack. We need to forward port 80 to be able to access our server. So let’s kill the container with CTRL+C and run it again with a port forward.

$ docker run -p 80:80 flask-hello

…and it works!
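Once the manual steps make sense, you may want to script them. The sketch below assumes the `docker` CLI is on your PATH and only uses the flags shown in this article (`build -t` and `run -p`). Note that `-p` takes `HOST_PORT:CONTAINER_PORT`, so `-p 8080:80` would expose the same container on `localhost:8080` instead. The helper names are hypothetical, and `fetch_body` uses only the standard library to check the page from the host rather than from a browser.

```python
import urllib.request


def build_argv(tag, context="."):
    """Command line for `docker build -t TAG CONTEXT`."""
    return ["docker", "build", "-t", tag, context]


def run_argv(image, ports=None):
    """Command line for `docker run`, publishing {host_port: container_port} pairs."""
    argv = ["docker", "run"]
    for host_port, container_port in (ports or {}).items():
        # -p HOST:CONTAINER forwards a port on the host into the container
        argv += ["-p", f"{host_port}:{container_port}"]
    return argv + [image]


def fetch_body(url="http://localhost:80/", timeout=5.0):
    """Fetch url from the host and return the response body as text."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

For example, `subprocess.run(build_argv("flask-hello"), check=True)` followed by `subprocess.Popen(run_argv("flask-hello", {80: 80}))` reproduces the two commands above, and once Flask reports that it’s ready, `print(fetch_body())` should show the greeting.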

Summary

The first time you see this process, it might seem a bit long-winded. But there are tools like docker-compose to automate this workflow, which are very powerful when running multiple containers/services.
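To give a flavour of what docker-compose automates, here’s a sketch that generates a Compose file describing several copies of our `flask-hello` image, each published on its own host port (two containers can’t both claim the same host port, so in practice something like a load balancer would sit in front). It writes JSON rather than YAML to stay within Python’s standard library; since JSON is essentially valid YAML, Compose should read it, but treat that as an assumption to check against your Compose version. The service names and port numbers are arbitrary.

```python
import json


def compose_config(image="flask-hello", replicas=3, base_port=8000, container_port=80):
    """Return a Compose-style dict running `replicas` containers of one image.

    Replica i (1-based) is published on host port base_port + i and forwarded
    to container_port inside its container.
    """
    services = {}
    for i in range(1, replicas + 1):
        services[f"web{i}"] = {
            "image": image,
            # Each container needs its own host port; only the container side repeats
            "ports": [f"{base_port + i}:{container_port}"],
        }
    # Older docker-compose releases require a "version" key; newer ones ignore it
    return {"version": "3", "services": services}


def write_compose_file(path="docker-compose.json", **kwargs):
    """Write compose_config(**kwargs) to path as JSON and return the text written."""
    text = json.dumps(compose_config(**kwargs), indent=2)
    with open(path, "w") as f:
        f.write(text + "\n")
    return text
```

With the defaults, `docker-compose -f docker-compose.json up` should then start three containers answering on ports 8001 to 8003.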

We’ll let you in on a secret: once you get your head around Docker, it feels like being part of an elite club. You can easily pull and run anyone’s software, no setup required, with only a flourish of keystrokes.

- Docker is a fantastic way to run code in a reproducible, cross-platform environment.

- Once you’ve set up your image(s), you can run your code anywhere, instantly.

- Building different components of an app in different containers is a great way to make your application easy to maintain.

- Though this article focused on web services as an example, Docker can help in an awful lot of places.

Containers, container orchestration, and scalable deployment are exciting areas to watch right now, with developments happening at a rapid pace. It’s a great time to get in on the fun!

Isn’t docker just using chroot to accomplish isolation?

Nope, there are several ways to run containers in docker and chroot+cgroups is only one of them.

Dockers, and I thought they were talking about khaki slacks…

The principle is great, but the implementation often sucks. If you are not prepared to build your containers from scratch, you will likely end up with bloated images. Here’s one example of how to make lightweight images:

https://blog.codeship.com/building-minimal-docker-containers-for-go-applications/

Depending on your environment, just building a statically-linked binary will give you the benefits of avoiding system library dependencies, without the added complexity of the docker environment.

That’s the idea behind things like CoreOS. The same forces that push for VMs in general (Cloud, Cloud, Cloud) recognize that unnecessary bloat, scaled up, is detrimental to making money (the leaner the system, the more money-making processes can be run).

Containers are awesome. (Ditch the hypervisor layer? Yes please.) It’s another situation of “everything old is new again”, and this feels like a modern rehash of mainframe tech. That being said, Docker isn’t the only container company, so maybe there will also be some future discussion on Kubernetes as well.

Docker and k8s aren’t competing. In fact, you use k8s to deploy Docker containers onto one or more machines. While it’s a gross simplification, you can think of Docker as “create a zip file of all the things I need to run this program” and k8s as “pipe this service into that one, after it’s up and running”, much like docker-compose does.

Kubernetes is an orchestrator for docker (or theoretically another container engine) clusters. A layer above it.

“It’s not a VM…” “It’s a VM, but…”

If I’m reading this right, Docker is just a lightweight replacement for the virtual kernel and hypervisor for a virtual environment. So, a “fast” sandbox with security-scary indirect access to a host Linux kernel through some kind of standard interface. And that standard interface is obfuscated by some “used only our community-approved apps” handwaving.

And, according to their webpage, they’re good to use for “internet of things” and “blockchains.”

Forgive me if I fail to become an immediate zelot at how underwhelming this sounds. I’ll keep my ear away from the ground in the corporate buzzword garbage dump that this came from, thank you.

Docker (on linux) is a way of packaging and sharing pre-made containers, and a really nice interface to manage linux’s kernel namespaces and networks. Ultimately, it’s the linux kernel providing the isolation between the apps, not a separate virtual kernel in docker (once the container is running, docker itself is not really involved)

Containers are an admission that binary distribution on Linux is a practical impossibility, so instead you package up your application, and all its dependencies, and run it inside a namespace so it can pretend it’s running on the machine you developed it on.

Docker’s popularity is due to its ability to pull in random backdoored binaries via the Docker registry through just a few keystrokes. Like everything web, it’s all about doing it fast and not proper.

not to mention incompatible versions of python… or java… and while it’s usually possible to deal with it without containers… my god it’s so much easier to just stick it in a container.

Fundamental problem here is linux software does not/cannot support long term interface contracts and mass binary shipping via containers (android apk/docker/flatpak/snap) is probably the only solution the community can reasonably come up with that doesn’t involve fixing the ecosystem from the ground up.

In a way, Microsoft got lucky. They were forced to resolve this issue 20 years ago when there wasn’t nearly as much system complexity (the original DLL Hell).

Yeah, I can totally see how Microsoft fixed DLL hell: by policy, advocating a concept like chroot, which is also used in containers but is a whole lot less capable.

There’s nothing security-scary about it vs running the actual program on your host system. There’s also nothing obfuscated in it either. You’re more than welcome to open a docker shell, dig about the inside of the container, or even modify its contents.

You’re failing to become an immediate zelot simply because you’re speaking through emotion (by implying you need to be a zelot to use this or get excited about it) and through ignorance (as demonstrated by your post).

What are you even going on about zealots and speaking from emotion? This is a hacker forum. For hackers. Not social justice warriors and theologians. We talk about engineering and electronics and heady subjects here. When we do call people names we make sure we spell check first, since many of us are engineers dealing with dangerous equipment at times. There is no room for error.

Describing hackers and engineers as pure logic beings is not very rational.

Containers run on the host OS, eliminating the need for a hardware virtualization layer. Docker images also use a layered storage scheme which also eliminates much of the duplication you get when using VMs. Docker images can be very small (no need to include an OS) and layers based on common dependencies between containers are shared which saves a TON of hard drive space.

From what I understand, the security is very good (perhaps at least as good as VM technologies) and is built on pre-existing Linux features.

> And that standard interface is obfuscated by some “used only our community-approved apps” handwaving.

You do not have to use any pre-built images. You can inspect the source of all public images, or you can build your own from scratch.

> I’ll keep my ear away from the ground in the corporate buzzword garbage dump that this came from

The Docker Inc website is unfortunately stuffed with that garbage, but Docker/container technology has many real-world benefits. In another comment here, I posted a URL to a Reddit discussion that was basically “the docker website is full of meaningless buzzwords, what’s it actually good for?” Check it out if you’re interested.

Many similarities to the way java was described in the old days …..

A bit apples to oranges, but I can see what you’re getting at. I think (based on my own biases) that you may also be implying that Java didn’t deliver what it promised (and so maybe Docker doesn’t either). But I’ve been using Docker for a few years in production and I can tell you that it does provide many of the described benefits. Docker (container technology in general) really is a game-changer.

If I’m trying to be fair, I think I could say that Java promised a lot, then went wrong in agreeing to change its initial idea to conform to a lot of other parties´ desires. And that contributed to it turning into just another common language, without the benefits it promised.

I have not used dockers or lookalikes, but lately something like that seems to pop in mind more times. Like, since AMD and Microsoft seem to decide to stop supporting new hardware to perfectly normal OSs , I´ve been considerating the day I will have to install Windows 10 in a machine, then spin a couple of VMs running Windows 7 to keep using important, well behaved software. Dockers seem to be a possible solution to this, but something that would need some thinking .

Probably my biggest doubt about the concept is that, in name of giving it a nice name, and touting the way to work with as a “solves it all”, people are just changing the problem placement. A well configured hypervisor shouldn´t be that bad, performance wise.

Also, the whole “download an image someone already prepared” thing doesn´t convince me. The article could have described a way to create a new, clean image from zero ground, or at least point to a link for this. I know it exists, and will search and read about it later. It would just be nice to have it pointed out here in the HaD article. Because once you start just downloading and running other people´s images, there is not much difference from the situation people complain about with Intel´s ME or AMD´s whats-its-name.

All in all, a nice possibility, maybe in need of code cleaning and better documentation, as others suggested. Maybe a good solution, for some cases, maybe inappropriate to others, as are a lot of other solutions also.

I say that Java didn’t succeed because Microsoft poisoned their version of it, and by the time the lawsuit settled, the critical time of acceptance was long past. So, you could say that Microsoft actually won…

Huh? Java didn’t succeed? I’m sure that’s news to the developers of one of the most popular programming languages in use today.

@Garbz

Most people not being pendant understand that Java on the desktop didn’t succeed anything like it did on servers. Yeah JNLP and .JAR is still a thing, but still…

“not being pendant[…]”

Hanging out?

Pendulous?

(just being a ‘pendant’ here…)

It’s a common misunderstanding that docker containers are just another form of virtualization and you can run them on any OS. The fact is, docker manages Linux cgroups https://en.wikipedia.org/wiki/Cgroups. It’s better to think of it as kernel “segmentation” rather than “virtualization”. Note this also means it only works on Linux. (I suppose “cross platform” in the article means “cross distribution”)

Yes, you can “run docker on Mac or Windows” but, in those cases, it’s starting a Linux virtual machine under the covers.

> Yes, you can “run docker on Mac or Windows” but, in those cases, it’s starting a Linux virtual machine under the covers.

They’ve had native Windows containers for Docker for about two years now. Google “docker windows container”.

> They’ve had native Windows containers for Docker for about two years now. Google “docker windows container”.

And they are nothing like the linux docker containers. They are a different beast. It’s actually poison for their ecosystem, as it dilutes the “you can run this docker container anywhere where you have docker”

@daid303

Well we’re talking Docker, not Java’s “write once, run everywhere”.* Others have covered the advantages Docker brings to DevOps. And since Docker is usually targeted towards the cloud, it doesn’t poison their ecosystem, because it’s Linux or Windows anyway. Desktop Hyper-V is still there if needed.

*Closest to everywhere presence is the near ubiquitous nature of Linux. But that’s porting an OS and writing everything to that, from languages to apps.

> been considerating the day I will have to install Windows 10 in a machine, then spin a couple of VMs running Windows 7 to keep using important, well behaved software. Dockers seem to be a possible solution to this, but something that would need some thinking .

I don’t keep up with Docker for Windows, but my gut feeling is that that use case wouldn’t work at this time (someone please correct me if I’m mistaken).

> Probably my biggest doubt about the concept is that, in name of giving it a nice name, and touting the way to work with as a “solves it all”, people are just changing the problem placement.

You are probably right to have some concerns about this aspect. Docker is marketed or perceived as a panacea – or a really nice hammer with the assumption that all problems are nails. Of course, this isn’t the case. I can tell you from being in IT since before VMWare was “the next big thing” (which it absolutely was), that containers are truly the next big thing. Bare-metal will always have its place. And VMs aren’t going away (my Docker containers all run inside VMs). But adding Docker/containers to the toolkit easily solves many problems that used to be very difficult.

> A well configured hypervisor shouldn´t be that bad, performance wise.

The best, simple explanation I’ve heard describing the difference between VMs and containers is that: VMs virtualize hardware. Containers virtualize the OS. If you think of the implications of that, you might come to the conclusion that VMs can never be as performant or resource efficient as containers.

> The article could have described a way to create a new, clean image from zero ground, or at least point to a link for this.

Go to hub dot docker dot com, find a high-level image that you’re interested in (maybe something like Ubuntu or Node, or the Python image which was used in this article’s example Dockerfile) and click the link to the Dockerfile (you can also search for Dockerfiles on Github). The Dockerfile is how the image is defined (you create an image by writing a Dockerfile and running `docker build` against it.) The example Dockerfile used the `FROM` directive to base the rest on the official Python image, but it could have built from scratch and included all of the steps to install Python instead.

https://docs.microsoft.com/en-us/virtualization/windowscontainers/about/ *

My reading is much like a Linux host OS determining the containers, Windows server would do the same.

*There’s a good explanation of containers vs VM as well.

Add to:

https://msdn.microsoft.com/en-us/magazine/mt797649.aspx

> I´ve been considerating the day I will have to install Windows 10 in a machine, then spin a couple of VMs running Windows 7

Gosh don’t do that, the licensing and overhead… plus the constant rebooting.

Use linux as your host, or if you’re keen to pay unRAID Server Pro or something similar.

“I´ve been considerating the day I will have to install Windows 10 in a machine, then spin a couple of VMs running Windows 7 to keep using important, well behaved software.”

Or just run that Software on Win10, if it’s not working, that software is a piece of crap and should not be used anyway.

I feel like this is contributing to bad packaging and distribution practices… It used to be that when distributing code you had to think about having sane buildable dependencies and make sure your code’s runtime environment could coexist with other software (and reduce bloat both on disk and in memory by sharing libraries and common components). Now all a developer must do is can their wonky [potentially mismatched and insecure] set of dependencies from their development workstation, bless it as “magic” and ship the whole boat anchor as a docker image.

This isn’t a new problem (quartus from Intel/altera ships with its own private copy of everything from libc all the way up to scripting languages and effectively has a chroot’s worth of stuff though it just diddles its library paths rather than actually calling chroot() but you get the idea). It is a long-standing problem… Docker just normalizes and rebrands as a virtue what had always previously been (with good reason) a shameful practice largely confined to commercial software packages produced by companies whose forte was clearly _not_ software.

This!

Whenever you talk about good and bad it helps to not hold up the past as any kind of example of good. Linux package management has been a clusterfuck for anyone ever trying to do something that isn’t officially sanctioned and managed by some repository for a long time. The further in the past you get the nastier the horrid clusterfuck becomes. I have seen entire systems completely hosed beyond repair as people tried to navigate dependencies to get a specific version of software working.

Now you also need to realise that this doesn’t replace or change anything. I have yet to find something on docker that didn’t also have an official repo, however sandboxing and packaging dependencies has some real benefits for running anything that isn’t an officially supported version for a distribution.

Docker didn’t normalise a bad commercial practice, it directly addressed the problem that is managing linux applications without the blessing of some gatekeeper.

Heh, that’s where I always end up in various fights with linux. Or, gah, I have to upgrade to x.x.x to cure Y insidious bug, but then I’m another 3 versions away from when Z last worked.

The only, and I mean only, problem I’ve had with linux package management has nothing to do with package management. The problem is that developers of libraries target the “development” version of a library, instead of the “stable” version from at least a year ago, and that means that when you want to install the software on a regular system it won’t work; even though the libraries of the same name are all available from the distro.

I try very hard to avoid using these tools. It isn’t always possible. But often by choosing a tool that isn’t the most popular, I can find one that is better-written and uses stable APIs.

These types of tools paper those problems over, but then what other iffy architectural decisions were made? Does using an unstable API, which is by definition newer code than the stable API, have security implications? If it is quality software, the stable API is still getting security updates, and the newer API may have some changes that were made for security reasons, but those features still have bugs that defeat the improvements and haven’t been found yet.

> It used to be that when distributing code you had to think about having sane buildable dependencies and make sure your code’s runtime environment could coexist with other software

But with Docker (containers in general), that’s just no longer necessary. It simply eliminates most of that (nightmare of a) problem altogether.

> (and reduce bloat both on disk and in memory by sharing libraries and common components)

The amount of complexity required to do this correctly negates any benefits you might get from saving a few megs (or gigs) of RAM. And the fact that there are terms like “DLL hell” and “dependency hell” illustrates that doing it correctly is very difficult to achieve (some might say it’s not realistic, or even possible in some situations). Hardware is cheap but engineering time is very expensive.

Also, when you use Docker images (which are built in layers that can be shared across containers with common dependencies) you’re spared a lot of the disk bloat you’d get with VMs. And, because all of the containers on a node share a single OS, you do get some efficiencies in RAM utilization especially compared to VMs.

> a virtue what had always previously been (with good reason) a shameful practice

Previously maybe, but not anymore. Ask Google. I think they know a bit about software…

There are many (perhaps much more substantial) benefits of containerization besides not having to worry about dependencies that you don’t seem aware of like isolation, consistency, immutability, deployment, scaling, and orchestration. You can find a good discussion in this reddit thread: www dot reddit dot com /r/docker/comments/9cw2bl/why_docker/

There’s a reason this technology has really taken off. You might want to look at it more closely rather than simply sticking to your previous biases.

It shrugs and says “f___ it, I can’t be bothered to care anymore”. It’s exactly as much ‘eliminating’ that task as is deciding that road maintenance is expensive so let’s just not do road maintenance except on the roads the (now greatly downsized) road maintenance crew drives on themselves.

There’s no cost to spending other people’s RAM.

Google’s use is very specifically launching the exact same server on thousands to millions of machines. That doesn’t mean that it’s a good model for desktop programs.

It’s more like not having to do road maintenance anymore because we invented a road surface that doesn’t degrade.

> That doesn’t mean that it’s a good model for desktop programs.

Of course not. Who said it is?

Except that it doesn’t change the road surface at all. It doesn’t even claim to.

That is exactly the difference between Docker and a VM. A VM provides a different surface, at a cost. Docker uses the existing surface, but shields you from the most obvious harmful effects of library version inconsistencies. At the cost of the ecosystem no longer making an attempt to use consistent versioning. It guarantees growing bloat in any growing ecosystem.

You could already do these things ad-hoc, and they were considered non-ideal practices, and not because they were ad-hoc. It tricks non-sysadmins into thinking that when they build packages for distribution they don’t need to understand the system, but that’s just nuts. The system is still there, and if you don’t even see the road surface anymore it doesn’t mean it isn’t there, or was replaced by magic.

It is a great tool for various use-cases, but the claims that are becoming popular about what it does are really bizarre.

This is exactly the way it was discussed when I first heard about it while doing web development; people who didn’t like or didn’t follow the existing best practices liked it a lot, and people who were taking the time to follow the best practices felt like it was a downgrade and didn’t use it.

Then the paid evangelism started, including this insistence that if you use it you’re also expected to become evangelical, which of course does work on a significant percentage of users under those conditions.

It is important for sysadmins in large companies because if you have a lot of workers, it is hard to enforce consistent practices. But for smaller teams, if people are feeling evangelical about their tools maybe the tool should be banned from the workplace! “Get back to work!” LOL

If you want to learn Docker (and Docker Swarm), I highly recommend the courses on Udemy by Bret Fisher

Humble Bundle has been doing pretty good so far in the technical books department, including containers.

“Part of Docker’s rise to fame can be attributed to its users becoming instant fans with evangelical tendencies.”

OS/2 fans. More modern is Python.

I like it because it makes getting the “solution” (you know, the reason we bought the machine in the first place) up and running without jumping through a lot of loose pieces that can easily break. With that said, the Docker catalog suffers from what a lot of open source suffers from: poor or missing documentation. And finally, at the cost of a performance hit, I could see the host OS running on a VM for security, assuming something gets that far. A golden image, plus easy recovery and configuration tools, makes “nuking from orbit, just to be sure” a valid security strategy in the worst cases.

Now let’s take a look at appimages and snaps as well ;)

Have been playing around with Docker and Kubernetes over the last year, and I like it, mostly. Sure, it’s a bit more complexity and adds overhead, but you quickly accept that once you discover the benefits of dynamic scaling, monitoring and general maintenance.

Combined with Chef and Puppet, one can throw configuration around with the same ease as code, which makes for a powerful combination.

I’ve never used docker, what is it exactly, chroot? A slimmed down vm? How does it run on different architectures without needing to be recompiled? How is this a game changer? I’m just not getting the magic.

Have you ever tried reading? It’s worth the effort.

The magic is, jr webdevs can use it to build a fairly portable chroot jail without having to know what that means. And without even having to learn that “stable” means “unchanging.” This is important, because at this stage of development most programmers still think that “stable” means “not crashing,” and that “unstable” means, “release candidate,” and therefore any software you’re a fan of, the bleeding-edge development branch is Stable, because it is awesome and didn’t even crash.

It provides all the expected associated pros and cons. And if they had any feelings of sysadmin anxiety, they’ll even go full-evangelical now that they don’t feel inadequate anymore.

At the other end of the spectrum, it is a powerful tool for deploying horizontally scaled server packages without the runtime overhead of a VM.

Sounds a lot like Java for applets, PDF for documents, C for programs. I suspect it suffers the usual woes: you are SOL if your target is not supported, and it almost has to be the lowest common denominator, or else your perfectly portable language starts to pick up a lot of hardware- or OS-specific extensions. Look at ANSI C vs., say, MS C.

On the flip side, outside of the lowest common denominator issues, it is no doubt useful for the things that it does support.

Since many here will have a knack for embedded stuff, I wanted to share that I use Docker for cross-compiling and toolchain building quite a lot these days. Although bootstrapping a toolchain has become quite a lot easier over the years, there is still some fragility w.r.t. installed library versions etc.

So nowadays, I capture my toolchain build process in a Dockerfile as a kind of “executable spec”: take this Linux distro version, install these packages, download those tarballs, extract and build like so…

A year from now, when I need to change some firmware, I can be sure that I can rebuild the exact same working toolchain despite having upgraded my workstation’s distro.

Also, Docker opened up the world of continuous integration for my embedded projects. Quite a worthwhile tool to learn all about for embedded devs!

Personally, I prefer LXD containers.

Surprised you didn’t go for Warden.

https://blog.aquasec.com/a-brief-history-of-containers-from-1970s-chroot-to-docker-2016

By the quick blurb on that website, Warden doesn’t seem to have anything LXD doesn’t have. LXD does have remote-management of containers, live-migration, support for Btrfs-snapshotting and so on these days.

Stopped reading at, “Don’t worry if you’re not familiar with Flask; all you need to know is … ”

That right there is the fundamental problem with so much code written these days.

The fundamental problem with code is that it uses libraries? That people give libraries whimsical, but memorable names? What? Throw us a bone here.

The problem pointed to is the willingness to use a library without knowing what it does, or if you even need it. Just paste it in.

I was hoping that Docker could help me isolate a dev environment from the OS on Windows machines (have you ever tried to uninstall Visual Studio?). It might also be nice to shield a working environment from Windows patches and upgrades. But no dice: that’s not what Docker does, especially on Windows.

I suppose that depends on how leaky the abstraction is?

My experience with Docker was trying to install Discourse (a fancy forum). It requires ~20GB of disk space to install, build and run. We’re talking about a forum, a FORUM! For comparison, there are embedded projects more complex than that on the order of 200KB of occupied flash, and the game The Witcher 3 is about 32GB.

That sounds like a problem with Discourse, not with Docker.

Whenever you talk about good and bad, it helps not to hold up the past as any kind of example of good. Thanks for sharing.

I am surprised nobody has posted Randall Munroe’s comment on Docker yet:

https://xkcd.com/1988/

For people who understand German: “Adlerweb” did a video on this: https://www.youtube.com/watch?v=leTpySlsl50

This seems to be significantly less portable than is claimed in the article. I think you are taking for granted a lot of your background Docker knowledge that someone who needs an “intro to docker” does not yet have.

You mention that this will let you run code equally in all sorts of different environments, such as the Raspberry Pi… Cool, I just flashed a new Raspbian onto a Pi Zero W last night, I will give it a try!

First, installing Docker (not quite intuitive; “apt-get install docker” does NOT do the trick, you actually want “apt-get install docker.io”). Not too bad, but it’s also not mentioned in the intro article.

Okay, now I’m ready to follow along with the article!

Huh. “Tag 3.7-stretch not found in docker.io/library/python”. Well then. Still, I’m committed to following through on this. I’ll just google up another guide.

Ah, the one on the docker website suggests you do a hello-world before anything else. That seems like a good idea, I’ll try that.

Huh. “exec format error”. More googling reveals that, as you might suspect, docker images are NOT portable across architectures. Which also was not mentioned anywhere in the article, despite the claims of great portability.

Okay, let’s figure out the magic incantations to find architecture-specific images. More googling. Okay, I should search Docker Hub for an ARM hello-world. Ahh, here’s one, “armhf/hello-world”. Well, my Zero isn’t armhf, but let’s click on it to see what it says at least.

Ahh. Deprecated. Try arm32v6 or arm32v7. Actually that sounds good, because I know I need v6 anyway — this board wasn’t armhf.
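As a hedged aside on the architecture problem described here: official images on Docker Hub have also been published under per-architecture namespaces such as `amd64`, `arm32v6`, `arm32v7` and `arm64v8`, and a Pi Zero W reports its machine type as `armv6l`, which is why `arm32v6` is the right family for it. The Python sketch below assumes the mapping table shown (an illustrative subset, not an official list), and `image_for` is a hypothetical helper. It only builds an image reference string; whether that namespace actually publishes the repository and tag still has to be checked on the Hub, which is exactly where things went wrong in this comment.

```python
import platform
from typing import Optional

# Illustrative (not exhaustive) mapping from `uname -m` / platform.machine()
# strings to Docker Hub per-architecture namespaces for official images.
ARCH_TO_NAMESPACE = {
    "x86_64": "amd64",
    "amd64": "amd64",
    "i386": "i386",
    "i686": "i386",
    "armv6l": "arm32v6",  # e.g. Raspberry Pi Zero / Zero W
    "armv7l": "arm32v7",  # e.g. Raspberry Pi 2/3 on 32-bit Raspbian
    "aarch64": "arm64v8",
    "arm64": "arm64v8",
    "ppc64le": "ppc64le",
    "s390x": "s390x",
}


def image_for(repo: str, tag: str = "latest", machine: Optional[str] = None) -> str:
    """Build an architecture-prefixed image reference, e.g. 'arm32v6/python:latest'.

    Hypothetical helper: it only formats a string and does not check that
    the namespace actually publishes this repository or tag.
    """
    # Fall back to the current host's machine type when none is given.
    machine = (machine or platform.machine()).lower()
    namespace = ARCH_TO_NAMESPACE.get(machine)
    if namespace is None:
        raise ValueError(f"no known Docker Hub namespace for machine type {machine!r}")
    return f"{namespace}/{repo}:{tag}"
```

For example, `image_for("python", "3.7-alpine", machine="armv6l")` returns `"arm32v6/python:3.7-alpine"`. Many official images are now also published as multi-architecture manifest lists, so a plain `docker pull` may pick the matching variant automatically, but only if that image and tag were actually built for your architecture.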

“docker pull arm32v6/hello-world”. Welp, that didn’t work. Let’s look on the docker hub again. Ahh, ok. arm32v6 doesn’t even HAVE a hello-world image. Sigh. I’ll just try one of these other images from arm32v6. I use Python all the time, let’s try this arm32v6/python image. I already have Python installed and don’t need a docker image for it, of course, but at least it exists, unlike hello-world.

“Tag latest not found in repository docker.io/arm32v6/python”. Holy crap this is getting frustrating. I guess I need to learn more about Docker tags real quick. Okay, so the Hub shows a bunch of tags for this image, I’ll just try the first one listed. “docker pull arm32v6/python:3.7.0-alpine3.8”.

Tag not found again? AAaaagh! I give up. An hour later and I haven’t even gotten the simplest task done with Docker. This “Intro” helped me not one iota.

I like Service Fabric. Way better.

Thanks, this was useful for me as a way to try out the topic a little. I’ll look into it further, and I would also like to see more of it here. I’m a bit confused, though, since this doesn’t seem like the regular content of HaD, but no worries, for me at least.

Nice article! You have described a lot of points about the difference between Docker and VMs. This will help new buyers when they purchase a Docker container.