Hackaday readers have certainly seen more than a few persistence of vision (POV) displays at this point, which usually take the form of a spinning LED array which needs to run up to a certain speed before the message becomes visible. The idea is that the LEDs rapidly blink out a part of the overall image, and when they get spinning fast enough your brain stitches the image together into something legible. It’s a fairly simple effect to pull off, but can look pretty neat if well executed.

But [Andy Doswell] has recently taken an interesting alternate approach to this common technique. Rather than an array of LEDs that spin or rock back and forth in front of the viewer, his version of the display doesn’t move at all. Instead it has the viewer do the work, truly making it the “Chad” of POV displays. As the viewer moves in front of the array, either on foot or in a vehicle, they’ll receive the appropriate Yuletide greeting.

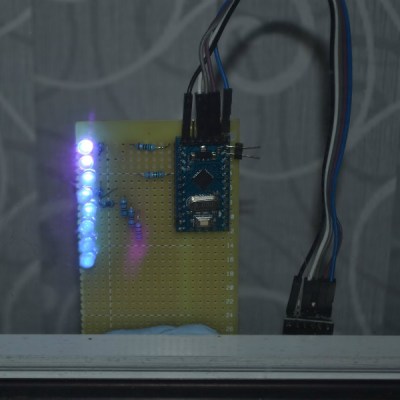

In a blog post, [Andy] gives some high-level details on the build. Made up of an Arduino, eight LEDs, and the appropriate current limiting resistors on a scrap piece of perfboard, the display is stuck on his window frame so anyone passing by the house can see it.

On the software side, the code is really an exercise in minimalism. The majority of the file is the static values for the LED states stored in an array, and the code simply loops through the array using PORTD to set the states of all eight digital pins at once. The simplicity of the code is another advantage of having the meatbag human viewer figure out the appropriate movement speed on their own.
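To make the table-driven idea concrete, here is a minimal desktop sketch in Python rather than Arduino code. It is not [Andy]'s sketch: the `FONT` table, the glyph patterns, and the function names are all hypothetical, chosen only to show how a message reduces to a flat list of column bytes. On the actual hardware, each byte would be written to PORTD in turn with a short delay between writes.

```python
# Each glyph is a list of column bytes; bit 0 is the top LED, bit 7 the bottom.
# These patterns are illustrative assumptions, not the values from the project.
FONT = {
    "H": [0x7F, 0x08, 0x08, 0x08, 0x7F],
    "I": [0x41, 0x7F, 0x41],
    " ": [0x00, 0x00],
}


def message_columns(text, gap=1):
    """Flatten a string into the ordered list of bytes to put on the port."""
    columns = []
    for ch in text:
        columns.extend(FONT[ch])
        columns.extend([0x00] * gap)  # blank column(s) between letters
    return columns


def render(columns, rows=8):
    """Lay the columns side by side, roughly as a moving viewer perceives them."""
    lines = []
    for row in range(rows):
        lines.append("".join("#" if (col >> row) & 1 else "." for col in columns))
    return "\n".join(lines)


columns = message_columns("HI")
print(render(columns))
```

The point of the design is that all of the "image" lives in data. The loop that plays it back never needs to know what the message says, which is why the real firmware can stay so short.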

This isn’t the only POV display we’ve seen with an interesting “hook” recently, proving there’s still room for innovation with the technology. A POV display that fits into a pen is certainly a solid piece of engineering, and there’s little debate the Dr Strange-style spellcaster is one of the coolest things anyone has ever seen. And don’t forget Dog-POV, which estimates speed of travel by persisting different images.

[Thanks to Ian for the tip.]

But… as soon as you look at it, it stops working. Some kind of Machiavellian scheme here.

And if you’re going the “wrong” direction you’ll need a mirror for the message to look “right”.

I made exactly the same thing two years ago :) And it so happens that I made it for Christmas, too.

https://deralchemist.wordpress.com/2015/10/04/die-traegheit-des-auges-persistence-of-view/

English version (Google Translate, sorry):

https://translate.google.de/translate?sl=auto&tl=en&u=https%3A%2F%2Fderalchemist.wordpress.com%2F2015%2F10%2F04%2Fdie-traegheit-des-auges-persistence-of-view%2F

To be precise, my display is not only for Christmas, but I did display “Merry X-mas”. However, you can create custom characters and generate custom strings very easily. Please refer to my GitHub project:

https://github.com/MKesenheimer/Persistence_of_Vision

Let’s do the time warp back to 1990 and remember the Private Eye display. It used this principle along with a tiny mirror to create a clear crisp image of many, many millions of dollars pouring down a drain.

I’ve seen this used as a floor number indication in the staircase of the HTL Bregenz [1]. Must’ve been mid to late 1990s. Ascending or descending the staircase, you would “somehow” see a number; if you looked directly at it, you saw an innocent vertical column of red LEDs staring back at you.

A bit spooky :-)

[1] https://de.wikipedia.org/wiki/H%C3%B6here_Technische_Lehranstalt_Bregenz

Maybe this one from a while back

https://www.instructables.com/id/The-magic-wand-clock%253a-a-Persistence-of-Vision-toy-/

I think I’ve seen a similar principle used in a subway to display adverts. Instead of a single column, there are many columns, each displaying a frame of a video, which, as the train windows pass by, give the impression of a floating video screen moving along with the train.

I guess that if the POV isn’t framed in some window passing by, the effect is a lot better on video than in real life because it would be hard not to unconsciously keep the display in the center of vision.

How does that work? In normal POV with the LEDs moving, some kind of synchronization is accomplished using a hall sensor or similar to turn the lights on at the right time. But when the POV unit is stationary, how does it know when to present the next column of pixels? Does it require the person to move at a particular speed?

If you move slower, it’s thinner. If you move faster, it stretches out.

Any idea what timing would be needed to make the message legible only to passing cars at 20MPH? I am sure there is math involved. I am guessing around 7 times the speed of a walking message if you figure walking at 3MPH.
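As a rough way to put numbers on that guess, the sketch below uses a first-order model: if the viewer's gaze stays fixed relative to their own motion, consecutive columns appear separated by speed multiplied by the time per column. The 1 cm column pitch and the 60-column message length are arbitrary assumptions for illustration, and the model ignores viewing distance and any eye tracking of the display.

```python
MPH_TO_MPS = 0.44704  # exact: 1 mile = 1609.344 m, 1 hour = 3600 s


def column_period_s(speed_mph, column_pitch_m):
    """Time per column so consecutive columns appear column_pitch_m apart."""
    return column_pitch_m / (speed_mph * MPH_TO_MPS)


def apparent_width_m(speed_mph, period_s, n_columns):
    """Width the whole message is smeared over at a given speed and timing."""
    return speed_mph * MPH_TO_MPS * period_s * n_columns


PITCH_M = 0.01   # assumed apparent spacing between columns (1 cm)
N_COLUMNS = 60   # assumed message length in columns

walk = column_period_s(3, PITCH_M)
car = column_period_s(20, PITCH_M)

print(f"walking at 3 mph:  {walk * 1000:.2f} ms per column")
print(f"driving at 20 mph: {car * 1000:.2f} ms per column")
print(f"speed-up needed:   {walk / car:.2f}x")
print(f"walking timing seen from the car: "
      f"{apparent_width_m(20, walk, N_COLUMNS):.1f} m wide")
```

Under these assumptions the required speed-up is simply the ratio of the two speeds, 20/3 or about 6.7, which is close to the "around 7 times" estimate. The last line illustrates the stretching effect: a message timed for walkers gets spread over a much wider span for a driver.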

I played with this for about 2 hours one night, using an Arduino Nano instead of the Mini that was used in the project, and I could not get it to display anything unless I was shaking my head like a loon. Lost interest after the headaches started.