Fully autonomous cars might never pan out, but in the meantime we’re getting some really cool hardware designed for robotic taxicab prototypes. This is the Livox Mid-40 Lidar, a LIDAR module you can put on your car or drone. The best part? It only costs $600 USD.

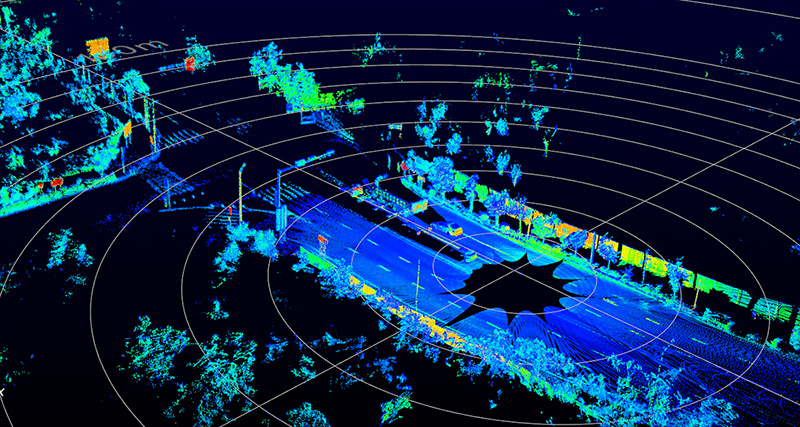

The Livox Mid-40 and Mid-100 are two modules released by Livox, and the specs are impressive: the Mid-40 can capture 100,000 points per second, with a detection range of 90 m for objects of 10% reflectivity. The Mid-40 sensor weighs 710 grams and comes in a package that is only 88 mm x 69 mm x 76 mm. The Mid-100 is basically the guts of three Mid-40 sensors stuffed into a larger enclosure, capable of 300,000 points per second, with a field of view of 98.4° by 38.4°.

The use case for these sensors is autonomous cars, (large) drones, search and rescue, and high-precision mapping. These units are a bit too large for a skateboard-sized DIY Robot Car, but a single Livox Mid-40 sensor pointed downward from a reasonably sized drone could perform aerial mapping.

There is one downside to the Livox Mid sensors: while you can buy them direct from the DJI web site, they’re not in production. These sensors are only ‘Mass-Production ready’. This might be just Livox testing the market before ramping up production, a thinly-veiled press release, or something else entirely. That said, you can now buy a relatively cheap LIDAR module that’s actually really good.

I see most people using this for high-precision mapping. DIY autonomous cars, à la the DARPA Grand Challenge, might come second.

What do the power requirements look like?

Are you going to need to upgrade the battery on your drone for mapping?

They say 10 W for the small one and 30 W for the big one.
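To put those numbers against the battery question, here is a rough energy budget. It’s a sketch under assumptions: the 20-minute flight and the 4S 5000 mAh (14.8 V nominal) pack are hypothetical figures picked for illustration, not anything Livox or DJI specify.

```python
# Back-of-the-envelope energy budget for flying a lidar.
# The 10 W and 30 W figures come from the comment above; the 20-minute
# flight and the 4S 5000 mAh pack are hypothetical numbers for illustration.


def lidar_energy_wh(power_w: float, flight_min: float) -> float:
    """Energy drawn by the sensor over one flight, in watt-hours."""
    return power_w * flight_min / 60.0


def pack_energy_wh(capacity_mah: float, nominal_v: float) -> float:
    """Nominal energy stored in a battery pack, in watt-hours."""
    return capacity_mah / 1000.0 * nominal_v


if __name__ == "__main__":
    pack = pack_energy_wh(5000.0, 14.8)  # hypothetical 4S LiPo
    for name, watts in (("Mid-40", 10.0), ("Mid-100", 30.0)):
        used = lidar_energy_wh(watts, 20.0)
        print(f"{name}: {used:.1f} Wh of {pack:.0f} Wh ({100 * used / pack:.1f}%)")
```

With those made-up numbers the Mid-40 takes roughly 4.5% of the pack and the Mid-100 about 13.5%, so on paper the 710 g of mass may matter more for flight time than the electrical draw.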

“Only” $600….

*looks at bank account*

*Shakes head*

Better than $100,000 for a Velodyne! https://medium.com/self-driving-cars/velodyne-lidar-price-reduction-d358f245f086

Considering most of our projects aren’t going to make use of even a fraction of the sophistication of one of these top-end units, you may as well head over to eBay and grab a lidar yanked out of a Neato Robotics vacuum cleaner for forty bucks.

Or just get a second gen Kinect.

Those are EOL. Also look at the Intel RealSense D415/D435.

How well do those work outdoors in strong sunlight?

Coincidentally. https://hackaday.io/project/163501-open-source-lidar-unruly

The downside is actually “from the DJI Website.” Having anything from DJI attached directly to a routable IP is a bad idea – especially with their tendency to “vacuum” data into their leaky servers.

“Mass Production Ready” does not mean these are not in production. From the FAQ:

5. What enables the mass production of Livox LiDAR sensors?

One major issue LiDAR manufacturers face is the need for extremely accurate laser/receiver alignments (<10 μm accuracy) within a spatial dimension of about 10 cm or more. For most state-of-the-art LiDAR sensors that are currently available, these alignments are done manually by skilled personnel. Livox LiDAR sensors eliminate the need for this process by using unique scanning methods and the DL-Pack solution, which enables the mass production of Livox sensors.

At $600 there are already less expensive lidar units in production and on regular vehicles. Of course this is a hoped-for price since they’re not yet in production.

The note is appropriately skeptical of whether general-purpose autonomy is achievable; limited-purpose (lane- and distance-keeping on highways) is already on the road though often referred to as “ADAS” (advanced driver assist systems). Ditto AVs in agriculture and mining.

For automotive, a range of 90 m is insufficient. At 75 mph a car travels about 33 m/s, so 90 m is less than 3 seconds out. Given the lower resolution and reduced usable signal at 90 m, this isn’t enough lead time. Closer in – automatic emergency braking – radar is good and probably under $100 delivered to a car company.
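The commenter’s arithmetic, plus a rough stopping distance, as a quick Python sketch. The 0.7 g braking deceleration and the 1 s perception-plus-actuation latency are assumptions chosen for illustration, not figures from any automotive spec.

```python
MPH_TO_MS = 0.44704  # 1 mph is exactly 0.44704 m/s


def seconds_to_range(range_m: float, speed_mph: float) -> float:
    """How long until the car reaches something first seen at range_m."""
    return range_m / (speed_mph * MPH_TO_MS)


def stopping_distance_m(speed_mph: float, decel_ms2: float = 6.9,
                        latency_s: float = 1.0) -> float:
    """Distance covered while the system reacts, plus distance spent braking.

    decel_ms2 (about 0.7 g) and latency_s are assumed values, not measured ones.
    """
    v = speed_mph * MPH_TO_MS
    return v * latency_s + v * v / (2.0 * decel_ms2)


if __name__ == "__main__":
    print(f"time to 90 m at 75 mph: {seconds_to_range(90.0, 75.0):.2f} s")
    print(f"stopping distance at 75 mph: {stopping_distance_m(75.0):.0f} m")
```

With those assumptions the time to cover 90 m at 75 mph comes out around 2.7 s and the stopping distance around 115 m, so the car would need to see the obstacle farther out than the sensor’s rated range. Change `decel_ms2` and `latency_s` to see how sensitive that conclusion is.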

There’s also a long road from prototype to delivery in automotive. Companies have to validate in-vehicle performance and then validate the actual production process. Unless it’s an adaptation of something in production, where all that’s being considered is a change in dimensions, production validation is not trivial. Finally, units coming off of the actual production process then have to go through PPAP (production part approval process). Learning the ropes is not easy, so the most realistic approach is to get bought by or license to existing suppliers.

Yep. It’s kind of nice to see some realism amidst all the self-driving hype. Full, no-human autonomy that can handle anything is far off. But better driver-assists and warning systems are here now.

My employer has piloted some other LIDAR-based applications and it’s exciting stuff. So it’s good that the costs are coming down.

Laser distance measuring tools are pretty cheap now; basic ones can be picked up for £15-20. It’s not that hard to solder a couple of wires to the PCB where the buttons go and have a microcontroller take measurements. The tricky, time-consuming bit to code is grabbing the data from the pads that would normally drive the display.
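Once you know which display segments are lit, turning them into a reading is the easy half. A minimal sketch, assuming a plain seven-segment display and that you’ve already sampled which segments (a to g, standard labelling) are on for each digit; the table and the `decode_digits` helper are hypothetical, and multiplexed LCDs will need more work to get to this point.

```python
# Hypothetical decoding step. Standard segment labels:
# a = top, b = top right, c = bottom right, d = bottom,
# e = bottom left, f = top left, g = middle.
SEGMENTS_TO_DIGIT = {
    frozenset("abcdef"): "0",
    frozenset("bc"): "1",
    frozenset("abdeg"): "2",
    frozenset("abcdg"): "3",
    frozenset("bcfg"): "4",
    frozenset("acdfg"): "5",
    frozenset("acdefg"): "6",
    frozenset("abc"): "7",
    frozenset("abcdefg"): "8",
    frozenset("abcdfg"): "9",
}


def decode_digits(lit_segments: list[str]) -> str:
    """Map each digit's lit segments to a character, using '?' for unknown patterns."""
    return "".join(SEGMENTS_TO_DIGIT.get(frozenset(seg), "?") for seg in lit_segments)


if __name__ == "__main__":
    # Three digit positions sampled from the pads: "1", "2", "3".
    print(decode_digits(["bc", "abdeg", "abcdg"]))
```

Some displays draw 6, 7 and 9 with an extra or missing segment, so check the table against your own meter.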

A couple of mirrors on stepper motors or even a pan and tilt mount would do well for cheap lidar. Probably more fun and educational than just buying one off the shelf.
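Turning a pan/tilt rig into a point cloud is mostly a spherical-to-Cartesian conversion. A sketch, assuming the rangefinder sits right on both rotation axes; `measure(pan, tilt)` is a hypothetical hook for whatever moves the mount and reads the meter.

```python
import math
from typing import Callable


def pan_tilt_to_xyz(range_m: float, pan_deg: float,
                    tilt_deg: float) -> tuple[float, float, float]:
    """Convert one range reading at a pan (azimuth) and tilt (elevation) angle to x, y, z.

    Assumes the rangefinder sits exactly on both rotation axes; a real mount
    would need its lever-arm offsets added.
    """
    pan, tilt = math.radians(pan_deg), math.radians(tilt_deg)
    horizontal = range_m * math.cos(tilt)
    return (horizontal * math.cos(pan), horizontal * math.sin(pan), range_m * math.sin(tilt))


def raster_scan(measure: Callable[[float, float], float],
                pans=range(-45, 46, 5),
                tilts=range(-10, 11, 5)) -> list[tuple[float, float, float]]:
    """Step through a pan/tilt grid, calling the hypothetical measure(pan, tilt) hook at each stop."""
    return [pan_tilt_to_xyz(measure(p, t), p, t) for t in tilts for p in pans]


if __name__ == "__main__":
    # Stand-in for real hardware: pretend every reading is 3 m away.
    cloud = raster_scan(lambda pan, tilt: 3.0)
    print(len(cloud), cloud[0])
```

The 5° grid is arbitrary; finer steps give a denser cloud but a much slower scan.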

You pay for the number of samples/sec.

Most of these aren’t LIDAR; they’re a laser diode and a linear sensor that measure distance based on parallax. Structured light and a camera sensor would do just as well, but neither is anywhere near as fast or reliable as LIDAR.
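For reference, the parallax approach boils down to similar triangles. A minimal sketch, assuming a pinhole camera with its optical axis parallel to the laser beam at a fixed baseline; the 4 mm lens, 50 mm baseline and 5 µm pixels are hypothetical numbers.

```python
def triangulate_mm(focal_mm: float, baseline_mm: float,
                   spot_offset_px: float, pixel_pitch_um: float) -> float:
    """Distance to the laser spot by similar triangles: z = f * b / x.

    Assumes a pinhole camera whose optical axis is parallel to the laser beam,
    offset by baseline_mm, with spot_offset_px measured from the image position
    the spot would have at infinite distance.
    """
    offset_mm = spot_offset_px * pixel_pitch_um / 1000.0
    if offset_mm <= 0:
        raise ValueError("spot offset must be positive (zero means the target is at infinity)")
    return focal_mm * baseline_mm / offset_mm


if __name__ == "__main__":
    # Hypothetical optics: 4 mm lens, 50 mm baseline, 5 um pixels.
    for px in (20, 19):
        print(px, round(triangulate_mm(4.0, 50.0, px, 5.0)))
```

With those numbers a one-pixel error at 2 m shifts the estimate by about 10 cm, and since z = f·b/x the error grows roughly with the square of the distance, which is one reason parallax sensors fall behind at range.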

He’s talking about construction “laser tape measures”. The vast majority of those are modulated-light systems that average the phase offset over time to achieve the claimed 1/8″ at 164 feet (3 mm at 50 m) or whatever they’re advertising. They use longer exposures to get past environmental noise without exceeding eye-safety regulations. This is as opposed to pulse-based lidar, which uses high peak power and long off-times to do the same.
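The phase-offset method can be written down in a few lines. The sketch below assumes sine-modulated light at a single, hypothetical 10 MHz frequency: distance follows from the measured round-trip phase as d = c·φ / (4π·f), and readings repeat every c / (2f). Averaging is done on unit vectors so phases near 0 and 2π don’t cancel.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s


def phase_to_distance(phase_rad: float, mod_freq_hz: float) -> float:
    """Round-trip phase shift of the modulation -> one-way distance: d = c * phi / (4 * pi * f)."""
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)


def ambiguity_range(mod_freq_hz: float) -> float:
    """Distance at which the phase wraps past 2*pi and readings repeat: c / (2 * f)."""
    return C / (2.0 * mod_freq_hz)


def mean_phase(samples: list[float]) -> float:
    """Average noisy phase readings as unit vectors, returning a value in [0, 2*pi)."""
    s = sum(math.sin(p) for p in samples)
    c = sum(math.cos(p) for p in samples)
    return math.atan2(s, c) % (2.0 * math.pi)


if __name__ == "__main__":
    f = 10e6  # hypothetical 10 MHz modulation
    print(f"unambiguous range: {ambiguity_range(f):.2f} m")
    phase = mean_phase([1.02, 0.98, 1.01, 0.99])  # made-up noisy readings
    print(f"distance: {phase_to_distance(phase, f):.3f} m")
```

At 10 MHz that unambiguous range is only about 15 m; real meters typically combine more than one modulation frequency to get past it, which this sketch leaves out.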

Several camera systems do that. In fact, you could probably build your own diode array and backing electronics (now that would be quite a sight without your own wafer fab) to make a modulated 3D imaging array from common components.

Now back to this: at short range, yes, you’ll get faster results, but with less range and not necessarily better results. One possibility I’ve considered with a similar setup is combining the laser with some gimballing to build a poor man’s laser tracker, active tracking and everything. It should be loads cheaper than the real ones (around $100K), but also several times less accurate.

Parallax is just as valid a type of lidar as time of flight is.

Have you seen this one: https://hackaday.io/project/25515-cheap-laser-tape-measure-reverse-engineering

Riddle me this:

A self driving car has a stored 3D model of the environment derived out of an earlier scan. It makes a new scan and tries to locate itself by overlapping the two. The information doesn’t quite match, so it tries to find the best overlap. A large object has appeared since the original scan, and it finds slightly more overlap if it shifts the old model to partially overlap with the new object – now the car thinks it’s three feet off from where it really is.

How does the algorithm take into account that eg. a building may have scaffolding covered in tarps erected over its facade?

There’s supposed to be a video, since removed from YouTube, where a Google engineer revealed that their car tried to drive down a ravine because it mistook the side of a truck for the canyon wall and thought it was driving in the left lane instead of the right lane.

If these devices are pulling in 300,000 points per second, I really doubt any positional location routines depend on “a stored 3D model of the environment derived out of an earlier scan.” In a self-driving car application, your usage of the word ‘earlier’ would mean milliseconds prior, not a stored map of scans taken at a resolution of 300,000 points per second of travel. That just wouldn’t scale for all the possible roads to be driven.

The LIDAR sensor is for determining the spatial relationship of moving and stationary objects. This includes looking at how quickly another vehicle is approaching or slowing relative to the travel speed of the sensor. This is correlated with GPS and image inference to make decisions such as whether to drive into a ravine.
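The “how quickly is that vehicle approaching” part can be sketched from two successive range readings. Hypothetical numbers: frames 0.1 s apart (a 10 Hz rate chosen for illustration) and a car ahead that goes from 50 m to 49 m.

```python
import math


def closing_speed(prev_range_m: float, range_m: float, dt_s: float) -> float:
    """Rate at which the gap shrinks between two readings dt_s apart (positive = approaching)."""
    return (prev_range_m - range_m) / dt_s


def time_to_collision(range_m: float, closing_ms: float) -> float:
    """Seconds until contact if the closing speed stays constant; inf if the gap isn't shrinking."""
    if closing_ms <= 0:
        return math.inf
    return range_m / closing_ms


if __name__ == "__main__":
    v = closing_speed(50.0, 49.0, 0.1)  # hypothetical readings, 0.1 s apart
    print(f"closing at {v:.1f} m/s, contact in {time_to_collision(49.0, v):.1f} s")
```

A real tracker would normally filter the ranges over many frames rather than differencing two noisy ones.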

Yes they do. That’s exactly how Google does it: drive around, take a scan, clean up the scan into a 3D model, load it back into the car. Then the car drives around comparing the two to locate itself to within a couple inches.
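Comparing a live scan against a stored map is classically done with scan matching such as iterative closest point (ICP). Nothing in this thread says that is what Google uses; this is a generic, translation-only 2D toy to show the idea, including a distance gate that ignores a new object far from any mapped structure (the riddle above).

```python
import math

Point = tuple[float, float]


def nearest(p: Point, pts: list[Point]) -> Point:
    """Brute-force nearest neighbour; fine for a toy, far too slow for real clouds."""
    return min(pts, key=lambda q: (q[0] - p[0]) ** 2 + (q[1] - p[1]) ** 2)


def icp_translation(scan: list[Point], map_pts: list[Point],
                    iters: int = 20, max_dist: float = 1.0) -> Point:
    """Estimate the (dx, dy) that slides scan onto map_pts (translation-only ICP).

    Matches farther than max_dist are ignored, one simple way of keeping
    objects that aren't in the map from dragging the estimate off.
    """
    tx = ty = 0.0
    for _ in range(iters):
        sx = sy = 0.0
        n = 0
        for x, y in scan:
            px, py = x + tx, y + ty
            qx, qy = nearest((px, py), map_pts)
            if math.hypot(qx - px, qy - py) <= max_dist:
                sx += qx - px
                sy += qy - py
                n += 1
        if n == 0:
            break
        # Shift by the mean residual of the accepted matches.
        tx += sx / n
        ty += sy / n
    return tx, ty


if __name__ == "__main__":
    # Toy map: an L-shaped wall sampled every metre.
    wall = [(float(x), 0.0) for x in range(10)] + [(0.0, float(y)) for y in range(1, 10)]
    # Live scan: the same wall offset by (-0.3, +0.2), plus a new object not in the map.
    scan = [(x - 0.3, y + 0.2) for x, y in wall] + [(5.0, 5.0), (5.5, 5.0), (5.0, 5.5)]
    print(icp_translation(scan, wall))
```

The gate only saves you when the new object is well away from mapped geometry. Scaffolding a foot in front of a mapped facade would still find close matches and drag the estimate, which is the riddle’s failure mode; practical localizers presumably lean on rotation, robust weighting and other sensors too.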

You don’t need to use all of the 300,000 points. The data is smoothed out to “blobs”.

>”That just wouldn’t scale for all the possible roads to be driven.”

This is Google. They’re seriously envisioning a lidar-based 3D database of all the possible roads.

The system works like:

1. scan everything once

2. use a supercomputer AI to clean up the scanned data into a full 3D model of all the roads they’ve ever been to

3. send blocks of this data back to the cars wherever they’re driving

4. scan continuously and update the central model with difference reports from multiple cars

Currently it’s humans working as the Mechanical Turk in the system, doing both the initial scanning and the updates, because no AI is clever enough to do it.

Sell some of that data back to various parties, from states and cities for their own purposes (maintenance, planning, etc), to game makers, and even people who make transportation related products.

>”The LIDAR sensor is for determining spatial relationship of moving and stationary objects. ”

Actually, what they’re doing is quite clever. The pre-scanned model is subtracted from the lidar data to take out what the car’s AI already expects to be there, so it can reduce the number of points it has to calculate and more easily identify what all the interesting objects are. Otherwise the task of figuring out whether some random cloud of points is “a car” would be nearly impossible.

Figuring out where you are on the road, to the inch, is also necessary, and there’s no way to do that other than by looking at the surroundings. GPS isn’t precise or fast enough, and simply trying to track lanes isn’t reliable enough – you have to know where you are in the general environment, and to do that you need to know what there is in the environment.

The answer will likely come from better sensor fusion between lidar, radar, and cameras.

I don’t think AV systems are being fed chassis control data from the base vehicle platform but that could be a help too.

I’m no expert on DL networks but I don’t think the current algorithms have very much ‘temporal memory’ so there are only a limited number of past states they can use as input to do things like figure out an overlap including understanding an object changing in time.

More powerful, purpose-built DL silicon may help too. The tech is pretty new in the consumer space, and there are a number of signs that we’re in a DL bubble, which may need to pop so we have firmer foundations to build on.

Just my wild guess, but a self-driving car probably doesn’t compare the raw 3D models between scans. It probably uses feature recognition to extract ‘things of interest’ like cars, curbs, signs, pedestrians, lane markings, etc and then compare those between scans.

“It” in this case being Google employees. The cars themselves don’t do it – they just compare the simplified model from the cleaned-up scan done earlier, to the live model scanned just now.

What they do is, they first overlap the two datasets as well as possible, and then they subtract the model from the scan, and once you’ve eliminated all the buildings, streetlights, signposts and other static features, all the objects that might be other cars, pedestrians etc. simply pop out. Likewise, if a new pothole has opened up where the model shows was previously level road, the car can detect that instead of trying to guess whether it is safe to drive there – reality does not match expectations: go around.
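The “subtract the model from the scan” step can be approximated with a voxel grid. A minimal sketch, assuming the scan has already been aligned to the map (the overlap step) and using a hypothetical 0.5 m voxel size; this is one simple way to do it, not a description of any production system.

```python
import math

Point3 = tuple[float, float, float]


def voxel_key(p: Point3, size: float) -> tuple[int, int, int]:
    """Index of the size-metre cube that contains point p."""
    return (math.floor(p[0] / size), math.floor(p[1] / size), math.floor(p[2] / size))


def subtract_background(scan: list[Point3], map_pts: list[Point3],
                        size: float = 0.5) -> list[Point3]:
    """Keep only the scan points that land in voxels the prior map says are empty.

    Assumes the scan has already been aligned to the map frame.
    """
    occupied = {voxel_key(p, size) for p in map_pts}
    return [p for p in scan if voxel_key(p, size) not in occupied]


if __name__ == "__main__":
    prior_map = [(0.1, 0.1, 0.1), (1.2, 0.3, 0.0), (2.4, 0.2, 0.0)]  # static features
    live_scan = [(0.2, 0.2, 0.2), (1.1, 0.4, 0.1), (3.0, 3.0, 0.5)]  # last point is new
    print(subtract_background(live_scan, prior_map))
```

Points sitting right on a voxel boundary can land in the neighbouring cube, so a practical version might also check adjacent voxels or use a distance threshold.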

The computer in the car gets off with a lot less work in real time because you’re subtracting the background out. It simplifies the AI a great deal to leave ALL the reasoning about the surrounding reality to someone else.

Google? That’s exactly how the Cadillac Super Cruise works.

” use case for these sensors”. If you guys are going to insist on this ridiculous term “use case” instead of application or example or illustration, case, instance, exemplar (please don’t), sample, or case in point, you need to hyphenate. “use case” is not the same as “use-case”.

There will come a day when modules with capabilities like this are built into every street light in urban areas.

They will keep track of a continuous 3D view of everything around the street, also behind corners or behind parked cars.

They will also communicate with cars driving on the street, and together they will prevent situations from escalating into what is nowadays called an “accident”.

Or we might decide collectively that the privacy of the people living around those streets is more important, but privacy is not very high on most people’s agenda.

I think I’ll go watch some road rage videos on YouTube now.

Where is the teardown? Is this a MEMS lidar?

I’m looking for a LIDAR that would work underwater. So far I’ve only seen LIDARs based on red lasers, whose light is absorbed by water over a short distance.

Any hints about a device that could suit my needs?

…sonar? ;-)

532nm (green) lasers are used for bathymetry but for only very short distances. They also are hard to use due to eye safety issues. Sonar is a better choice for anything beyond a meter or two.

annnndddd have fun writing all the deep learning networks, unless you’re just going to map with it. If you can do it without too many edge cases, you’ve basically beaten Tesla and the other big vendors working on it.

The lidars are already in production! They are shipping. I have received mine; I ordered through the DJI store the OP linked.

That’s good to know. “Mass-Production ready” actually means that they are ready to be dropped into your mass production product so Hackaday should update.

It won’t work out of the box without some sort of spinning platform + IMU (for SLAM) or GNSS/RTK.
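Why the extra pieces matter: the sensor reports points in its own frame, so stitching frames into a map means moving every point by where the sensor was and which way it was pointing, which is the pose an IMU and GNSS/RTK supply. A simplified sketch, assuming a level sensor (no roll or pitch) mounted at the pose origin; `to_world` is a hypothetical helper.

```python
import math

Point3 = tuple[float, float, float]


def to_world(points: list[Point3], x: float, y: float, z: float,
             yaw_deg: float) -> list[Point3]:
    """Rotate sensor-frame points by the platform's yaw, then shift them by its position.

    Simplification: assumes zero roll and pitch and no mount offset; with a real
    IMU you would apply the full 3D rotation and the lever arm.
    """
    yaw = math.radians(yaw_deg)
    c, s = math.cos(yaw), math.sin(yaw)
    return [(x + c * px - s * py, y + s * px + c * py, z + pz) for px, py, pz in points]


if __name__ == "__main__":
    # The same wall point seen from two hypothetical poses.
    a = to_world([(5.0, 0.0, 0.0)], 10.0, 20.0, 0.0, 0.0)
    b = to_world([(5.0, -5.0, 0.0)], 10.0, 15.0, 0.0, 90.0)
    print(a, b)
```

Both readings come out at (15, 20, 0), up to floating-point rounding; without a pose estimate the two frames would disagree about where that wall is.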

I’m still waiting to grab one, but since I have two left hands, I don’t know how I’m going to use it without the additional pieces mentioned above.

Happy to hear anyone’s thoughts on this.