Robots, as we currently understand them, tend to run on electricity. Only in the fantastical world of Futurama do robots seek out alcohol as both a source of fuel and recreation. That is, until [Les Wright] and his beer-seeking robot came along. (YouTube, video after the break.)

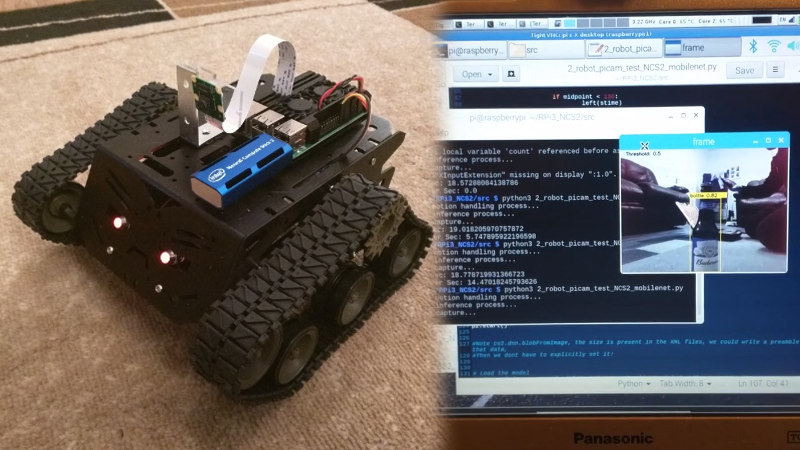

A Raspberry Pi 3 provides the brains, with an Intel Neural Compute Stick plugged in as an accelerator for neural network tasks. This hardware, combined with the OpenCV computer vision library, enables the tracked robot to identify objects and follow their position in the camera frame.
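To give a feel for how a detection might be turned into movement, here is a minimal sketch, not [Les]'s actual code. It assumes the detector hands back a bounding box in pixel coordinates; the function name `steer_from_box`, the `dead_zone` parameter, and the three command strings are all made up for illustration.

```python
def steer_from_box(box, frame_width, dead_zone=0.1):
    """Pick a steering command from a detection's bounding box.

    box is (x_min, y_min, x_max, y_max) in pixels. This layout is an
    assumption for the sketch, not the project's actual data format.
    """
    x_min, _, x_max, _ = box
    centre_x = (x_min + x_max) / 2.0

    # Normalised horizontal offset: -1.0 at the left edge of the frame,
    # 0.0 in the middle, +1.0 at the right edge.
    half_width = frame_width / 2.0
    offset = (centre_x - half_width) / half_width

    # Inside the dead zone the target counts as centred, so drive ahead.
    if offset < -dead_zone:
        return "left"
    if offset > dead_zone:
        return "right"
    return "forward"


if __name__ == "__main__":
    # A box hugging the left side of a 640-pixel-wide frame.
    print(steer_from_box((0, 0, 100, 100), 640))
```

The dead zone is there so the robot does not twitch left and right when the target is already roughly centred; a real build would likely scale motor speed with the offset rather than use three fixed commands.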

That a beer bottle was chosen is merely an amusing aside – the software can readily identify many different object categories. [Les] has also implemented a search feature, in which the robot will scan the room until a target bottle is identified. The required software and scripts are available on GitHub for your perusal.
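The search-then-track behaviour could be sketched roughly as follows. This is an illustration under stated assumptions rather than the code from the GitHub repository: detections are assumed to arrive as `(label, confidence, box)` tuples, and `pick_target`, `next_action`, and the 0.5 confidence threshold are hypothetical.

```python
TARGET_LABEL = "bottle"  # swap for any other label the detector knows


def pick_target(detections, target_label=TARGET_LABEL, min_confidence=0.5):
    """Return the most confident detection of the target, or None.

    detections is a list of (label, confidence, box) tuples; this
    layout is assumed for the sketch.
    """
    candidates = [
        d for d in detections
        if d[0] == target_label and d[1] >= min_confidence
    ]
    return max(candidates, key=lambda d: d[1], default=None)


def next_action(detections, target_label=TARGET_LABEL):
    """Decide whether to keep scanning the room or follow a target."""
    if pick_target(detections, target_label) is None:
        return "search"  # nothing suitable in view: keep rotating
    return "track"       # target in view: hand over to the steering code


if __name__ == "__main__":
    frame = [("sheep", 0.9, (10, 10, 50, 50)),
             ("bottle", 0.8, (200, 40, 260, 180))]
    print(next_action(frame))
```

Changing `TARGET_LABEL` is all it takes to send the robot after a different object category, which is the point made above about the bottle being incidental.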

Over the past few years, we’ve seen an explosion in accelerator hardware for deep learning and neural network computation. This is, of course, particularly useful for robotics applications where a link to cloud services isn’t practical. We look forward to seeing further development in this field – particularly once the robots are able to open the fridge, identify the beer, and deliver it to the couch in one fell swoop. The future will be glorious!

https://youtu.be/6Ul2lHj9LH4

I wonder when PCs, laptops and even mobile phones will have standardised neural network hardware built in, much like graphics hardware now.

A year or two? Currently it’s done in the cloud.

Well, we don’t really have standardised GPUs yet. But I agree it’s going to be fast.

It’s happening a lot right now. New Apple and Huawei phones already have this: both include neural inference processors rated at 5 tera-operations per second (TOPS).

They’re super impressive.

And there are a TON of neural processors in development, see here:

https://github.com/basicmi/AI-Chip

That treaded base looks nice! Does anyone know more about it?

Yeah, I’m curious about the treads as well

Looks similar to this one:

https://www.robotshop.com/uk/devastator-tank-mobile-robot-platform.html

The base platform was purchased here: https://www.dfrobot.com/product-1477.html

Not cheap, but quite a nice platform for sure.

You also need to source a motor controller, such as the L298N, to drive it from the Pi.

Thank you, Les, for this project code. It saved me many hours of brain pain trying to get my gimbal working:

https://hackaday.io/project/161581-wasp-and-asian-hornet-sentry-gun

After hacking your code for an hour or two, the gimbal now tracks a bottle, which can be swapped out for my own wasp model.

Also thank you Lewin Day for finding the project :)

You are very welcome! Glad you are having fun with it!

Cool project by the way.

https://www.amazon.com/DFROBOT-Devastator-Tank-Mobile-Platform/dp/B014L1CF1K/ref=mp_s_a_1_4?ie=UTF8&qid=1548428914&sr=8-4&pi=AC_SX236_SY340_QL65&keywords=tank+robot&dpPl=1&dpID=41nw6TpxKxL&ref=plSrch

The robot should really be contained in a giant frog…

Github readme: This script allows you to control a robot platform to track and chase any object in the MobileNet-SSD model!

… The model contains sheep … Hopefully it won’t ‘go bad’ and switch from bottles to our woolly friends!

Celtic population/Plains States/New Zealand jokes in 3…2…1..

If anyone wants to chase sheep more accurately and closer to real time, we are getting there with DepthAI, based on the Myriad X. ;-)

https://hackaday.io/project/163679-luxonis-depthai/log/169343-outperforming-the-ncs2-by-3x

What’s the difference between a Rolling Stone and a Scotsman?

A Rolling Stone says “Hey You, get off of my cloud!”

and a Scotsman says…

“Hey McCloud, get off of my ewe!”

Hey everyone! Just wanted to share that our DepthAI Myriad X platform is now live on Crowd Supply!

https://www.crowdsupply.com/luxonis/depthai