How many qubits do you need in a quantum computer? Plenty, if you want to do anything useful. Today, however, we have to settle for far fewer than we would like. IBM’s new Eagle processor has more qubits than any other quantum computer of its type: 127. Naturally, they plan to go further, and you can see a preview of “System Two” in the video below.

The 127-qubit figure is both impressively large and depressingly small. Each qubit increases the amount of work a conventional computer has to do to simulate the machine by a factor of two. The hope is to one day produce quantum computers that would be impractical to simulate using conventional computers. That’s known as quantum supremacy, and while several teams have claimed it, whether anyone has actually achieved it is a subject of debate.
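To make the doubling concrete, here is a minimal Python sketch of the memory a brute-force state-vector simulation would need. It assumes the simplest possible approach: one double-precision complex amplitude (16 bytes) stored for each of the 2^n basis states. Practical simulators use cleverer techniques, so treat these numbers as an illustration of the scaling rather than a hard limit.

```python
# Sketch: memory for a naive state-vector simulation of an n-qubit register.
# Assumption: one complex128 amplitude (two 64-bit floats = 16 bytes) per
# basis state, and there are 2**n basis states.

BYTES_PER_AMPLITUDE = 16  # complex128


def state_vector_bytes(n_qubits: int) -> int:
    """Bytes needed to store all 2**n_qubits complex amplitudes."""
    return (2 ** n_qubits) * BYTES_PER_AMPLITUDE


def human(nbytes: int) -> str:
    """Format a byte count with binary (power-of-1024) prefixes."""
    units = ["B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB", "ZiB", "YiB"]
    value = float(nbytes)
    for unit in units:
        # Stop at the first unit that keeps the number below 1024,
        # or at the largest unit we know about.
        if value < 1024 or unit == units[-1]:
            return f"{value:.3g} {unit}"
        value /= 1024
    return f"{value:.3g} {units[-1]}"  # not reached; keeps type checkers happy


if __name__ == "__main__":
    for n in (1, 10, 30, 50, 127):
        print(f"{n:>3} qubits: {human(state_vector_bytes(n))}")
```

Every extra qubit doubles the result. At 30 qubits this naive approach already needs 16 GiB; at 127 qubits it is far beyond any classical memory.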

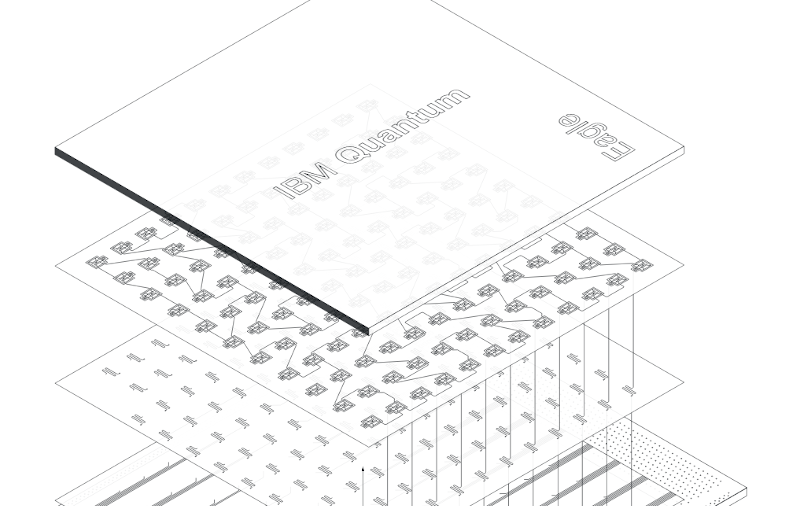

Like any computer, more bits (or qubits) are generally better than fewer. Qubit count matters especially for today’s quantum systems, though, because most practical schemes rely on redundancy and error correction to get reliable results out of current hardware. What’s in the future? IBM claims it will build the Condor processor, with over 1,000 qubits, using the same 3D packaging technology seen in Eagle. Condor is slated for 2023, and an intermediate 433-qubit chip is due in 2022.
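Why redundancy eats qubits can be illustrated with a classical analogue, the three-bit repetition code. This is only an analogue: quantum error correction cannot simply copy qubits (the no-cloning theorem forbids it) and instead relies on entanglement and syndrome measurements. The shared idea is spending several physical bits to get one more reliable logical bit. The sketch assumes each bit flips independently with probability `p`.

```python
import random


def encode(bit: int) -> list[int]:
    """Repeat one logical bit across three physical bits."""
    return [bit, bit, bit]


def noisy_channel(bits: list[int], p: float, rng: random.Random) -> list[int]:
    """Flip each bit independently with probability p."""
    return [b ^ 1 if rng.random() < p else b for b in bits]


def decode(bits: list[int]) -> int:
    """Majority vote: recovers the logical bit if at most one bit flipped."""
    return 1 if sum(bits) >= 2 else 0


def logical_error_rate(p: float) -> float:
    """Exact failure probability: two or all three physical bits flip."""
    return 3 * p**2 * (1 - p) + p**3


def simulate(p: float, trials: int, seed: int = 0) -> float:
    """Monte Carlo estimate of the logical error rate."""
    rng = random.Random(seed)
    failures = 0
    for _ in range(trials):
        bit = rng.randint(0, 1)
        if decode(noisy_channel(encode(bit), p, rng)) != bit:
            failures += 1
    return failures / trials


if __name__ == "__main__":
    for p in (0.1, 0.01):
        print(p, logical_error_rate(p), simulate(p, 100_000))
```

With `p = 0.01`, three physical bits bring the logical error rate down to about 0.0003, at the cost of tripling the hardware.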

Scaling anything to large numbers usually takes more than just duplicating smaller things. In the case of Eagle and at least one of its predecessors, part of the scaling came from readout units that can each read several different qubits. Older processors with just a few qubits had dedicated readout hardware for each qubit, but that’s untenable once you get to hundreds or thousands of qubits.
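The arithmetic behind that trade-off is simple. Assume, hypothetically, that each shared readout unit can serve up to `k` qubits. The value `k = 8` used below is purely illustrative and not a published figure for Eagle. The number of units then drops from `n` to `ceil(n / k)`:

```python
def readout_units(n_qubits: int, qubits_per_unit: int = 1) -> int:
    """Readout units needed if each unit serves up to qubits_per_unit qubits.

    qubits_per_unit=1 models the older dedicated, one-unit-per-qubit design.
    qubits_per_unit is a hypothetical parameter for illustration only.
    """
    if n_qubits < 0 or qubits_per_unit < 1:
        raise ValueError("need n_qubits >= 0 and qubits_per_unit >= 1")
    # Integer ceiling division: avoids floating-point rounding issues.
    return -(-n_qubits // qubits_per_unit)


if __name__ == "__main__":
    for n in (127, 433):  # qubit counts mentioned in the article
        print(n, "dedicated:", readout_units(n), "shared (k=8):", readout_units(n, 8))
```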

Qubits aren’t the only measure of a quantum computer’s power, just as a conventional computer with more bits might be less capable than one with fewer. You also have to consider the quality of the qubits and how they are connected.

Who’s going to win the race to quantum supremacy? Or has it already been won? We have a feeling that if it hasn’t happened already, it won’t be far in the future. If you compare the state of computers in, say, 1960 with today, about 60 years later, you have to wonder whether the same amount of progress will occur in this area, too.

Most of the quantum computing announcements you hear come from Google, IBM, or Microsoft, but there’s also Honeywell and a few other players. If you want to get ready for the quantum onslaught, maybe start with this tutorial, which runs (mostly) on a simulator.

At first I thought they actually got the chip working, but it turns out this is the press release from last year where they announced they were going to start working on the 127-qubit chip…

If you can’t answer the following question, how can you know whether quantum supremacy is even possible in a universal sense? For any given starting state S, quantum computers cannot determine the final state F of data that has had rewrite system R applied to it iteratively until time step T any faster than a traditional computer. Y/N?
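The question doesn’t pin down R, so as an assumption, here is a sketch that uses an elementary cellular automaton (one of the 256 “Wolfram rules”) on a ring of cells as a concrete rewrite system. The straightforward classical approach simply applies R T times, costing T × |S| cell updates. The sketch only illustrates the setup of the question; it says nothing about whether a quantum computer could do better.

```python
def step(cells: tuple[int, ...], rule: int) -> tuple[int, ...]:
    """One update of elementary CA `rule` (0-255) on a ring of cells.

    Each new cell is the bit of `rule` selected by the 3-bit
    neighbourhood (left, centre, right), with wrap-around at the edges.
    """
    n = len(cells)
    return tuple(
        (rule >> ((cells[(i - 1) % n] << 2) | (cells[i] << 1) | cells[(i + 1) % n])) & 1
        for i in range(n)
    )


def run(seed: tuple[int, ...], rule: int, t: int) -> tuple[int, ...]:
    """Apply the rewrite rule t times to starting state S = seed; return F."""
    state = tuple(seed)
    for _ in range(t):
        state = step(state, rule)
    return state


if __name__ == "__main__":
    # Rule 30 from a single live cell on an 11-cell ring, 5 steps.
    print(run((0, 0, 0, 0, 0, 1, 0, 0, 0, 0, 0), 30, 5))
```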

Also, if you do know and your answer is Yes, what are the implications for data compression, in terms of both speed and the size of the resulting archive?

I’ve always hated that “it” was called a quantum “computer”.

Perhaps it would have been better to call it a finite state synthesiser or rationalizer.

I don’t in any way see conventional computers competing with quantum computers. They are better suited to completely different tasks.

Conventional computers will continue to advance using newer technologies as time goes by. The only way I see quantum computers replacing conventional computers is if quantum computers become so cheap that they can emulate a traditional computer at a lower cost-per-performance ratio.

So to answer your question, let’s start with what the question really means (at least to me): what are the implications of giving a quantum computer a task that is normally handled by, and far better suited to, traditional computers?

Well, this course of action would cause a significant over-investment of technology and resources, when the same problem can be solved with a fraction of the investment using traditional technologies.

There may be some advantage in using a quantum computer to devise a compression scheme in some instances. However, if the compression scheme is already defined, a quantum computer architecture has no real advantage.

It would be a different story if the data to be compressed were encrypted and had to be compressed without the encryption key while remaining valid.

“It would be a different story if the data to be compressed were encrypted and had to be compressed without the encryption key while remaining valid.” That is entirely doable using a conventional computer, and again I see no evidence that a quantum computer could do it any faster, unless it has a qubit for every bit of data to compress. The process is simple enough: randomly generate rewrite rules and starting seeds, then iterate, storing all of the results from each step. Repeat until you have a bit stream that matches your data; then you know which much smaller rule, seed, and iteration count will “unfold” into your target data. Now do you see why the above is such a profoundly important question?
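The search described above can be sketched in Python, again assuming elementary cellular automata as the rewrite rules. Two simplifications are assumptions of this sketch, not the commenter’s stated method. First, it enumerates rules and seeds exhaustively instead of randomly. Second, it treats the “bit stream” as the concatenation of the states produced at each step, so the iteration count follows directly from the target length and the seed width. The loop tries up to 256 × 2^width candidates, so every extra seed bit doubles the work.

```python
from itertools import product
from typing import Optional, Sequence


def step(cells: tuple[int, ...], rule: int) -> tuple[int, ...]:
    """One update of elementary CA `rule` (0-255) on a ring of cells."""
    n = len(cells)
    return tuple(
        (rule >> ((cells[(i - 1) % n] << 2) | (cells[i] << 1) | cells[(i + 1) % n])) & 1
        for i in range(n)
    )


def unfold(seed: Sequence[int], rule: int, t: int) -> list[int]:
    """Concatenate the states produced at steps 1..t into one bit stream."""
    stream: list[int] = []
    state = tuple(seed)
    for _ in range(t):
        state = step(state, rule)
        stream.extend(state)
    return stream


def search(target: Sequence[int], width: int) -> Optional[tuple[int, tuple[int, ...], int]]:
    """Try every rule and every `width`-bit seed; return the first
    (rule, seed, t) whose unfolded stream begins with `target`, else None."""
    goal = list(target)
    t = -(-len(goal) // width)  # ceiling division: steps needed to emit enough bits
    for rule in range(256):
        for seed in product((0, 1), repeat=width):
            if unfold(seed, rule, t)[: len(goal)] == goal:
                return rule, seed, t
    return None


if __name__ == "__main__":
    data = unfold((1, 0, 1, 1, 0, 1), 30, 4)  # 24 bits we pretend to "compress"
    print(search(data, 6))
```

The triple returned is not necessarily the one used to generate the data; any rule and seed that unfold into the same bits count as a match.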

Translating from the Techiform, “Each qubit increases the amount of work a conventional computer has to do to simulate the machine by a factor of two.” = Each qubit doubles the work a conventional computer must do to simulate the new machine. ;-)

No one will ever need more than 640 Qubits!

Funny! Funny! Funny! – made me laugh!