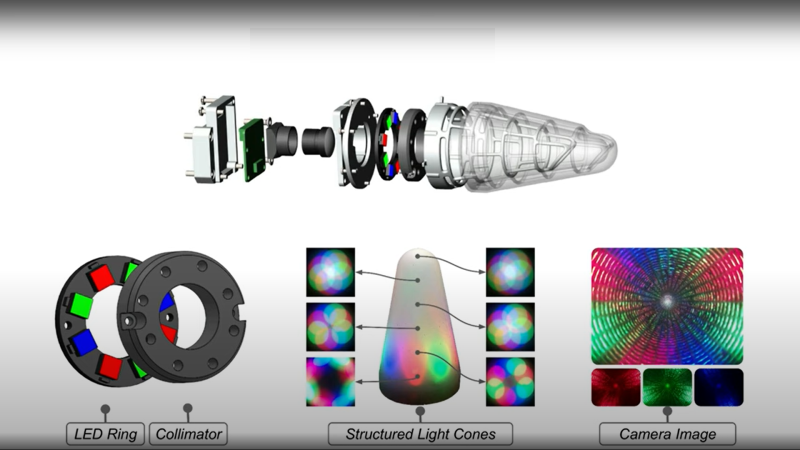

A team from the Max Planck Institute for Intelligent Systems in Germany have developed a novel thumb-shaped touch sensor capable of resolving the force of a contact, as well as its direction, over the whole surface of the structure. Intended for dexterous manipulation systems, the system is constructed from easily sourced components, so should scale up to larger assemblies without breaking the bank. The first step is to place a soft and compliant outer skin over a rigid metallic skeleton, which is then illuminated internally using structured light techniques. From there, machine learning can be used to estimate the shear and normal force components of the contact with the skin, over the entire surface, by observing how the internal envelope distorts the structured illumination.

The novelty here is the way they combine photometric stereo processing with other structured light techniques, using only a single camera. The camera image is fed straight into a pre-trained machine learning system (details on this part of the system are unfortunately a bit scarce) which directly outputs an estimate of the contact shape and force distribution, with spatial accuracy reported good to less than 1 mm and force resolution down to 30 millinewtons. By directly estimating normal and shear force components, the direction of the contact could be resolved to 5 degrees. The system is so sensitive that it can reportedly detect its own posture by observing the deformation of the skin due to its own weight alone!
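To make the last point concrete, here is a minimal Python sketch of how a contact direction falls out of the normal and shear force components. This is plain vector geometry, not the team's pipeline: the function name `contact_direction`, the two-axis split of the shear force, and the example values are all assumptions made for illustration.

```python
import math

def contact_direction(f_normal, f_shear_x, f_shear_y):
    """Return (magnitude, tilt_deg, azimuth_deg) for one contact force.

    Hypothetical helper, not from the paper. Forces are in newtons.
    tilt_deg is the angle between the force and the surface normal;
    azimuth_deg is the heading of the shear component in the tangent plane.
    """
    shear = math.hypot(f_shear_x, f_shear_y)       # total in-plane force
    magnitude = math.hypot(f_normal, shear)        # total force
    tilt_deg = math.degrees(math.atan2(shear, f_normal))
    azimuth_deg = math.degrees(math.atan2(f_shear_y, f_shear_x))
    return magnitude, tilt_deg, azimuth_deg

# Made-up example: equal normal and shear components.
mag, tilt, az = contact_direction(0.30, 0.30, 0.0)
print(f"{mag:.3f} N, tilt {tilt:.1f} deg, azimuth {az:.1f} deg")
```

The point is simply that once both components are estimated per contact, the direction is a pair of `atan2` calls away, so the angular resolution is set by how well the individual components are estimated.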

We’ve not covered all that many optical sensing projects, but here’s one using a linear CIS sensor to turn any TV into a touch screen. And whilst we’re talking about using cameras as sensors, here’s a neat way to use optical fibers to read multiple light-gates with a single camera and OpenCV.

Thanks [LonC] for the tip!

It’s a neat idea but you are really limited by the number of CCD sensors you have… unless you only want to see the surface of a conical object like their ugly thumb example. This isn’t going to be the blueprint for robots that can feel but it is interesting nonetheless.

A laser bouncing on the inside of a reflective cavity could yield interesting results. But the real problem is going to be training the network, as it will not be identical from part to part.

“you are really limited by the number of CCD sensors you have”

I’m not sure how that is a limiting factor? Because of money, the number of needed parts? That’s the case with everything. And you wouldn’t want to use that technique for the whole surface of a robot, but for the manipulator parts where you need higher precision and sensitivity. In other parts other techniques are probably better suited.

“conical object”

The shape of the inner surface defines how many pixels of your sensor are dedicated to what parts of that surface. A conical shape – in comparison to a tube with endcap – dedicates more pixels to the walls of the conical shape and therefore balances the distribution (and therefore the sensitivity) better than a tube.

“ugly thumb”

A non-concern for me. In most use cases today beauty is not the primary factor.

“This isn’t going to be the blueprint for robots that can feel but it is interesting nonetheless.”

It can become a part of it, but only time will tell.

How long of a “thumb” can this still work with? Does it support multiple contacts at once? What is the rate at which it can detect changes? How well does it detect force if multiple points of contact are made at once? What if the available light is zero around it? If the fluid around it is not air, does that change things? How much CPU is required to return real time data? What kind of thickness requirements and material requirements and opacity would be required for this to still work?

“How long of a “thumb” can this still work with?”

There is no sharp cutoff point. It’s a trade-off. Make the “thumb” longer (increase surface area) and you have fewer pixels per area overall, and therefore less sensitivity.
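A rough back-of-the-envelope sketch of that trade-off in Python. It assumes the inner surface is a plain tube with a flat end cap and that every camera pixel lands on it evenly, which a real lens and the real thumb shape won't do. The function name and all numbers are made up for illustration.

```python
import math

def pixels_per_mm2(n_pixels, radius_mm, length_mm):
    """Average pixel density on the inside of a capped tube (hypothetical model)."""
    # Side wall area plus the flat end cap.
    area_mm2 = 2 * math.pi * radius_mm * length_mm + math.pi * radius_mm ** 2
    return n_pixels / area_mm2

# Same assumed camera (640 x 480) and radius, three assumed lengths.
for length_mm in (30, 60, 120):
    density = pixels_per_mm2(640 * 480, 15, length_mm)
    print(f"{length_mm} mm long: {density:.1f} px/mm^2")
```

The density only ever goes down as the length goes up, which is why there is a gradual loss of sensitivity rather than a hard limit.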

“Does it support multiple contacts at once?”

That was answered in the video. Yes. It measures the whole surface at once. So multiple contact points at once are no problem as long as they are far enough apart to differentiate them. If they get too close, you would still be able to tell the force from that bigger area, but would not be able to tell if it’s two points close to each other or only one big point.

“What is the rate at which it can detect changes?”

As always: It depends. How fast can the camera produce / deliver frames? How fast can you process them? That defines the rate.
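As a quick illustration, a small Python sketch of how those two numbers combine. The function name, the `pipelined` flag and the example figures are assumptions, not measurements from the project.

```python
def update_rate_hz(camera_fps, processing_s, pipelined=True):
    """Estimate how many force readings per second you get (hypothetical model).

    pipelined=True: capture and processing overlap, so the slower stage
    sets the rate. pipelined=False: each frame is captured, then processed,
    before the next capture starts.
    """
    if pipelined:
        return min(camera_fps, 1.0 / processing_s)
    return 1.0 / (1.0 / camera_fps + processing_s)

print(update_rate_hz(60, 0.010))          # camera is the bottleneck
print(update_rate_hz(60, 0.050))          # processing is the bottleneck
print(update_rate_hz(50, 0.020, False))   # one frame at a time
```

Either way, a faster camera only helps until the processing step becomes the slower of the two.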

“How well does it detect force if multiple points of contact are made at once?”

Dave cited a few parameters in his article. As to multiple at once, see my comment on “does it support multiple contacts”

“What if the available light is zero around it?”

The flexible skin, at the given thickness, is opaque and the sensor uses LEDs for internal illumination, therefore exterior illumination doesn’t affect the operation. (No external light needed to work.)

“If the fluid around it is not air, does that change things?”

… ehm.. no. It can’t see the outside (opaque skin) therefore it doesn’t matter what material causes a force on the skin whether they are gases, liquids or solids.

“How much CPU is required to return real time data?”

Depends. On your definition of “real time”, the framerate and resolution of the camera, and your processing steps for these frames (in the case of a neural net that would be the number of neurons and the topology used).

“What kind of thickness requirements…”

Depends. It’s the pixel density on the surface that defines a fixed bandwidth (which therefore depends on camera resolution). Thicker skin (at fixed flexibility) reduces sensitivity but increases the range of measurable forces, while a thinner skin (at the same flexibility) allows a smaller range of forces to be measured at greater resolution.

“What … material requirements …”

Since the camera measures a displacement and relates that to the applied force, you need a skin that allows just enough displacement. Therefore a “flexible” skin. Of course if you need a thin skin, you would use a less flexible material (unless you want to measure really small forces) than you would with a thicker skin. So it depends!

“What … opacity would be required for this to still work?”

Exterior illumination is probably unwanted since it could interfere with the measurements, therefore quite opaque. The material used looks similar to something like “Galaxy Black” from Prusament. Not in the material itself (the mentioned “Galaxy Black” is PLA while the material used here is more like a rubber-like TPU), but in the sense that light can penetrate into the material somewhere between 0.01 – 0.1mm. That makes it possible for particles (like glitter in the case of “Galaxy Black”) to be visible. These particles are important in this use case because they work as markers that allow the camera to see a displacement caused by force.

As always: It depends.

Hope I could help a little!

yes.

Most of that is in the video. Although, I myself have a habit of skimming an article.

The geometry would suggest a longer “thumb” would have reduced resolution on the sides, given the same angle and same camera resolution. I’d say material thickness doesn’t matter as long as it’s reflective but matt enough not to allow internal reflection. The shape also matters: the camera can only measure what it can see. Although there was that project for seeing around corners on HaD a while back. If the knuckles were made reflective or fibre optic there’s nothing to say the AI wouldn’t be able to cope with an entire hand.

Glad you told me what it is in the headline. When I glanced at the image I was expecting an entirely different ending.

Interesting use of structured light. I wonder how this might extend to soft deformable grippers (aka tentacles). hmmm

And it can’t detect 2 touches that are along the optical axis, since the nearer touch occludes the one behind it.