Despite the rigorous process controls in factories, anyone who has worked on hardware can tell you that parts may look identical but are not the same. Everything from silicon defects to microscopic variations in materials can cause profoundly head-scratching effects. Perhaps one particular unit heats up faster or locks up when executing a specific sequence of instructions, and we throw our hands up, saying it’s just a fact of life. But what if, instead of rejecting parts whose differences fall outside a narrow range, we could exploit those tiny differences?

This is where physically unclonable functions (PUFs) come in. A PUF is a bit of hardware that returns a value given an input, but each physical instance returns different results despite sharing the same design. PUFs often rely on imperfections in the silicon microstructure. Even physically uncapping the device and inspecting it, an attacker would find it incredibly difficult to reproduce the same imperfections exactly. PUFs should be like the ideal version of a fingerprint: unique and unforgeable.

Because PUFs depend on manufacturing artifacts, there is a certain unpredictability, and deciding just what features to look at is crucial. The PUF also needs to be deterministic, producing the same value for a given input. This means it must be hardened against the variations caused by temperature, aging, power supply fluctuations, and radiation. Techniques such as voting, error correction, or fuzzy extraction are used, but each comes with trade-offs in power and area. Several of these fluctuations, such as those from aging and temperature, are linear or well understood and can be compensated for relatively easily.
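To make “error correction or fuzzy extraction” concrete, here is a toy sketch of the code-offset construction. It assumes a 5× repetition code, uses SHA-256 as a stand-in for a proper randomness extractor, and represents PUF responses as lists of 0/1 bits; `REP`, `enroll`, and `reproduce` are hypothetical names. At enrollment, a random secret is encoded and XORed with the PUF response to form public helper data. Later, a noisy re-reading XORed with the same helper data decodes back to the same secret, as long as no group of five bits has more than two flips.

```python
import hashlib
import random

REP = 5  # hypothetical repetition factor: each secret bit is spread over 5 PUF bits


def encode(bits):
    """Repetition code: repeat every secret bit REP times."""
    return [b for b in bits for _ in range(REP)]


def decode(bits):
    """Majority vote over each group of REP bits (corrects up to 2 flips per group)."""
    return [int(sum(bits[i:i + REP]) > REP // 2) for i in range(0, len(bits), REP)]


def derive_key(secret_bits):
    """Stand-in key derivation (assumption): hash the recovered secret bits with SHA-256."""
    return hashlib.sha256(bytes(secret_bits)).hexdigest()


def enroll(response, rng):
    """Enrollment: choose a random secret and publish helper = response XOR codeword."""
    secret = [rng.randint(0, 1) for _ in range(len(response) // REP)]
    helper = [r ^ c for r, c in zip(response, encode(secret))]
    return helper, derive_key(secret)


def reproduce(noisy_response, helper):
    """Reconstruction: XOR out the helper data, majority-decode, and re-derive the key."""
    noisy_codeword = [r ^ h for r, h in zip(noisy_response, helper)]
    return derive_key(decode(noisy_codeword))


if __name__ == "__main__":
    rng = random.Random(1)
    response = [rng.randint(0, 1) for _ in range(REP * 32)]  # simulated PUF readout
    helper, key = enroll(response, random.Random(2))
    noisy = list(response)
    for start in range(0, len(noisy), REP):  # flip exactly one bit in every group
        noisy[start + rng.randrange(REP)] ^= 1
    print(reproduce(noisy, helper) == key)  # True: every single-bit error was corrected
```

A real design would use a stronger code (BCH codes are a common choice) and would have to account for helper data leaking information when the PUF bits are biased; the repetition code here only shows the mechanics.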

Broadly speaking, there are two types of PUFs: weak and strong. Weak PUFs offer only a few challenge-response pairs and are focused on key generation. The key is then fed into more traditional cryptography, which means the PUF needs to produce exactly the same output every time. Strong PUFs have an exponentially large number of challenge-response pairs (CRPs) and are used for authentication. They still use some error correction, but authentication can also tolerate a few residual errors: a device might be queried fifty times and need to answer at least 95% of the queries correctly to be considered authenticated.
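The fifty-query, 95% rule for strong PUFs can be sketched as a simulation under stated assumptions: the physical PUF is replaced by a hypothetical `ideal_response` derived from SHA-256, with an optional per-read bit-flip rate, and the verifier keeps an enrolled table of challenge-response pairs. Discarding each pair after use, so a recorded answer can’t simply be replayed, is a design choice of this sketch.

```python
import hashlib
import random


def ideal_response(device_id, challenge):
    """Hypothetical stand-in for one device's physics: a pseudo-random response bit."""
    digest = hashlib.sha256(f"{device_id}:{challenge}".encode()).digest()
    return digest[0] & 1


def make_device(device_id, error_rate, rng):
    """Return a callable that reads the simulated PUF, flipping bits at error_rate."""
    def respond(challenge):
        bit = ideal_response(device_id, challenge)
        return bit ^ int(rng.random() < error_rate)
    return respond


def enroll(respond, n_pairs, rng):
    """In a trusted setting, record n_pairs random challenges and their responses."""
    crps = {}
    while len(crps) < n_pairs:
        challenge = rng.getrandbits(64)
        crps[challenge] = respond(challenge)
    return crps


def authenticate(crps, respond, rng, n_queries=50, threshold=0.95):
    """Ask n_queries enrolled challenges; accept if the match rate reaches threshold."""
    challenges = rng.sample(sorted(crps), n_queries)
    matches = sum(respond(c) == crps[c] for c in challenges)
    for c in challenges:
        del crps[c]  # never reuse a pair, so a recorded answer can't be replayed
    return matches / n_queries >= threshold


if __name__ == "__main__":
    rng = random.Random(7)
    genuine = make_device("device-A", error_rate=0.0, rng=rng)
    impostor = make_device("device-B", error_rate=0.0, rng=rng)
    db = enroll(genuine, 200, rng)
    print(authenticate(db, genuine, rng))   # the genuine device should pass
    print(authenticate(db, impostor, rng))  # a different device matches about half and should fail
```

With fifty queries, 95% means at least 48 matching answers. An impostor guessing at random lands near 25, far below the threshold, while a genuine device only needs its error rate to stay low.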

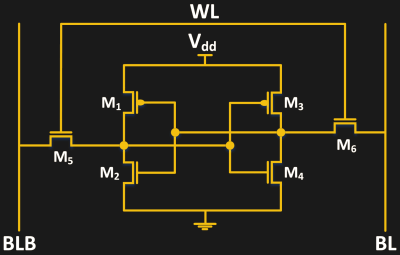

There are dozens of types of PUFs, ranging from simple metal vias to quantum optical systems. One of the more common techniques is to implement a PUF on top of SRAM. Due to process variations between the six transistors that make up a traditional six-transistor SRAM cell, the cell settles into a random value, either 0 or 1, when it first powers up. An extra transistor can be added to give the cell precharge/discharge capabilities, because power-cycling the SRAM to take another measurement can be inefficient.
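The power-up behavior can be illustrated with a toy model. It assumes each cell’s transistor mismatch is a fixed Gaussian value chosen at “fabrication” and that each power-up adds smaller Gaussian noise; the cell reads 1 when the sum is positive. `SimulatedSram` and its parameters are hypothetical. Comparing two power-ups of the same chip against power-ups of two different chips shows why SRAM works as a fingerprint: a chip mostly agrees with itself, while different chips disagree on roughly half their bits.

```python
import random


class SimulatedSram:
    """Toy SRAM power-up model (hypothetical parameters).

    Each cell gets a fixed mismatch value at "fabrication"; every power-up adds
    smaller random noise, and the cell settles to 1 if the sum is positive.
    """

    def __init__(self, n_cells, device_seed, noise_sigma=0.1):
        fab = random.Random(device_seed)  # per-device manufacturing variation
        self.mismatch = [fab.gauss(0.0, 1.0) for _ in range(n_cells)]
        self.noise_sigma = noise_sigma

    def power_up(self, rng):
        """Return the startup value of every cell for one power cycle."""
        return [int(m + rng.gauss(0.0, self.noise_sigma) > 0) for m in self.mismatch]


def fractional_hamming(a, b):
    """Fraction of positions where two bit lists differ."""
    return sum(x != y for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    noise = random.Random(0)
    chip_a = SimulatedSram(4096, device_seed=1)
    chip_b = SimulatedSram(4096, device_seed=2)
    same = fractional_hamming(chip_a.power_up(noise), chip_a.power_up(noise))
    diff = fractional_hamming(chip_a.power_up(noise), chip_b.power_up(noise))
    print(f"same chip: {same:.3f}, different chips: {diff:.3f}")
```

In this model only cells whose mismatch is close to zero flip between power-ups; real SRAM also has cells that lean strongly toward one value and temperature-dependent behavior, which the sketch ignores.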

This all sounds interesting, but how does it affect you? While it’s still a titanic-sized task to dope your own silicon and etch your own ASIC, it’s becoming easier than ever to get your own silicon via OpenPDKs. Some FPGAs, such as the Xilinx Zynq UltraScale+ family, have PUFs built in. Since many PUFs are built on top of SRAM, it’s possible to bring them to most FPGAs, as this GitHub repo demonstrates. Even an Arduino can use an SRAM module as a PUF. The ESP32 comes with SRAM onboard, and its SDK allows you to carve out sections that won’t be touched by the bootloader, so there’s another PUF you can play with.
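If you do capture raw power-up contents from such an SRAM region, a sensible first step is finding which bits stay the same across repeated power cycles. The sketch below assumes you have already read several dumps of the same region as `bytes` objects (how you read them is board-specific and not shown); `stable_bits` and `stable_fraction` are hypothetical helper names, and the example dumps are made up.

```python
def stable_bits(dumps):
    """Given several power-up dumps of one SRAM region, mark bits that never changed.

    Returns (mask, reference): mask has a 1 bit wherever every dump agrees with
    the first dump, which serves as the reference fingerprint.
    """
    if len(dumps) < 2:
        raise ValueError("need at least two dumps to judge stability")
    length = len(dumps[0])
    if any(len(d) != length for d in dumps):
        raise ValueError("all dumps must cover the same region")
    reference = dumps[0]
    mask = bytearray(b"\xff" * length)
    for dump in dumps[1:]:
        for i in range(length):
            # XOR is 1 where this dump differs; clear those positions in the mask
            mask[i] &= ~(reference[i] ^ dump[i]) & 0xFF
    return bytes(mask), bytes(reference)


def stable_fraction(mask):
    """Fraction of bits marked stable."""
    return sum(bin(b).count("1") for b in mask) / (8 * len(mask))


if __name__ == "__main__":
    dumps = [  # made-up two-byte dumps from three power cycles
        bytes([0b10101010, 0xFF]),
        bytes([0b10101011, 0xFF]),
        bytes([0b10101010, 0x7F]),
    ]
    mask, ref = stable_bits(dumps)
    print(mask.hex(), stable_fraction(mask))  # fe7f 0.875
```

Only the bits marked stable would then serve as the fingerprint, or the whole region could be fed through error correction instead.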

Of course, PUFs aren’t a magic security measure. Given enough time alone with a device, a hacker could harvest a large set of challenge-response pairs, even from a strong PUF, and answer later challenges by looking them up in that dictionary. Machine learning attacks can be particularly effective, as they can learn and exploit any correlation present in the responses. Side-channel attacks such as power analysis can target the error correction and other post-processing steps, exposing important details.
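Machine learning attacks are easiest to see on the arbiter PUF, a classic strong PUF that isn’t otherwise discussed here and is used only as an assumed example. Under the standard additive delay model, its response is the sign of a weighted sum of “parity” features of the challenge, so an attacker who collects enough CRPs can fit those weights with plain logistic regression. The sketch below simulates a 16-stage arbiter PUF and trains on observed CRPs in pure Python; all names and sizes are hypothetical.

```python
import math
import random

N_STAGES = 16  # hypothetical arbiter PUF size


def features(challenge):
    """Parity feature map of the additive delay model:
    phi[i] = prod_{j >= i} (1 - 2*c[j]) for i < N_STAGES, plus a constant 1."""
    phi = [1] * (N_STAGES + 1)
    acc = 1
    for i in range(N_STAGES - 1, -1, -1):
        acc *= 1 - 2 * challenge[i]
        phi[i] = acc
    return phi


class ArbiterPuf:
    """Simulated arbiter PUF: Gaussian stage-delay differences fixed per device."""

    def __init__(self, seed):
        rng = random.Random(seed)
        self.weights = [rng.gauss(0.0, 1.0) for _ in range(N_STAGES + 1)]

    def respond(self, challenge):
        delay = sum(w * f for w, f in zip(self.weights, features(challenge)))
        return int(delay > 0)


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def random_challenge(rng):
    return [rng.randint(0, 1) for _ in range(N_STAGES)]


def train_model(crps, rng, epochs=30, lr=0.05):
    """Fit logistic-regression weights to observed (challenge, response) pairs with SGD."""
    data = [(features(c), r) for c, r in crps]
    w = [0.0] * (N_STAGES + 1)
    for _ in range(epochs):
        rng.shuffle(data)
        for phi, r in data:
            p = sigmoid(sum(wi * fi for wi, fi in zip(w, phi)))
            step = lr * (r - p)  # log-likelihood gradient for this sample
            w = [wi + step * fi for wi, fi in zip(w, phi)]
    return w


def predict(w, challenge):
    return int(sum(wi * fi for wi, fi in zip(w, features(challenge))) > 0)


if __name__ == "__main__":
    rng = random.Random(42)
    puf = ArbiterPuf(seed=123)
    observed = [(c, puf.respond(c)) for c in (random_challenge(rng) for _ in range(2000))]
    model = train_model(observed, rng)
    fresh = [random_challenge(rng) for _ in range(1000)]
    accuracy = sum(predict(model, c) == puf.respond(c) for c in fresh) / len(fresh)
    print(f"model predicts {accuracy:.1%} of unseen responses")
```

A model like this is exactly the emulation an attacker needs. Variants such as XOR arbiter PUFs make the model nonlinear and harder, though not impossible, to learn.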

Optical PUFs can protect against some of these attacks. An optical PUF shines light into a complex scattering medium, such as glittery varnish or metallic paint, and the scattered light forms the response. But even this is still vulnerable to the emulation problem. A quantum readout solves this by making it harder to know what the challenge is. When the challenger sends single photons and reads the coherent scattering, only the challenger knows what the challenge and the response are: an attacker cannot read the challenge without modifying or destroying it. While this has been demonstrated in a lab, quantum PUFs are not yet a commercial reality.

For now, PUFs exist in your SIM cards, IP cameras, RFID tags, RISC-V IoT devices, and thousands of other devices you interact with. The next time you reach for that unique chip identifier, you might know a little more about where it’s coming from.

Did someone say SIM card?

https://youtu.be/JFpLGDmcx2g

Wow, what a great video. (Submit that to the tips line!)

> Even physically uncapping the device and inspecting it,

For some of the manufacturing yield issues we face, it’s precisely the physical encapsulation, with the resulting stress on bond wires and the changes in capacitance from the packaging material, that drives the yield reductions. So it’s entirely possible you could build something that relied on the packaging for your unique interaction data, which could make replication even more difficult.

Wow, where to begin? I only recently stumbled on the concept of PUFs. I never gave it much thought, but if any manufacturer starts to “bet the bank” on this, they may be in for a big loss. How does one create something that is chaotic and random yet repeatable, and do it reliably and cheaply “95% of the time”? What is the confidence level of that 95%? OK, so in which PUF multiverse do we exist right now?

I thought that the implementation of GUIDs was “done”. One strategy for GUIDs is to make them really big! Microchip, Analog Devices, and others make preprogrammed 48- and 64-bit GUID chips and have for some time. Before I retired (2015), I used FTDI chips in our product that used a GUID to “identify” a specific serial-to-USB converter IC so it was always assigned the same SIO port ID, whereas other parts enumerated new ports and quickly used up all the port IDs. It’s nice to be able to “know” what port to attach. But that’s also a downside, because it did the same thing in a manufacturing environment, so the test process had to wipe the ID after processing each part (which simplified the manufacturing instructions, since we knew which port ID it would use and could put that in the build instructions). It also complicated the user’s environment if they used different laptops to run our stuff, complicating their instructions. So our PC apps needed to “know” which port to use, and that required more peeking behind the curtain.

Is there any real winning strategy for this game that is “one size fits all”?

> I thought that the implementation of GUIDs was “done”.

PUFs and GUIDs are different, at least in some sense. A GUID is just a static identifier, whereas a PUF is a function: it takes an input and gives you an output that is unique to the device (so in some sense a GUID is a ‘flat’ PUF, which is… kinda silly). You can nominally convert a GUID into a PUF using cryptography (the GUID is a private key), but that’s useful only if you can prevent the GUID from being extracted. The advantage of having the key generated physically by the hardware (rather than digitally stored) is that it makes the key next-to-impossible to extract.
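The “GUID as a key” idea can be sketched with a keyed hash: the device answers a challenge with HMAC-SHA256 over the challenge, keyed by its stored secret. This uses a symmetric shared secret rather than a private/public key pair for brevity, and `StoredSecretDevice` and `verify` are hypothetical names. The weakness the comment points out is visible in the code: the secret is an ordinary value in memory, so anyone who can read it out can clone the device.

```python
import hashlib
import hmac
import secrets


class StoredSecretDevice:
    """Hypothetical 'digital PUF': response = HMAC-SHA256(secret, challenge).

    Unlike a physical PUF, the secret is an ordinary stored value, so anyone who
    can read this device's memory can compute every response and clone it.
    """

    def __init__(self, secret: bytes):
        self._secret = secret

    def respond(self, challenge: bytes) -> bytes:
        return hmac.new(self._secret, challenge, hashlib.sha256).digest()


def verify(shared_secret: bytes, device: StoredSecretDevice) -> bool:
    """Verifier side: send a fresh random challenge and check the device's answer."""
    challenge = secrets.token_bytes(16)
    expected = hmac.new(shared_secret, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(device.respond(challenge), expected)


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # plays the role of the GUID-derived secret
    device = StoredSecretDevice(key)
    print(verify(key, device))                      # right secret: accepted
    print(verify(secrets.token_bytes(32), device))  # wrong secret: rejected
```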

I could see something like that in a security key, which (hopefully) will become more important in a security-conscious world.

Yeah, although the big difficulty is that it’s extremely hard to know exactly how many true bits of *uniqueness* you get because, well, it’s an uncontrolled process. If you take a look at the FPGA implementation, for instance, with a 96-bit ID, only about 63 of the bits are stable in one configuration, and the actual number of “unique” bits you get has to be strictly less than that. Some combination of the bits may be common between multiple devices, which, if someone could figure that out, would reduce the seed search space considerably. And in order to realize that before deployment, you’d have to test *lots* of devices, and you’d still be somewhat in the dark if you’re not the manufacturer of the device and you’re kind of “abusing” it to create a PUF, since out of the blue the entire PUF could become totally regular due to a manufacturing process change.
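The point about uniqueness can be made a little more quantitative, assuming you have responses from many devices: estimate each bit’s min-entropy as -log2 of the probability of its more common value, then sum over bits. Because that sum assumes the bits are independent, it is only an upper bound; bit positions that always agree across devices, as the comment warns, mean the true figure is lower. `per_bit_min_entropy`, `min_entropy_upper_bound`, and `identical_bit_pairs` are hypothetical helpers, and the sample data is made up.

```python
import math


def per_bit_min_entropy(responses):
    """Min-entropy of each bit position across a population of devices.

    responses: one list of 0/1 bits per device, all the same length.
    For each position, H_min = -log2(max(p1, 1 - p1)), where p1 is the
    fraction of devices reading 1.
    """
    n_devices = len(responses)
    n_bits = len(responses[0])
    result = []
    for i in range(n_bits):
        p1 = sum(r[i] for r in responses) / n_devices
        result.append(-math.log2(max(p1, 1.0 - p1)))
    return result


def min_entropy_upper_bound(responses):
    """Sum of per-bit min-entropies: only an upper bound, since it assumes independence."""
    return sum(per_bit_min_entropy(responses))


def identical_bit_pairs(responses):
    """Bit positions that agree on every device, so the second adds no real uniqueness."""
    columns = [tuple(r[i] for r in responses) for i in range(len(responses[0]))]
    return [
        (i, j)
        for i in range(len(columns))
        for j in range(i + 1, len(columns))
        if columns[i] == columns[j]
    ]


if __name__ == "__main__":
    devices = [[1, 0, 0], [0, 1, 1], [1, 1, 1], [0, 0, 0]]
    print(min_entropy_upper_bound(devices))  # 3.0, yet bits 1 and 2 are copies
    print(identical_bit_pairs(devices))      # [(1, 2)]
```

With only a handful of devices these estimates are very noisy, which is the comment’s point: you would need to measure *lots* of parts before trusting the number.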

So for real security you really want it to be *designed* as a PUF at the silicon level, so that you can really measure the randomness of it at the process level.

They’re neat, but when I was researching them, they were kind of a solution in search of a problem. The cost of spending silicon area on security outweighs the benefits of having this functionality, at least in the eyes of most IC manufacturers.

Great article! However, I disagree with the “needs to be deterministic” part. Quantum tomography with the assistance of “traditional” PCs has come a long way. You can have a practical and systematically improvable solution, for example using classical shadows.