You may have heard Linux pundits discussing x86-64-v3. Can recompiling Linux code to use this bring benefits? To answer that question, you probably need to know what x86-64-v3 is, and [Gary Explains]… well… explains it in a recent video.

If you’d rather digest text, Red Hat has a recent article about its experiments with the instruction set in RHEL 10. As that article shows, most of the new instructions add vector and bit-manipulation capabilities. They also include more flexible instruction forms that write their result to an explicit destination register instead of overwriting one of the operand registers.
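To make the bit-manipulation side concrete, here is a minimal sketch of ordinary C idioms that GCC can map onto single BMI1, BMI2 or LZCNT instructions when built with `-O2 -march=x86-64-v3`. The function names are made up for illustration, it assumes GCC or Clang (for `__builtin_clzll`), and whether a particular compiler version really emits BLSR, BLSI, SHLX or LZCNT is something to confirm in the `-S` assembly output rather than take for granted.

```c
/* Sketch: function names are illustrative, not from any library. */
#include <stdint.h>

/* Clear the lowest set bit: 0b1100 -> 0b1000.
   With BMI1 enabled, GCC can emit this as a single BLSR. */
uint64_t clear_lowest_set_bit(uint64_t x) {
    return x & (x - 1);
}

/* Isolate the lowest set bit: 0b1100 -> 0b0100 (a BLSI candidate). */
uint64_t lowest_set_bit(uint64_t x) {
    return x & -x;
}

/* Variable left shift. With BMI2, GCC can use SHLX, which takes the count
   from any register, writes a separate destination register and leaves the
   flags untouched; "& 63" matches the masking the hardware applies anyway. */
uint64_t shift_left(uint64_t x, unsigned n) {
    return x << (n & 63);
}

/* Leading-zero count that is defined for zero. With LZCNT available, the
   compiler can typically fold the zero check into one instruction. */
unsigned leading_zeros(uint64_t x) {
    return x ? (unsigned)__builtin_clzll(x) : 64u;
}
```

Compiling the file once with `-march=x86-64` and once with `-march=x86-64-v3` and diffing the assembly is a quick way to see what the newer level buys.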

Of course, none of this matters for high-level code unless the compiler uses the new instructions. However, starting with version 12, GCC automatically vectorizes code at the -O2 optimization level.
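A minimal sketch of the kind of loop this is about; the function name and the fixed `BLOCK` size are assumptions for illustration. GCC's documentation describes the very cheap cost model used at `-O2` as vectorizing only when the vector code can fully replace the scalar loop, for example when the trip count is a known multiple of the vector width, so the sketch keeps the count fixed and uses `restrict` to rule out overlapping arrays.

```c
/* Sketch: saxpy_block and BLOCK are hypothetical names. */
#include <stddef.h>

#define BLOCK 1024 /* fixed size, a multiple of any SSE/AVX vector width */

/* y[i] += a * x[i] over one fixed-size block. The restrict qualifiers
   promise x and y don't overlap, so no run-time alias checks are needed. */
void saxpy_block(float a, const float *restrict x, float *restrict y) {
    for (size_t i = 0; i < BLOCK; ++i)
        y[i] += a * x[i];
}
```

Adding `-fopt-info-vec` makes GCC report which loops it vectorized. With the default target the vectors are 128-bit SSE2; with `-march=x86-64-v3` they can be 256-bit AVX2, and the multiply-add may also be fused into an FMA instruction.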

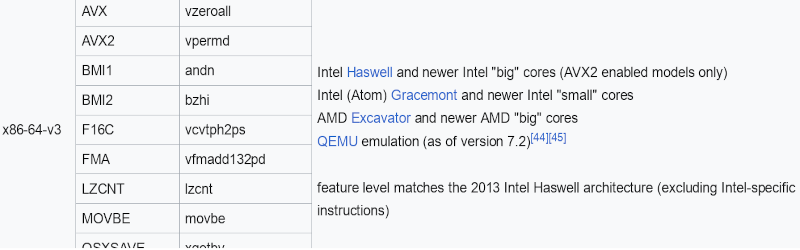

There’s a snag, of course: code built this way won’t run on older CPUs. How old? Intel has supported these instructions since its Haswell CPUs in 2013. Although some Atom CPUs have had v3 since 2021, some later Intel Atoms still don’t fully support it. AMD came to the party in 2015. There is a newer level, x86-64-v4, which adds AVX-512; however, it is still too new, so most people, including Red Hat, plan to target v3 for now. You can find a succinct summary table on Wikipedia.

So, outside of Atom processors, only fairly old hardware lacks the v3 instructions. Some of these instructions are pretty pervasive, so switching between code paths at run time doesn’t seem very feasible.
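That holds for code compiled wholesale for v3, but for a handful of hot functions, per-function dispatch is a common workaround. A minimal sketch, assuming GCC or Clang on x86-64: the `target("avx2")` attribute lets the compiler use AVX2 inside one function only, and `__builtin_cpu_supports` picks a version at run time. The `sum_*` names are hypothetical, and a real program would typically make the choice once rather than on every call.

```c
/* Sketch: sum_generic, sum_avx2 and sum_ints are hypothetical names. */
#include <stddef.h>

/* Baseline version: safe on any CPU. */
static long long sum_generic(const int *a, size_t n) {
    long long s = 0;
    for (size_t i = 0; i < n; ++i)
        s += a[i];
    return s;
}

#if defined(__x86_64__) && (defined(__GNUC__) || defined(__clang__))
/* Same source, but the compiler may use AVX2 inside this function only. */
__attribute__((target("avx2")))
static long long sum_avx2(const int *a, size_t n) {
    long long s = 0;
    for (size_t i = 0; i < n; ++i)
        s += a[i];
    return s;
}
#endif

/* Chooses an implementation based on what the running CPU reports. */
long long sum_ints(const int *a, size_t n) {
#if defined(__x86_64__) && (defined(__GNUC__) || defined(__clang__))
    __builtin_cpu_init(); /* harmless if already initialized */
    if (__builtin_cpu_supports("avx2"))
        return sum_avx2(a, n);
#endif
    return sum_generic(a, n);
}
```

GCC and newer Clang releases can also generate this kind of dispatcher automatically through the `target_clones` attribute.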

We wonder whether older processors would trip illegal-instruction interrupts on these instructions. If so, you could add emulated versions, the same way old CPUs without a math coprocessor used to emulate one.
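For what it’s worth, x86 CPUs do raise an invalid-opcode exception (#UD) when they hit an instruction they don’t implement, and Linux delivers it to the process as SIGILL. A minimal sketch, assuming Linux or another POSIX system and GCC or Clang on x86-64, that uses this to probe a single AVX2 instruction: it installs a temporary SIGILL handler and jumps back out with `siglongjmp`. The function name is hypothetical, and real emulation would need a handler that decodes and emulates the faulting instruction, which this does not attempt.

```c
/* Sketch: avx2_probe is a hypothetical name; POSIX signals assumed. */
#define _POSIX_C_SOURCE 200809L
#include <setjmp.h>
#include <signal.h>
#include <string.h>

static sigjmp_buf probe_env;

/* SIGILL handler: abandon the faulting instruction, return to the probe. */
static void on_sigill(int sig) {
    (void)sig;
    siglongjmp(probe_env, 1);
}

/* Returns 1 if an AVX2 instruction executed, 0 if it raised SIGILL,
   and -1 if the probe isn't available on this platform. */
int avx2_probe(void) {
#if defined(__x86_64__) && (defined(__GNUC__) || defined(__clang__))
    struct sigaction sa, old;
    volatile int ok = 0; /* volatile: must survive the siglongjmp */

    memset(&sa, 0, sizeof sa);
    sa.sa_handler = on_sigill;
    sigemptyset(&sa.sa_mask);
    if (sigaction(SIGILL, &sa, &old) != 0)
        return -1;

    if (sigsetjmp(probe_env, 1) == 0) {
        /* VPADDD on 256-bit registers is AVX2; VZEROUPPER clears the upper
           halves afterwards to avoid AVX/SSE transition penalties. */
        __asm__ volatile("vpaddd %%ymm0, %%ymm0, %%ymm0\n\tvzeroupper" ::: "xmm0");
        ok = 1;
    }

    sigaction(SIGILL, &old, NULL); /* restore the previous handler */
    return ok;
#else
    return -1;
#endif
}
```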

Keep in mind that the debate about dropping support for anything older than x86-64-v3 doesn’t mean Linux itself will stop caring; it’s simply a question of how distributions compile their packages. Compiling everything yourself is possible but daunting, and there will doubtless be distributions that elect to support older CPUs for as long as the Linux kernel allows.
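Because that choice happens at compile time, each binary carries its target level with it. A minimal sketch, assuming GCC 11+ or Clang 12+ (the first releases to accept `-march=x86-64-v3`), that reports which level a file was built for by checking predefined feature macros. The function name is hypothetical, and it tests only a representative subset of each level’s features.

```c
/* Sketch: compiled_x86_64_level is a hypothetical name. Checks a
   representative subset of each level's feature macros, not all of them. */
int compiled_x86_64_level(void) {
#if defined(__AVX512F__) && defined(__AVX512BW__) && defined(__AVX512CD__) && \
    defined(__AVX512DQ__) && defined(__AVX512VL__)
    return 4;
#elif defined(__AVX2__) && defined(__BMI2__) && defined(__FMA__)
    return 3;
#elif defined(__SSE4_2__) && defined(__POPCNT__) && defined(__SSSE3__)
    return 2;
#elif defined(__x86_64__)
    return 1; /* baseline x86-64 */
#else
    return 0; /* not an x86-64 build */
#endif
}
```

Built with `-march=x86-64-v2` it should return 2, and with `-march=x86-64-v3` it should return 3.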

Intel would like to drop older non-64-bit hardware from its CPUs. If you want to sharpen up your 64-bit assembly language skills, try a GUI.

(Title image from Wikipedia)

Benchmarks?

I am left with two thoughts after reading the summary of differences and variations in the Wikipedia table: what a mess; and how predictable that we should be left in this situation by the megacorps.

Would it be that hard for big distros to offer a repository of v2, another of v3 and another of v4?

It’s not like compiling would take that much processing power, and yeah, it’s about triple the storage, but that’s not much in the grand scheme of things.

Good point. In the past, Linux has been ported to just about every niche system.

Many of which I considered to be worthless.

And now the same kind of nerdy people can’t afford supporting archs that have an actual user base?

The problem, IMO, is not the compile time or storage, but the maintenance.

While you may think “Oh, it’s just how it’s compiled, you can still maintain patches upstream,” that won’t turn out to be the case: v3 will need specific patches in its branch, v2 will too, and so on. Each is different and will have different issues that need specific patches.

You have now added 3 new branches that will need to be maintained, and maintainers are already in short supply.

Heck, I hate maintaining even my project code on github.

Gentoo does it, so it’s not impossible.

It gets ported by the people who want to use those “niche systems”. Not the “same kind of nerdy people”. The ACTUAL people.

If a distro supports something obscure, which they rarely do, it’s because the people who wanted that support stepped up and put in the effort to get the packaging system to support it, do the builds, handle the bugs and edge cases, etc. Again, not “the same kind of nerdy people”. The actual people.

So if you want something, maybe YOU should be prepared to provide the support resources?

“So if you want something, maybe YOU should be prepared to provide the support resources?”

God, no! I don’t want Linux, at all.

It’s rather that there’s little choice these days, OS-wise.

You have to pick one of the “diseases” that you believe will harm you the least. 😟

+1k

A friendly reminder that NetBSD is still a thing.

Now that I think of it, it perhaps would already be sufficient to have two repositories/builds.

One using the current generation and another one as a fallback, using the lowest common denominator.

That would also provide a testbed for comparing the original AMD64 with the current feature set, including performance and stability.

“So, outside of Atom processors, you must have some old hardware to not have the v3 instructions. Some of these instructions are pretty pervasive, so switching at run time doesn’t seem very feasible.”

That’s a joke, right?

What about AMD Athlon 64 X2? Athlon II X4?

Or Intel Xeons? The Mac Pro 2006 had two Intel Xeon 51xx, I believe.

These processors are still in use, even if only by a smaller number of enthusiasts.

But isn’t that the very main audience of Linux, the computer freaks?

Atom.. I think I’m going off my rocker. 😵💫😂

Hell, how about the T430? X230? X220? And then you have your Dell 7010s. All of these have Sandy Bridge or Ivy Bridge processors.

There are a HUGE number of extremely popular computers that don’t support v3.

They said old hardware and one of your examples is legally old enough to vote (in the US).

While I agree one of the benefits of Linux is how useful it can be for supporting old hardware, you aren’t doing a great job arguing against what was said.

Sadly, the Athlon 64 and Athlon X2 are so slow and power-inefficient that you can buy a $3 CPU and a $15 motherboard, keep your RAM, and jump to a quad-core Xeon. The power savings alone will pay for it, never mind the 2-4x performance.

“While compiling everything yourself is possible but daunting, there will doubtlessly be distributions that elect to maintain support for older CPUs for as long as the Linux kernel will allow it.”

Slackware says hi.

http://www.slackware.com/

I wonder if we’re leaving behind parts of the world where people don’t have the luxury of upgrading hardware often. It would be kinda sad if still-relevant tech ends up discarded because of this; it’s not like we’re living in a world without enough trash.

Illegal-instruction interrupt or not, is there a standard bit of code — preferably with standard library support — that would let us test whether the v3 instructions are available, so we could switch libraries and/or tell the user to fetch the right build?
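Inside a program, GCC and Clang offer `__builtin_cpu_supports()` for individual features. For deciding which build to install, here is a sketch of another route on Linux: read the `flags` line of `/proc/cpuinfo` and check it against the level definitions. The function names are hypothetical, baseline (v1) features are assumed rather than checked, and some cpuinfo spellings differ from the instruction-set names (SSE3 appears as `pni`, LZCNT as `abm`), while `xsave` is used as a stand-in for OSXSAVE, which the kernel doesn’t list. On glibc 2.33 or later, running the dynamic loader with `--help` (for example `/lib64/ld-linux-x86-64.so.2 --help`) also lists which x86-64 levels it considers supported.

```c
/* Sketch: all function names here are hypothetical. */
#include <stdio.h>
#include <string.h>

/* Nonzero if flag appears as a whole word in the whitespace-separated list. */
static int has_flag(const char *flags, const char *flag) {
    size_t len = strlen(flag);
    for (const char *p = flags; (p = strstr(p, flag)) != NULL; p += len) {
        int starts = (p == flags) || p[-1] == ' ' || p[-1] == '\t';
        int ends = p[len] == '\0' || p[len] == ' ' || p[len] == '\t' ||
                   p[len] == '\n';
        if (starts && ends)
            return 1;
    }
    return 0;
}

/* Nonzero if every name in the NULL-terminated list is present. */
static int has_all(const char *flags, const char *const *names) {
    for (; *names != NULL; ++names)
        if (!has_flag(flags, *names))
            return 0;
    return 1;
}

/* Maps a /proc/cpuinfo flags list to an x86-64 level from 1 to 4.
   Baseline (v1) features are assumed, not checked. */
int x86_64_level_from_flags(const char *flags) {
    /* cpuinfo spellings: pni = SSE3, lahf_lm = LAHF/SAHF, abm = LZCNT;
       xsave stands in for OSXSAVE, which the kernel does not list. */
    static const char *const v2[] = {"cx16", "lahf_lm", "popcnt", "pni",
                                     "sse4_1", "sse4_2", "ssse3", NULL};
    static const char *const v3[] = {"avx", "avx2", "bmi1", "bmi2", "f16c",
                                     "fma", "abm", "movbe", "xsave", NULL};
    static const char *const v4[] = {"avx512f", "avx512bw", "avx512cd",
                                     "avx512dq", "avx512vl", NULL};
    if (!has_all(flags, v2)) return 1;
    if (!has_all(flags, v3)) return 2;
    if (!has_all(flags, v4)) return 3;
    return 4;
}

/* Copies the first "flags" line of /proc/cpuinfo (text after the colon)
   into buf. Returns 0 on success, -1 otherwise; buf should hold a few KB. */
int read_cpu_flags(char *buf, size_t size) {
    FILE *f = fopen("/proc/cpuinfo", "r");
    int rc = -1;
    if (f == NULL)
        return -1;
    while (fgets(buf, (int)size, f) != NULL) {
        if (strncmp(buf, "flags", 5) == 0) {
            char *colon = strchr(buf, ':');
            if (colon != NULL) {
                memmove(buf, colon + 1, strlen(colon + 1) + 1);
                rc = 0;
            }
            break;
        }
    }
    fclose(f);
    return rc;
}
```

For example: `char buf[8192]; if (read_cpu_flags(buf, sizeof buf) == 0) printf("x86-64-v%d\n", x86_64_level_from_flags(buf));`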

As far as people grumbling about instructions not available on older processors goes: C’mon, folks, that goes back at least to the introduction of the Z80, and I think back into the mainframe age. As long as it’s a strict superset, you can always compile to the common base instruction set, while leaving the new features for the hackers who like dancing on the razor’s edge. If there are multiple incompatible supersets, that’s a nuisance, but nothing we haven’t been dealing with since the dawn of computing.

Of course what I really want is either those illegal-instruction interrupts or updatable microcode. Or both.

Or the ability to run microcode directly and do all the scheduling and such as another compilation stage during installation, which is part of what the work on wide instructions and/or RISC was originally reaching toward.

Gentoo isn’t that daunting and will compile everything to your specific processor regardless of what instruction set it supports.

I don’t run Linux. I’ve tried, but a lot of the stuff I do (VB programming, AppleWin, etc.) I run on Windows 10. My processor, the AMD 7950X, came out last year.

It replaced an older AMD FX-8150, which was the latest at the time. I also went from 8 GB of RAM to 32 GB. The difference in speed is amazing. For what I do, it’s fast enough. I’ve never had a machine this fast or with this much RAM before, so I’m liking it quite a lot. I’ve tried Ubuntu in the past but always find myself coming back to Windows. So, what does this instruction set do for Windows?

Mostly I’m either online on an emulated D-Dial system (https://www.ddial.com) or coding in VB. So does this instruction set actually speed things up?

I also do 6502 machine language, but x86 is something I don’t know.

VB hasn’t been updated in decades; you won’t notice anything.

I’m sorry to tell you that you could have upgraded at any time in the last 8 years for under $50. You went from one of the most under-performing and inefficient architectures to one of the highest regarded efficient architectures.

Also, it was a 4-core falsely advertised as an 8-core, one that a 2-core could beat only a handful of years later.

You could have upgraded to a 12100F and been 4-8x faster (it can handily beat 6-core processors and some slower 8-cores). Even an Athlon 3000 (Ryzen) keeps up with it, stock for stock.

You upgraded, but in all honesty, over time most users should stick to the midrange (preferably one generation behind for maximum savings) and upgrade more frequently. You spend less money over time (not even counting the power you save), and you enjoy better performance on average. You can also repurpose the extra hardware.

Even though the 81xx chips were said to be significantly worse than the 83xx I am familiar with, and the scheduling was presumably worse at first, I never thought it was fair to call them 4-cores. They didn’t scale as well as you’d like with the shared FPU, maybe 80%, but they were also measured in ways that favored their competition, and people still believed UserBenchmark back then. And hey, when you run a single-core video game on a clean test OS at base clocks and look at how many hundred peak FPS they get at low resolution, they might look awful. Especially since Intel was doing well for a few years after the 2500K, they were a weak opponent facing some stiff competition. The Piledriver chips look less bad when you have a bunch of junk running in the background that doesn’t use much floating point but still takes up run time. It also helps if you consider that the *equal-priced* Intel alternative may not even have had overclocking enabled at the time, or may have needed a new motherboard again.

I went from Deneb to Piledriver, skipping Thuban and Bulldozer, mainly because it was cheaper for me. The clocks were better with Piledriver, although I did have to limit them for heat reasons, like many people did. At the time, nobody was getting close to full performance without overclocking, no matter what chip they had. I might have been happy with a Thuban 6-core, which wouldn’t be that much worse in multicore, but hey.

Part of the reason I think they were a bit hard done by, even though they weren’t very good, is that we’re in a slightly similar situation now. Intel is counting E-cores the same as full cores, but they’re really not equivalent. And their chips consume a bit much energy for something that’s supposed to be ‘efficient’. They may not be as bad as Bulldozer, but fair’s fair.

What’s Windoze? Ha…. I run Linux on everything. As for programming languages, VB would be the last language on Earth that I’d program in. But no matter what you run or use, I bet most of us don’t care what the compiler/interpreter instructions are to get the job done. All we care about is that the OS runs on the laptop/desktop/workstation/server/SBC/etc. so we can get our work/play done. And we’ll complain if it doesn’t ;) . So the lowest common 64-bit denominator works for me as long as the work completes in a reasonable amount of time. And for those who ‘need’ the ‘new’ instructions, why, just recompile the OS or application as needed.

I found out the hard way that my Sandy Bridge Xeon is only x86-64 v2. I turned on some v3 optimizations and my simple socket program started crashing in FD_ZERO() macros.

E5 or E3? Z97 boards are around $15-20 if you shop around. And the E3-1241 v3 is regularly $15 (it’s basically an i7-4770 or E3-1270 v3). I just got an X99 board and a 5930K for about $50 together.