Imagine you want to iterate on a shock-absorbing structure design in plastic. You might design something in CAD, print it, then test it on a rig. You’d then note down your measurements and repeat the process. But what if a robot could do all that instead, and do it for years on end? That’s precisely what’s been going on at Boston University.

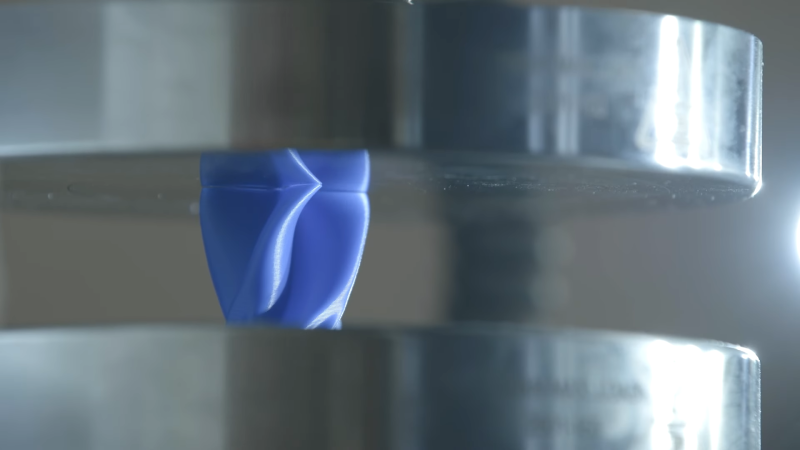

Inside the College of Engineering, a robotic system has been working to optimize a shape to better absorb energy. The system first 3D prints a shape and stores a record of its shape and size. A small press then crushes the shape while the system measures how much energy it took to compress. The crushed object is discarded, and the robot generates a new design and starts again.
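The "energy it took to compress" is the work done by the press, which is the area under the force–displacement curve recorded during the crush. The sketch below shows one common way to estimate that area from sampled data using the trapezoidal rule. It is only an illustration: the function name `absorbed_energy` and the units (displacement in mm, force in N) are assumptions, not details taken from the BU system or its paper.

```python
def absorbed_energy(displacement_mm, force_n):
    """Estimate absorbed energy in joules from sampled crush data.

    Hypothetical helper: assumes displacement in millimetres and force in
    newtons, sampled at the same instants. Uses the trapezoidal rule to
    approximate the integral of force over displacement.
    """
    if len(displacement_mm) != len(force_n):
        raise ValueError("displacement and force must have the same length")

    energy_n_mm = 0.0  # N * mm is equivalent to millijoules
    for i in range(1, len(displacement_mm)):
        dx = displacement_mm[i] - displacement_mm[i - 1]
        # Area of one trapezoid between consecutive samples
        energy_n_mm += 0.5 * (force_n[i] + force_n[i - 1]) * dx

    return energy_n_mm / 1000.0  # convert mJ to J


# Example: a made-up curve that rises, plateaus, then drops off
print(absorbed_energy([0, 1, 2, 3], [0, 100, 100, 0]))
```

For the made-up curve above, the trapezoids contribute 50, 100 and 50 N·mm, for a total of 200 N·mm, or 0.2 J.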

The experiment has now been running continuously for three years. The MAMA BEAR robot has tested over 25,000 3D prints, which now fill dozens of boxes. It’s not frivolous, either. According to engineer Keith Brown, the former record for an energy-absorbing structure was 71% efficiency. According to his research paper, the robot developed a structure with 75% efficiency in January 2023.

Who needs humans when the robots are doing the science on their own? Video after the break.

[Thanks to Frans for the tip!]

The machines will take over soon, if they haven’t already

I’ll be glad someone… something is finally in charge.

It appears to be the same video twice.

Maybe you meant to include this video as well:

https://www.youtube.com/watch?v=IMSg8Fq0vws

I wonder how much the result depends on the material properties. For example, the effects of the layer-line weaknesses in 3D-printed parts won’t translate to other manufacturing methods.

I have to wonder how much design of experiments (DOE) went into this study. I remember going to a conference on additive manufacturing for aerospace, where I heard a really interesting talk about the challenges of analyzing printed parts for strength and margins of safety. My biggest takeaway was that the large number of process parameters (layer height, orientation, infill geometry, etc.) makes it very difficult to compare parts even when they have the same geometry and material, which is not good if you need to reliably predict failures. Given that, I have a hard time seeing these results scale to other applications. Then again, a really well-designed experiment may be able to support some generalized inferences for improving efficiency (especially with 25,000 samples). It could be that the samples are chosen to identify the gradients that improve efficiency in the high-dimensional parameter space. Alternatively, I could see this being immensely useful as ground truth for validating simulation models, which, once validated, could be used to brute-force the parameter space much more quickly to find the optimal shape.

I’ll keep this article handy when my boss comes to me asking why the first prototype PCB of a project has minor issues and isn’t perfect.

Would you prefer a machine that iterates over 9 billion prototypes? Yeah, I thought not. I’m not filing a CAPA report for doing engineering.

“Who needs humans when the robots are doing the science on their own?”

The robots do!

If you read the paper, the authors specifically note this was a *joint* experiment in which humans monitored the SDL (self-driving lab), and in fact they note that some of the largest jumps came from human intervention.

“Inside the College of Engineering, a robotic system has been working to optimize a shape to better absorb energy.”

Miles and his “earthquake brick” in *Electric Dreams*.

I so wanted that arm to drop the crushed piece into a filament reclaiming system.

I also really wanted multiple printers, since that’s the longest step in the process.

Why do I want everything to be over-optimized?

It looks like it does: see https://www.youtube.com/watch?v=IMSg8Fq0vws at 40 seconds.

No, filament reclaiming would be bad: then you wouldn’t have a repeatable process.

The reclaimed plastic doesn’t have to be reused for the experiments; it could be used in something not as dependent on knowing the original material properties.

It doesn’t necessarily need to recycle the filament for itself, though. It could be reused for other on- or off-campus projects. At the very least, that would mean a couple fewer reels produced from scratch.

This, along with ML applied to protein folding, reminds me of Stanislaw Lem’s prediction from 1968 that we will eventually develop autonomous knowledge-gathering and knowledge-generating machines.