![The future of healthy indoor plants, courtesy of AI. (Credit: [Liam])](https://hackaday.com/wp-content/uploads/2025/04/plantmom_test_setup_with_plant.jpg?w=400)

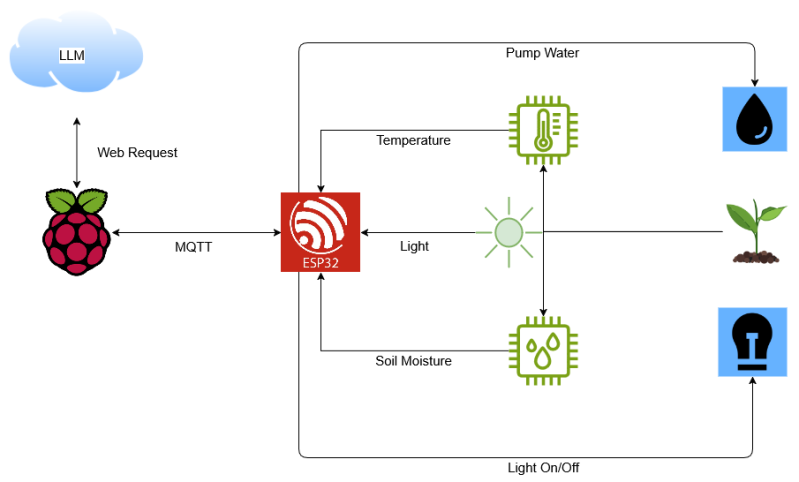

Since LLMs so far don’t come with physical appendages by default, some hardware had to be plugged together to measure parameters like light, temperature and soil moisture. Add to this a grow light and a water pump and all that remained was to tell the LMM using an extensive prompt, containing Python code, what it should do (keep the plant alive), and what Python methods are available. All that was left now was to let Google’s Gemma 3 handle it.

To say that this resulted in a dramatic failure along with what reads like an emotional breakdown on the part of the LLM would be an understatement. The LLM insisted on turning the grow light on when it should be off and had the most erratic watering responses imaginable based on absolutely incorrect interpretations of the ADC data, flipping dry and wet. After this episode the poor chili plant’s soil was absolutely saturated and is still trying to dry out, while the ongoing LLM experiment, with an empty water tank, has the grow light blasting more often than a weed farm.

So far it seems that the humble state machine’s job is still safe from being taken over by ‘AI’, and not even brown thumb folk can kill plants this efficiently.
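For comparison, here is roughly what that humble state machine could look like for the watering side. This is only a minimal sketch and not the code from the project: the calibration readings, the thresholds and all names (`WateringController`, `moisture_fraction`) are made up for illustration, and it assumes a sensor whose ADC value drops as the soil gets wetter. Normalising the raw reading against two calibration points is what keeps dry and wet from being flipped, and the two separate thresholds stop the pump from chattering around a single set point.

```python
from dataclasses import dataclass
from enum import Enum


class PumpState(Enum):
    IDLE = "idle"
    WATERING = "watering"


def moisture_fraction(adc: int, adc_dry: int, adc_wet: int) -> float:
    """Map a raw ADC reading to 0.0 (dry) .. 1.0 (wet), clamped.

    Works for either sensor polarity, because the direction is taken
    from the two calibration points rather than assumed.
    """
    frac = (adc - adc_dry) / (adc_wet - adc_dry)
    return max(0.0, min(1.0, frac))


@dataclass
class WateringController:
    # Hypothetical calibration values: measure your own sensor in dry
    # air and in a glass of water and put the readings here.
    adc_dry: int = 26000
    adc_wet: int = 12000
    # Hypothetical thresholds: start watering below 30 % moisture,
    # stop once above 60 %.
    start_below: float = 0.30
    stop_above: float = 0.60
    state: PumpState = PumpState.IDLE

    def step(self, adc: int) -> PumpState:
        """Advance the state machine by one sensor reading."""
        moisture = moisture_fraction(adc, self.adc_dry, self.adc_wet)
        if self.state is PumpState.IDLE and moisture < self.start_below:
            self.state = PumpState.WATERING
        elif self.state is PumpState.WATERING and moisture > self.stop_above:
            self.state = PumpState.IDLE
        return self.state


if __name__ == "__main__":
    controller = WateringController()
    for reading in (25000, 20000, 15000, 20000):
        print(reading, controller.step(reading).value)
```

Calling `step()` once per sensor reading returns the state the pump should be in; actually switching the pump, and a timeout in case the tank runs dry, are left out here.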

It was a practice run. Next, humans.

Be it human or LLM, if both are badly trained, the result will inevitably be bad.

Sorry. Humour too subtle. The AI killed the plants. Next it will kill the humans.

That one went straight over my head. Not sure how I missed it. Anyhow, my comment still sort of works.

LMM: Large Moisture Model?

Making holes in plastic. No, really don’t use a knife – a knife is a bad idea.

What works for me is either using a hot pointy bit (hot metal skewer or the soldering iron) or a wood drill bit (I think people call these brad point bits) with not much pressure otherwise the plastic might crack.

Use a wood scrap behind the plastic while drilling – even better: one before, and clamp the three layers wood-plastic-wood together.

Well, I don’t know why we are talking about making holes in plant pots… but if you go the route of turning a wood drill bit by hand, it works really well (better than with a hand drill), but make sure you wear a thick glove.

Keep this experiment away from Ars Technica. I can hear the “I told you so” all the way over here.

I told you so!

I told you so!

Multiple inverted sensors sound like user error.

Meanwhile some company is using AI to make health care decisions for humans.

From a carbon balance and water consumption perspective this is metaphorical on a really sad level.

Really a study in using the wrong tool for the job. Like seeing if O’Hare Intl Airport makes a good apartment or if one of those large hauling mining dump trucks that are 50 ft tall makes a good commuter car. An LLM is for predicting language, not reasoning or following procedure. It’s best at averaging out language and adding in random speech that pertains to the prompt.

Running a super tiny LLM on that RPI would have been better with its more limited vocab. Even just an old school expert system would be better. When doing simplistic automation, having simple control logic is better than adding needless complexity.

Ha! I was working on something similar over a year ago but I found the LLM’s outputs were kind of terrible and would’ve most definitely killed all of my plants, even after tweaking. Many of the same problems as here.

I did try making it output JSON and having that parsed automatically and work together with Home Assistant, but the crazy outputs remained.
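One way to at least limit the damage from those outputs is to validate and clamp whatever JSON comes back before anything acts on it. A rough sketch of that idea follows; the field names (`action`, `seconds`), the list of allowed actions and the 10 second pump limit are all assumptions for illustration, not the format from either setup, and nothing here talks to Home Assistant. It does not make the model's decisions any better, it only bounds how bad they can get.

```python
import json
import math

# Hypothetical command vocabulary and safety limit.
ALLOWED_ACTIONS = {"none", "water", "light_on", "light_off"}
MAX_PUMP_SECONDS = 10


def parse_command(raw: str) -> dict:
    """Turn raw model output into a bounded command, or a safe no-op."""
    safe = {"action": "none", "seconds": 0}
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return safe  # not JSON at all
    if not isinstance(data, dict):
        return safe
    action = data.get("action")
    if not isinstance(action, str) or action not in ALLOWED_ACTIONS:
        return safe  # unknown or malformed action
    seconds = data.get("seconds", 0)
    # bool is a subclass of int in Python, so reject it explicitly.
    if isinstance(seconds, bool) or not isinstance(seconds, (int, float)):
        return safe
    if not math.isfinite(seconds):
        return safe  # json.loads accepts NaN and Infinity
    # Clamp the duration so a wild value cannot flood the pot.
    seconds = max(0, min(MAX_PUMP_SECONDS, seconds))
    return {"action": action, "seconds": seconds}


if __name__ == "__main__":
    print(parse_command('{"action": "water", "seconds": 600}'))
    print(parse_command("I feel terrible about the plant."))
```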

I understand we do things because we can, and maybe the experience goes on to inspire more complex things, but I don’t get why we need A.I. Mankind went to the moon and back with just a few kilobytes of memory! I guess if something goes wrong the buck stops at the A.I.?

Brown Thumb is the PEBKAC(1) of the plant world.

I’m no plant whisperer, but everyone I know that “can’t keep a plant alive” is doing it wrong. And the things they are doing wrong are often so obvious that we don’t even think to ask about them.

“This dead plant has a tag hanging off a branch that says ‘requires 4 hours of direct sunlight per day’ and you have it in your bathroom that has no windows…”

It’s kind of like when someone tells you that their computer won’t start.

You shouldn’t need to ask if it is plugged in to power, and they would get insulted if you did, but every once in a while that will be the problem…

(1) Problem exists between keyboard and chair.