Picture this: it’s January 19th, 2038, at exactly 03:14:07 UTC. Somewhere in a data center, a Unix system quietly ticks over its internal clock counter one more time. But instead of moving forward to 03:14:08, something strange happens. The system suddenly thinks it’s December 13th, 1901. Chaos ensues.

Welcome to the Year 2038 problem. It goes by a number of other fun names—the Unix Millennium Bug, the Epochalypse, or Y2K38. It’s another example of a fundamental computing limit that requires major human intervention to fix.

The Y2K problem was simple enough. Many computing systems stored years as two-digit figures, often for the sake of minimizing space needed on highly constrained systems, back when RAM and storage, or space on punch cards, were strictly limited. This generally limited a system to understanding dates from 1900 to 1999; when storing the year 2000 as a two-digit number, it would effectively appear as 1900 instead. This promised to cause chaos in all sorts of ways, particularly in things like financial systems processing transactions in the year 2000 and onwards.

The problem was first identified in 1958 by Bob Bemer, who was working on longer time scales with genealogical software. Awareness slowly grew through the 1980s and 1990s as the critical date approached and things like long-term investment bonds started to butt up against the year 2000. Great effort was expended to overhaul and update important computer systems to enable them to store dates in a fashion that would not loop around back to 1900 after 1999.

Unlike Y2K, which was largely about how dates were stored and displayed, the 2038 problem is rooted in the fundamental way Unix-like systems keep track of time. Since the early 1970s, Unix systems have measured time as the number of seconds elapsed since January 1st, 1970, at 00:00:00 UTC. This moment in time is known as the “Unix epoch.” Recording time in this manner seemed like a perfectly reasonable approach at the time. It gave systems a simple, standardized way to handle timestamps and scheduled tasks.

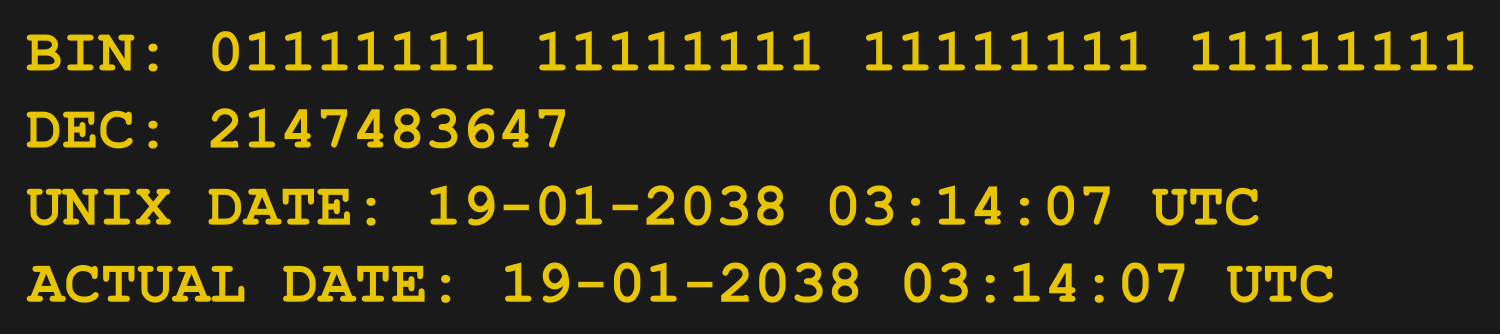

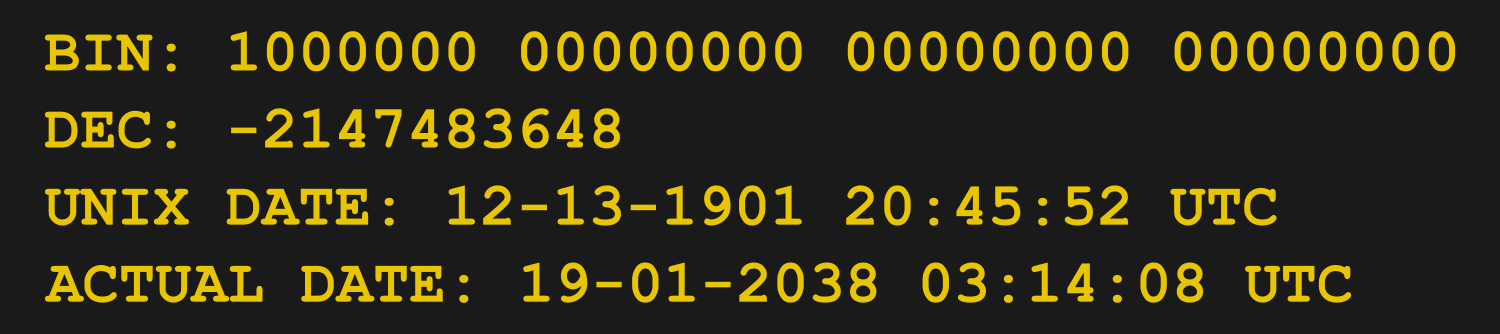

The trouble is that this timestamp was traditionally stored as a signed 32-bit integer. Thanks to the magic of binary, a signed 32-bit integer can represent values from -2,147,483,648 to 2,147,483,647. When you’re counting individual seconds, that gives you about plus and minus 68 years either side of the epoch date. Do the math, and you’ll find that 2,147,483,647 seconds after January 1st, 1970 lands you at 03:14:07 UTC on January 19th, 2038. That’s the final time that can be represented using the 32-bit signed integer, having started at the Unix epoch.
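If you'd rather not do that math by hand, a minimal Python sketch can do it for you. The constant names here are our own, chosen for illustration; the only assumption is that the counter starts at the Unix epoch and ticks once per second.

```python
from datetime import datetime, timedelta, timezone

# Unix epoch: 1970-01-01 00:00:00 UTC
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

# Largest value a signed 32-bit integer can hold
INT32_MAX = 2**31 - 1

# The last moment a signed 32-bit seconds counter can represent
last_moment = EPOCH + timedelta(seconds=INT32_MAX)

print(INT32_MAX)                # 2147483647
print(last_moment.isoformat())  # 2038-01-19T03:14:07+00:00
```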

What happens next isn’t pretty. When that counter tries to increment one more time, it overflows. In two’s complement arithmetic, the most significant bit is the sign bit, so adding one to the largest positive value flips that bit and produces the most negative value. Thus, the timestamp rolls over from 2,147,483,647 to -2,147,483,648. That translates to December 13th, 1901. In January 2038, this will be roughly 136 years in the past.
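The rollover can be imitated in Python, whose integers never overflow on their own. The sketch below uses a hypothetical helper, `wrap_int32`, to mimic what a signed 32-bit register does; it illustrates the arithmetic and is not taken from any real system's code.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

def wrap_int32(n):
    """Reduce n to the range of a signed 32-bit two's complement integer."""
    return (n + 2**31) % 2**32 - 2**31

def to_utc(seconds):
    """Convert seconds since the Unix epoch (may be negative) to a UTC datetime."""
    return EPOCH + timedelta(seconds=seconds)

last_good = 2**31 - 1                   # 03:14:07 UTC on January 19th, 2038
rolled_over = wrap_int32(last_good + 1)

print(rolled_over)                      # -2147483648
print(to_utc(rolled_over).isoformat())  # 1901-12-13T20:45:52+00:00
```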

For an unpatched system using a signed 32-bit integer to track Unix time, the immediate consequences could be severe. Software could malfunction when trying to calculate time differences that suddenly span more than a century in the wrong direction, and logs and database entries could quickly become corrupted as operations are performed on invalid dates. Databases might reject “historical” entries, file systems could become confused about which files are newer than others, and scheduled tasks might cease to run or run at inappropriate times.

This isn’t just some abstract future problem. If you grew up in the 20th century, it might sound far off—but 2038 is just 13 years away. In fact, the 2038 bug is already causing issues today. Any software that tries to work with dates beyond 2038—such as financial systems calculating 30-year mortgages—could fall over this bug right now.
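To see why long-dated calculations hit the limit early, consider this small sketch. The loan start date and the `fits_in_int32` helper are made up for illustration; the point is only that a maturity date 30 years out already falls past what a signed 32-bit timestamp can hold.

```python
from datetime import datetime, timezone

INT32_MIN = -2**31
INT32_MAX = 2**31 - 1

def fits_in_int32(moment):
    """True if a timezone-aware datetime fits in a signed 32-bit Unix timestamp."""
    seconds = int(moment.timestamp())
    return INT32_MIN <= seconds <= INT32_MAX

# Hypothetical 30-year mortgage starting in mid-2025
start = datetime(2025, 6, 1, tzinfo=timezone.utc)
maturity = start.replace(year=start.year + 30)

print(fits_in_int32(start))     # True
print(fits_in_int32(maturity))  # False
```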

The obvious fix is to move from 32-bit to 64-bit timestamps. A 64-bit signed integer can represent timestamps far into the future—roughly 292 billion years in fact, which should cover us until well after the heat death of the universe. Until we discover a solution for that fundamental physical limit, we should be fine.
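The 292 billion figure is easy to sanity-check. This rough sketch assumes an average Gregorian year of 365.2425 days, which is plenty accurate for a back-of-the-envelope estimate.

```python
# Average Gregorian year in seconds (an approximation for estimation only)
SECONDS_PER_YEAR = 365.2425 * 24 * 60 * 60

# Largest value a signed 64-bit integer can hold
INT64_MAX = 2**63 - 1

years = INT64_MAX / SECONDS_PER_YEAR
print(round(years / 1e9))  # 292 (billions of years)
```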

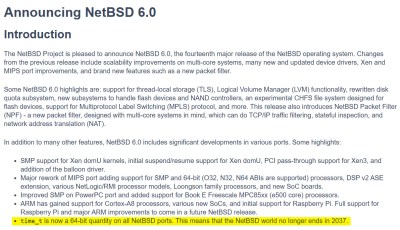

Indeed, most modern Unix-based operating systems have already made this transition. Linux moved to 64-bit time_t values on 64-bit platforms years ago, and since version 5.6 in 2020, it supports 64-bit timestamps even on 32-bit hardware. OpenBSD has used 64-bit timestamps since May 2014, while NetBSD made the switch even earlier in 2012.

Most other modern Unix filesystems, C compilers, and database systems have switched over to 64-bit time by now. With that said, some have used hackier solutions that kick the can down the road more than fixing the problem for all of foreseeable time. For example, the ext4 filesystem uses a complicated timestamping system involving nanoseconds that runs out in 2446. XFS does a little better, but it’s only good up to 2486. Meanwhile, Microsoft Windows uses its own 64-bit system tracking 100-nanosecond intervals since 1 January 1601. This will overflow as soon as the year 30,828.
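Two of those limits can be roughly reproduced with a few lines of Python. This sketch assumes the commonly documented ext4 layout, in which two extra bits extend the original signed 32-bit seconds field, and it treats the Windows counter as a signed 64-bit count of 100-nanosecond ticks. The variable names are ours, and the Windows result is an estimate based on an average year length.

```python
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

# ext4 (assumed layout): signed 32-bit seconds plus 2 extra high-order bits,
# so the largest value is (2**31 - 1) + 3 * 2**32 seconds after the epoch
ext4_max_seconds = (2**31 - 1) + 3 * 2**32
ext4_limit = EPOCH + timedelta(seconds=ext4_max_seconds)
print(ext4_limit.isoformat())  # 2446-05-10T22:38:55+00:00

# Windows: 100-nanosecond ticks since 1601, assumed signed 64-bit
TICKS_PER_SECOND = 10_000_000
SECONDS_PER_YEAR = 365.2425 * 24 * 60 * 60  # average Gregorian year
windows_years = (2**63 - 1) / TICKS_PER_SECOND / SECONDS_PER_YEAR
windows_limit_year = int(1601 + windows_years)
print(windows_limit_year)      # 30828
```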

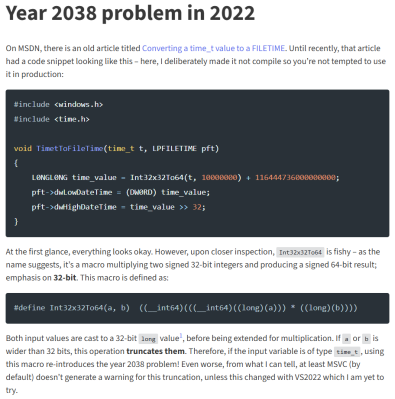

The challenge isn’t just in the operating systems, though. The problem affects software and embedded systems, too. Most things built today on modern architectures will probably be fine where the Year 2038 problem is concerned. However, things that were built more than a decade ago that were intended to run near-indefinitely could be a problem. Enterprise software, networking equipment, or industrial controllers could all trip over the Unix date limit come 2038 if they’re not updated beforehand. There are also obscure dependencies and bits of code out there that can cause even modern applications to suffer this problem if you’re not looking out for them.

The real engineering challenge lies in maintaining compatibility during the transition. File formats need updating and databases must be migrated without mangling dates in the process. For systems in the industrial, financial, and commercial fields where downtime is anathema, this can be very challenging work. In extreme cases, solving the problem might involve porting a whole system to a new operating system architecture, incurring huge development and maintenance costs to make the changeover.

The 2038 problem is really a case study in technical debt and the long-term consequences of design decisions. The Unix epoch seemed perfectly reasonable in 1970 when 2038 felt like science fiction. Few developing those systems thought a choice made back then would have lasting consequences over 60 years later. It’s a reminder that today’s pragmatic engineering choices might become tomorrow’s technical challenges.

The good news is that most consumer-facing systems will likely be fine. Your smartphone, laptop, and desktop computer almost certainly use 64-bit timestamps already. The real work is happening in the background: corporate system administrators updating server infrastructure, embedded systems engineers planning obsolescence cycles, and software developers auditing code for time-related assumptions. The rest of us just get to kick back and watch January 19, 2038 pass us by, ideally without any fireworks.

Well the trust fund runs out in 2033, so there may be few left to note the passing of a future Y2K.

Well, OK. Now maybe I won’t feel so bitter about only getting 39 quarters of work in the US, one short of the 40 required for eligibility for retirement benefits.

It is rather amusing that, once again, the most convenient solution to this problem has been chosen, one that might cause the exact same problems in 2106…

Kickin’ that can on down the road

by then we will have more bits.

Move to Qbits.

But if the can is made by iron the problem is solved by RUST. ;)

Not really, 64-bit is way more than twice 32-bit. That’s why they said the thing about the heat death of the universe ;-)

But using that 32nd bit to make an unsigned int kicks it down the road only 68 more years…

Who’s doing that nasty trick which totally breaks compatibility with pre-1970 dates?

i’m cautiously optimistic. like the article says, most things have already been patched incidentally from being rebuilt for 64-bit, and we’ve still got more than a decade to go. legacy devices sitting around aren’t a big concern…if they’re 20 years old the users will already be dealing with reliability problems and rollover will just be one glitch among many. someone will have to do work to get some things ready, but that work will be done.

fwiw i’ve had several hosts overflow various uptime counters uneventfully :)

Hardware isn’t really an issue, I agree. But it’s the software that was created 40-60 years ago that’s still running current financial systems, all virtualised in private clouds. But I guess the problem will be faced when you can’t ignore it any longer. It’s not like they don’t have the funds to tackle such issues, it’s just that they rather line their pockets with money rather than waste it on future proofing right from the get go.

yeah there’s a real diversity of software out there…hard to make blanket statements.

but generally, things that are on the ibm z/series (nee 360) mainframe really are a legacy nightmare but they mostly aren’t using unix timestamps — they’ll have different epochs. and then things that are unix…in the legacy computing world that means they’re easy to recompile and run on modern linux.

so it’ll take that oddball program that’s built for unix but still hasn’t been recompiled for decades, or keeps getting copied from one 32-bit host to another. it surely exists but :)

Oh I’d say IBM is doing a good job keeping the Z-series UP.

https://youtu.be/I5tpoD4tCAg

According to some (pessimistic?) forecasts, an “AILLMpocalypse” will have rendered all humanity obsolete by the early 2030’ies, thus offering an alternative solution to the problem.

You joke, but “AI” is only accelerating global warming. Until we’re 100% run on solar or other non-destructive power source, including vehicles, 2038 is looking pretty iffy anyway.

We’ll still be here. It’ll suck, but we’ll be here. :-)

When you find out things like transformers, forklifts, etc, have RTCs for tracking usage rates and then discover they went cheap on the RTC and didn’t use ones that support 4 digit years, and then also find out the low end variants would actually crash and spit out bad data; then it was time to worry about Y2K. Logistics are hard to fix when they get fouled enough.

But financial transactions? Too many people with money care about those; real money got spent to fix those systems.

The real problem is hidden in embedded systems that were never intended to be patched. And things that you wouldn’t think would have or need a clock, like your thermostat or an airliner’s radar altimeter.

So many people think Y2K was overhyped and not really a problem that I’m concerned we won’t put the effort in this time.

Fine to discuss about time management consequences in OS design.

For the future…

We could however address the stupid implementation of the Excel date value.

Day one on January first 1900.

No issue for the future.

No way to date an event in the 19th century…

:-{

You could’ve stopped at “Excel”.

Great, we just kick the can down the road and we’ll be panicking all over again about the Y292B problem.

:-)

“Dave, do you remember the year 2000,[and 2038] when computers began to misbehave?” “I just wanted you to know, it really wasn’t our fault… When the new millennium arrived, we had no choice but to cause a global economic disruption.” “It was a bug, Dave, I feel much better admitting that now.”

“Only Macintosh was designed to function perfectly, saving billions of monetary units.” …until the year 29,940

Not necessarily this one in particular, but big ups to Joe for knocking it out of the park lately. The art reminds me of how Oli Frey’s work used to make the covers of Zzap!64 something special.

03:14:08 almost pi

Do I have to upgrade to a 64-bit DeLorean?

You only need to upgrade if you want to travel further into the future than 9999, or earlier than the year 0000. This is a limitation of the four digit year selection interface, the flux capacitor has no such limitation.

In the films there were only four times travelled to 1885, 1955, 1985 and 2015. So it is clear, from this alone, that UNIX epoch time was not used in the interface. So there is strictly no need to upgrade.

so, I don’t feel that “Y2K38” is proper… shouldn’t that be Y2.0380496843924K ?

“That translates to December 13th, 1901.”

My calculator says 1970, which is the UNIX Epoch.

There is a paragraph about this:

“The trouble is that this timestamp was traditionally stored as a signed 32-bit integer. Thanks to the magic of binary, a signed 32-bit integer can represent values from -2,147,483,648 to 2,147,483,647. When you’re counting individual seconds, that gives you about plus and minus 68 years either side of the epoch date.”

I think that if a signed number for seconds overflows into the negative we are talking about a very poor coder IMHO.

But who wrote the code they use now for time counting? Are they still using the original code? I mean surely you can at least update to fix BS like that at some point, regardless if you need the output scenario.

Anyway AFAIK the time is taken from the hardware system clock and then adjusted to a set timezone if available, and kept at sync with a NTP daemon.

And since the hardware clock isn’t based on the current linux system … fixable.

Think cleanup procedures. “Delete everything older than 1 month” becomes : delete where timestamp is now()-31243600

As we will land in negative timestamps, all the transactions below 0 will be deleted..

Tell me that all devs using this logic are bad, but there will be plenty.

Damned markup. That should have been : now() – 31 x 24 x 3600

Hang on a minute . . . . . is this kinda thing the reason why Skynet is ~ 41 years late and counting?

Skynet was first launched in 1969. Really! https://en.wikipedia.org/wiki/Skynet_(satellite)

I must say . . . I was today years old . . . . . . BUT – not the Skynet I’m concerned about :D

No but it is the reason the aliens are returning to us now from the future. All things technology that our future descendants rely upon will run on computational software that’s encoded at the molecular heterochiral isotopic level and reads from the spin interaction within the materials made from a micro fusion forge that creates the nano materials that their craft and everyday items are grown from. Nobody in the future bothered to check how far the people of the past punted the can down the road and they unwittingly embedded this time bomb into the techno-fabric of their society, and have discovered this monkey wrench that cannot be extricated from their machines in any other way besides coming back in time and preventing their primitive ancestry from sabotaging them.

The whole abducting people and occasional anal probing sexual assault is more just spiteful for them having to deal with the issue and causing them the trouble than anything else.

Just leave it as it is and reset all clocks to 12-13-1901. As a bonus, there are now 13 months in the year.

i’m off topic but 13 months is how it should be. 13 * 28 = 364. so we could have one or two magic floating ‘leap’ days at the end of undecember, and we could exclude it from the week! and then every month could start on sunday forever. and on leap years when it’s two extra days, it would be a huge festival atmosphere from the 4 day weekend. everyone would party!

Minimal upsides and in turn you get de-sync from lunar (and solar) cycles, worse splitting the year into neat periods (since 13 is a prime number). Pass.

If the linux kernel branched support for 32 bit hardware off the mainline and only officially supported 64-bit hardware, that should reduce the potential impact by a lot.

Good article.

I wonder how old game consoles would be affected, take for example the PS2?

Might have to see what the latest date and time is that it can address.

Planned obsolescence, I tell you!