When the Raspberry Pi was introduced, the world was given a very cheap, usable Linux computer. Cheap is good, and it enables one kind of project that was previously fairly expensive. This, of course, is cluster computing, and now we can imagine an Aronofsky-esque Beowulf cluster in our apartment.

This Hackaday Prize entry is for a 100-board cluster of Raspberry Pis running Hadoop. Has something like this been done before? Most certainly. The trick is getting it right, being able to physically scale the cluster, and putting the right software on it.

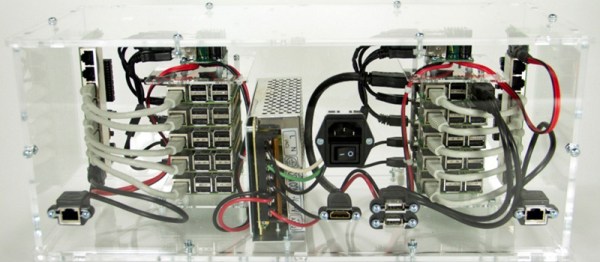

The Raspberry Pi doesn’t have connectors in all the right places. Ethernet and USB are on one side, power input is on another, and god help you if you need a direct serial connection to a Pi in the middle of a stack. This is the physical problem of putting a cluster of Pis together. If you’re exceptionally clever and are using Pi Zeros, you’ll come up with something like this, but for normal Pis, you’ll need an enclosure, a beefy, efficient power supply, and a mess of network switches.

For the software, the team behind this box of Raspberries is turning to Hadoop. Yahoo recently built a Hadoop cluster with 32,000 nodes used for deep learning and other very computationally intensive tasks. This much smaller cluster won’t be used for very demanding work. Instead, it will be used for education and for training those ever-important STEAM students. It’s big data in a small package, and a great project for the Hackaday Prize.
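
For a sense of what students might run on a box like this, here is a minimal sketch of word count, the usual first exercise for the MapReduce model that Hadoop implements. This is plain Python on a single machine, not Hadoop's API and not the team's code; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are made up for illustration.

```python
from collections import defaultdict


def map_phase(line):
    """Map step: emit a (word, 1) pair for every word in one line of input."""
    return [(word.lower(), 1) for word in line.split()]


def shuffle(pairs):
    """Shuffle step: group all emitted values by their key."""
    grouped = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key, values):
    """Reduce step: collapse the values for one key into a single count."""
    return key, sum(values)


def word_count(lines):
    """Run map, shuffle, and reduce in sequence over a list of lines."""
    pairs = [pair for line in lines for pair in map_phase(line)]
    grouped = shuffle(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data", "small data"]))
```

On a real cluster the map and reduce steps would be spread across the nodes and the shuffle would move data over the network; here all three run in one process so the flow is easy to follow.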