Moore’s Law states the number of transistors on an integrated circuit will double about every two years. This law, coined by Intel and Fairchild co-founder [Gordon Moore], has been a truism since its introduction in 1965. From the Intel 4004 of 1971 to the Pentiums of 1993 and the Skylake processors introduced last month, the law has mostly held true.

The law, however, promises exponential growth in linear time, and that promise is ultimately unsustainable. This is not an article that considers the future roadblocks that will end [Moore]’s observation, but an article that says the expectations of Moore’s Law have already ended. It ended quietly, sometime around 2005, and we will never again see transistor density, processor speed, graphics card capability, and memory density double every two years.

In 2011, the Committee on Sustaining Growth in Computing Performance of the National Research Council released the report, The Future of Computing Performance: Game Over or Next Level? This report provides an overview of computing performance from the first microprocessors to the latest processors of the day.

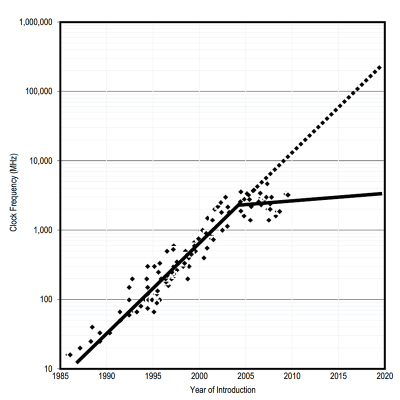

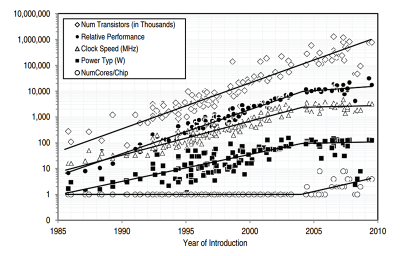

Although Moore’s Law applies only to transistors on a chip, this measure aligns very well with other measures of integrated circuit performance. Introduced in 1971, Intel’s 4004 had a maximum clock frequency of about 700 kilohertz. In two years, according to bastardizations of Moore’s Law, this speed would double, and in two more years double again. By around 1975 or 1976, so the math goes, processors capable of running at four or five megahertz should appear, and this matches the historical record: the earliest Motorola 6800 processors, introduced in 1974, ran at 1MHz. In 1976, RCA introduced the 1802, capable of 5MHz. In 1979, the Motorola 68000 was introduced, with speed grades of 4, 6, and 8MHz. Shortly after Intel released the 286 in 1982, its speed was quickly scaled to 12.5 MHz. Despite completely different architectures, instruction sets, and bus widths, a Moore’s Law for clock speed has held for a very long time. The same law also holds for performance and even TDP per device.
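The doubling arithmetic above is easy to check. Below is a minimal Python sketch, assuming a 0.7 MHz start in 1971 and a strict two-year doubling period (the naive reading described above, not anything Moore claimed about clock speeds). The function name `projected_mhz` is purely illustrative.

```python
# Sketch: project a clock frequency forward assuming a fixed doubling period.
# The 0.7 MHz / 1971 starting point follows the 4004 figures in the text;
# the two-year doubling period is an assumption (the naive reading of
# Moore's Law applied to clock speed), not a physical law.

def projected_mhz(year, start_year=1971, start_mhz=0.7, doubling_years=2.0):
    """Return the naively projected clock frequency in MHz for `year`."""
    elapsed = year - start_year
    return start_mhz * 2 ** (elapsed / doubling_years)

if __name__ == "__main__":
    for year in (1971, 1973, 1975, 1976, 1977, 1979, 1982):
        print(f"{year}: {projected_mhz(year):6.2f} MHz")
```

With these inputs the projection crosses roughly 4 MHz in 1976; comparing the printed values with the chips listed above shows how loosely real parts tracked the curve.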

Everything went wrong in 2004. At least, this is the thesis of The Future of Computing Performance. Since 2004, the exponential increase in floating point and integer performance, clock frequency, and even power dissipation has leveled off.

One could hope that the results are an anomaly and that computer vendors will soon return to robust annual improvements. However, public roadmaps and private conversations with vendors reveal that single threaded computer-performance gains have entered a new era of modest improvement.

There was never any question Moore’s Law would end. No one, now or when the law was first penned in 1965, would assume exponential growth could last forever. Whether the exponential growth applied to transistors or, in [Kurzweil]’s and other futurists’ interpretation, to general computing power, its end was never in question; exponential growth can not continue indefinitely in linear time.

Continuations of a recent trend

The Future of Computing Performance was written in 2011, and we have another half decade of data to draw from. Has the situation improved in the last five years?

Unfortunately, no. In a survey of Intel Core i7 processors with comparable TDP, the performance from the first i7s to the latest Broadwells shows no change from 2005 through 2015. Whatever happened to Moore’s Law in 2005 is still happening today.

The Future Of Moore’s Law

Even before 2011, when The Future of Computing Performance was published, the high-performance semiconductor companies started gearing up for the end of Moore’s Law. It’s no coincidence that the first multi-core chips made an appearance around the same time TDP, performance, and clock speed took the hard turn to the right seen in the graphs above.

A slowing of Moore’s Law should also be visible in the semiconductor business, and it is. In 2014, Intel released a refresh of the 22nm Haswell architecture because of problems spinning up the 14nm Broadwell architecture. Recently, Intel announced it will not introduce the 10nm Cannonlake in 2016 as expected, and will instead introduce the 14nm Kaby Lake that year. Clearly the number of transistors on a die can no longer be doubled every two years.

While the future of Moore’s Law will see the introduction of exotic substrates such as indium gallium arsenide replacing silicon, this much is clear: Moore’s Law is broken, and it has been for a decade. It’s no longer possible for transistor densities to double every two years, and the products of these increased densities – performance and clock speed – will remain relatively stagnant compared to their exponential rise in the 80s and 90s.

There is, however, a saving grace: When [Gordon Moore] first penned his law in 1965, the number of transistors on an integrated circuit saw a doubling every year. In 1975, [Moore] revised his law to a doubling every two years. Here you have a law where not only the meaning (transistors, performance, or speed) can change, but also the duration. Now, it seems, Moore’s Law has extended to three years. Until new technologies are created and chips are no longer made on silicon, this will hold true.
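Stretching the doubling period costs more than it sounds. This small sketch compares growth over one decade for doubling every one, two, and three years (Moore’s 1965 pace, his 1975 revision, and the three-year pace suggested above). `growth_factor` is a hypothetical helper written for illustration.

```python
# Sketch: multiplicative growth over a fixed span for different doubling
# periods. The 1-, 2- and 3-year periods mirror the 1965 figure, the 1975
# revision, and the three-year pace discussed in the text.

def growth_factor(span_years, doubling_years):
    """Growth multiplier over `span_years` at a constant doubling period."""
    return 2 ** (span_years / doubling_years)

if __name__ == "__main__":
    for period in (1, 2, 3):
        factor = growth_factor(10, period)
        print(f"doubling every {period} yr -> x{factor:.1f} per decade")
```

Under these assumptions a decade at the two-year pace yields a 32x gain, while the three-year pace yields only about 10x.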

Conflicting statistics. Who to believe?

Conflicts from where?

Interesting read. I wonder whether time was factored into that calculation, say by using a time series with non-uniform intervals that increase over time. Now that’d be an interesting calculation and provide some insight!

> “exponential growth can not continue indefinitely in linear time”

Engineers grok that. Now let’s try to explain that to economists and politicians!

Yes, indeed. Also, energy and resource consumption are still growing exponentially.

Not in many parts of the developed world. Economic output and GDP per unit of energy and resources are still increasing exponentially, and can keep doing so for some time with well-established measures and technology. In many prominent cases, primary energy consumption has been flat or decreasing for 7 to 12 years.

It’s a tricky question… If you count per country, maybe. But how many developed countries are offsetting their energy and resource consumption by having products made elsewhere?

this^

The problem is that as long as you don’t look at the big picture, endless growth sounds possible, but once you see the big picture you see it’s all a house of cards, and the people pushing it know it.

They just want to drain as much blood as possible before that happens.

http://physics.ucsd.edu/do-the-math/2012/04/economist-meets-physicist/

like this?

Funny essay.

However, there is one disconnect that must be brought up: physical properties are provably real.

Money and our economy are a thoroughly human invention and pursuit – with no credible natural phenomenon to appeal to. Sure, they may try, but I said ‘credible’.

Massive companies like Amazon ‘never turn a profit’.

To me, this process of economics is FAKE. Literally made up – not real – provably false.

That is a great blog that I have been following for a few years. Highly recommended!

Yeah! Kill that straw man, kill it with fire!

There’s one hidden assumption in their argument about growth: that all products and all wealth are consumed.

But if there is a wealth that is not consumed, even if it embeds energy, it can keep increasing arbitrarily. As long as there is an influx of energy – no matter how slowly – it can always keep growing because it accumulates.

For example, a stone statue. It takes energy to make one, but once you have it you no longer need to spend energy to keep it. If we make our stuff last, we don’t need to keep re-making them continuously.
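The accumulation argument can be made concrete with a toy stock-and-flow model. In this Python sketch the inflow and decay rates are arbitrary illustrative assumptions: a stock that never wears out keeps growing under a constant inflow, while one that loses a fixed fraction each year levels off at inflow/decay. Note that the non-decaying case grows linearly, not exponentially.

```python
# Toy stock-and-flow model of the "durable wealth" argument.
# Each year: stock = stock * (1 - decay) + inflow.
# Inflow and decay values are illustrative assumptions, not data.

def stock_after(years, inflow=1.0, decay=0.0, start=0.0):
    """Return the stock after `years` yearly steps."""
    stock = start
    for _ in range(years):
        stock = stock * (1.0 - decay) + inflow
    return stock

if __name__ == "__main__":
    for years in (10, 100, 1000):
        durable = stock_after(years)                # nothing wears out
        perishable = stock_after(years, decay=0.1)  # 10% lost per year
        print(years, round(durable, 1), round(perishable, 2))
```

The durable stock grows without bound but only in proportion to elapsed time; the perishable one approaches 10 (that is, 1.0 / 0.1) no matter how long the model runs.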

You might enjoy Entropy: A New World View by Jeremy Rifkin and Ted Howard.

Economists call it Stein’s Law. Politicians call it Their Successor’s Problem.

Tru’ ‘dat.

Well, for quite a lot of years Moore’s law was easy, but then it got hard. Wires too thin, power density too high, threshold issues… but it is not impossible to break free. Maybe quantum, graphene, or biological computers will soon offer a new approach and start growing exponentially again.

Too bad we wasted these good years of Moore by letting software development happen according to Wirth’s law.

Given that we’ve been following Wirth’s Law, that means that there’s room to make software more efficient.

Wirth’s law is observably true, if not necessarily. Of course people HAD to program in assembler (if they were lucky, and uphill to work every day both ways, lump of cold poison etc).

Thing is although software’s got slower, it’s also got more versatile, easier to plug things into each other, use things in different ways. Versatile I think is the word I’m looking for.

There’s a tradeoff between speed and flexibility. Or in fact versatility. We’re on the other end.

There’s also the tradeoff between programmer time and computer time, you could never write a web browser in asm. Or a modern FPS game. Or AI. Fortunately computers are cheap and powerful enough to cope with that. Thanks to Moore’s law, Wirth’s law! Wonder if Wirth realised that?

Of course Microsoft are the exception, managing to make a pig’s ear of something everyone else does better. There’s probably a way of factoring money, anti-trust, and shamelessness into that equation.

“Thing is although software’s got slower, it’s also got more versatile, easier to plug things into each other, use things in different ways. Versatile I think is the word I’m looking for.”

A bit of thinking shows that this isn’t really true either. An exponential increase in the amount of code around will result in at least a linear increase in the amount of interfacing required. For example, consider the balkanisation of web languages. The primary reason is that, for any system of sufficient size, interfacing to it becomes increasingly complex (more protocols, more APIs, more legacy considerations). So engineers inevitably decide to create new ‘simpler’ paradigms/languages/protocols to replace the old clunky ones. The new PLPs are attractive to newcomers because they’re simpler, but as their popularity grows, their users find they have to start interfacing them to existing systems and expanding their capabilities, so each new PLP grows in complexity to meet these requirements.

And thus the need for a new PLP is spawned. Unfortunately, this means each new PLP only adds to the problem (there’s now an extra system that must be interfaced to). It’s an issue of cognitive horizons – an increasing problem for the future!
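One way to make the interfacing argument concrete: if, in the worst case, every system may need an adapter to every other one, adding a new system to n existing ones adds n adapters, and the total grows as n(n-1)/2. The “every pair needs an adapter” assumption and the helper name `pairwise_interfaces` are illustrative, not measurements of any real ecosystem.

```python
# Sketch: worst-case count of pairwise interfaces between n systems,
# assuming every pair needs its own adapter (an illustrative assumption).
from itertools import combinations

def pairwise_interfaces(n):
    """Number of unordered pairs among n systems: n*(n-1)/2."""
    return n * (n - 1) // 2

if __name__ == "__main__":
    systems = ["A", "B", "C", "D"]
    pairs = list(combinations(systems, 2))  # enumerate the actual pairs
    print(len(pairs), pairs)
    for n in (2, 4, 8, 16):
        print(n, pairwise_interfaces(n))
```

Doubling the number of systems roughly quadruples the worst-case number of interfaces, which is why each new PLP makes the problem bigger rather than smaller.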

I’m thinking more along the lines of OOP software and RAD, where a lot can be done with just a few lines of code, by joining existing software modules together. Modules that were designed to be general-purpose and to interface to others. Like libraries, but even more general. Allowing for that extra versatility means a bit more code. Also means a good few more levels of abstraction, lots of calls, which are a real bugger for slowing software down.

Or in another way, things like JSON, horrible and eldritch though it be. You can plug together functions now a lot easier. The interfacing you mention, previously had to be written as new code ad-hoc for every new program or application.

Yes sometimes things get out of control and we cut them down and start again. Often we can salvage a lot of the “good wood” that’s left.

The difference between my post and yours, I think, is that I’m talking about deliberate detail added to support versatility, you’re talking about the stuff that creeps up unintentionally. Often the two are tightly bound, and that’s a problem for the poor sod who has to work on it. But in principle, it’s not a bad thing.

That’s actually very good because it forces (or lets? because it fuels the demand) manufacturers to create more powerful hardware. If possible, I try to run older versions of software. And let me tell you, the computers are really, really fast and the drives huge! When I tried Win7 and found that it takes 20 GB on a fresh install, I just said WHAT THE FUCK?! Are you serious?! And immediately replaced it with Win XP. Because that’s *all* I need to manage my files and run programs. The rest of the features, taking 19.5 GB, are completely useless. Unfortunately, consumers are accepting it. It makes me kind of sad when I see somebody complaining about some bloatware and another guy advises him to “buy more RAM! RAM is cheap” etc. Yeah, sure, we all need supercomputers to move files on a hard drive and run internet browsers.

The problem with Windows XP is that newer games don’t support it, bah! My brother-in-law gave my brother a computer running Linux, with instructions, should he ever wish to play games (which is all he wanted it for), to look up the four dozen WINE workarounds for each particular one. Problem fixed with a Win XP disk.

There’s also the problem that XP won’t access more than 3.something GB of RAM.

Maybe WINE needs some package format for all the workarounds to get games working. Apt-get made Linux management a lot easier and more straightforward. Even if you’ve no idea where half of the files end up. Linux seems to need a lot more effort to become a “power user” than Windows does.

Depending on the chipset and the BIOS, it is possible for a 32 bit operating system to have full use of 4 gig RAM. The method called Physical Address Extension (PAE) has been in most Intel compatible CPUs since the Pentium Pro in 1995.

But it took years before most chipsets and BIOS code adopted PAE, and some still did not into the 2nd decade of this century.

PAE is required to use more than 4 gig physical RAM, but (IIRC) not for a 64 bit OS with 4 gig or less.

On x64 Windows, on a computer with PAE *and* over 4 gig RAM, it’s possible to write (or patch) 32 bit software to enable it to access more than 4 gig RAM. How well it can use that extra space is up to the person who wrote the software. Google “PAE patch for 32 bit software”.
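The 4 gig and PAE figures come straight from address-width arithmetic. In this minimal sketch, 32 physical address bits cover 4 GiB, and the 36-bit physical addresses of the original Pentium Pro PAE implementation cover 64 GiB. The “3.something GB” seen on 32-bit XP is a separate effect: part of the space below 4 GiB is reserved for memory-mapped devices, so RAM behind that hole is unreachable unless the chipset remaps it above 4 GiB and the OS can address it there. The helper name is illustrative.

```python
# Sketch: size of a flat physical address space for a given address width.
# 32 bits = plain 32-bit x86; 36 bits = the original PAE physical width.

GIB = 2 ** 30  # bytes in one GiB

def addressable_gib(address_bits):
    """Size of a flat physical address space, in GiB."""
    return 2 ** address_bits / GIB

if __name__ == "__main__":
    for bits in (32, 36):
        print(f"{bits}-bit physical addresses: {addressable_gib(bits):.0f} GiB")
```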

My previous laptop was a circa 2005 Dell, which I upgraded with a x64 Core 2 Duo and despite the “limit” of 2 gig RAM I installed 4 gig. The chipset was non-PAE so it could only access 3.25 gig, and it ran x64 Win 7 fairly decently, especially with the 7200 RPM 500 gig hard drive.

My current laptop is an MPC TransPort T2500 (a rebranded Samsung X65 from 2008), which came with 4 gig, fully accessible, and a 2.5 GHz Core 2 Duo. It originally shipped with x32 XP, with x64 Vista as an option. (So of course the Idaho State Police chose to buy XP, seven years after its release date.) I moved the hard drive over from the Dell, and the MPC runs x64 Win 7 quite nicely, as does this 3.2 GHz Phenom II x2 with 6 gig RAM I’m using right now.

Unless you’re a bleeding edge gamer or working with massive image files, video, or giant databases, just about any 2+ GHz dual core box with at least 4 gigs of RAM made in 2005 or later will run pretty much the same. 4 gigabytes, it’s the new 4 megabytes. (Remember 1991 when the computer magazines all said to get 4 megabytes RAM for Windows 3.1?)

I’ve got a nice home-assembled quad-core Intel somethingorother with 8GB in front of me now! Problem is, the XP I installed on it is 32-bit, and I don’t think they made a 64-bit one. AFAIK you can use PAE in 32-bit mode, but XP doesn’t support it. Obviously an “up”grade is required, but christ, each Windows is worse than the last! I still run XP with ’98-style actual square boxes on the menus and buttons. I say ’98, not too far from THREED.VBX on 3.1. Which I started with! Well, after DOS 6 that I ACTUALLY started with. Way back on muh 386SX.

And yep I’ve hardly been so excited as the day I spent 90-odd quid on my 3rd and 4th megabyte! I could run DOOM! At last! In a small window in chunky-res mode! But it was DOOM, dammit!

Also had SimCity 2000, and Windows 3.1. But not all three at the same time on a 50MB hard drive (weird, typing “MB” then “hard drive”). When there was no room, one of them took a vacation on floppy disks for a while.

Oh I’m so old! I remember sitting my maybe 2-year-old cousin on my knee, I was 18. I put DOOM in IDDQD mode and gave her the fire button. I did the steering, told her to fire, and rockets flew into demons’ faces! She loved it! She’s something like 24 now! Worked as an au-pair abroad.

So anyway, apart from me being old, there’s no way of playing the newer games (Fallout 4! Bioshock Infinite) without an “up” grade. There must be some stuff out there that curbs the worst tendencies of their user interfaces. My mate bought a Windows 8 laptop, hated it! That was Microsoft’s era of “hey, phones are quite popular, let’s put a phone interface on a PC! We’re geniuses!”. Now they’re in full-on “conquer the world” mode, stronger than ever before, and with even worse ideas! I tell ya, if anyone in the future wants an example of the laughable awfulness of early C21 capitalism, I’ll show them Windows 10. If I can find a copy on any physical media.

OTOH in the last couple of weeks several people have guessed my age at 25. I’m 38! Good genes and no sunlight whatsoever are the keys.

One of the things that fascinate me about computers and Moore’s Law is that no matter what the number of transistors, how fast they run, how much memory is available, or what algorithms we develop to enhance their performance, it all comes back to flipping bits. I often wonder what (if any) discovery in information processing will ultimately make that paradigm obsolete.

Computing means processing information. It doesn’t matter much how the information is stored. The bit is the smallest unit of information. Before binary computers there were analog computers using operational amplifiers. Before that there were mechanical computers, or at least calculators (Blaise Pascal). Our brain is not binary and does a wonderful job at processing information. Maybe soon qubits will do it too. No doubt we will have more powerful computers one day working on a different paradigm.

Q-Bits

Eh. Quantum computers are shit at doing many of the things we expect regular computers to do with ease. The same technology may lead to far denser processing…..optical cpu anyone?

Last I heard there was a bit of work done on optical transistors. There are gels that shrink out of the way, or change their refractive qualities, when hit by light. The main problem, I think, is that photons just don’t interact with each other. You need some intermediate particle in some weird quantum state to switch things, with all the cryogenics and other weirdness that comes along with that.

I think an optical CPU would be bit-serial to start with, taking advantage of the speed so you don’t need parallelism, and also eliminating bit skew. Many of the early commercial electronic computers used a lot of bit-serial parts.

Probably won’t be as simple as just transplanting mirrors and lasers in place of traditional ALUs and the like, might work best under a new paradigm for computing. Which will need inventing! I think we’re in about 1930, equivalent-wise, with optical computers. Electronic computers had the Manchester Baby by 1948, and that was a useful recognisable modern-style computer.
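To illustrate the bit-serial idea mentioned above: a bit-serial adder trades width for time, using a single full adder and one bit of carry state, and takes n clocks for an n-bit add. The Python model below is a hypothetical software illustration, not a description of any particular historical or optical machine.

```python
# Software model of a bit-serial adder: one full adder plus a carry
# flip-flop, fed one bit per "clock", least significant bit first.

def bit_serial_add(a, b, width):
    """Add two unsigned integers one bit per clock, LSB first."""
    carry = 0   # the only state a serial adder keeps between clocks
    result = 0
    for clock in range(width):
        x = (a >> clock) & 1
        y = (b >> clock) & 1
        s = x ^ y ^ carry                     # full-adder sum bit
        carry = (x & y) | (carry & (x ^ y))   # full-adder carry out
        result |= s << clock                  # shift the sum bit into place
    return result  # wraps modulo 2**width; the final carry is dropped

if __name__ == "__main__":
    print(bit_serial_add(0b1011, 0b0110, 8))
```

Because every bit passes through the same hardware, a very fast switching element would be reused `width` times per add, which is the appeal for an early optical design.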

So far only flash memory and HBM graphics RAM have gone 3D (multi-level), which leaves room for CPUs and other circuits. And Intel is already doing some work with 3D transistors.

So don’t say it ended in 2005, especially since we went all the way down to 14nm now.

And listing Intel CPU performance conveniently ignores that since 2005 they have added a freaking decent GPU to the thing, which greatly increased both its overall performance and its complexity. And the latest generation focused on cutting power use a great deal, so blindly drawing a graph like that, evaluating only the CPU, is silly.

for your information:

http://www.economist.com/blogs/economist-explains/2015/04/economist-explains-17

http://www.technologyreview.com/featuredstory/400710/the-end-of-moores-law/

http://www.independent.co.uk/life-style/gadgets-and-tech/news/the-end-of-moores-law-why-the-theory-that-computer-processors-will-double-in-power-every-two-years-may-be-becoming-obsolete-10394659.html

Issue with 3D is that you still need to get the heat out and 3D structures are shitty for that.

Memory’s gonna be easier to make 3D since it’s just a repeating pattern of identical cells. CPUs very much the opposite of that.

Also, as AMS mentions, the heat. CMOS produces heat every time you flip a bit. RAM bits don’t flip as often as the gates in a CPU, and Flash bits almost never change, on a bits-per-second basis.
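The “heat every time you flip a bit” point is usually captured by the textbook CMOS dynamic-power estimate P = alpha * C * V^2 * f, where alpha is the activity factor (the fraction of the capacitance switched per clock). In the sketch below every number is a made-up illustrative value, chosen only to show how a low activity factor (memory cells) cuts dynamic power relative to busy logic; static leakage power is ignored.

```python
# Sketch: CMOS dynamic power, P = alpha * C * V^2 * f.
# All parameter values below are hypothetical; leakage is not modelled.

def dynamic_power_watts(activity, capacitance_farads, volts, freq_hz):
    """Dynamic switching power in watts."""
    return activity * capacitance_farads * volts ** 2 * freq_hz

if __name__ == "__main__":
    c, v, f = 1e-9, 1.0, 3e9  # hypothetical: 1 nF total, 1.0 V, 3 GHz
    logic = dynamic_power_watts(0.1, c, v, f)     # busy logic
    memory = dynamic_power_watts(0.001, c, v, f)  # rarely-flipped cells
    print(f"busy logic: {logic:.2f} W, memory array: {memory:.4f} W")
```

Frequency and the square of the supply voltage enter directly, so stacking busy logic in layers multiplies heat in a way that stacking memory cells does not.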

Nothing wrong with wanting some citation, so here’s a random link:

http://www.anandtech.com/show/4313/intel-announces-first-22nm-3d-trigate-transistors-shipping-in-2h-2011

And remember that we are now at a much later point: it’s in use, and any issues are being handled. As AMS points out, you have heat issues, but of course there are ways around that too. Less leakage means less heat, for instance, or you can add copper layers or some such. I’m sure researchers at Intel, IBM, MIT and so forth are on it. (I hear AMD isn’t interested, though.)

And as I said, if you count the CPU part transistors and ignore the GPU now on the same die you are being silly when evaluating the growth of the number of transistors.

But it’s true of course that speed increases of the clock are not very big the last decade, and it’s also true that sooner or later the whole growth will end, but again, we are at the moment still shrinking things and adding layers.

The exact formulation of Moore’s Law has never been nailed down (doubling transistor count every two years is the most commonly used), but one thing Moore’s Law never stated was that clock frequency would double every two years. The fact that things flattened out in 2005 doesn’t contradict Moore’s Law at all.

If you look at the one chart with the number of transistors, it has still been following the trend line right through 2005 and up to the present. Of course that won’t go forever, nor even for much longer.

“but one thing Moore’s Law never stated was that clock frequency would double every two years.”

That plot is even stupider than you think. They’re not plotting “switching frequency of transistors.” They’re plotting “clock frequency of CPUs.” And the idea of the clock frequency of a *general purpose* CPU doubling every two years is crazy. You actually don’t want that, because the performance becomes crap.

General purpose CPUs tapped out at about 5 GHz frequencies – but DARPA produced ICs which switch at ~1000 GHz last year. So why not produce CPUs at those frequencies?

Because it’s stupid. You can’t get enough done in that fraction of time to do anything useful – you’d have to pipeline the utter garbage out of the thing, including wasting a good number of clock cycles moving data around the chip, which is terrible for a general purpose CPU, and terrible for power.

This had nothing to do with technical capabilities of the silicon. It had everything to do with the way we use CPUs, and the fact that we didn’t want to radically transform our entire computing infrastructure. *Other* things that we use transistors for *still had* their switching speeds increasing after 2004.

“If you look at the one chart with the number of transistors, it has still been following the trend line right through 2005 and up to the present. Of course that won’t go forever, nor even for much longer.”

The amazing thing – and really, it’s super-amazing – is that when Moore’s Law finally breaks, it won’t be because the engineering got too hard. It will be because we’re hitting up against the fundamental limits of the Universe.

That’s insane. Humans went from “can’t make an IC at all” to “making the best IC you can fundamentally make” at basically a constant speed.

> “Because it’s stupid. You can’t get enough done in that fraction of time to do anything useful – you’d have to pipeline the utter garbage out of the thing, …”

And that was exactly what Intel’s old “P4” architecture did (Willamette, Northwood, Prescott chips). Crazy fast clock speeds (mostly for marketing purposes), but very little useful work done in each clock cycle. Oh, and those high clock rates consumed oodles of power. Nice room heaters those systems were!

After getting spanked for a while, Intel went back to the older and renamed “Core” architecture, which was much more sensible.
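The trade-off behind the deep-pipeline discussion can be sketched with a simple model: split a fixed amount of logic delay into k stages, and add a fixed latch overhead to every stage. The 2000 ps of logic and 50 ps of overhead below are hypothetical numbers, and the model ignores branch mispredictions and other hazards, which make very deep pipelines look even worse.

```python
# Sketch: pipeline depth vs clock rate with a fixed per-stage latch overhead.
# logic_ps and latch_ps values used below are hypothetical.

def pipeline(logic_ps, stages, latch_ps):
    """Clock rate, useful fraction of each cycle, and single-op latency."""
    period = logic_ps / stages + latch_ps  # clock period in picoseconds
    return {
        "freq_ghz": 1000.0 / period,  # a 1 ps period would be 1000 GHz
        "useful_fraction": (logic_ps / stages) / period,
        "latency_ps": stages * period,  # one operation through every stage
    }

if __name__ == "__main__":
    for k in (5, 20, 40, 100):
        r = pipeline(2000.0, k, 50.0)
        print(f"{k:3d} stages: {r['freq_ghz']:5.2f} GHz, "
              f"{r['useful_fraction']:.0%} useful, "
              f"{r['latency_ps']:.0f} ps latency")
```

Under these assumptions the clock keeps rising with depth, but an ever larger share of each cycle is overhead and the time for a single operation grows, which matches the “crazy fast clock, little useful work per cycle” description above.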

The idea that “nothing has changed since 2005” is a bit silly. I’d much rather have a Skylake chip than an older 2005 chip. Moore was a great guy, but his law certainly doesn’t capture the totality of “goodness” for customers. There is vast room for many more/better chips in the future!

Although the conclusion here is obvious (nobody ever said that it would continue forever), the evidence that he draws from is specious and some of what he says is incorrect.

The law talks about the number of transistors, which has in fact continued to double every two years since 1975, including past 2005 (until maybe 2012), as evidenced by his own graph.

|| It ended quietly, sometime around 2005, and we will never again see the time when transistor density…

|| Now, it seems, Moore’s law has extended to three years.

But this is incorrect. Intel has stated that up to about 2012 they were still doubling every 2 years, and since 2012 they’ve slipped closer to 2.5 years than 2, and that around 2017 it’ll probably slip to every 3 years*. But we’re not there yet.

He then extends the law himself to apply to other aspects than number of transistors:

|| Although Moore’s Law applies only to transistors on a chip, this measure aligns very well with other measures of the performance of integrated circuits.

|| Here you have a law where not only the meaning – transistors, performance, or speed – can change, but also the duration.

Then uses that to prove that since those broke around 2005, the law broke then too. The original observation from Moore had nothing to do with performance, number of cores, or power, so those statements are directly incorrect.

* http://blogs.wsj.com/digits/2015/07/16/intel-rechisels-the-tablet-on-moores-law/

It’s also important to mention that the “law” that is commonly referenced is not directly what he meant. To reference his original paper:

|| The complexity for minimum component costs has increased at a rate of roughly a factor of two per year… there is no reason to believe it will not remain nearly constant for at least 10 years.

Note that he is talking about the “minimum cost per component” – NOT that the number of transistors will double. Have any studies been done since then which plot number of transistors vs manufacturing price (in 1965 dollars)?

-“Moore’s Law states the number of transistors on an integrated circuit will double about every two years.”

No it didn’t.

It stated that the number of transistors on an affordable chip would double, which is a whole different proposition. It means the most cost-effective number of transistors per chip keeps doubling. Everyone’s just inventing their own “Moore’s law” every time to suit their argument. Then they go about cherry-picking some data, like picking a number of different CPUs from different years that fit nicely on a log-linear graph.

It’s always been possible to put an almost arbitrary number of transistors on a chip to keep up with “Moore’s” law – it’s only a matter of how much you want to pay. In actuality, the real Moore’s law stopped decades ago.

Although I agree with what you’re saying about the law, it would appear that it does still hold true today (and didn’t stop decades ago).

http://spectrum.ieee.org/computing/hardware/transistor-production-has-reached-astronomical-scales

It shows that the price per transistor has continued roughly the same decrease ever since 1955.

“Price per transistor” is not the correct metric. The original Moore’s law was concerned about the optimum size of an IC in terms of transistors. It’s not just transistors per dollar, but transistors per chip per dollar.

It makes more sense, because you have to buy/make the entire chip – not just an arbitrary number of transistors. Like, if you want to make a car to drive 10 miles, you can’t just make 1/10,000th of a car.

There’s a certain optimum size for the chip where cost per transistor is minimized. The price per transistor may drop, but that doesn’t mean the optimum chip size will increase. It may even go the other way, considering that with cheaper processes you may lay down more transistors on a silicon wafer, but more will be defective so larger chips can have poor yields.
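The optimum-size argument can be sketched with a simple cost model, loosely in the spirit of Moore’s 1965 cost-per-component reasoning: a fixed per-die cost (packaging, test) favours bigger dies, while defects, modelled here with the Poisson yield Y = exp(-D * A), punish them. Every constant below is a hypothetical illustrative value, and the per-die cost term is an assumption needed to make an optimum appear at all.

```python
# Sketch: die area that minimises cost per transistor.
# Poisson yield Y = exp(-D * A); all constants are hypothetical.
import math

WAFER_COST = 5000.0       # dollars per processed wafer (assumed)
WAFER_AREA_MM2 = 70000.0  # usable area, roughly a 300 mm wafer (assumed)
PER_DIE_COST = 5.0        # packaging and test per good die, dollars (assumed)
DENSITY_PER_MM2 = 1e6     # transistors per mm^2 (assumed)

def cost_per_transistor(die_area_mm2, defect_density=0.002):
    """Dollars per transistor; defect_density is in defects per mm^2."""
    dies_per_wafer = WAFER_AREA_MM2 / die_area_mm2  # ignores edge loss
    yield_fraction = math.exp(-defect_density * die_area_mm2)
    good_dies = dies_per_wafer * yield_fraction
    cost_per_good_die = WAFER_COST / good_dies + PER_DIE_COST
    return cost_per_good_die / (DENSITY_PER_MM2 * die_area_mm2)

def best_area(candidates, defect_density=0.002):
    """Candidate die area with the lowest cost per transistor."""
    return min(candidates, key=lambda a: cost_per_transistor(a, defect_density))

if __name__ == "__main__":
    areas = range(10, 2001, 10)
    for d in (0.002, 0.01):
        a = best_area(areas, d)
        print(f"D={d}: cheapest die ~{a} mm^2, "
              f"${cost_per_transistor(a, d):.2e} per transistor")
```

Raising the defect density in this model moves the cheapest die size down, matching the point that a process with more defects can favour smaller chips even if its raw cost per transistor is lower.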

Back in the ’70s, hot rodders had their own version of Moore’s Law, which stated…

“If this works, too much will be even better!” B^)

Another thing that’s not mentioned is that our computers are good enough. At one time computers were limited in what they could do. Now they are good enough for most things. I used to upgrade every year; my last computer lasted 5 years. We are now at 64 bits and we won’t be exceeding that in my lifetime. And the market for PCs has collapsed; everyone is going mobile, so there’s no need to spend huge amounts on developing new CPUs. The thrill is gone.

Mobile devices are going through an explosive growth phase, and when that dies off something else will take its place.

Think of the things we can do with computers now that we didn’t even dream of 10 years ago, then try to imagine the kinds of things we’ll be able to do 10 years from now with ever-increasing power and capabilities.

The thrill is far from gone – it’s just shifted focus.

Yes, desktop publishing and emulating Solitaire have reached satisfaction. AI has not even left the starting blocks.

Fortunately, AI works well with distributed processing; it’s nearly always been a matter of width over speed. We’ve just used speed in the past cos the width wasn’t available. Now that clock speeds have hit a wall, wider word sizes (i.e. 64 or 128-bit) and multiple cores have become where the advancement happens.

Then again, stupidly parallel supercomputers have had plenty of power for decades. The main problem with AI is figuring out how to write the software. That’s the problem with software in general. Half of the problem in programming is knowing what question to ask. The other half is how you phrase the question.

Breaking down what we want (an android friend / capable slave) into discrete steps and requirements is still in the pure research stage. It’s produced a lot of useful tools, but solving problems in general, rather than with pedantic specificity, is something computers don’t do.

This^ So much this^

That being said, while AI may benefit from increased parallelism (which, for the sake of argument, let’s say is not overly dependent on physical density for its processing capability), transistor density will have to increase dramatically to get all that parallelism into a package small enough to be mobile. This is because relying on external computing muscle (racks and racks of servers per AI, at immediate-future projected densities) would quickly become impractical when dealing with a large number of robots (such as one in every home). A distributed AI-as-a-utility (like electricity) approach might save you there, but I don’t think it would work for long due to growing processing demands, and it would be somewhat disappointing.

/me is disappointed that computers had to stop exponentially growing NOW of all times, when we’re beginning to figure out how this AI stuff should work (the house-robots in The Time of Eve (a highly recommended slice-of-life anime based on Asimov’s 3 laws) had TERABYTES or more of RAM, which, while perhaps a realistic requirement, will sadly probably not be happening anytime soon)

Bummer.

On a side note, I guess we’d better get good at building optical devices in a big hurry… My new computer engineering solid-state physics textbook (which I’ll buy this week) has a brand-new chapter on the subject. Could a super-simple Turing machine with a long optical delay line running in the terahertz range, through a ton of abstraction, offer noticeable improvement? Could we get to building a CPU of some sort directly in optics anytime soon? Anyone?

Define ‘good enough’. Good enough to run a word processor, sure. Good enough to do particle level simulations of chemical reactions, nuclear reactions, fluid dynamics or finite element analysis, not even close. There are always applications that push the boundaries of computing, whether your computing problem is a loom or weapons simulations.

Commercial systems in excess of 64 bits have been around for well over two decades. Few, however, are microprocessors. I worked on a 256-bit machine in 1985. It was on a single die, though it was not commercialized.

Side note: One of the reasons the superpowers got away from underground testing is that simulations got ‘good enough’ and have continued to get better.

The superpowers stopping actual nuke tests was due to a combination of public pressure, cost, and the availability of an alternative (simulation).

Last but not least, secrecy: you can’t do a nuclear test without the whole world knowing about it, but you can simulate all day long in a dark underground facility and no one will know ;-)

Also, “good enough to run a word processor” is a very important factor: for nearly 3 decades, a new operating system and office SW package meant needing to upgrade the HW.

Nowadays, offices equipped with a Core i5 or i7 have no reason to do that; the average BFU will not see any gains from a new CPU…

SSDs though, are quite something else :D

New Moore’s law is probably going to be all about efficiency…

Exactly. Most CPU cycles are wasted, so why upgrade?

My PC can’t keep up with me when I use it at full tilt, but that’s certainly not the hardware’s fault, because my Amiga, on a 7.something-megahertz 68000 (a chip now found in toasters), was more responsive than this quad-core PC beast running at 3 gig!

My phone is more than capable, however.

The lack of a mass-market reason for the average user to upgrade is the reason for the death of Moore’s Law. If we suddenly all wanted to simulate atoms at home, the drive for More Power! would resume. As it stands, it is only researchers and serious power users driving the high end.

I loved my Amigas. They paid attention to the user. MS makes an OS that sometimes notices the user, but will often run off and ignore them. The MS concept – Are you busy? ‘Cause we have to install a bunch of things to fix problems we shipped and then we’ll reboot and maybe the computer will come back up again. Is that OK?

-“because my Amiga was more responsive on a 68000 7.something megahertz chip ”

I’m sensing some serious nostalgia goggles there. In reality, those old systems were dog slow – you were just less busy then.

I mean, seriously. Those old 68000 and whatnot based computers were slow enough that you could type faster than what they could display on the screen with a typical word processor.

Far as word processing goes, for what it does, Word 6.0 was more than enough, and Word 2 before it was certainly satisfactory.

If you didn’t need WYSIWYG, i.e. you were capable of IMAGINING what bold text would look like, WordPerfect did well enough for years. All the “advancement” in MS Word has brought is ever-uglier posters for lost puppies. And 9 out of 10 greengrocers STILL can’t use an apostrophe properly; how many CPU cores will we need to fix that?

If you were to graph MS Office (and its clone, since MS did such a good job of buying out, bundling, and product dumping to kill the competition) by actual usefulness vs. hardware demand, you’d see a very steep downward line.

Are you somehow trying to say that my mobile devices don’t need a CPU?

Or are you trying to say that there is no need to develop better technology?

I think he’s saying phone CPUs are fast enough. I have to agree.

From what I’ve read, 3-5 is perfectly happy working at >20 GHz at >100 °C.

What’s 3-5?

Indium, gallium, etc.

https://en.wikipedia.org/wiki/Template:III-V_compounds

Ah! Though since Intel et al. have spent so much time and money building and tweaking machines to stretch and compress silicon, focus light beyond its wavelength, and all that sort of stuff, you can understand why they’ll want to absolutely squeeze every bit of usefulness out of the process they already have.

Is there much being done with high-speed Indium etc? Anyone put out a 15GHz 6502, just to prove the principle?

Whatever your interpretation of Moore’s law, the fact that CPU frequencies have stalled since about 2005 means that programmers have had to change their code to exploit parallelism in CPUs whose actual transistor count has still been growing. This is known as “The free lunch is over”. I think it was Herb Sutter who first framed it this way, in 2005: http://www.gotw.ca/publications/concurrency-ddj.htm

There are two concepts here:

(1) The actual original assertion by Gordon Moore, which had to do with the number of components per unit cost doubling every year. This was broken a long while ago, with a shift to doubling every two years, but then entered into a long-term, fairly stable exponential growth which may or may not yet be ending.

(2) A large variety of related hypotheses about generally exponential growth in electronic and computer performance. It has been mutated in MANY ways: transistors per chip (regardless of cost), memory size per chip, memory per dollar, clock speed, net CPU performance, hard drive capacity, etc. A large number of other indicators have followed roughly exponential growth (with differing doubling times) for a remarkably long time. Some have fallen off their original exponential curves, some have not.

The point is NOT to question whether Gordon Moore’s original formulation has been broken or not, but to observe which of the many related exponential growth profiles (all loosely considered Moore’s Law variants or derivatives or associated) are following or not following their own exponential curves, and why.

One of the mutations in your etc. is Size of the Die.

I wonder how yield per slice of ingot is doing…

True. Although bigger dies were themselves an advance, if only because of the difficulty of getting a big enough square of silicon without faults in it.

Remember wafer-scale integration? Next Big Thing in the mid-1980s.

Quantum computers would allow us to greatly increase our computing power… but then maybe that’s a whole different ball game.

Quantum computers are good at things that normal computers can’t do well.

Unfortunately Quantum computers are not that good at the tasks that normal computers do well. They have a different application.

Arg. Every time I try to copy something, the original disappears! My thumb-drive crashed my hard drive! Every time I want to use a previous result, I have to start over!

I should point out that 2005 is about the time that Intel and AMD moved to the 90 nm node and discovered the leakage problems that came with it. Remember “Netbust” and the promise of 10 GHz CPUs?

Leakage wasn’t the main killer of high-frequency CPUs. Netburst’s problem was that highly pipelined architectures don’t make sense when you can drop down multiple cores and nice vector units. Code without branches is almost always parallelizable.

Essentially taking the sequential pipelines and laying them down side by side. RISC figured this out a long time ago, same philosophy.

Still, there’ll always be limits. Decryption (fortunately) doesn’t parallelise well. Luckily, for things like 3D graphics, games, and video en/decoding, parallel is absolutely great. It just needs a bit of discipline, and keeping parallelism in mind. The same applies to a lot of modern uses of a computer. All those years, your multitasking computer was just swapping tasks in and out of its 1 single processor!

Eh. Should’a dropped the things in liquid nitrogen. Solves all the power dissipation problems.

Wait, was a new TOP500 list published, or was there a Hot Chips or other computer architecture conference I missed? The near-annual “Moore’s Law is over” article season normally seems to correspond to those events.

I’ve been mostly ignoring such speculation for the past 20+ years. I believe the topic has been around since people started citing Fairchild Semiconductor and Intel co-founder Gordon Moore’s statements of 1965 and 1975.

IEEE Spectrum Special Report: 50 years of Moore’s Law.

http://spectrum.ieee.org/static/special-report-50-years-of-moores-law

(free public access)

“Cramming more components onto integrated circuits”, Electronics, April 1965

http://www.monolithic3d.com/uploads/6/0/5/5/6055488/gordon_moore_1965_article.pdf

(link to Intel’s ftp site where this was originally available, appears broken)

“Progress in Digital Integrated Electronics”, IEEE International Electron Devices Meeting 21 (1975), pp. 11–13.

http://www.chemheritage.org/downloads/publications/books/understanding-moores-law/understanding-moores-law_chapter-06.pdf

Intel 40th anniversary of Moore’s Law

http://www.intel.com/pressroom/kits/events/moores_law_40th/

Intel Moore’s Law @ 50

https://www-ssl.intel.com/content/www/us/en/silicon-innovations/moores-law-technology.html

One thing that hasn’t been mentioned here is that the cost per unit of processing power has fallen off a cliff.

System-on-chip designs like the RPi cost less than an hour’s salary and are vastly more powerful than anything NASA had in the ’60s, while fitting in a pocket (or high heel) and having gigabytes of RAM and storage. Indeed, half the time the keyboard costs more!

An hour’s salary for some fortunate people.

And yep, that’s a hidden caveat with the Raspberry Pi. A powered USB hub isn’t cheap, but it’s necessary if you want to connect hard drives, Wi-Fi dongles, etc.; often the hub costs about as much as the Pi itself. A keyboard and mouse aren’t too expensive; you can get a wireless set for a tenner at my local supermarket. Then there’s the SD card, though since the Pi came out a usable size of a few GB is no longer too dear, although it’s still a big chunk compared to the “$29 computer”. And that $29 has a weird exchange rate when buying from local suppliers in the UK.

I never know whether Moore’s law has held for the transistor count of microprocessors because the microprocessor industry wanted to make Moore’s law work, or whether it held for so long because improvements in electronics technology happened to jump in “quanta” of two years.

This matching of the technological jumps always sounded a little strange to me.

I’ve been hearing the end of Moore’s law proclaimed for 30 years. Fear not, somebody somewhere has a bright idea, or will wake up some morning having solved a problem that’s had them stumped.

Nah, we’re getting to the point where you can count the atoms in a transistor. Increased leakage really hurts power consumption, and the need to keep signal above noise limits how low a voltage you can run the thing on. We’re hitting actual physical limits. At least as far as making computers out of baryonic matter goes.

A PC from 1995 was pretty much useless in 2000 and beyond. My current PC, built in late 2008 (Intel E8400), still serves me well. It runs CAD and dev programs (SolidWorks, Visual Studio, Ubuntu stuff, etc.). After upgrading the GPU from an 8800GS to a GTX560, it runs my fav. games at 1920×1200. I could switch to an i7 with more RAM and an SSD, but meh, I think I’ll buy a nice car and maybe a better scope.

“It’s almost 9000!” Obligatory: the current overclocking world record, on liquid nitrogen.

[Brian Benchoff] – This IS a strange article. On one side, we ARE able to confirm that, as far as what we can simulate goes, we can double the number of transistors. The other side of the coin is that the complexity of using them effectively is also increasing.

I guess the most recent “good on paper / in theory” debacle was the slides regarding the Kepler vs. Maxwell GPU architectures. *Not going to name the company*, as my wallet is still emotionally traumatized by my purchase of the 970 (not EVGA, thankfully). Long story short, the 4GB of memory is really 3.5GB plus a much slower 0.5GB.

The biggest problem is indeed a company’s capacity, as it grows, to keep innovating without acquisitions. Maybe good old-fashioned egos? Or lack of foresight and planning? Fear of risk and failure? Not enough time spent on gentle introduction and proper testing? Not enough money spent on R&D?

I’ve worked with some brilliant people who, in regard to common sense and treatment of others, were not so nice. Outright mean, predatory or creepy, but they were the company “star”. Be it scaling system designs, forgetting about attenuating signals, the Hall effect and Lorentz force, memory usage and leaks, cache hits vs. misses, closing the connection to the JDBC pool, or choking a physical HDD at 90% capacity in spite of the Fibre Channel and SCSI array size.

I think we are starting to hit these limits not because of physics but because of our own limitations and specializations in the fields of study we focus on (say, a Ph.D. in digital signaling), and we are inherently reluctant to allow someone from another field (an analog specialist) to make suggestions.

“When the opponent is challenged or questioned, it means the victim’s investment and thus his intelligence is questioned, no one can accept that.”

http://www.nasa.gov/sites/default/files/atoms/files/arc-17130-1_nanostructure-based_vacuum_channel_transistor.pdf

Hm, problem with being an expert on, say, digital signalling, is that science uses models. Engineering more so. In order to do useful work without having to know everything in the universe, a model has to approximate, and ignore certain aspects of a thing. That’s what a model IS.

So that’s why it’s vital, when faced with a problem you can’t seem to solve, to bring in knowledge from somewhere else. It might well be that your problem has long been solved in the analogue specialist world, to use your example. The Universe isn’t split into categories as sharply as the educational certification system is.

Then again I’ve grown out of taking people knowing more than me as a threat to my ego. If an argument’s about what an insufferable butthole I am, I’ll take that personally. If it’s about some quality of physics, or some factual matter, I don’t. If you don’t accept there’s a whole lot of stuff that you don’t know, you’re of limited use in your field, as well as childish.

A lot of people (i.e. most of the shit-throwing monkeys) still haven’t got the hang of separating a personal argument from an objective one. That’s actually often to my detriment: when I’m arguing over some factual thing, I don’t expect people to get personally upset over it, so I don’t consider their feelings when I speak. I shouldn’t have to. But I do have to, and sometimes that upsets friends.

Fortunately my family know I have the tendency and just stop taking any notice of me. Harsh, but keeps the relationships going! And I don’t really bear grudges.

“taking people knowing more than me as a threat to my ego.”

Just one more step. Remember the only real threat to your ego is yourself.

People don’t ~really~ matter. However, factor in caring for your elders, self-preservation, bargaining, haggling, mortgage payments, and keeping your job to be able to build and provide for a family. Ahhhh. That’s when you can’t tell whether people are pretending they are beyond it or playing at being beyond it. But, you know, no matter what: “approval junkies”.

Usually that’s when it creeps back in. People taking credit for something you made or dedicated time to. Or inversely people dumping the crap they don’t want on your lap and saying “This is yours now”. *Nope! Not the baby’s daddy!*

It’s futile, it’s so Kenny Rogers, y’know? When to hold, when to fold. Till our dying breath.

*Gettin’ Schwifty on Steve Jobs’ grave, yeah. Could have prevented his death.* I mean, is eating only fruits and nuts now a means of suicide?

“I don’t really bear grudges.” You have to learn from past mistakes, so you identify behavior patterns, speech mannerisms, body language, and expressions. It isn’t about never forgiving. It’s easy to forgive those who are “dead” to you. It IS about never forgetting.

“I think we are starting to hit these limits not because of physics but because of our own limitations and specializations in the fields of study we focus on (say ph.d in Digital signaling) and are inherently reluctant to allow some from another field (an Analog specialist) to make suggestions.”

I often worry about that.

That, as a species, we are missing big breakthroughs because they require specialist knowledge in two distinct fields, and we currently rely on just dumb luck for those two to ever meet.

Look. No one will ever need more than 640K of RAM. And speaking of MS, can we get a worldwide ban on the use of Excel plots? They suck so badly and always have. Please! Someone just use little dots, and NEVER connect discrete points with lines. Lines and curves imply a continuous process. Just stop it!

The idea of taking data points for a graph is that there is a continuous flow of values between the dots, a trend. If the points in a graph are completely unrelated and all over the place, you haven’t discovered anything and don’t have a point to make. So you really shouldn’t be making graphs about it!

I am posting on my Atari 800 on a 1200 baud modem. Why do we need more?

Seriously though, I hate to quote the “plastics” thing from The Graduate, but the future is in graphene. I work with LED lighting, and we have more than followed the graph, to the point where we thought we were going to peter out. Graphene is the answer.

> I am posting on my Atari 800 on a 1200 baud modem. Why do we need more?

prove it. Because I want to see that.

^seconded. Please send in a tip!

I’ve got a pic of HAD loaded on my wristwatch. It’s one of those Mediatek 6326 (IIRC) watch phones, so it’s not really a miracle. Although getting Internet (over 2G) in the first place was pretty clever, since it looked like the phone only allowed use of presets for a few big Chinese ISPs. Of course nothing in the manual, and the menu is Engrish on a ~128×96 pixel screen.

In days gone by, you could access CompuServe or whatever on any old text-based modem-having thing. The Atari 800 has a 1.79MHz 6502, almost twice the speed of the Commodore 64 of a few years later. Those extra cycles were handy in feeding the Atari’s amazingly versatile graphics system, which had its own little processor, possibly Turing-complete, can’t remember.

Are there still public shell accounts you can dial into? Probably not. But maybe a college or something. And you could set one up at home. There’s a TCP/IP stack for Z80-based machines (it started as a project on the Z88, a GREAT Clive Sinclair-designed laptop: A4-sized, with full-size keys, weighing a kilogram and running for 20 hours on 4×AA batteries). It has since been ported to the Spectrum and most other Z80 home computers. Is there a 6502 version? Well, who cares!?

The Game.com of a few years ago was a handheld console with a modem option, launched in agreement with an ISP who provided simple menu-based text access to the net for it.

Worst-case, if you can get Telnet you can always connect it to port 80.
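For anyone who hasn’t done it: “Telnet to port 80” just means typing a plain-text HTTP request by hand. Here is a minimal Python sketch that builds the same bytes and sends them over a raw TCP socket. `build_get_request` and `fetch` are hypothetical helper names, and `example.com` is only a placeholder host; many sites now answer plain port-80 requests with a redirect to HTTPS, so a `301` reply would not be surprising.

```python
import socket


def build_get_request(host: str, path: str = "/") -> bytes:
    """Return the bytes you would type by hand in a Telnet session to port 80."""
    lines = [
        f"GET {path} HTTP/1.1",
        f"Host: {host}",
        "Connection: close",  # ask the server to hang up after one response
        "",  # these two empty entries make the joined text end in \r\n\r\n,
        "",  # i.e. the blank line that terminates the header block
    ]
    return "\r\n".join(lines).encode("ascii")


def fetch(host: str, path: str = "/", port: int = 80, timeout: float = 10.0) -> bytes:
    """Send the request over a plain TCP socket and return the raw reply (headers and body)."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(build_get_request(host, path))
        chunks = []
        while True:
            data = sock.recv(4096)
            if not data:  # server closed the connection
                break
            chunks.append(data)
    return b"".join(chunks)


request = build_get_request("example.com")
print(request.decode("ascii"))
```

With network access, `fetch("example.com")[:200]` shows the status line and first headers, exactly what the Telnet session would have printed.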

Anyway yeah, old computers, they’re great!

What am I missing? No matter how I figure it 2x is not x^2 (for meaningful values of x.) Why does the article keep interchanging “double” and “exponential?” They are not the same.

Moore’s Law says: the “most cost-effective number of transistors per chip” is proportional to 2^(N/24), with N in months, making it 2×2×2×…, with an additional factor of 2 every 24 months (or you can use 18 months instead of 24 for the “updated” version of it).

And the x^2 you are writing would be quadratic, not exponential. Exponential is n^x with n = const. and x steadily growing.
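To make the distinction concrete, here is a minimal Python sketch of the 2^(N/24) form above. The function name `moore_factor` is made up for illustration, and the 24- and 18-month periods are simply the two readings mentioned in the comment. Over a decade (120 months) the 24-month version gives 2^5 = 32.

```python
def moore_factor(months: float, doubling_months: float = 24.0) -> float:
    """Growth factor after `months` if the count doubles every `doubling_months`."""
    return 2.0 ** (months / doubling_months)


# Each extra doubling period multiplies the total by 2 again: that is what
# makes it exponential (2^x) rather than linear (2x) or quadratic (x^2).
for period in (24, 18):
    print(f"doubling every {period} months: x{moore_factor(120, period):.1f} after 10 years")
```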

Oops, you’re right. ^x… Still confusing though. I keep thinking of the examples of growth functions from math class.

2x, x^2, n^x, x!

With the point being made that the “doubling function” is pretty slow compared to exponential or factorial.
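A quick Python sketch that tabulates the four functions listed above, taking n = 2 for n^x (the dictionary name `growth` is just for illustration). Repeated doubling is the 2^x row, which soon outruns both 2x and x^2; only the factorial grows faster in this list.

```python
import math

# The growth functions from the comment above, with n = 2 for n^x.
growth = {
    "2x": lambda x: 2 * x,
    "x^2": lambda x: x ** 2,
    "2^x": lambda x: 2 ** x,
    "x!": math.factorial,
}

for x in (1, 5, 10, 20):
    row = ", ".join(f"{name}={f(x)}" for name, f in growth.items())
    print(f"x={x}: {row}")
```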

Actually, Moore’s Law is only about this:

“The number of transistors that are integrated into a single chip at the cost-effective optimum”

The article mixes it up together with “Dennard Scaling”:

https://en.wikipedia.org/wiki/Dennard_scaling

While this might be nitpicking, it’s still an important differentiation.

In around 2005 we saw the end of Dennard Scaling, and the start of exploiting Moore’s Law with parallelization (Multicore).

Moore’s Law was still thriving. Just over the last 2-3 years it’s now also slowing down.

Overall, in relation to actual computational performance alone, we could still try to exploit Moore’s Law for energy efficiency more than we do now. Nowadays everything is about power and energy efficiency, scalability, and reliability (smaller devices are more susceptible to errors from particle strikes/radiation, which always surround us to some degree).

For hardware designers it wouldn’t be a problem to go beyond just a “CPU” and a “GPU”; there could be a whole range of more specialized processors. Some small steps in that direction have already been taken, e.g. ARM’s big.LITTLE ( https://en.wikipedia.org/wiki/ARM_big.LITTLE ).

Software programmers have had the “luxury”, all these years, of only needing to consider a general-purpose single-core processor ( http://www.gotw.ca/publications/concurrency-ddj.htm ). Now they are learning multicore and using GPUs. But this can already be very hard for a single human being to keep track of without tool assistance. And some isolated problems cannot be handled in parallel at all and can only run on a single core (“you cannot dry your laundry while you are still washing it”).
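The laundry point can be sketched in Python: independent inputs can be handed to a pool of workers in any order, while a chain in which each step consumes the previous result has to run one step at a time, however many cores are available. SHA-256 is just a stand-in workload chosen for illustration, and the names `digest`, `independent`, and `chained` are hypothetical. In CPython the GIL means this shows the dependency structure rather than a real speedup.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def digest(data: bytes) -> bytes:
    """Stand-in unit of work: one SHA-256 hash."""
    return hashlib.sha256(data).digest()


# Independent loads: each hash depends only on its own input,
# so the work can be spread across workers.
inputs = [f"load-{i}".encode() for i in range(8)]
with ThreadPoolExecutor(max_workers=4) as pool:
    independent = list(pool.map(digest, inputs))

# Dependent chain: step n needs the output of step n-1 (you cannot dry a load
# before it is washed), so extra cores cannot shorten this loop.
value = b"seed"
for _ in range(8):
    value = digest(value)
chained = value
```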

But whether problems can be solved in parallel or not, it is always more efficient to have them solved by hardware/cores more specialized to the task, or by application-specific co-processors/accelerators. But then no single programmer will easily be able to keep track of them all. One of the earliest works that looked into this was: http://greendroid.ucsd.edu/

So interesting times are ahead, where both software and hardware need to change if we want to get more application performance for the same energy…

I wonder what the gains are from configuring an FPGA for your specific task, versus the losses from having to use an FPGA with its great inefficiency (which comes from its versatility, e.g. each logic “gate” having to be so much more complex, and the “wiring” between them too). I’m pretty sure most FPGAs in products spend their whole life in just the one configuration, simply being cheaper to buy than the cost of setting up an ASIC, which is another step down the chain towards full-custom silicon.

So really, most of the silicon in most FPGAs is wasted, by necessity, lots of different necessities. Still, people talk of putting them in PCs. Dunno what for; the only demand since general-purpose maths and processing has been 3D graphics, and that’s all based on fixed functions.

Efficiency-wise, modern code and CPUs go to a lot of effort to keep as much of the silicon usefully occupied as possible. But FPGAs are right where hardware design and programming meet, where hardware “programming” is done. There are even C compilers that can output code for FPGAs, with obvious limitations of course, and recommended ways of doing things that really must be followed if you don’t want massive waste and inefficiency. Selling MS Office implemented on its own chip would be interesting. It would teach MS some discipline too, but that’s an alternate fantasy universe.

I’d be surprised if there’s no law analogous to Shannon’s, only about processing data rather than sending it or storing it. There must be some fundamental way of representing a unit of information processing. Maybe tied in with Turing’s theory.

There is actually idea-sharing between the reconfigurable computing and accelerator people.

A basic computer with every cell being reconfigurable is not efficient with current off-the-shelf FPGA technology. Typically, if you implement anything at the same technology node (e.g. a specific 20nm node), a rule of thumb for FPGAs is (or was?) 10x more area, 10x less energy efficiency, and 10x slower. You first need to compensate for that before you can beat ASICs. But there are some algorithms that can easily gain 100x in speed if implemented in parallel on an FPGA, though those will then only be part of your total application.
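The last point, that a 100x kernel only helps as much as its share of the total runtime allows, is the standard Amdahl’s law calculation. The comment doesn’t name it; it is brought in here as the usual way to do this arithmetic. A minimal sketch, assuming the accelerated kernel is exactly the given fraction of the original runtime, the rest runs unchanged on the CPU, and the cost of moving data to and from the FPGA is ignored (the function name is hypothetical):

```python
def overall_speedup(accelerated_fraction: float, kernel_speedup: float) -> float:
    """Amdahl's law: whole-application speedup when only part of the runtime is accelerated."""
    unaccelerated = 1.0 - accelerated_fraction
    return 1.0 / (unaccelerated + accelerated_fraction / kernel_speedup)


# A kernel that runs 100x faster on the FPGA, for various shares of the original runtime.
for fraction in (0.1, 0.5, 0.9, 0.99):
    print(f"{fraction:.0%} of runtime accelerated -> {overall_speedup(fraction, 100.0):.2f}x overall")
```

With 90% of the runtime accelerated, a 100x kernel yields only about 9.2x overall: the untouched 10% dominates.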

Because of that, a complete system of a competitive reconfigurable computer would of course have some hardwired (=in ASIC cells) elements, a CPU, some very common accelerators + some both tightly and loosely coupled FPGA blocks for custom accelerators or co-processors. “Xilinx Zynq” is a step in that direction, at least from the hardware side, not sure about the software support.

But thanks to Moore’s Law, so far just adding many different computational units to a general-purpose CPU seems to have worked better. (Examples: it began with MMX and 3DNow!, which have evolved through SSE to AVX; then there are specialized instructions for AES, for example, putting the whole encryption/decryption in hardware… there are enough transistors available :-) )

There are some people thinking about the fundamentals of computing; you find them more in the EE and physics world. A lecture I heard began by connecting computing to the physical world, to the fundamental laws of thermodynamics. That is Landauer’s principle: https://en.wikipedia.org/wiki/Landauer's_principle
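Landauer’s principle puts a lower bound of k_B·T·ln 2 on the energy needed to erase one bit of information. A small Python sketch evaluating it at an assumed room temperature of 300 K (the function name `landauer_limit` is hypothetical; the Boltzmann constant is the exact 2019 SI value):

```python
import math

BOLTZMANN = 1.380649e-23  # J/K, exact by definition in the 2019 SI


def landauer_limit(temperature_k: float) -> float:
    """Minimum energy in joules to erase one bit at the given temperature: k_B * T * ln 2."""
    return BOLTZMANN * temperature_k * math.log(2)


room = landauer_limit(300.0)
print(f"{room:.3e} J per bit at 300 K")  # about 2.87e-21 J
```

The bound scales linearly with temperature, which is one reason low-temperature and reversible (e.g. adiabatic) logic come up in this context.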

Maybe more practically interesting in this direction is the idea of ‘adiabatic circuits’ ( https://en.wikipedia.org/wiki/Adiabatic_circuit ), which try to conserve as much of the stored energy as possible through unconventional circuit design.

There is much more of course that you can read about in this direction, just follow some more internal wikipedia links :-)

Moore’s Law, or more generally the law of exponential growth, is kinda like classical physics. Great on short time scales, but if you really want to model larger time scales, you have to use the big boys tool: S-curves. See, for example: https://taketimes.wordpress.com/tag/ray-kurzweil/
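A rough Python sketch of that point: an exponential and a logistic (S-)curve that start at the same value with the same initial growth rate are nearly indistinguishable early on, and then the S-curve flattens toward its ceiling. The rate of 0.35 per step (doubling roughly every two steps) and the ceiling of 1000 are arbitrary assumptions, not fitted to any real data.

```python
import math


def exponential(t: float, x0: float = 1.0, rate: float = 0.35) -> float:
    """Unbounded exponential growth from x0."""
    return x0 * math.exp(rate * t)


def logistic(t: float, x0: float = 1.0, rate: float = 0.35, capacity: float = 1000.0) -> float:
    """S-curve with the same start and initial rate, saturating at `capacity`."""
    return capacity / (1.0 + (capacity / x0 - 1.0) * math.exp(-rate * t))


for t in (0, 5, 10, 20, 40):
    print(t, round(exponential(t), 1), round(logistic(t), 1))
```

Fit only the early points and the two models agree; the difference shows up only once the ceiling starts to bite.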

When working with deep-UV lasers in the past, we ran into problems with the optics getting etched before the chips were finished. I believe this needs to be looked at more closely as a factor in why the chips started to level out.