On February 25, 1991, during the eve of the of an Iraqi invasion of Saudi Arabia, a Scud missile fired from Iraqi positions hit a US Army barracks in Dhahran, Saudi Arabia. A defense was available – Patriot missiles had intercepted Iraqi Scuds earlier in the year, but not on this day.

The computer controlling the Patriot missile in Dhahran had been operating for over 100 hours when it was launched. The internal clock of this computer was multiplied by 1/10th, and then shoved into a 24-bit register. The binary representation of 1/10th is non-terminating, and after chopping this down to 24 bits, a small error was introduced. This error increased slightly every second, and after 100 hours, the system clock of the Patriot missile system was 0.34 seconds off.

A Scud missile travels at about 1,600 meters per second. In one third of a second, it travels half a kilometer, and well outside the “range gate” that the Patriot tracked. On February 25, 1991, a Patriot missile would fail to intercept a Scud launched at a US Army barracks, killing 28 and wounding 100 others. It was the first time a floating point error had killed a person, and it certainly won’t be the last.

Floating point numbers have been around for as long as digital computers; the Zuse Z1, the first binary, programmable mechanical computer used a 24-bit number representation that included a sign bit, a seven-bit exponent, and a sixteen-bit significand, the progenitor of floating point formats of today.

Despite such a long history, floating point numbers, or floats have problems. Small programming errors can creep in from a poor implementation. floats do not guarantee identical answers across different computers. Floats underflow, overflow, and have rounding errors. Floats substitute a nearby number for the mathematically correct answer. There is a better way to do floating point arithmetic. It’s called a unum, a universal number that is easier to use, more accurate, less demanding on memory and energy, and faster than IEEE standard floating point numbers.

The End of Error

The End of Error: Unum Computing by [John L. Gustafson] begins his case for a superset of floating point arithmetic with a simple number system of integers expressed with just five bits. The number 11111 represents +16, the number 01111 represents zero, and 00000 represents -15. Adding 10001 (+one) and 10010 (+two) equals 10011 (+three). This is simple binary arithmetic.

The End of Error: Unum Computing by [John L. Gustafson] begins his case for a superset of floating point arithmetic with a simple number system of integers expressed with just five bits. The number 11111 represents +16, the number 01111 represents zero, and 00000 represents -15. Adding 10001 (+one) and 10010 (+two) equals 10011 (+three). This is simple binary arithmetic.

What happens when 10111 (+seven) and 11010 (+ten) are added together. The answer, obviously is seventeen. How is this represented in the five bit number format? If this data type were implemented in any computer, the answer would overflow, the answer would be 00000 (-fifteen), and while the answer would be wrong, nothing of significance would happen until that answer leaked out into the real world.

IEEE floats have exceptions and tests, but results calculated as floats will not always be the exact result. This is the problem of floating point arithmetic; the problem of giving exact results is too hard, so use an inexact result instead.

[Gustafson]’s solution to this problem is a superset of IEEE floats.

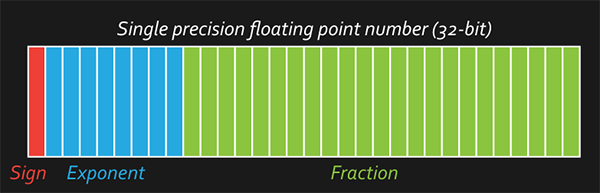

IEEE floating point numbers have just three parts – a sign, an exponent, and a fraction. While a 32-bit floating point number is capable of expressing numbers between 10^-38 and 10^38, with about seven decimals of accuracy.

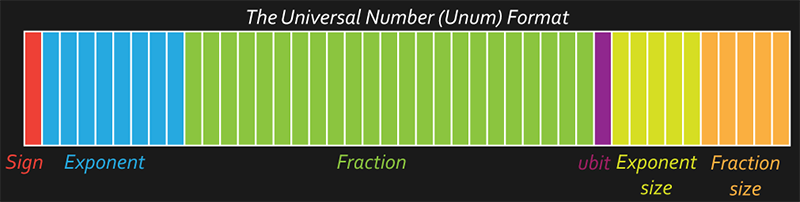

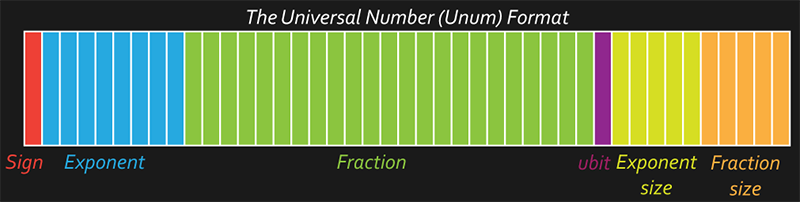

Unums, on the other hand, are a superset of IEEE floating point numbers. They include three additional pieces of metadata: a ubit, the size of the exponent, and the size of the fraction.

The Ubit

The example [Gustafson] gives for the utility of the ubit is just a question: what is the value of π? It’s 3.141592653, and that’s good enough for any calculation. Pi continues, and we signify that by adding an ellipsis on the end – “3.141592653…” Now, what is the value of 7/2? That’s “3.5” – no ellipsis on the end. For some reason, humans have had the capability to designate that a number continues, or if it is exact for hundreds of years, and computers haven’t caught up yet. The ubit is the solution to this problem, and it’s just a single bit that denotes if the fraction is exact, or if there are more non-zero bits in the fraction.

Exponent Size Size and Fraction Size Size

The two additional pieces of metadata [Gustafson] adds to the floating point number format are the size of the exponent and the size of the fraction. Both of these are simply the number of bits required to store the maximum number of bits in the exponent or fraction, respectively. For example, if the value of the fraction is 110101011, the fraction size is nine bits, which is 1001 in binary. The fraction size size, therefore, is four bits long, the number of bits required to express a fraction size of nine.

By only storing what is needed in the exponent and fraction, the average size of each unum is decreased, even if in the worst case the unum is larger than a quad precision floating point. Accuracy is retained, and even larger numbers than what a quad can handle can be represented.

If dealing with numbers that vary the length of their data structure sounds ludicrous, think of it this way: we’re already dealing with at least four different floating point sizes, and conversions between the two can have disastrous consequences.

In the first launch of the Ariane 5 rocket, engineers reused the inertial platform of the earlier Ariane 4. The Ariane 5 was a much larger and much faster rocket, and higher acceleration caused a data conversion from a 64-bit float to a 16-bit integer to overflow. A unum would have prevented this.

There are considerable downsides to unums; since the Zuse Z1, continuing through the first math coprocessors of the 80s, and even today, chip designers have put floating point units in silicon. Your computer is more than capable of handling floating points, poorly or not.

Technologically, we’re at a local minima. Unums are an exceedingly better choice than floats for representing numbers, but it comes at a cost. Any implementation of unums eventually falls back onto software, and not 30 years of chip design that has followed the introduction of IEEE floating points. It may never be implemented in commercial hardware, but it would be something that solved a lot of problems.

It’s unclear to me how unums are better. What practical purpose does the ‘ubit’ serve? How would you efficiently implement unum math in silicon? And how do you notate 1/10 as a unum, since an exact representation still isn’t possible?

Replying because I’d like to know to.

Is there an option on here to follow a comment without leaving a reply?

No, since it’s only 1993 message forums and thread-based commenting is still in its infancy. I hear that in a few years someone will invent an “edit” function as well – won’t that be grand!

Ha.

Yeah, I’d love an edit, I can’t believe I wrote ‘to’ in my comment.

Wonder why they don’t just use Disqus…

Because the obvious thing for us to do would be to integrate hackaday.io profiles.

Wonder why they don’t upgrade from WordPress to Drupal…

Because Disqus is a spy ? *put tin foil hat*

or a r/hackaday subreddit, then just embed the thread in each article…

> Wonder why they don’t just use Disqus…

Ssssh! Don’t give them any ideas!

@chranc: /r/hackaday already exists, and we don’t control it. There were plans for a bot that would repost everything from here to the subreddit, but there’s only one guy modding it.

But a subreddit would be a silly idea anyway. We already have our own social media thing that’s not overflowing with cats and rare pepes and isn’t a complete echo chamber that’s killing the web.

We’re good.

Brian – integrating .io is a great idea – while also adding features for following threads, and >maybe< editing for registered .io users.

Is this in the pipeline?

I think it would cut down on the divisive troll-style interactions if people had more of an established presence here.

It would also maybe encourage some of the awesome users on .io to use the main blog comment sections more.

Whatever you do, as you note, dont use disqus, facebook, reddit or any other third party! The experience on mobile with disqus is abysmal, facebook is a freakshow, and reddit is also over run by the rare pepes!

In order to appease the hackaday community, they are running the entire comments section off a complex arrangement of 555 timers. Consider yourself lucky to have anything at all

Wait, I thought they were using BJTs!

Any task that cannot be done on a Babbage computation engine is a task not worth considering.

That is why these comments are compiled mechanically, and served using a complex translation layer running on 1st generation mainframe technology.

Sure they do, but those point-contact types aren’t exactly reliable…

Simple, have them upgrade tothe dual 556 timers, they can be morons twice as fast.

The biggest issue I see with it is the variable length. No matter what, you would have to set aside however many maximum bits you thing you will need in the structure, otherwise when the number outgrows it’s current memory allocation, now you have to allocate more memory, and copy the old value into the new space. That certainly makes it more complicated to use than traditional floats.

Unless its a just a format that’s meant to be allocated at a fixed size, based on a specified precision. But again at any precision you will have some loss of information, it can’t be avoided.

This would only apply with old style fixed structure formats. Modern systems are much more dynamic and don’t used fixed formatting, so variables that change their size aren’t a big deal anymore. Simply unlink the old variable and add a new one on the end of the structure with a field that denotes which variable it is.

As an example of an efficient format to do this in, look at protocol buffers: https://developers.google.com/protocol-buffers/docs/encoding?hl=en

Protocol Buffers are a wire- and storage- encoding; they’re not useful for performing computation on.

Variable *byte* size is one thing. Variable *bit* size is something else entirely. There’s just not enough information in the article here to figure out whether or not this is practical.

I’ve got one word for you m’boy, just one word: analog.

Hey, let’s see a resurgence of analog computers to eliminate all this float nonsense! Oh, wait.. We’d still have to worry about floating grounds.

And good luck getting 50+ bits of dynamic range with analog…

ubit tells if the fraction is exact or not, see article.

I didn’t ask what it signified. I asked what practical purpose it served.

the practical purpose is to know whether the float has been truncated and or rounded (and is therefor not an exact number) or if it is fixed in length and represents the whole number your trying to save.

for simplicity sake imagine that your float can only save 4 decimals if your float now contain the number 0.3333 did i mean 1/3 (0,333333… to infinity) or 0.3333? with a normal float you cant distinguish the two cases with the ubit set you could distinguish both cases

But what’s the practical use of that? Since “being inexact” is a “contagious” property, as soon as you have one such number anywhere in your calculation, soon all of them become so. And how you use that information? Refuse to launch that missile?

Being inexact vs. exact means you know exactly how much precision the output has, and therefore how many bits to store it in.

But you still can’t tell whether you meant 0.33333333333333333 or 0.333…

It’s still ambiguous.

It’s not meant to *give* you more information. It’s meant to make sure you don’t store more information than you need.

Yes… once again, I know what it’s _for_. What would you do differently if you could distinguish those two cases?

Store less bits if the two multiplications are exact. 3.12345 (exact) – 2.12345 (exact) is 1 (exact). 3.12345 (inexact) – 2.12345 (exact or inexact) is 1.00000 (inexact).

Seems to be a marginal gain considering most things are going to be inexact. A more interesting format, in my mind, would be a fractional representation – you have an integer numerator, and integer divisor, and an exponent. Leads to a bunch of redundancy in number representations, obviously, but generates a boatload more exact cases (and 0.1 + 0.1 + 0.1 would equal 0.3).

The book goes into better detail, but some off-the-cuff practical uses:

When calculating the trajectory of an angry bird persay, using 1/2 of 1/4 of the computation power of 32 or 64 bit precision in order to find the identical landing-path means less battery power consumed on your phone.

When processing aliasing (such as intersecting paths of automatic cars or in graphics) you won’t encounter inaccurate overlaps (or non-overlaps) due to rounding errors.

When calculating the traveling-salesman problem, you’ll only use inputs as accurate as you know them (if the city locations are only known as (3.0, -4.0) we won’t pretend to use 7 more bits of accuracy).

When you take the number 1 and divide by 3 and then multiply by 3, you won’t get “exactly .999999999”

When you take Finite Element Analysis of the stress on a part in a powerplant – each section will confidently know whether a failure will occur within their boundaries (to a finite precision).

When you perform calculations, they are can be algebraically true and used to prove tolerances, math theorems, game theory, boundary layer problems, or any other calculation which an estimate with no precision boundary is not an appropriate answer.

One of the best examples in the book is in calculating the trajectory of a pendulum… when you are algebraically rigorous… you can actually break down the path into smaller components based on location or time! Meaning that problems which previously seemed impossible to parallel-process can now be done separately in rigorously bounded tiny pieces!

Please tell me you’re not one of those people who ignore compiler errors on “loss of precision” when you recast a float as an int. :-) If you’re doing precision mathematics knowing if something that comes up as 0.1 is actually 0.1 can be a very big deal.

Quick note: Gustafson has a presentation here –

http://sites.ieee.org/scv-cs/files/2013/03/Right-SizingPrecision1.pdf

which is a ton more technical than here and helps give some of the advantages.

Why do you need the ubit? Because unums are variable bit size. You need to know whether the number was stored small because it doesn’t need more bits for representation, or if it was stored small because it has that little precision.

Imagine the decimal case – you’ve got 1 digit of decimal precision. You store 3.5. Now you multiply it by 2: is your result 7 or 7.0? The ubit tells you which to do: if 3.5 was incomplete, the answer is 7.0. If it was complete, it’s 7. (Conceivably you could also use it to track precision – e.g. 3.5 * 2.0001 is 7.00035, but 3.5… x 2.0001 would be 7.0 but that would generate an equivalent rounding error).

Gustafson’s main argument for unums primarily comes from avoiding wasted DRAM bandwidth, considering doubles are so freaking oversized for most applications. Which is a fair point, but bit-addressibility is so hard that I would question the advantage.

Fair enough, that combination of variable bit length and floating point does make the ubit make sense. I still think it’s a pointless format, though.

It’s definitely not “pointless”: part of the problem is that he’s essentially addressing two ” problems” at once. An *internally* variable float is really a neat idea: doubles have way more dynamic range than is needed typically, and if you do need it, you typically don’t need the precision. If there was a way to dynamically allocate the fraction/exponent, that could be pretty freaking useful.

The second part – dynamically sized floats – is trying to save memory bandwidth, and I think that’s naive. If a unum-based double representation can save people from going to extended or quad precision, that’s good enough. (You don’t have to waste 8 bits on the unum tag either: you could limit yourself to just certain types).

I could see the ubit used for math where in some places you need high accuracy and others you don’t. An example from my own experience.

When I worked out a visually accurate sun and sky simulation for real time use. I had a problem of wavelength calculations throwing INF and NAN into the mix under specific conditions. My geolocation has a trademark palette in the sky that is a dead giveaway for where a picture is taken during late fall. That’s one of the conditions where the INF would sneak in and as a byproduct the NAN; ultimately they manifested as the sky flashing black during dusk when the wavelength shifted towards those trademark tones.

My solution was a hack of a solution but it proved the conditions detectable for what caused the problem. The problem manifested in 16-bit, 32-bit and 64-bit IEEE floats; as I learned is pretty common when it comes to producing such problems. The only viable solution I came up with was fixed point decimals for just those times, it resulted in a visually flawed representation but flawed in the right ways that some artificial cloud coverage was enough to hide it. Now if I had a way to shift around the fraction and exponent to fit my needs as well as a way to extend into multiple floats (via the ubit to represent when to do so), it’s no longer a problem, the computer can safely represent and work with those numbers. Sadly a custom solution isn’t viable there, fixed point works because you can treat it with integer hardware functions, I don’t see treating unum with integer or float hardware functions actually working to produce coherent results. Rolling my own here for software calculation would be too slow for real time but I may try it anyway to see if my suspicion that unum would solve the issue is correct.

If you are running toward a brick wall would you rather not be wearing a blindfold? ubit allows you to track the integrity of a string of calculations. Can you see the debugging value of that?

No, because I don’t see the point in having a flag saying “this value is uncertain” when you could simply determine that at the time you did the calculation that introduced the uncertainty.

You could be that disciplinedm have your code a true finite state machine, but that is a case that is irrelevant to the point, which is that you could count the generated bits and have a metric of accumulated uncertainty if you are coding with floating point values. This lets you set a threshold that you can act on before the uncertainty is out of acceptable bounds.

Yes, but only on a device that has sufficient memory.

Wouldn’t it be possible to implement a UNUM coprocessor in a FPGA?

Intel’s latest custom Xeons seem to come with those attached and are therefore a major candidate for a test implementation ;)

Sure, you could implement a UNUM coprocessor in an FPGA, but you wouldn’t want it. Even with the lowest latency connection available between the processor and the FPGA such as a Xilinx Zynq device where both the processors and the FPGA are on the same silicon die, doing ultra-fine-grained coprocessing would be slow. Much slower than a few extra instructions on the CPU that might get pipelined, etc.

Doing the entire calculation on the FPGA in parallel and taking advantage of the hardware DSP block of the FPGA, you might get good acceleration and you’d very likely better performance per watt than on a processor or a GPU.

There is the possibility to implement in a FPGS the UNUM instructions extension into a standard soft processor. There is situations where accuracy might be more important than top performances.

I remember time where caches was an external component before integrated into the core. Same for the MMU, same for the FPU, and now same for the GPU. Of course a possible UPU (Unun Processor Unit) will probably start his live as an external part of the core. Now if it’s recognized as a strong features, it will naturally be integrated into the core.in the future.

Maybe.

The difficulty with unums is the variable bit size. You could imagine something weird like a coprocessor with its own RAM: you store the unums in main memory (tag separate from data, tags first: then the load can sequentially arrange them into registers easily) and DMA them into the coprocessor.

Then you do a bunch of math on them, completely internal to the processor, by referring to the registers. Each unum has a fixed size *internal to the coprocessor* capable of expanding to whatever precision each one needs. Finally in the end, you DMA the results back into main memory (with their new length).

It’s not a bad idea for an embedded system (or something larger like a smartphone-level ARM) where you’re not at bleeding-edge clock speeds. The coprocessor would need to have quite a bit of RAM to make it worthwhile.

It really needs a “recurring” bit. So long as the recurring binary fraction does not get too long, the result can still be computed exactly.

What would you do differently if the ‘recurring bit’ is set? Besides, most numbers are irrational. ;)

Not the most important ones. The most important fraction that you can’t represent in binary are any of the tenths. Because probably 99% of the problems with float come because “0.1” is not representable in exact binary.

(In fact it’s probably safe to say that the vast majority of floats would initially start with a repeating binary fraction, since you obviously almost always enter in fixed floats in base 10 notation).

So what would you do differently? Calculate the answer perfectly. 0.0(0011 repeating) times 1010 would be equal to 1. To be specific you’d want a bit that indicates the last 4 bits are repeating- that gives you 1/15th through 14/15ths, which includes 3/15ths, or 1/5th.

It’s actually a pretty good idea – the correction wouldn’t be that bad, either – since you just would need a small lookup table for the correction, and add it in at the end. Since the only bits that would be allowed to repeat are the last 4, you’d also have to eliminate the “hidden bit” part of float representation because you’d want to allow the user to shift around the overall power of 2.

…but that’s not how the unum works. And even if it were, what would you do with that notation? Perform an infinitely long computation? Or do vastly more complex math to account for the presence of the ‘repeating’ section? If you’re going to do all that, why not just switch to using rationals instead of floats?

How would the unum prevent an overflow when converting a 64 bit floating point to a 16 bit integer ? Also, what idiot uses a float to maintain the system clock ?

python: time.clock()

I guess that Python just gets the integer system clock and converts it to float. That’s okay. Maintaining such a clock by adding small increments is where it goes wrong.

This. System time has the fraction/usec stored as its own integer. Python is just converting it for the user, so there would be no drift in the long run as in TFA if that function is called repetitively

Interesting stuff, and as a digital hardware engineer, I’ve always wondered about the ‘obfuscation of software’ where constructs are used to make life simpler (but some of the detail is lost). Most of the time it’s fine until you start getting close to the limits and, unless you know your stuff, you may not spot where the limits are and in the world of increasingly complex software, it’s almost impossible.

I have recollection, when learning to design algorithms on DSPs, that one would calculate how accurate the number is to avoid some of the problems discussed in the article (where the physical bit-size of the floating point number causes inaccuracy) but I’ve never seen anyone writing software worry about this. Anyone know if this sort of technique done in software (outside of low-level DSP code) where the limits of the floating point result tracked?

Good software engineers absolutely are aware of, and compensate for the limits of precision in all data types. Especially when converting from one to another. It’s software engineering 101, honestly. Mistakes will happen, however, and my impression from this article is that unums are intended to help compensate for human error. The details of how are presumably contained in the book, I guess?

The counter-argument to this is that simply standardizing on a higher precision IEEE format is much easier and more likely to succeed.. If your number system has smaller error bars than can ever cause a problem, then problem solved. This doesn’t prevent conversion errors to/from small integers, but sometimes the solution that can be implemented is better than a theoretical one that probably can’t. Besides, compilers warn about that crap, and if people writing missile code are ignoring compiler warnings, that’s a training/process issue that needs to be dealt with.

Given that compilers don’t always create good code and often generate different code between versions, they might very well be writing code at the lowest denominator. Does any assembly level language actually warn you about conversion errors? None I’ve ever run into.

So it’s just a variable length float with an overflow bit?

“Unums are an exceedingly better choice than floats”

Only if your application requires more precision than the fixed length data types you have available. Even in that rare case, you would probably be better off handling the issue in your own code.

“killing 28 and 100 others”? Wounding 100 others, maybe?

also

“Floating point numbers have been for as long as digital computers”

probably supposed to be:

Floating point numbers have been AROUND for as long as digital computers

Can we have a proofread button so we dont have to put corrections in the comments please?

Yes, 100 others what? It’s important key of the story!

Probably 28 Americans and 100 other, you know, unimportant people.

Thank you. This is the “truest” comment on this site.

I would say about 80% of errors generated by floats can be eliminated by making people use fixed-point arithmetic instead.

Of course there are SOME places were a float is the right choice (mainly when dealing with huge ranges of numbers), but if I see someone reading the ADC of his µC into a float (especially on 8-Bit arduinos) I feel the urgent need to punch that person.

The value from the ATMega328’s ADC will ALWAYS be in the range of 0 to 1024 and therefore anything beyound a 16-Bit integer is a waste of space and performance.

Same deal with clocks, I have seen people use floats to store the time and then wonder why 10 seconds aren’t equal to 10.000000 seconds.

With fixed point, it’s also easy to get errors if you don’t carefully check all your calculations for the required number of bits. Floating point is probably safer for the novice.

Whaaaaat? Using floating point doesn’t eliminate the need to ensure you have enough precision (which I assume is what you’re saying), but it adds a whole lot of other ways to screw up.

There’s very little that gets screwed up if you take a 16 bit ADC value, put it in a 32 bit float, and perform some simple calculations. The extra bits offer plenty of protection.

Floating point gets you in trouble mostly when repeatedly adding a small number to a big number. This can be easily avoided in most cases.

Or when comparing two floats. Or when converting back to an integer by truncation. Or when adding a continuing fraction such as 1/10 repeatedly. Seriously, the list of floating point gotchas is nearly endless, and very it’s nearly a superset of the issues you have to understand to use fixed-point properly.

And fixed point can get you in trouble for doing a simple multiply.

Comparing two fixed point numbers has exactly the same issue as comparing two floats, as does converting back to an integer by truncation. On the other hand, you can simply do printf( “%.2f”, f ) to automatically do proper conversion and rounding, whereas nicely formatting fixed point requires a bunch more code.

I give up. Enjoy your subtle bugs from misuse of floating point numbers.

Repeatedly adding small numbers to a big number appears to be what caused the error in the Patriot computer. That could have been avoided by going back to the original base number every minute or so for error correction.

Start here, count ‘this many’. Result should be A. If result is A, count ‘this many’ more. Result should be A+’this many’. Iterate until any error exceeds acceptable size. At every step if result is too far from what’s expected, start over.

Or a system could be tested on the count until the accumulated error is too large then automatically start over. If it’s counting time since a fixed start, then increment an integer counter each time the elapsed time error exceeds its limit.

For example, if the elapsed time counter fails its accuracy check in 15 minutes, then each time it has to reset, increment a 15 minute counter by 1. Then you know that each quarter-hour will be accurate, with time elapsed since the quarter will have a very small but increasing error until the next quarter increment. Waiting until accumulated error forces an increment in the fixed interval counter could still accumulate error in the fixed interval counter. It would just take longer.

If your clock is that inaccurate, just have it tick a counter every minute while the elapsed time counter resets every minute.

@Galane: I’d vote you up if I could.

The problem with the Patriot missile clock has ZERO to do with floating point numbers, and everything to do with accumulated error (and potentially, timer overflow).

I’m surprised the Patriot software doesn’t periodically compare the internal time with a GPS time and correct it. Maybe it does, now.

If the article is correct, there was a floating point component: the fact that you can’t accurately represent 1/10 in binary floating point. In a fixed-point or integer setup, this problem would have been obvious, or absent.

Actually it does, for a lot of practical purposes :)

A single float type handles very wide area of applications, while with fixed point you must always choose a correctly sized type. Lazy programmers will not perform analysis either way, but float is more often adequate.

Fixed-Point forcesyou to think about your design before comitting to it and most of the Errors that happen are evident very fast. The example of the time in the missiles drifting off by “just a bit” requires hours of operation to show effect.

On the other hand most Fixed-point things like an overflow can easily be tested for by using the largest anticipated value to test against.

On top of that on weaker system (mainly 8/16 Bit µCs) a float (or even worse a double) requires excessive amounts of resources.

On the other hand, gcc-avr’s floats are surprisingly fast for being implemented in software on an 8-bit micro. I whipped up an IMU using an arduino pro mini and MPU6050 and it only uses 10% of the CPU running at 100Hz. Floating point math was a clear win because it made the code readable.

Embedded folk are often prone to optimizing before benchmarking. Often times the simple approach is the best approach both for performance and for code health.

“Embedded folk are often prone to optimizing before benchmarking.” If I’m writing Code that I know will be running on a very restrictive System it is only natural to try and optimize the thing “on the go” instead of having to go back afterwards and optimize the hell out of everything to make it fit. Especially inside ISRs it is crucial to get fast code as the whole interrupt-system “stalls” while an ISR runs. On “bigger” cores like ARM it is (more) acceptable for low-priority ISRs to take a little longer as high-priority stuff can still get through.

Oh, I totally optimize before benchmarking.

Because most of the time the damn code won’t *fit* before optimizing.

BCD for the win.

If your input (e.g. ADC) and output have a limited dynamic range, you are far better off using 32-bit fixed point math. Fixed point math actually can keep more significant bits as it doesn’t need to store those exponent bits (as the programmer keep track of the exponents.) It is harder because you have to think about the input range, the intermediate results and the output range. You’ll get faster execution time, sometimes code space.

Is it true that even when you specify double precision variables, some embedded compiler (or compiler options) might in fact reduce the actual precision to single to reduce size?

I believe gcc-avr by default treats double as a float. You can force it to use true doubles with a flag.

Fixed point math isn’t automatically a performance again. For example, multiplication can get messy when the product doesn’t fit inside the data type. CPUs often promote the product to a larger type in hardware (32 bit multiply has 64 bit result). C/C++, though, doesn’t provide a mechanism to access those upper bits. You end up having to promote your types before the multiply, and now your 8-bit micro is doing a 64-bit multiply instead of a 32-bit multiply.

Sure, you could write some assembly code to do it more efficiently, but are you good at assembly? Someone else really good at assembly already implemented 32-bit floating point operations in the compiler. If you really do need 32 bits of precision, and you’re doing enough math to have performance issues, it might be time to upgrade to a bigger processor!

“The number 11111 represents +16, the number 01111 represents zero, and 00000 represents -15. Adding 10001 (+one) and 10010 (+two) equals 10011 (+three). This is simple binary arithmetic.”

Maybe not so simple? From these examples, 11111 should be +15, not +16. Also, what is 10000? Another representation of 0? Or is it the “real” +16?

Have to read the book I guess.

I’m not sure what kind of arithmetic that is, it doesn’t seem to be the standard 2’s compliment stuff. Otherwise 00000 should be 0, I think -15 would be 100001. 10000 should be -16. 15 would be the maximum at 01111, 16 would be an overflow with 5 bits.

Um, since 11111 (+16) + 00000 (-15) = 11111 (???) – its pretty clear that this is *not* simple binary arithmetic.

Unless 1 and 0 represent the constructs we are not used to in which case we need to go back and state what the identities actually are – like “x+0 = x”, “x*1 = x”.

As summarised in this post, this is just numeric nonsense that needs no further consideration.

I was concerned with the 10001 + 10010 = 10011, but I think you found a better example.

@arachnidster

The ubit could be used by an algorithm to determine whether additional computation is required. For example, we may need to calculate a number to the nearest .001. Our algorithm calculates the number at the .001 position. However, if there are additional numbers, let’s figure out what the next number, x is at the .0001x location. That number will tell us whether to round up or down.

How does this hypothetical algorithm know whether or not to set the ubit indicating there’s more bits, and if it can do that, why not just bake that into the algorithm itself?

think of ubit meaning “remainder is not 0”

Then instead of ubit, it could be called rin0, oh wait, that could be confused with RINO… B^)

While it may seem much better at a first glance try to imagine doing this with real problems – and soon the precision indication mechanism will fail. And the general mechanism this is a simplification of (interval arithmetic) isn’t foolproof either.

The problem with floating point representation is that the standard uses fixed size of storage. But one can already use variable size representations today if needed.

Neither of your examples are really problems with the floating point representation. The first is a bad use of FP, the clock should be represented using a fixed point number with enough precision and then converted to FP for the related computations. The second is a conversion error due to bad development practices for such a critical system – as the conversion generated an overflow exception no change from FP would help.

I’ve played around with Sympy, which stores numbers/math as symbols instead of values. This allows an arbitrary precision. When you do something like a=1/10 it stores an equation like “a=1/10” instead of evaluating it and storing the value. This allows you to do more math with high precision, like b=a*10 comes out to b=1/10*10, it can then evaluate and simplify (at your request), where b=1. No loss of precision. For non-terminating numbers it either stores them at the precision you entered them, or as the equation like b=sqrt(2). This way when you evaluate the expression, you can evaluate to whatever precision you require. Evaluating would only be used to do some comparison or to actually do something with the date. If it were within some loop, you would store the cumulative expression, and evaluate as needed, instead of storing the cumulative result of the expression, which is subject to cumulative precision errors.

Processors should be designed like this. The data can get large, but you can have an occasional simplification of the expression that compacts it.

Symbolic representation delays calculations, and in sufficiently advanced systems can do considerable mathematical simplifications, the issue is the cost. On a desktop or server (e.g. WolframAlpha) there is sufficient computation resources for simple calculations to be done quickly, but the several orders of magnitude slowdown inherit in symbolic algebraic systems, worst than the arbitrary precision typically also made available in most with such systems.

Whatabout sin(pow(sqrt(2),pi)) ? How could the system “simplify” it so it’s better than IEEE’s floating point ?

For anyone doubting just how hinky floating point can be, here’s a paper on just how difficult it is to accurately compute the midpoint of an interval using floating point: https://hal.archives-ouvertes.fr/hal-00576641v1/document . Remember, the basic equation is just (a+b)/2.

And the slides for this talk show just how many special cases you can end up having to handle doing something like computing the mean of a list: http://drmaciver.github.io/hypothesis-talks/finding-more-bugs-pycon.html#/21

A lot of the difficulty in with midpoint calculation in floating point is correctly dealing with Inf, NaN and under/overflows. Of course, fixed point doesn’t have Inf or NaN (but would have silently produced a wrong result instead), and also suffers from under/overflows.

Oh gese, I struggle enough with 32 bits in PIC assembler, please don’t make it harder.

I propose that quantum mechanics offers a perfect solution. For every numerical entity representing a real-world phenomenon, there is a lowest possible state. That will be defined as ‘1’. Now, we will measure that phenomenon in whole-number units representing its possible quantum increments–the scale will be inherent in the object. The definition of ‘division’ related to each object type will be designed to yield only integer results. Voilà! No more messy fractions!

This is even better than cold fusion! I’m going to be rich! No more fractional dollars for me!

What is the smallest unit of length? If there is such a thing as a lower limit on distance, that would imply that the universe has finite resolution (I imagine these smallest increments as the pixels of the universe). The concept of “analog” completely disappears once you assert that there is a lower limit on any real phenomenon.

Is there a smallest unit of time; where slices any smaller would contain no new information? All of these things, if your hypothesis is correct, would substantiate the idea that the universe is in fact a computer. Is the universe’s CPU speed related to the universal speed limit that is c? Would teleportation be akin to calling the C function memcpy(source, destination)?

The word “atom” came from another word meaning “indivisible”. Science has shown us that atoms are divisible, after all; and so are their constituents. How far can we go splitting things before we reach something truly indivisible, if at all?

Now that I went off on this tangent, I’ll be up all night thinking about it and watching The Matrix :/

An appropriate choice for smallest unit of size would seem to be the Planck length: https://en.wikipedia.org/wiki/Planck_length

The corresponding smallest unit of time would then be the Planck time: https://en.wikipedia.org/wiki/Planck_time

Interestingly, the Planck area (Planck length ^2) is the area by which the surface of a spherical black hole increases when the black hole swallows one bit of information. Put that in your Weber and smoke it!

An added benefit of this system is that it will do way with all the confusion over mass and countable nouns. All will become the latter, saving second-language learners an enormous amount of effort and giving pedantic internet commenters that much less to nitpick about. Win-win!

So, if the Plank length really is what I mentioned above, there is no way any particle, potential, or field can move less than 1.61622837E-35 meters. Doesn’t this force a notion that there is a precise, perfectly-fixed grid (assuming a Cartesian system) that makes up the universe. Every single point in this grid is perpendicular to 6 other points 1.61622837E-35 meters away.

Does that sound right?

Or is the Plank length simply the smallest MEASURABLE distance? By that, I mean there are smaller distances, but the elements (particles, fields, etc) we use to perceive them are relatively too macroscopic (similar to trying to perceive atoms with light photons). The smallest number of photons you can detect is 1, but that doesn’t mean there isn’t spatial diversity below 1 photon at a particular energy.

I’m seriously in over my head here. Genuinely curious!

The Planck length is the length scale at which the structure of spacetime becomes dominated by quantum effects, and it is impossible to determine the difference between two locations less than one Planck length apart. So yes, it is the smallest measurable distance, not that we have instruments that can measure anything anywhere near that small.

It doesn’t suggest a fixed grid, or limited motions, only a smallest distance that is meaningful for any practical purpose. Since we could never, ever have any reason to measure in fractions of a Planck length (that is, we couldn’t even if we wanted to), I propose that it makes sense as a unit length. So, ALL lengths are integer multiples of this distance, and we have done away with those pesky fractions.

A similar analysis will give us unit measurements for everything else, and our need for floating point just floats away.

Of course, we’re going to need some really, really, really big integers to give us all the significant digits that are possible for everyday measurements…

“… is the Plank length simply the smallest MEASURABLE distance? ”

Currently, yes, this is the smallest measurable distance. However, consider why they chose to name that wibbly-wobbly stuff a “string” when its closed-loopedness looks like nothing of the sort. Its because they expect to eventually find “something” even more finite than quantumness; we just haven’t gotten there yet.

Having said this, I must admit, I would be interested to understand our preoccupation with dividing existence into smaller and smaller portion;

“… is the Plank length simply the smallest MEASURABLE distance? ”

Currently, yes, this is the smallest measurable distance. However, consider why they chose to name that wibbly-wobbly stuff a “string” when its closed-loopedness looks like nothing of the sort. Its because they expect to eventually find “something” even more finite than quantumness; we just haven’t gotten there yet.

Having said this, I must admit, I would be interested to understand our preoccupation with dividing existence into smaller and smaller portions, when there is another direction to consider; namely: What is outside our universe? Are we someone else’s Planck length?

I’ll correct one thing that kept getting repeated. The Planck length is NOT the smallest measurable distance. We can’t measure anywhere near that level of detail yet. We may get there in our life times, but I’m skeptical.

It is, however, the smallest distance at which “distance” means anything; or in which “space” means anything, given our current model of the universe. By Heisenberg’s Uncertainty Principle, if we knew an object’s place down to a Planck length, we could have no idea at all about it’s momentum. All movement at that scale is governed by quantum effects, things exist at one point until the wave function says they have moved to another point.

Now, is that actually how our universe works? Well, we’re pretty certain it is and have some good proof to back it up. But we had good proof about atom’s being indivisible and later we had proof that electrons orbited like planets and not in a quantum fuzzy cloud (that theoretically could have the electron orbiting an atom in my computer be over next to the Sun right now). For the time being it’s a map that is good enough, and yes it is one of the first signs that was available that our universe might be simulated (there are more, science keeps looking at that too). But it is just a map. Just like “spooky action at a distance”, it doesn’t have to keep you up at night.

https://en.wikipedia.org/wiki/Planck_scale

Great minds think alike.

Smallest unit of length: the Planck Length

1.61622837 × 10-35 meters

Ibid.

With the missile interceptor scenario, why was system uptime part of the calculation to intercept the missile in a way subject to such roundoff?

I was already familiar with the reason behind the Patriot failure, and it’s a simple logic error. The divided clock was determined in such a way that an error was introduced with every iteration, and more importantly those errors *accumulated*. Which was easily fixed, once the error was realized, by correcting the logic. Recompute the divided clock directly from the master clock every time, extending the number of bits of the master clock if needed.

What’s 1/3? 1.333333… and so on. How many bits does a Unum require to store this with perfect precision? Is it an infinite number of bits? Or is there any other scenario in which the size of a Unum will grow out of control?

If the answer is yes, then when working with Unums the tendency will be to always set a limit to precision, or periodically truncate/round, to prevent this from happening. And then it really solves nothing. There are already other numeric storage formats with arbitrary precision, such as the “Decimal” format in .NET, with the same issues.

Better to just be a good programmer. Widespread use of arbitrary precision numbers as a crutch certainly doesn’t foster that.

All the talk about floats is interesting, but doesn’t anyone find it bizarre that the generation who developed automated processes to determine the MTBF of things like lighters and car door locks couldn’t be bothered to test a multi-billion dollar system for 100 hours?

I can hear that old 80’s sales pitch now:

General: “Ok, it works. But how?”

Salesman: “It’s got a computer!”

General: “Ok, but how does the computer work?”

Salesman: “You flip the power switch to ON.”

General: “SOLD!”

Missiles remain in the air less than a minute. Why was it active for 100 hours?

My guess is

1. They didn’t suspect there was a floating point issue caused by them leaving it on.

2. They were on high alert status and thatmeant they didn’t know when a missle could be launched. You don’t know the time required to reboot and go through the startup checklist.

For real! During the accidental nuclear crisis, it took the Carter era NORAD eight minutes of protocol to realize that they were seeing simulated data(Walker, 2009). That’s eight minutes of turning on thousands of computers for a multitude of systems, in the correct order, and assuring that they are operating per spec.

Walker, Lucy. Countdown to Zero. Film. 2009.

Hmm, maybe because missiles remain in the air for less than a minute, and it took a relative long time to make the hardware ready. Though this type of hardware is certainly faster to make ready today, it still isn’t an instant on technology.

-“the generation who developed automated processes to determine the MTBF of things like lighters and car door locks…”

That’s because they didn’t. MTBF figures in the industries in general are mostly pulled out of a hat. Sometimes they’re based on accelerated wear testing, but quite often they’re either referenced from US military manuals on the expected lifetimes of various components, or simply made up.

I guess I am missing something very fundamental here. Could someone clue me in?

“…higher acceleration caused a data conversion from a 64-bit float to a 16-bit integer to overflow. A unum would have prevented this.”

By what, checking the ubit? But… if a programmer is going to the work of checking the ubit, why not just check the original number to see if it can be represented in 16 bits? Either way you are adding a check. Either way the solution doesn’t have to do with how the number is represented, but good programming practice.

Some things should be handled in integers only so the calculations are entirely deterministic. With 24 bits the clock could have kept time in 25 ms increments to roll over in 100 hrs.

Maybe we should stop trying to place time in a bottle and just accept that it requires its very own type.

Why not implementing this unum format in FPGA, this would be a good start.

Bring back the math-coprocessor? Ever look at why we got rid of it?

We still have math coprocessors today. We just call them GPUs.

That is true but the article is about accuracy. I could be wrong but the programmer still has to be aware of those rounding errors on that GPU negating any benefit from the method in the article.

If you want pinpoint accuracy, there are other standardized methods.

I have a problem with this kind of writing.

“It was the first time a floating point error had killed a person, and it certainly won’t be the last.”

By intention the scud was launched to achieve this killing and hence the evil done at impact.

The floating point error didn’t kill anyone. In this particular case, it specifically failed to save 28 people.

Good call Leonard in documenting accuracy of cause and effect.

Statements:

Pencils cause spelling errors. Guns cause crime. Poverty causes crime.

Corrections:

People who don’t know how to spell or are careless make spelling errors. Criminals cause crimes.

The thing is I expect that the extra bits needed to represent the result will actually be infinite more often than not. The denominator of a fixed-radix system will always be limited to multiples of that radix, so in general they can’t accurately represent most rational number much less most reals. In many other cases of addition the IEEE754 spec already has a pretty darned complicated rounding system. I’m not sure under what situation this information is useful. I guess you could check these bits after a calculation, redo the calculation with a double or long double as needed. But then you’d promptly discard the extra precision when you store to the destination data structure. You could throw an exception, but then if you know this might be a problem you could detect it ahead of time.

Is logging the only useful thing you can do?

“It was the first time a floating point error had killed a person, and it certainly won’t be the last.”

Don’t be so dramatic. The guy with his thumb on the launch button killed 28 and wounded 100 others.

True,

and there was no floating point error, just a bad programmer with poor knowledge of numerical analysis baked by some “defense” equipment salesman, just to make money from craps that don’t work most of the time

I can’t help but to think that the extra 11 bits used by the unum format would probably solve 99% of the float issues if they were simply used to have a longer float.

The rest of the problems, such as coverting from a long float to a short integer without checking if it fits have nothing to do with the floating point format itself.

Half the point of unums is to save space on representation. Doubles have ludicrously more dynamic range than needed.

Every time a SCUD missile was fired (it could be seen visually by its rocket plume by every aircraft in the theater) a warning was sent out.

Since they didn’t know the target early on, all the air raid sirens went off all over Saudi. Usually the SCUDS targeted Dhahran and Riyadh.

A few minutes later the Patriot battery would fire its missiles at the SCUD. The people near the Patriot could hear the launch. Some soldiers got into the habit of “if the Patriot doesn’t launch, the SCUD must be going somewhere else.”

Rather than run to the bomb shelter, or the fox-holes provided, a lot of soldiers would just roll over and go back to sleep. So, while the Patriot not firing is truly a problem, it had nothing to do with the soldiers deaths. They were not supposed to be in the building

Besides, the Patriot technology at that time was usually just knocking the missile off course. Sometimes the SCUD warhead would be intercepted and go off at altitude, but you still had a ton of kinetic energy hitting the ground.

If a VW Bug fell out of the sky at Mach-1, it would still have enough energy to kill you.

The Patriot was derived from a proximity detonation anti aircraft missile. The critics who claim not one Patriot ever hit a SCUD are right, and simultaneously wrong. The Patriot missiles, all versions of them, are NOT designed to make direct impacts on ballistic missiles.

They do what the majority of missiles do, deliver a lot of high speed shrapnel close enough to the target to damage it sufficiently to cause it to either go off course and crash or break up and crash. (Same thing that BUK missile did to that Malaysian Airlines plane.)

The Patriot missiles performed that task very well, with the exception of the incident referenced in this article. SCUD missiles that had Patriots fired at them did not hit their intended targets.

What I heard at the time of this was that the firing computers were unable to compute which one had the best firing solution and so “confused” that none of them with a radar lock on the missile fired. The fix was to have *every* Patriot battery with a lock on a SCUD launch against it. Having just the one battery closest or with the best trajectory etc launch was for conserving the missiles.

On second look, I read that wrong. I still think it’s a wacky number format that seem to have been created by someone with much less experience than the people who created the IEEE float spec. Anyway, here’s what appears to be the point of this format:

Precision in the bit length that is unused in the exponent can be moved to the fraction and vice-versa.

The possibility for smaller sizes than the maximum is a red herring and functionally useless in an actual bare-metal program. Possibly useful on wire or disk.

Given that my suggestion would be this: a 80-bit format like long double, with a sign-bit, then 6 bits to represent how many more than 9 bits are needed to represent the exponent, then all of the last 64 bits that aren’t stolen for the exponent are used for the fraction. The format could be tweaked to better adjust to word boundaries. Maybe 96-bits with 7 bits giving absolute size of the exponent, and values of the seven bits beyond 88 being used to indicate error conditions beyond infinities and NANs. Maybe the number format can be reinterpreted then to indicate infinite length, and maybe the bit upon which the mantissa starts repeating if determined.

The short of it is, using bits where they do the most good is a great idea. Having a number format that can’t be stuck in arrays or needs to be on the heap is really, really stupid though. A fixed size format then, which makes the second size parameter redundant as it can be determined from the other one by simple arithmetic if the length is fixed.

RAM is functionally a disk nowadays, so it’s definitely not a red herring. You’d probably get more benefit for less cost by just going “halfway” and implementing a float whose fraction/exponent sizes can vary, and hardware accelerating operations between them.

in before “not a hack”

What caused the first Ariane 5 to go awry wasn’t caused by poor handling of floating point numbers. It was caused by improper systems testing. The 5’s flight systems were never ground tested in an “all up” simulation. In every test, some part of the hardware was simulated, with its outputs faked and never going out of the expected ranges. They also never tested with parts from the Ariane 4 that for some reason were going to be installed, but unused, in the Ariane 5.

The root cause of the failure was a piece of equipment that shouldn’t even have been installed because its output wasn’t used by the flight control. It was part of the pre-launch stability control which monitored the on-pad sway of the rocket. The use of it was to control the engine ignition so that it wouldn’t light up unless the rocket was perfectly upright. (Essentially like how naval gunnery computers delay firing until the barrel is aimed correctly in rolling seas.)

Upon ignition, that part’s job was done, but in the Ariane 4 it was left running for some seconds after launch, shutting off before the rocket started the gravity turn.

In the Ariane 5, the pre-launch stability monitoring was handled by other components but this Ariane 4 component was installed anyway, and turned on, despite its output being unused.

Unfortunately the 5 was a faster rocket and reached gravity turn altitude *before the unused part shut down*. That part then went “Oh shit! Falling over!” and puked garbage data onto the system bus, first crashing the backup flight control, then soon after the primary. The primary attempted to fall back to the already crashed backup flight control and… rocket does a Catherine wheel.

Had a full test of the hardware been done, the problem with the unused component most likely would have been discovered. There would have been facepalms and whatever is French for “Why the hell are we installing and activating this expensive part the control system doesn’t actually use?”.

What’s very crazy is why in the Ariane 4 that component wasn’t shut down at the instant of ignition or just one second or less after? Once the rocket ignited its job was done. Had it been setup that way, the Ariane 5 rockets would have continued to carry that expensive and useless component without a problem.

I suspect the mfgr of that particular component had a “friend” in the government who was able to require the component to be included in the rocket. (tongue in cheek) During WWII the mfgr of defective torpedos had a US Senator (from Conneticut?) who kept that company on the Navy purchases. The USN could’ve sank a lot more enemy ships and sank them sooner, if they didn’t have racks full of torpedos that passed harmlessly under the enemy ships.

From the little that I know, I think that the “unums” solve a few problems that the “inventor” sees as a problem. But if it can represent 1/10 exactly, then it can’t represent some other numbers exactly that it can’t. Even if a bit signifies “not exact”, what use is that? In many cases, say the example where the time was not exact, a “not exact” solution was thought to be “close enough” by the programmer. So even if the number format would have indicated “not exact” and that this would have been signaled as an exception while debugging, then the programmer would have said: I know it’s not exact, and that’s fine.

Also, things can go awry after a while. Say if you add “1/16th” of a second to a floating point “time” variable. A 32-bit float will have a 23-bit mantissa that will eventually overflow. (if that takes ages, I should’ve said 1/1024th in the 2nd sentence). So software using unums would then have the option: “crash the program” (because during testing no inexact results were seen), or “continue with inexact results” (that were never tested during development). And then you have the same situation as with arianne 5.

If unums are 40 bits, then they can represent (a maximum of) 2^40th different numbers. If some of those are not representable by a float, then a 40-bit float will also have numbers that cannot be represented by an unum. Sometimes having one number that can be represented may “save the day” while at other times having a different number being able to be represented is essential. Unless you really know what you are doing, you can’t predict which one will save the day. And if you know what you are doing, all this is moot anyway.

Brian, I’m curious as to the source of your information for the article, specifically the part about the missed Scud intercept. Would love to bounce that against 1st hand info.

The GAO report…

“The range gate’s prediction of where the Scud will next appear is a function

of the Scud’s known velocity and the time of the last radar detection.

Velocity is a real number that can be expressed as a whole number and a

decimal (e.g., 3750.2563…miles per hour). Time is kept continuously by

the system’s internal clock in tenths of seconds but is expressed as an

integer or whole number (e.g., 32,33, 34…). The longer the system has

been running, the larger the number representing time. To predict where

the Scud will next appear, both time and velocity must be expressed as real

numbers. Because of the way the Patriot computer performs its calculations

and the fact that its registers4 are only 24 bits long, the conversion of

time from an integer to a real number cannot be any more precise than 24

bits. This conversion results in a loss of precision causing a less accurate

time calculation.”

Sorry if I sound dumb ( because I am), but isn’t this Chaos theory?

No

Hmm…

See: Entropy

Thanks, thought it looked familiar.

Check out Modal Interval Arithmetic and a company called Sunfish Studio. This is the BETTER math!

Back in the bad old days, before SSE, programmers on Intel hardware used something called x87. All floating point registers were 80-bit, and all calculations were done on that format. If you wanted to use 32-bit floating point (what we now call “binary32”), then this was a storage problem; the number would be converted when loaded from or stored to memory.

“Well,” many programmers said, “having more bits of precision for intermediate values makes the final answer more precise. So this has to be a good thing.”

Well… no it wasn’t.

The relative precision loss of an individual operation can be expressed as a number, known as the machine epsilon. One thing that numeric analysts rely on is that the machine epsilon is constant. That’s how we measure the cumulative effect of doing a bunch of floating-point operations so we can understand the stability and robustness of an algorithm as a whole.

The main practical effect of using a smaller epsilon for intermediate operations isn’t that the precision loss was improved. The main effect was that the precision loss was less predictable, since it depended on things like how the compiler did register allocation. It was much harder to do reliable numeric analysis on x87, because you could never be certain how an algorithm would be realised.

This proposed “improvement” would make that situation even worse, because now the machine epsilon is completely dynamic.

IEEE-754 is nowhere near perfect, and I hope it’s replaced with something better some day. This is not better.

So – if I have a 32-bit float, and want to use pi.

If I just say 2^24 = 16,777,216 and set the “fraction size size” to 24, I will have pi to over 16 million places, right?

And somehow my 32-bit float magically “knows” what those 16 million places of pi are?

I think I’ve misunderstood something.

Think of it as an honesty layer. If you are doing the calculation ” pi*r^2 ” but you only input the r to an exact precision of ” 2 ” and pi to a precision of “3.14(…)” your answer should be “12.56(…)”. By pretending that more digits somehow gives you an exact answer, you negate the ability to know how much you know!

As someone with an interest in math, and as someone who went to the trouble to learn 80×87 FPU assembly to do floating point the “real way”, I have the following first impressions:

1. It’s really annoying that there is no freely-downloadable PDF paper with enough implementation details on this idea. That one at ieee.org above is NOT “tons more technical.” So somebody’s going to have splurge money on the book.

2. “What would Kahan do?” I reserve judgement until an expert on the level of William Kahan has examined this. He’s the one who helped invent IEEE 754, and has LONG experience with all the tradeoffs, traps, and gotchas of various floating point methods and failures. And no compiler or math package–no, not even MatLab–emerges untainted. And, no, interval arithmetic won’t save you. Just reading through his various cases at http://www.cs.berkeley.edu/~wkahan/

…is enough to convince me that he’s the one qualified enough to catch any weaknesses, because I know I never could! Of course, he’s practically retired now.

Oh, and Kahan himself brings up a much better example of a fatal floating point disaster: Air France flight #447 that went into the ocean after airspeed sensors failed.

“Bereft of consistent airspeed data, the autopilot relinquished command of throttles and control surfaces to the pilots with a message of “Invalid Data” that DID NOT EXPLAIN WHY.

The three pilots struggled for perhaps ten seconds too long to understand why the computers had disengaged, so the aircraft stalled at too steep an angle of attack before they could institute a standard recovery procedure. ( 2/3 throttle, stay level, regain speed)”

(Kahan is an advocate for numerical programming that intelligently USES the concepts of Infinity and NaN such as choosing your response by flags, rather than just “throwing up your hands” by throwing a hard exception and stopping the calculation cold.)

Any opinions on symbolic computing as a partial solution to the problem? I realise it isn’t as fast, but you can always throw more computing power at it, plus how many critical systems need to be both fast and efficient while remaining invulnerable to quantisation errors? There must be a lot of stuff where you can say that speed is secondary to reliability. Even if the symbolic stuff is used to give the system a fall back to get computations on track when the floating point stuff barfs out an error. A sort of “Hang on while I check that on paper” type error handler that can initiate a “Nah, start again from here” process to get the faster code on track again.

The theory of transcendental numbers is formally undecidable, so that’s a nonstarter. If you limit it to algebraic numbers, things are better. The theory of real closed fields is decidable. The decision problem is known to be NP-hard, PSPACE and DEXPTIME. So, yeah, “it isn’t as fast” is putting it mildly.

I suppose ultimately it’s an insoluble problem. You can cover a whole shitload of contingencies, but there’s always one you won’t think of until it actually happens.

Besides that, there’s a feature of human minds called something like “risk normalisation”, where, when extra safety features are implemented into something, people feel safe enough to take riskier actions. So the overall risk stays the same. This might happen in programmers and the people who assess safety-critical systems.

We could look at it from the other side, all the thousands of flights that DON’T crash. It’s a hell of a complicated system, and distributed, there’s a lot of room for chaos to creep in. They do a pretty good job really.

http://www.youtube.com/watch?v=jN9L7TpMxeA (kahan challenges at ~30min)

There is a chapter in the book called “The wrath of Kahan” – and John thoroughly discussed these ideas with him directly in 2012. Impressively, Kahan’s traps and failures are resolved within the unum system. With over a dozen references and explanations of what pitfalls Kahan has laid out and how to deal with them.

One industry that does seem to churn out lots of “new” (at least new-ish?) silicon on a regular basis, and may possibly benefit from unums would be the professional series of graphics cards (Like the Nvidia Quadro or AMD FirePro series of GPUs). As a matter of fact, if you could get better performance/speed out of unums over double-precision floats, then you might see Nvidia and AMD focus on a single line of cards that can not only handle the high speeds required of a gaming card, but also the high precision of the workstation-class GPUs.

This kind of thing is why the Ada language was invented, standardised and adopted widely as a language for integrity critical systems.

I think I see the point, but I don’t think this will be a practical replacement for general purpose calculation with real numbers. Almost any calculation with floating point numbers will result in a loss of precision: that’s part of the reason why rounding is such an important part of floating point calculations. So I can see this being used for specialist calculations or processors, but not general use.

I do like the idea of indicating that error has been introduced, but I don’t think Gustafson’s version is the best way to do this.

Also, whatever brownie points he earned for introducing a new idea, he loses for not including some worked examples contrasting his new method with traditional floating points. Simply showing an equation and the two different answers is just amateurish, and without that he has NOT earned the right to demand $60 just to share his idea before the programming community even decides if they consider it practical or not.

C99 provides functions for floating point exceptions, one of which is FE_INEXACT. The functions feclearexcept() and fegetexceptflag() allow clearing and testing of this exception flag around computations.

This is much more efficient that the ubit too since you can just check the flag after some computation, and don’t need to store the ubit inside the variable, wasting storage.

Other exceptions that can be tested in the same way are FE_OVERFLOW and FE_UNDERFLOW, and it’s clear to see how these bits, together with FE_INEXACT, can efficiently map to a status register in the floating point hardware.

Perhaps “The End of Error” was published before C99 mandated these, but it seems out-dated now.

Re Patriot clock problem – seems to me to be the old Hardware V Software problem. Why not simply read a hardware maintained clock when you need to know the time in a time critical system than trying to maintain it in software with precision problems over time (just 100 hours to failure in that example). Don’t you simply just want to know the time ??? Why not look at the clock even if only occasionally to “ground truth” your current computed value? Simple hardware V complex software – Shouldn’t we just use the right solution/s for the problem? Surely hardware and software both have a role to play.

Or just introduce fail safes into a system so they don’t accumulate error? Like resetting the system clock every few hours?

Why does he feel it necessary to use a bit to indicate positive values rather than negative? The necessary carry when doing a subtraction is a bit weird. 10101 – 10011 necessitate a carry in the msb to maintain “sign”. Hardware with no SUB instruction seem to have problems as well. In other words you would need dedicated silicon to deal with this when the previous method is “free”. Of course there is silicon added to handle the negative sign, but I can only recall one or two instructions (such as on the AVR) intended to explicitly preserve the msb. All the others are “natural” instructions that operate as they should. In addition, the beauty with the current method is that 0x00 == 00000 == 0. Specifying that 01111 is 0 seems messy. Especially with conversion when communicating with the outside world. What would happen if a port is tied to a critical system that expects 0’s on all pins for a safe state? Necessitate the use of macros so the programmer doesn’t get confused? Oh wait… macros are bad…. aren’t they?

The proposed float method is problematic too since it too would require additional logic to implement for each store value. The current implementations uses many of the same instructions that I can use for other portions.

The U flag is a nice idea though. I can see that as being useful to streamline checks during operations much like an overflow flag. Might save generating a few lookup tables for special use cases.

A sign bit can help avoid numerical bias.

Wasn’t about the sign bit itself but the implementation. Using the msb to indicate “plus” is a bit that has contradictory behavior to all other bits.

Why not design your algorithms so they are not affected by cumulating rounding errors?

Either use integers and no divisions for things that can’t have rounding errors, or use sufficient bits for the required result accuracy, with an algorithm that does not accumulate errors.

There is a rounding error in this article, as pi is 3.141592653589793…, and so after rounding it up it becomes approximately 3.141592654, not 3.141592653

Right. You’re exactly proving the point. Unum is stating a truncation with an elipsis is a different piece of information than rounding up to the next digit. So if you round up, you’ve literally just presented an inaccurate number, whereas if you show the string could (or should) continue you are still showing a correct number.

To be fair to Raytheon and Patriot, the problem had been identified and a software patch was made, but due to the red tape involved in getting software patches out to military systems on an active battlefield, it was not able to be applied in time to prevent the tragedy.