[George Hotz], better known by his hacker moniker [GeoHot], was the first person to successfully hack the iPhone — now he’s trying his hand at building his very own self-driving vehicle.

The 26-year-old already has an impressive rap sheet: he was the first to hack the PS3, and he got sued for it.

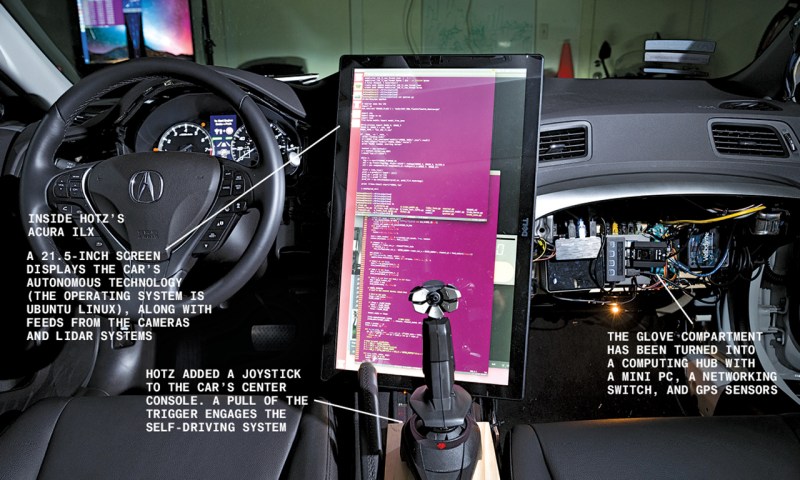

According to Bloomberg reporter [Ashlee Vance], [George] built this self-driving vehicle in around a month, which, if true, is pretty damn incredible. It’s a 2016 Acura ILX with a lidar array on its roof, as well as a few cameras. The glove box has been ripped out to house the electronics, including a mini-PC, GPS sensors, and network switches. A large 21.5″ LCD screen sits in the dash, not unlike the standard Tesla affair.

Oh, and it runs Linux.

Like Tesla’s auto-driving feature, it’s designed for highway driving only, so Google still has the advantage on busy city streets. Unfortunately, Bloomberg doesn’t allow embedding, so you’ll have to head over to their site to see the full video.

Pretty damn impressive.

[Thanks Christian!]

Is it legal to run an autonomous vehicle on the road?

A DIY autonomous vehicle, I mean. Obviously there are Teslas already doing this on the road today.

It depends on where you live. Some states in the U.S. have already passed legislation. Some will flat-out tell you “NO” even if there is no law against it. For example, Nevada allows autonomous vehicle testing provided you have the proper license. It’s still a gray area that states are just beginning to address, though. I expect it will end up just like the new FAA rules requiring registration for RC aircraft/drones: you’ll probably just need to register, or pass a different “autonomous operator driving test”, so the feds can quickly ID you when your vehicle accidentally crashes into the White House lawn. ;P

More on U.S. State laws regarding autonomous vehicles here: http://www.ncsl.org/research/transportation/autonomous-vehicles-legislation.aspx

Here’s the money-line: “Dude,” he says, “the first time it worked was this morning.”

lol, geohot

This

Programming and driving an autonomous car isn’t that hard if you only drive on a highway (not that hard compared to inner-city situations, anyway). The problem isn’t the 99% of the time when your sensors work well and nothing unusual occurs, but the 1% when the camera is blinded by the sun or a sensor returns false signals. It’s really impressive for a single person to achieve, but I think there’s still a long way to go from driving on a highway to driving on an icy city road with pedestrians possibly crossing.

Dude… “it’s not that hard,” you say? LOL. Do you know what kind of algorithms are behind an autonomous car? I promise that you won’t understand even 1% of them, even if you spend 99% of your remaining lifetime trying.

In Udacity’s Artificial Intelligence for Robotics course, Sebastian Thrun explains very clearly how to use most of the algorithms you need to build a self-driving car.

Really understanding the guts of algorithms like the Kalman filter is a very different thing, but that’s no more necessary than knowing how a microprocessor was designed in order to use one.
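To illustrate how little code it takes to *use* a Kalman filter, here is a minimal scalar sketch. It assumes a single quantity that stays roughly constant (say, one noisy range reading) and that the noise variances are known; the class name `Kalman1D` and the noise values are illustrative choices, not taken from the course or from Hotz’s car.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    """Scalar Kalman filter for a quantity assumed to stay roughly constant.

    x: current estimate        p: variance of that estimate
    q: process noise variance  r: measurement noise variance
    (All values are illustrative assumptions.)
    """
    x: float
    p: float
    q: float
    r: float

    def step(self, z: float) -> float:
        # Predict: the assumed model is "x stays the same",
        # so only the uncertainty grows by the process noise.
        self.p += self.q
        # Update: weight the measurement z by the Kalman gain k,
        # which is large when our estimate is uncertain relative to the sensor.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k
        return self.x


if __name__ == "__main__":
    # Start with a vague prior (large p) and feed in noisy readings.
    f = Kalman1D(x=0.0, p=1000.0, q=1e-4, r=0.5)
    for z in [1.2, 0.9, 1.1, 1.0, 0.95]:
        print(round(f.step(z), 3), round(f.p, 3))
```

With a vague prior and tiny process noise, the estimate converges toward the average of the readings while its variance shrinks below the sensor’s own; the hard part in a real car is the multi-dimensional motion and sensor models, not these few lines.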

Do you know?

Building a simple autonomous car that works in most cases (assuming you only build the sensor system and the high-level control algorithms) is actually not that hard. If I had lots of money and time on my hands, I could most likely develop one. It won’t be good, but it will work for 95% of the cases. We built a simple auto-braking system for my bachelor thesis (a half-year project), and we had no idea what we were doing at the time. :P

There are two main tricky parts with autonomous cars:

1. Your system has to work all the time, on every occasion and in every situation. 99.99% might not even be sufficient. Say your car has an error once every 100 000 km. Now imagine that you sell 100 000 cars. Statistically, for every km each car drives, one car somewhere in the fleet will fault… (a simplification, of course, but it’s the easiest way to calculate)

2. You have to be able to sell it. That is, the car has to actually look nice and not be too expensive. Google might sell a few of their cars, but they have a huge, ugly lidar on their roof. That lidar alone costs about as much as a nice car.
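The fleet arithmetic in item 1 can be checked in a few lines. This sketch assumes faults are independent and occur at a constant average rate per km, and that every car drives the same distance; the constant and function names are illustrative, not from any real reliability tool.

```python
# Fleet-wide fault arithmetic from item 1 above.
# Assumptions: faults are independent, occur at a constant average rate
# per km driven, and every car in the fleet drives the same distance.

KM_PER_FAULT = 100_000   # one error every 100 000 km, per car
FLEET_SIZE = 100_000     # number of cars sold


def expected_fleet_faults(km_per_car: float) -> float:
    """Expected number of faults across the whole fleet when each car drives km_per_car."""
    total_km = FLEET_SIZE * km_per_car
    return total_km / KM_PER_FAULT


def km_per_car_between_fleet_faults() -> float:
    """How far each car drives, on average, between two faults anywhere in the fleet."""
    return KM_PER_FAULT / FLEET_SIZE


if __name__ == "__main__":
    print(expected_fleet_faults(1.0))          # 1.0 fault per km driven by each car
    print(km_per_car_between_fleet_faults())   # 1.0 km
```

Scaling either number up shows why “four nines” per car is not much comfort once the cars are sold by the hundred thousand.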

Correction: to build an autonomous car, you don’t have to be able to sell it. You only have to build it. (This isn’t “sell-your-product-4-big-bucks-aday.com”)

Four nines. It has to have a success rate of 99.99% to be successful. Will never happen…

So many people have said that about things our children are now doing. Why bother with a hacker site if you don’t believe in the power of progress? As we advance we become more capable, and eventually all technology trickles down to where children understand it and master it easily. Give it 50 years and children will build these at camp.

“Never”: people throw that word around too much.

Are YOU four nines? Humans are terrible at mundane tasks requiring complete attention.

I haven’t been killed in a car wreck, I am at 100% thanks.

I would like to hear more about the technical side of this project. New Acuras already have adaptive cruise control and lane keeping assist[1]. I am skeptical that he’s implemented his own autonomous driving system in such a short amount of time. My guess is he is relying significantly, if not entirely, on the features already built into the car.

[1]https://www.youtube.com/watch?v=cxFAxU-tj4Q

No, the entire point of the whole thing is to beat Mobileye, the current industry leader in lane-following tech.

Mobileye uses lame image recognition for road/sign detection; Dave did a teardown of one of the Mobileye units some time ago:

https://www.youtube.com/watch?v=lxqDR2-DrnU

Damn, all these people asking for technical details. Either read the article or watch the video. It gives enough detail for you to have a pretty good idea of what’s going on. If you watch, he is obviously not using the built-in features.

I read the article and watched the video. While I am not very familiar with self-driving tech, I’ve played around with various machine learning algorithms and know from experience how difficult a project like this can be. And this article was very light on details (a lot of hand-waving about ‘if-then’ statements and deep learning). I realize a Bloomberg article isn’t the place for deep technical discussions. But what I want to know (preferably from someone with experience in the field) is whether this passes the sniff test. Does 10 hours seem like enough time to get a lane tracking algorithm to work? I don’t doubt that he managed to hack into the CAN bus and can control the car. But training an algorithm that can safely drive the car in such a short amount of time, from scratch, seems fishy, especially considering that the car already has some of those features built in.

I don’t doubt 10 hours is enough. Think of how many millions of images/lidar returns there are to process and learn from in that amount of driving time. How many data points are recorded in a single minute? When considering the amount of data that can be extracted from six cameras + the lidar for that amount of time, I’d think it would be plenty to learn the basics of staying in a lane and staying away from the car in front of you.

Now all the car needs is one of these: http://www.sun-innovations.com/index.php/products/fw-hud

Where is the technical info?!

I think it will be hard for self-driving cars to become a reality. Who is really responsible for the car? The company or person who programmed the car has the liability, and even if the cars are safe, what would the safety record have to be? If one out of a billion people dies because of the car, would any company take responsibility? As an owner, I wouldn’t take liability, because I didn’t program the self-driving car, so it basically becomes a matter of product liability law.

As opposed to the thousands of people who die on the roads every year right now? It’s really easy to completely ignore the current statistics and focus on hypothetical future deaths instead of the massive reduction in them we’d have if we moved wholesale to automated driving systems, which have already been proven to be much safer drivers than humans.

Right now, most of the time *nobody* takes financial responsibility for an accident, and it falls upon insurance. Eventually, those insurers will insist on using the auto-driving facility as a condition for paying a claim, or make your premiums extremely high.

“instead of the massive reduction in them we’d have if we moved wholesale to automated driving systems – which already proven to be much safer drivers than humans.”

That’s begging the question. Nothing of the sort has been proven, since nobody’s actually driving these cars in anything other than fair weather and good conditions in sunny California.

The current crop of self-driving cars are all line-following robots that don’t have enough AI to tell a trash bin from a pedestrian 70% of the time. There’s a snowball’s chance in hell that these things, as they currently are, would save anyone’s life if they were deployed on a fleet scale.

The Google self-driving car has already racked up millions of autonomously driven miles. It has had accidents. In EVERY SINGLE CASE, the accident was the fault of the human driver in the other car.

I don’t know what you want for proof, because it’s obvious whatever evidence is given you’ll just say “oh, but it wasn’t in this other specific set of conditions”.

Why would the owner take liability for a self-driving car when he doesn’t drive it or cause the accident?

Why would Google or another company take product liability for thousands of cars on the road? A couple of accidents would make them unwilling to keep paying for someone else’s.

The law has to change before self-driving cars can be a reality, and we know how fast Congress works.

That’s the point. You’re assuming there will be lots of accidents that will be the fault of the autonomous car, when that’s a faulty premise. Autonomous cars have driven millions of miles already, and at least for Google’s car, the only accidents it has had were caused by the human driver in the other car.

As I said, at some point the insurance companies will make it more expensive to cover a car that *isn’t* being driven autonomously. That’s a market force, and Congress is irrelevant.

It’s somewhat unfortunate that super geniuses (and yes, I’d consider Hotz one) often don’t make good team members in a company (often due to neurotic quirks) or that companies don’t really go out of their way to support them in ways that make them want to stay. I suppose they may be better off doing their own thing, as long as they’re willing to delegate certain tasks they shouldn’t waste their time with (company management, sales, etc) to others. The world needs as much effort as it can get from the highly gifted, and that means giving them the support they need to thrive.

Embeddable video: https://youtu.be/KTrgRYa2wbI

Ubuntu, African word meaning can’t configure Debian.

In all seriousness, though: nice car and interesting implementation. I look forward to when it’s using a less end-user-oriented Linux flavor, however.

Are you a bot? Because this generic line pretty much looks like mindless babble.

I doubt that he built the self-driving car within one month. I doubt he could even build a “non”-self-driving car within one month. I even doubt he built it. It’s not that hard to add Chinese products to a car. It’s also not hard to sniff, replay and modify some CAN messages using those products. And it’s not that hard to write some piece of software to make it look good.

Okaaaaay Edgar – what have you done?

I think the kid has an obvious flair for self-promotion, but that does not mean what he does is “easy”. I am not as impressed by his pointless résumé of aborted involvement in commerce/tech, or his claims that he “knows everything” about AI research, but he clearly is not totally full of sheet.

You, on the other hand, have made a stream of likely incorrect statements. Why? Bitter resentment?

Finally, a self-driving car that isn’t hardwired to pull over for flashing blue lights! I hate not being able to race the po-po in my Tesla (that I don’t really have because I’m poor).

As long as he is testing for uncommon situations on a test track. While if-statements can kill because of unexpected conditions and exceptions, a lack of thorough (AI) training can also kill. For example, what happens when a woman posts on Facebook that Pharrell’s song Happy makes her happy… while she is driving, and she crosses the median and hits someone else head on? (http://www.dailymail.co.uk/news/article-2614151/The-happy-song-makes-HAPPY-32-year-old-woman-dead-Facebook-post-driving-leads-crash.html) The correct response would be to attempt to avoid the oncoming car if possible, whereas, given the information available, the likely result is that the car would just stop. On the other hand, most drivers faced with such situations don’t do much better.