Researchers from VUSec found a way to break ASLR via an MMU side-channel attack that even works in JavaScript. Does this matter? Yes, it matters. A lot. The discovery of this flaw, together with a practical implementation, is important for two reasons: what it means for ASLR to be broken, and how the MMU side-channel attack works inside the processor.

Address Space Layout Randomization (ASLR) is an important defense mechanism that can mitigate known and, most importantly, unknown security flaws. As the name implies, ASLR makes it harder for a malicious program to compromise a system by randomizing the addresses at which a process's code and data are placed in memory each time the program is launched. This makes it unlikely that an attacker can reliably jump to a particular exploitable function in memory, or to a piece of shellcode they have planted.
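To make the idea concrete, here is a minimal Python sketch of what "randomizing the load address" means. It assumes 4 KiB pages, and the randomized region and amount of entropy are hypothetical values chosen for illustration, not taken from the paper or from any particular operating system. It also shows why the amount of randomness matters: with n bits of entropy, a single hard-coded guess succeeds with probability 2^-n.

```python
import secrets

PAGE_SIZE = 4096               # assumed 4 KiB pages
REGION_START = 0x7E0000000000  # hypothetical start of the randomized region
ENTROPY_BITS = 28              # hypothetical number of randomized address bits


def randomized_base(region_start: int, entropy_bits: int) -> int:
    """Pick a page-aligned load address from 2**entropy_bits possible slots."""
    slot = secrets.randbelow(2 ** entropy_bits)
    return region_start + slot * PAGE_SIZE


def guess_success_probability(entropy_bits: int) -> float:
    """Chance that one hard-coded address guess matches the randomized base."""
    return 2.0 ** -entropy_bits


base = randomized_base(REGION_START, ENTROPY_BITS)
print(f"library loaded at {base:#x} this run")
print(f"one blind guess succeeds with p = {guess_success_probability(ENTROPY_BITS):.2e}")
```

Each launch yields a different base, so an exploit that hard-codes an address will usually crash the target instead of taking it over. An attacker who can leak the base, as the attack described here does, no longer has to guess.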

Breaking ASLR is a huge step toward simplifying an exploit and making it more reliable. Being able to do it from within JavaScript means that an exploit using this technique can defeat ASLR in a web browser running JavaScript, which is the most common configuration for Internet users.

ASLR has been broken before in particular scenarios, but this new attack highlights a more profound problem. It exploits the way the memory management unit (MMU) of modern processors uses the processor's cache hierarchy to speed up page table walks, which means the flaw is in the hardware itself, not in the software running on it. Software vendors can take some steps to mitigate the issue, but a full and proper fix will require replacing or upgrading the hardware.
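A rough sketch of why a page table walk can leak address bits, assuming x86-64 four-level paging with 4 KiB pages, 8-byte page table entries, and 64-byte cache lines (standard parameters, but our assumption here rather than something quoted from the paper). Each level of the walk uses 9 bits of the virtual address to select an entry. Because one cache line holds 8 entries, knowing which line of each page-table page the MMU touched reveals the upper 6 of those 9 bits. Roughly speaking, the attack observes these accesses through cache timing; the timing measurement itself is not modeled here.

```python
# Assumed: x86-64 4-level paging, 4 KiB pages, 8-byte entries, 64-byte lines.
LEVEL_SHIFTS = {"PML4": 39, "PDPT": 30, "PD": 21, "PT": 12}
ENTRY_SIZE = 8
CACHE_LINE = 64
ENTRIES_PER_LINE = CACHE_LINE // ENTRY_SIZE  # 8 entries share one cache line


def walk_indices(vaddr: int) -> dict:
    """The 9-bit table index the MMU uses at each level of the page walk."""
    return {level: (vaddr >> shift) & 0x1FF for level, shift in LEVEL_SHIFTS.items()}


def touched_cache_lines(vaddr: int) -> dict:
    """Which 64-byte line (0-63) of each page-table page the walk reads."""
    return {level: idx // ENTRIES_PER_LINE for level, idx in walk_indices(vaddr).items()}


addr = 0x7F1234567000  # arbitrary example address
print(walk_indices(addr))         # {'PML4': 254, 'PDPT': 72, 'PD': 418, 'PT': 359}
print(touched_cache_lines(addr))  # {'PML4': 31, 'PDPT': 9, 'PD': 52, 'PT': 44}
```

The real attack has to solve further problems that this sketch ignores, such as measuring the cache accesses reliably and telling the paging levels apart.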

In their paper, researchers reached a dramatic conclusion:

“… The conclusion is that such caching behavior and strong address space randomization are mutually exclusive. Because of the importance of the caching hierarchy for the overall system performance, all fixes are likely to be too costly to be practical. Moreover, even if mitigations are possible in hardware, such as separate cache for page tables, the problems may well resurface in software. We hence recommend ASLR to no longer be trusted as a first line of defense against memory error attacks and for future defenses not to rely on it as a pivotal building block.”

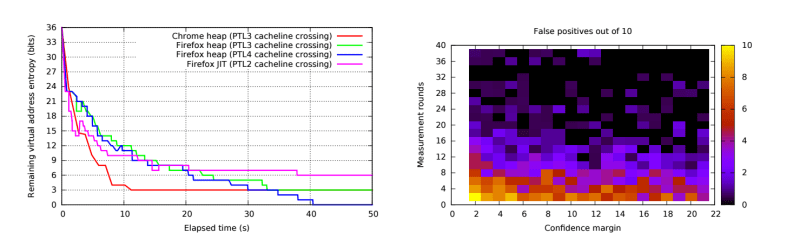

All the details can be found in the research team’s paper. They also published videos of demo attacks on ASLR; the fastest one took only 25 seconds.

ASLR was only ever a band-aid on top of the wound that is shoddy programming, and all this talk of replacing hardware to fix it is missing the point. Perhaps now software vendors – especially the browser makers – can be convinced to focus a little more on their software’s integrity instead of rushing out the next cool feature-du-jour.

I can dream, can’t I?

Sad to think that software security is becoming enough of a concern for everyday users that software vendors will eventually have to start boasting about it as a feature instead of treating it as a priority, provided they can actually back up their marketing claims. Hopefully SecOps researchers can continue to help keep these companies honest with their reports.

dream on friend…

I think that in principle what you’re talking about makes some sense. But in practice, mistakes happen even in projects that don’t include “shoddy programming”. For this reason, we need multiple tiers protecting against the most common types of exploits (buffer overflows for example). This was one of those layers and this shows a weak spot in that defense.

ASLR is not a protection, it is obfuscation. Security by obscurity NEVER works.

And what is a password?

A level of authentication, unrelated to code integrity.

No matter how hard you dig into a disassembled binary, you won’t magically find the user’s password.

ASLR is also unrelated to code integrity.

How do you suggest code integrity stops someone detecting cache misses?

Anybody holding that position has no freaking clue about security. When security analysts say that security by obscurity should be avoided, they mean that a system protected _only_ by obscurity should be avoided; best practices for highly secure data, installations, etc. include obscurity as a valid protective element.

IOW, learn about security instead of spouting sound bites.

Exactly. Ultimately, any hidden detail, such as passwords, game plans, or network layout, is security by obscurity.

It’d be foolhardy to give any of those away.

Valid is a strong word. Obscurity only prolongs the inevitable.

And Peter, passwords are not obscurity, unless some dingus hard-coded one. They are a form of authentication.

How often do you use one letter passwords?

Something that can be reliably bruteforced (not even mentioning actual clever bypasses) is the duct tape of security.

Well Mozilla is going at it pretty hard with Rust’s memory safety.

Ever heard of the Swiss cheese model of security? It’s impossible to make an impenetrable barrier. We need things like ASLR, NX, and stack protection not because they are perfect but because nothing will ever be perfect.
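The arithmetic behind the Swiss cheese argument fits in a few lines of Python. The key assumption, flagged in the code, is that layers fail independently. The failure probabilities below are made-up illustrative numbers, not measurements of ASLR, NX, or stack protection.

```python
from math import prod


def breach_probability(layer_failure_probs):
    """Chance that every layer fails for the same attack.

    ASSUMPTION: layers fail independently, so the probabilities multiply.
    """
    return prod(layer_failure_probs)


# Hypothetical per-layer failure rates for three independent defenses.
layers = [0.1, 0.2, 0.5]
print(breach_probability(layers))

# A layer that is reliably defeated contributes a factor of 1.0:
# the remaining layers still count, but that one adds nothing.
print(breach_probability([1.0, 0.2, 0.5]))
```

The independence assumption is exactly what is disputed further down the thread: a deliberate attacker does not hit layers at random, so in practice the product is an optimistic estimate.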

Anything that can be defeated so readily is nothing at all.

You clearly have no idea what you’re talking about if you are reducing this wonderful attack to “nothing at all”.

I don’t reduce the attack, I reduce the protection.

Something defeated is not a link in a chain of protection as you seem to imply.

It’s like saying ‘my house has multiple layers of protection because first there is the air you need to pass through to get to the door…’

@Whatnot:

No, it is like your house having multiple layers of protection: first you have to get through a locked gate, then through a maze, and only after that do you reach your door. And don’t forget to disable the silent alarm.

Will you say the same thing in x years when AES is broken? Whether that be cryptographically or computationally, it’s inevitable. Just because something has the potential to be broken “easily” does not make it “nothing at all”. By the same premise, AES might as well be “nothing at all” because in, say, 10 years, it may be readily defeated. But for the last 20 or so years AES has been the cornerstone of encryption.

ASLR has been around almost as long as AES, and it has taken this long to find a reliable attack against it.

It’s not the first ASLR attack ever; it’s the first one(?) demonstrated running reliably inside a JavaScript virtual machine.

Once AES is broken it becomes nothing in terms of encryption, seems simple.

@Whatnot:

Regarding “broken AES”: the question would remain whether it was broken by 3 months of computation on the combined server power of the NSA or by a school kid’s gaming PC in, e.g., one day.

Almost any lock can be defeated; it is just a question of time.

Is AES likely to be broken?

I think mathematically it’s unlikely; we can chip away at it, reducing the effective security in bits.

But breaking it? Only if we ever work out how to unscramble an egg.

No, it’s not inevitable that AES will be broken. If there is a flaw in the design of AES that can be exploited, yes, it will be found sooner or later. But if there is no such flaw, 256-bit AES will not be broken by brute force. Why? Physics sets a minimum amount of energy needed to flip a bit. The total energy the Sun will emit before it burns out is not enough to run even a simple 256-bit counter through all of its possible states, and AES is more complicated than a simple counter.
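The energy argument can be checked with back-of-the-envelope arithmetic. This sketch assumes that each state change costs at least kT (Boltzmann’s constant times temperature), takes T ≈ 3.2 K (roughly the temperature of the cosmic background radiation), and treats the Sun as shining at its nominal luminosity for about 10 billion years. All of these are simplifying assumptions, not precise figures.

```python
BOLTZMANN = 1.380649e-23   # J/K (exact in the 2019 SI)
TEMPERATURE = 3.2          # K, assumed: roughly the cosmic background temperature
SUN_LUMINOSITY = 3.828e26  # W, nominal solar luminosity
SECONDS_PER_YEAR = 365.25 * 24 * 3600
SUN_LIFETIME_YEARS = 1e10  # assumed: ~10 billion years at constant output


def counter_energy(bits: int, temperature: float = TEMPERATURE) -> float:
    """Lower bound on the energy to step a counter through all 2**bits states,
    charging kT per state change (a simplified thermodynamic model)."""
    return 2 ** bits * BOLTZMANN * temperature


sun_total = SUN_LUMINOSITY * SUN_LIFETIME_YEARS * SECONDS_PER_YEAR
needed = counter_energy(256)
print(f"256-bit counter needs at least {needed:.1e} J")
print(f"Sun's lifetime output is about {sun_total:.1e} J")
print(f"shortfall factor: {needed / sun_total:.1e}")
```

Under these assumptions the counter alone needs on the order of 10^10 times the Sun’s total output. That is the point of the comment: brute force is ruled out by physics, so only a flaw in the design of AES could break it.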

The Swiss cheese model applies to natural, random problems. It fails badly in the face of willful attackers.

Software security is made by creating impenetrable barriers. There is no other way, as attacks are too cheap to be deterred by permissive ones.

There is no perfect, flawless code, so I see ASLR more like a helmet. Of course, it shouldn’t end up like skiers who start wearing a helmet and then go much faster than before because they feel safe and overconfident.

Harvard architecture? If data and programs are in separate places, would that help mitigate these things? Program-to-program branches would still be possible, but you couldn’t use a buffer overflow to inject a program.

I don’t know enough about this to know whether this makes sense.

https://en.wikipedia.org/wiki/Data-driven_programming

NX does this on modern machines. It marks data as “non-executable”.

But, as always, there are ways around it. And modern JavaScript engines use “just-in-time” compiling, meaning they need to write new program code on the fly: they generate data and then make it executable.

@Luke: https://en.wikipedia.org/wiki/Return-oriented_programming

Short version: you can’t change the code in a Harvard arch, but if you’re able to chain bits of pre-existing code (“gadgets” in the lingo) together into something useful, you can still run your own programs.
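A toy model of return-oriented programming in Python, meant to illustrate the idea rather than any real exploit. The “gadgets” are hypothetical stand-ins for short instruction sequences ending in `ret` (the comments name the x86-64 sequences they mimic), the addresses are made up, and the attacker controls only the contents of the stack. Each `ret` pops the next gadget address, so a list of addresses and values is enough to compute something the original program never intended.

```python
from collections import deque


# Hypothetical gadgets: each mimics a short instruction sequence ending in "ret".
def pop_a(regs, stack):    # like "pop rax; ret"
    regs["a"] = stack.popleft()


def pop_b(regs, stack):    # like "pop rbx; ret"
    regs["b"] = stack.popleft()


def add_a_b(regs, stack):  # like "add rax, rbx; ret"
    regs["a"] += regs["b"]


# Made-up addresses where these fragments already exist in the program's code.
GADGETS = {0x401000: pop_a, 0x401010: pop_b, 0x401020: add_a_b}


def run_chain(gadgets, stack_words):
    """Simulate returns: each 'ret' pops an address and jumps to that gadget."""
    regs = {"a": 0, "b": 0}
    stack = deque(stack_words)
    while stack:
        addr = stack.popleft()
        gadgets[addr](regs, stack)  # a gadget may consume more stack words
    return regs


# The attacker-controlled stack: gadget addresses interleaved with data.
chain = [0x401000, 40, 0x401010, 2, 0x401020]
print(run_chain(GADGETS, chain)["a"])  # 42
```

The chain only works because the attacker knows where each gadget lives. With ASLR, the keys in `GADGETS` would be shifted by an unknown random base and the hard-coded chain would jump to the wrong place (here, a `KeyError`), which is why leaking that base matters so much.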

If you can defeat it with JavaScript, then JavaScript has too many functions, and it’s a flaw of JavaScript, I would think.

Why does JavaScript have so much capability to defeat any and all protection anyway? Did the freaking NSA sponsor it?

It’s a remarkable attack to achieve in javascript.

But really, it’s more to do with the excellent JavaScript performance we have these days than with any feature.

We need something like JavaScript, and we need it to be performant.

So what should we do?

What they should do is make the functionality reflect where it’s used.

Same with other web plugins, incidentally, which all have a long history of seemingly adding functions whose primary use is to support hackers for some reason.

The problem is that the capabilities necessary to do useful things are also the capabilities necessary to produce a hack. Your suggestion is like saying that nobody should have knives in their kitchen because they might be used to hurt people.

JavaScript isn’t an excellent performer; hardware, bandwidth, and massive libraries have simply made JavaScript’s lousy performance less of an issue.

It’s honestly really fucking good.

https://benchmarksgame.alioth.debian.org/u64q/compare.php?lang=node&lang2=yarv

Really, REALLY good.

https://fir.sh/projects/jsnes/

Seriously JavaScript is no longer a joke.

https://bitwiseshiftleft.github.io/sjcl/demo/

I didn’t really believe it, since the last time I actually used it was in the IE6 days, when it was still interpreted. Recently I’ve been using it again (admittedly mostly via ClojureScript), and the JIT performance is amazing.

Great, now people can hack you in less than a millisecond. Good for the hackers, spooks, and advertisers, since they probably want to hack in bulk, and a million people times a second is nearly two weeks of waiting.

1) Number-crunching performance tests are no real benchmark of real-world performance. Number crunching is very easy to optimize with a JIT; it’s an area where JIT languages outperform C code on some tests.

2) The NES was already emulated on a 40 MHz 486, so that just shows that JavaScript is up to speed with a 486.

3) Just a variation on 1.

But in the end, performance usually doesn’t even matter, as 90% of your execution time is spent in 10% of your code. Making sure your code is maintainable is much more important. And if it is slow, find that 10% and fix it.
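For the “find that 10% and fix it” step, a profiler is the usual tool. Since this commenter writes mostly Python, here is a minimal sketch using the standard library’s `cProfile` and `pstats`; `hot_loop` and `cold_setup` are hypothetical stand-ins for real code.

```python
import cProfile
import pstats


def cold_setup():
    # Cheap work: not worth optimizing.
    return [1, 2, 3]


def hot_loop():
    # Deliberately expensive: stands in for the small part that dominates run time.
    total = 0
    for i in range(300_000):
        total += i * i
    return total


def main():
    cold_setup()
    hot_loop()


profiler = cProfile.Profile()
profiler.enable()
main()
profiler.disable()

stats = pstats.Stats(profiler)
stats.sort_stats("cumulative").print_stats(5)  # top 5 entries by cumulative time
```

Sorting by `"cumulative"` should put `main` and `hot_loop` near the top of the report, pointing straight at the code worth optimizing, while `cold_setup` barely registers.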

I’m just glad that they are fixing the language. Proper syntax for classes is really welcome for example: https://developer.mozilla.org/nl/docs/Web/JavaScript/Reference/Statements/class

(I write 80% of my code in python. Which isn’t fast. And it’s not important that it isn’t fast)

@daid303

If you’re not happy with benchmarks, real-world performance, or real-life computationally intensive applications, then what do you base your idea of performance on?

JavaScript has its shortcomings in syntax and its lack of namespaces.

But between Google Closure and the new JIT engines (like V8), the performance is amazing for well-written code (as always, you need good code).

It certainly outperforms python + pygame (I think that’s SDL1.1 accelerated) when you combine html5 and js in a modern browser.

@daid303

The NES was emulated *badly* on a 40MHz 486, with massive amounts of accuracy bugs. Cycle-accurate emulation of the NES requires closer to a 1GHz x86 CPU. But don’t let facts stop you from spreading your ignorant drivel.

If you actually looked into modern js performance you would be impressed.

http://www.quakejs.com/

That’s true for performance AFTER the initial compilation, but now look at the startup time and the memory footprint of your application, especially if it runs on a small embedded device. Compiled C code still has a long, long life on small embedded devices.

@Whatnot

I’m getting the impression you don’t actually know much about javascript or programming.

This is why the industry should have skipped NX, ASLR, cookies, and other stuff and gone to sandboxing a long time ago. Sandboxing is far easier to do, and all you have to protect are some APIs.

NX and ASLR are there to protect your sandbox, not to replace it.