Long before we started reporting on [Dan Kaminsky]’s DNS chicanery, he contributed a guest post about one of our favorite sources of new technology: SIGGRAPH. The stars have aligned again and we’re happy to bring you his analysis of this year’s convention. [photo: Phong Nguyen]

So, last week, I had the pleasure of being stabbed, scanned, physically simulated, and synthetically defocused. Clearly, I must have been at SIGGRAPH 2008, the world’s biggest computer graphics conference. While it usually conflicts with Black Hat, this year I actually got to stop by, though a bit of a cold kept me from enjoying as much of it as I’d have liked. Still, I did get to walk the exhibition floor, and the papers (and videos) are all online, so I do get to write this (blissfully DNS and security unrelated) report.

SIGGRAPH brings in tech demos from around the world every year, and this year was no exception. Various forms of haptic simulation (remember force feedback?) were on display. Thus far, the best haptic simulation I’d experienced was a robot arm that could “feel” like it was actually 3 pounds or 30 pounds. This year had a couple of really awesome entrants. By far the best was Butterfly Haptics’ Maglev system, which somehow managed to create a small vertical “puck” inside a bowl that would react, instantaneously, to arbitrary magnetic forces and barriers. They actually had two of these puck-bowls side by side, hooked up to an OpenGL physics simulation. The two pucks, in your hand, became rigid platforms in something of a polygon playground. Anything you bumped into, you could feel; anything you lifted would have weight. Believe it or not, it actually worked, far better than it had any right to. Most impressively, if you pushed your in-world platforms against each other, you directly felt the force from each hand on the other, as if there were a real-world rod connecting the two. Lighten up a bit on the right hand, and the left wouldn’t get pushed quite so hard. Everything else was impressive, but this was the first haptic simulation I’ve ever seen that tricked my senses into perceiving a physical relationship in the real world. Cool!

Also fun: This hack with ultrasonic transmitters by Takayuki Iwamoto et al, which was actually able to create free-standing regions of turbulence in air via ultrasonic interference. It really just feels like a bit of vibrating wind (just?), but it’s one step closer to that holy grail of display technology, Princess Leia.

Best cheap trick award goes to the Superimposing Dynamic Range guys. There’s just an absurd amount of work going into High Dynamic Range image capture and display, which can handle the full range of light intensities the human eye is able to process. People have also been having lots of fun projecting images, using a camera to see what was projected, and then altering the projection based on that. These guys went ahead and, instead of mixing a projector with a camera, they mixed it with a printer. Paper is very reflective, but printer toner is very much not, so they created a shared display out of a laser printout and its actively displayed image. I saw the effects on an X-Ray – pretty convincing, I have to say. Don’t expect animation anytime soon though.

(Side note: I did ask them about e-paper. They tried it – said it was OK, but not that much contrast.)

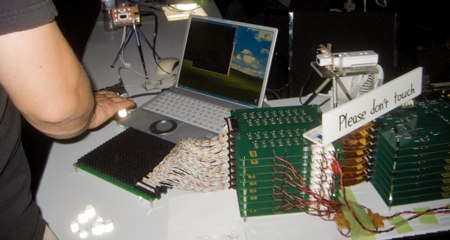

Always cool: Seeing your favorite talks productized. One of my favorite talks in previous years was out of Stanford – Synthetic Aperture Confocal Imaging. Unifying the output of dozens of cheap little Quickcams, these guys actually pulled together everything from Matrix-style bullet time to the ability to refocus images – to the point of being able to see “around” occluding objects. So of course Point Grey Research, makers of all sorts of awesome camera equipment, had to put together a 5×5 array of cameras and hook ’em up over PCI express. Oh, and implement the Synthetic Aperture refocusing code, in realtime, demo’d at their booth, controlled with a Wii controller. Completely awesome.
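For the curious, the core refocusing trick can be sketched as "shift and add": slide each camera's image in proportion to that camera's position in the array, then average. Whatever sits at the chosen depth lines up and stays sharp, and everything else smears out. This is only a rough illustration under simplifying assumptions (grayscale images as nested lists, integer pixel shifts, hypothetical names like `refocus` and `disparity`), not Stanford's or Point Grey's actual code.

```python
def refocus(images, offsets, disparity):
    """Shift-and-add refocusing sketch.

    images    -- list of equally sized grayscale images (lists of rows)
    offsets   -- (cx, cy) grid position of each camera relative to the center
    disparity -- pixels of shift per unit of camera offset (selects the focal depth)
    """
    h, w = len(images[0]), len(images[0][0])
    out = [[0.0] * w for _ in range(h)]
    for img, (cx, cy) in zip(images, offsets):
        sx, sy = cx * disparity, cy * disparity
        for y in range(h):
            for x in range(w):
                # Sample the shifted position, clamping at the image border.
                yy = min(max(y + sy, 0), h - 1)
                xx = min(max(x + sx, 0), w - 1)
                out[y][x] += img[yy][xx]
    n = len(images)
    return [[v / n for v in row] for row in out]


# Three cameras in a row looking at one bright point. The point lands one
# pixel further along in each neighboring camera (a disparity of 1).
views = [
    [[0, 0, 100, 0, 0, 0, 0]],  # camera at offset -1
    [[0, 0, 0, 100, 0, 0, 0]],  # center camera
    [[0, 0, 0, 0, 100, 0, 0]],  # camera at offset +1
]
cams = [(-1, 0), (0, 0), (1, 0)]

in_focus = refocus(views, cams, disparity=1)      # views line up on the point
out_of_focus = refocus(views, cams, disparity=0)  # point is smeared over 3 pixels
```

Sweeping `disparity` is what moves the focal plane. An occluder at a different depth gets averaged away across the cameras, which is where the seeing "around" objects effect comes from.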

Of course, some of the coolest stuff at SIGGRAPH is reserved for full conference attendees, in the papers section. One nice thing they do at SIGGRAPH however is ask everyone to create five minute videos of their research. This makes a lot of sense when what everyone’s researching is, almost by definition, visually compelling. So, every year, I make my way to Ke-Sen Huang’s collection of SIGGRAPH papers and take a look at the latest coming out of SIGGRAPH. Now, I have my own biases: I’ve never been much of a 3D modeler, but I started out doing a decent amount of work in Photoshop. So I’ve got a real thing for image based rendering, or graphics technologies that process pixels rather than triangles. Luckily, SIGGRAPH had a lot for me this year.

First off, the approach from Photosynth continues to yield Awesome. Dubbed “Photo Tourism” by Noah Snavely et al, this is the concept that we can take individual images from many, many different cameras, unify them into a single three dimensional space, and allow seamless exploration. After having far too much fun with a simple search for “Notre Dame” in Flickr last year, this year they add full support for panning and rotating around an object of interest. Beautiful work – I can’t wait to see this UI applied to the various street-level photo datasets captured via spherical cameras.

Speaking of cameras, now that the high end of photography is almost universally digital, people are starting to do some really strange things to camera equipment. Chia-Kai Liang et al’s Programmable Aperture Photography allows for complex apertures to be synthesized above and beyond just an open and shut circle, and Ramesh Raskar et al’s Glare Aware Photography evaded the megapixel race by filtering light by incident angle – a useful thing to do if you’re looking to filter glare that’s coming from inside your lens.

Another approach is also doing well: Shai Avidan and Ariel Shamir’s work on Seam Carving. Most people probably don’t remember, but when movies first started getting converted for home use, there was a fairly huge debate over what to do about the fact that movies are much wider (85% wider) than they are tall. None of the three solutions – Letterboxing (black bars on the top and bottom, to make everything fit), Pan and Scan (picking the “most interesting” square of video from the rectangular frame), or “Anamorphic” (just stretch everything) – made everyone happy, but Letterboxing eventually won. I wonder what would have happened if this approach had been around. Basically, Avidan and Shamir find the “least energetic” line of pixels to either add or remove. Last year, they did this to photos. This year, they come out with Improved Seam Carving for Video Retargeting. The results are spookily awesome.
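To make the “least energetic line of pixels” idea concrete, here is a minimal sketch of the still-image version: score each pixel by how much it differs from its neighbors, use dynamic programming to find the connected top-to-bottom path with the lowest total score, and delete it. The gradient energy and the function names are my own assumptions for illustration. This is not the authors' implementation, and it does not attempt the video retargeting paper's improvements.

```python
def energy(img):
    """Per-pixel energy: |horizontal difference| + |vertical difference|."""
    h, w = len(img), len(img[0])
    e = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            left, right = img[y][max(x - 1, 0)], img[y][min(x + 1, w - 1)]
            up, down = img[max(y - 1, 0)][x], img[min(y + 1, h - 1)][x]
            e[y][x] = abs(right - left) + abs(down - up)
    return e


def find_vertical_seam(e):
    """Return one x coordinate per row, tracing the lowest-energy vertical seam."""
    h, w = len(e), len(e[0])
    cost = [row[:] for row in e]
    # Each cell accumulates the cheapest of its three upper neighbors.
    for y in range(1, h):
        for x in range(w):
            lo, hi = max(x - 1, 0), min(x + 1, w - 1)
            cost[y][x] += min(cost[y - 1][lo:hi + 1])
    # Backtrack from the cheapest cell in the bottom row.
    x = min(range(w), key=lambda i: cost[h - 1][i])
    seam = [x]
    for y in range(h - 1, 0, -1):
        lo, hi = max(x - 1, 0), min(x + 1, w - 1)
        x = min(range(lo, hi + 1), key=lambda i: cost[y - 1][i])
        seam.append(x)
    seam.reverse()
    return seam


def remove_vertical_seam(img, seam):
    """Drop one pixel per row, narrowing the image by one column."""
    return [row[:x] + row[x + 1:] for row, x in zip(img, seam)]


# A flat gray region with a bright edge on the right.
photo = [
    [10, 10, 10, 200],
    [10, 10, 10, 200],
    [10, 10, 10, 200],
]
seam = find_vertical_seam(energy(photo))
carved = remove_vertical_seam(photo, seam)
```

The seam runs through the flat region, so the image gets narrower while the edge survives. Repeat until the frame is the width you want; adding pixels works the same way, except the seam is duplicated instead of deleted.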

Speaking of spooky: Data-Driven Enhancement of Facial Attractiveness. Sure, everything you see is photoshopped, but it’s pretty astonishing to see this automated. I wonder if this is going to follow the same path as Seam Carving, i.e. photo today, video tomorrow.

Indeed, there’s something of a theme going on here, with video becoming inexorably easier and easier to manipulate in a photorealistic manner. One of my favorite new tricks out of SIGGRAPH this year goes by the name of Unwrap Mosaics. The work of Microsoft’s Pushmeet Kohli, this is nothing less than the beginning of Photoshop’s applicability to video – and not just simple scenes, but real, dynamic, even three dimensional motion. Stunning work here.

It’s not all about pixels though. A really fun paper called Automated Generation of Interactive 3D Exploded View Diagrams showed up this year, and it’s all about allowing complex models of real world objects to be comprehended in their full context. It’s almost more UI than graphics – but whatever it is, it’s quite cool. I especially liked the moment they’re like – heh, let’s see if this works on a medical model! Yup, works there too.

As mentioned earlier, the SIGGRAPH floor was full of various devices that could assemble a 3D model (or at least a point cloud) of any small object they might get pointed at. (For the record, my left hand looks great in silver triangles.) Invariably, these devices work like a sort of hyperactive barcode scanner, monitoring how long it takes for the red beam to return to a photodiode. But here’s an interesting question: How do you scan something that’s semi-transparent? Suddenly you can’t really trust all those reflections, can you? Clearly, the answer is to submerge your object in fluorescent liquid and scan it with a laser tuned to a frequency that’ll make its surroundings glow. Clearly. Fluorescent Immersion Range Scanning, by Matthias Hullin and crew from UBC, is quite a stunt.

So you might have heard that video cards can do more than just push pretty pictures. Now that Moore’s Law is dead (how long have we been stuck with 2GHz processors?), improvements in computational performance have had to come from fundamentally redesigning how we process data. GPUs have been one of a couple of players (along with massive multicore x86 and FPGAs) in this redesign. Achieving greater than 50x speed improvements over traditional CPUs on non-graphics tasks like, say, cracking MD5 passwords, they’re doing OK in this particular race. Right now, the great limiter remains the difficulty of programming the GPUs – and, every month, something new comes along to make this easier. This year, we get Qiming Hou et al’s BSGP: Bulk-Synchronous GPU Programming. Note the pride they have in their X3D parser – it’s not just about trivial algorithms anymore. (Of course, now I wonder when hacking GPU parsers will be a Black Hat talk. Short answer: Probably not very long.)

Finally, for sheer brainmelt, Towards Passive 6D Reflectance Field Displays by Martin Fuchs et al is just weird. They’ve made a display that’s view dependent – OK, well, lenticular displays will show you different things from different angles. Yeah, but this display is also illumination dependent – meaning, it shows you different things based on lighting. There’s no electronics in this material, but it’ll always show you the right image with the right lighting to match the environment. Weird.

All in all, a wonderfully inspiring SIGGRAPH. After being so immersed in breaking things, it’s always fun to play with awesome things being built.

Great article! One massive, glaring error, though, and it’s one that no tech writer should ever make. It’s regarding your statement about Moore’s law, which I get the distinct impression that you’ve never actually read. Moore didn’t state that processor SPEED would double, but that the number of transistors that can be inexpensively placed on a chip would double about every two years.

And in case you haven’t noticed, processors have been growing wider, not taller. Take, say, a Pentium 4 531… 3 ghz, 125 million transistors. It was hot shit about two years ago, the best money could buy.

Now, let’s take a Core 2 Extreme, an X6900. Not even the best money can buy nowadays; there are better, but it has a clock speed of 3.2ghz, still better than the 531… and 291 million transistors. That’s 2.3 times more transistors.

Seems Moore’s law is still alive and quite well.

I do look forward to the 3D exploded view diagrams, though. Those are a bitch to try to figure out in 2D!

So many sweet things this year. So many…

Wow, I was really impressed by the “Photo Tourism” section, although I have a feeling it’s not the same as actually being there :)

Wow, these demos are amazing. Can’t wait to see if they ever get implemented in big box office productions.

moore’s law is, and has always been, about transistor count. NOT core clock frequency. And it’s definitely not dead yet.

pentium IV: 42,000,000 transistors

Core 2 Duo: 291,000,000 transistors

I think Moore’s Law is still cookin’ with gas.

Old slang is the bee’s knees!

Wow, people are quick with the whole transistor count thing. Moore’s Law is the cat’s pajamas!

Moore’s Law as a measure of transistor count? Sure, it’s doing fine.

Moore’s Law as a measure of computational power? Not so much. Do you realize how many of those transistors are just CPU cache? L1, L2, L3…more and more and more layers. Sure, we’re getting more cores, but let’s be honest – nobody really knows how to use ’em, not like we knew how to use more clock. And as we learn to parallelize, we’re abandoning x86 entirely for the 50x speedup.

Maybe a better way to say it: “Moore’s Law is dead. Long live Moore’s law” — as we finally are forced to migrate off sequential computation to actually experience its advantages.