TinkerCAD had its first release all the way back in 2011, and it has come a long way since then. The latest release has introduced a raft of new, interesting features, and [HL ModTech] has been nice enough to sum them up in a recent video.

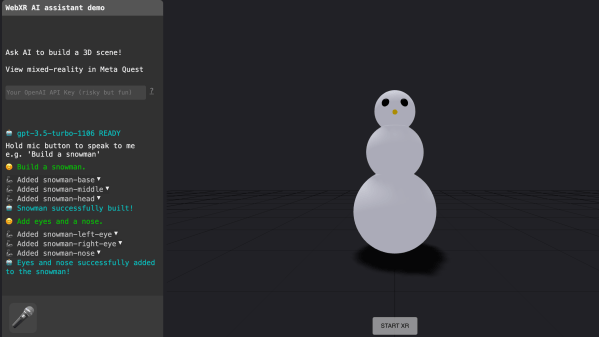

He starts out by explaining some of the basics before quickly jumping into the new gear. There are two headline features: intersect groups and smooth curves. Where the old union group tool simply merged two pieces of geometry, intersect group creates a shape containing only the volume where two objects overlap. It’s a neat addition that makes complex geometry quicker to build. [HL ModTech] demonstrates it with a sphere and a pyramid, and his enthusiasm is contagious.
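TinkerCAD doesn’t say how it computes its boolean operations internally, so what follows is only a conceptual sketch, not its implementation. It treats each shape as a yes/no test of whether a point lies inside it. A union keeps a point if either test passes, while an intersection keeps it only if both do. The shape functions and dimensions here are hypothetical, loosely mirroring the sphere-and-pyramid demo.

```python
import math

# Conceptual sketch only: TinkerCAD works on meshes, but a point-membership
# test is enough to show which geometry each boolean operation keeps.
# Shapes and dimensions are hypothetical.

def in_sphere(p, center=(0.0, 0.0, 0.0), radius=1.0):
    """True if point p lies inside or on a sphere."""
    return math.dist(p, center) <= radius

def in_pyramid(p, half_base=1.0, height=2.0):
    """True if p lies inside a square pyramid whose base sits on z = 0,
    centered on the z axis, with its apex at z = height."""
    x, y, z = p
    if z < 0.0 or z > height:
        return False
    # The square cross-section shrinks linearly toward the apex.
    half_width = half_base * (1.0 - z / height)
    return abs(x) <= half_width and abs(y) <= half_width

def union(a, b):
    # Keep geometry that belongs to either shape.
    return lambda p: a(p) or b(p)

def intersect(a, b):
    # Keep only geometry that belongs to both shapes at once.
    return lambda p: a(p) and b(p)

either = union(in_sphere, in_pyramid)
both = intersect(in_sphere, in_pyramid)

for point in [(0.0, 0.0, 0.5), (0.0, 0.0, 1.5), (0.0, 0.0, -0.5), (2.0, 0.0, 0.0)]:
    print(point, "union:", either(point), "intersect:", both(point))
```

A point just above the sphere near the pyramid’s tip survives the union but not the intersection, which is exactly the material an intersect group trims away.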

As for smooth curves, the tool joins the existing straight line and Bézier curve sketch tools. If you’ve ever struggled to make decent curves with Bézier techniques, you might appreciate the ease of working with the smooth curve tool, which avoids any nasty jagged points as a matter of course.

While it’s been gaining new features at an impressive rate, TinkerCAD is ultimately still a pretty basic tool; it’s not the sort of thing you’d expect to see in the aerospace world. But it’s a great way to start whipping up custom stuff on your 3D printer.
