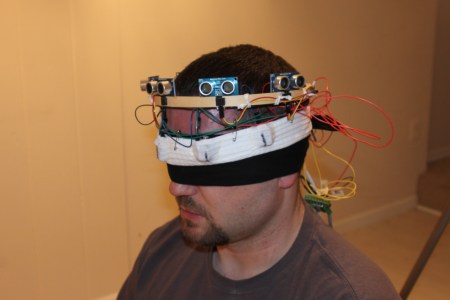

[polymythic] is helping the blind see with his haptic feedback device called HALO. At the heart of the device is an Arduino Mega 2560, which senses objects with a few ultrasonic range finders and relays the information to the user through vibration motors salvaged from old cell phones. The user feels distance through the rate at which each motor pulses: the faster the pulses, the closer the object.
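As a rough illustration of the distance-to-pulse idea, here is a minimal Python sketch. It is not [polymythic]'s firmware: the function name `pulse_interval_ms`, the linear mapping, and every range and timing value are assumptions chosen only to show how a closer object can translate into faster pulsing.

```python
def pulse_interval_ms(distance_cm, min_cm=10.0, max_cm=300.0,
                      fastest_ms=50.0, slowest_ms=1000.0):
    """Return the pause between motor pulses for a measured distance.

    All default values are illustrative assumptions, not HALO's settings.
    """
    # Clamp the reading so out-of-range values still give a valid interval.
    d = max(min_cm, min(max_cm, distance_cm))
    # 0.0 means "as close as we care about", 1.0 means "as far as we care about".
    fraction = (d - min_cm) / (max_cm - min_cm)
    # Linear interpolation: near objects get short pauses (fast pulsing).
    return fastest_ms + fraction * (slowest_ms - fastest_ms)


if __name__ == "__main__":
    for distance in (10, 50, 150, 300):
        print(distance, "cm ->", round(pulse_interval_ms(distance)), "ms between pulses")
```

A linear mapping is the simplest choice; a real device might prefer a curve that gives finer resolution at short range.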

This kind of feedback can be applied to pretty much any sensor, letting the user feel something that might otherwise be invisible. While haptic feedback is nothing new, it’s good to see continuing work with new sensors and different setups.

Great idea, I like it! :)

I always save these motors “just in case” when scrapping a phone, usually they just unplug but some are soldered.

If you have a lot of broken surplus phones, then it’s worth going on a salvaging mission, as the LEDs on the backlight(s) and OLED panel can often be repurposed, and many Nokias have a nice colour LCD with plenty of documentation.

what about using infrared?

Geez, how about giving that poor dude’s forehead some oxygen?

zeropointmodule, polymythic is using Parallax PING))) ultrasonic sensors. They are very easy to use and can sense things up to about 10 feet away, which makes them much more useful and less limited than infrared. The only downside is that they don’t do well with corners, for example when only half of the signal hits a corner, and I’m not sure about rounded or irregularly shaped objects. I haven’t used mine in a while.
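For readers who have not used an ultrasonic range finder: the sensor reports how long the echo took to come back, and the distance follows from the speed of sound. The sketch below shows only that arithmetic in Python. The function name is hypothetical, and the speed of sound is assumed to be roughly 343 m/s (air at about 20 °C); it is not taken from the project's code.

```python
# Assumed speed of sound in air at about 20 degrees C, in cm per microsecond.
SPEED_OF_SOUND_CM_PER_US = 0.0343


def echo_to_distance_cm(echo_us):
    """Convert a round-trip echo time in microseconds to a one-way distance in cm."""
    # The pulse travels to the object and back, so halve the total path.
    return echo_us * SPEED_OF_SOUND_CM_PER_US / 2.0


if __name__ == "__main__":
    for echo in (500, 1000, 5000, 17800):
        print(echo, "us ->", round(echo_to_distance_cm(echo), 1), "cm")
```

Under these assumptions, an object about 10 feet (roughly 3 m) away corresponds to an echo of a little under 18 ms.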

This is awesome. Also worth noting: it’s been shown, mainly by a blind guy who does it and is able to teach other people to do it, that humans are capable of range finding with verbal clicks (like bats).

As a retired firefighter, I could see that invention “built in” to a firefighter’s helmet.

good idea! Keep up the good work….

this might be of interest too:

http://www.esenseproject.org/downloads.html

A guide dog is much cooler and more useful.

Use the force!

Feedback that is.

Cool, I’m working on a device that uses the same idea. I just can’t find blind people to help me develop it…

Sherman, set the way back machine for stardate 5630.7 (aka October 1968). This reminded me of the sensor dress from the original Star Trek episode “Is There in Truth No Beauty?”

Funny how so many technologies were dreamed up back then and are now realities or on the brink. Still waiting for the transporter to save me from TSA pat downs.

This is a great concept.

Why not refine, and kit this? I totally believe that it could turn into something.

This is an area of research that really doesn’t get enough attention, and making this more accessible to those who are blind, and usually very, very broke would be a great benefit.

therian: Although a guide dog is cool, it’s very difficult to get one, and can take years.

very cool idea!

hope it develops into something practical for the blind.

Well, why not hack up the Kinect and use it as depth sight for blind people? They could be allowed to drive a car then…

If you overlay the depth matrix with a live feed, you’ll have low-res eyes. The only thing left to work out would be how to implement it.

The part of the brain that interprets signals from the retina is physically located close to the part of the brain that is responsible for the tongue. Sometimes signals misfire to the wrong part, and with training one part can take over the job of the other. So in theory the tongue (or some other body part) can be used as a retina; we just need a small enough electrode matrix for any usable resolution.

Xtremegamer: Brilliant!

Reminds me of something I read, where someone used a magnetometer and a bunch of vibration motors on a belt to continually show where north was. Over time the wearer developed a better ability to navigate inside a city, simply from how north changed at each turn taken.
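The belt idea comes down to picking which motor currently points north. Here is a minimal Python sketch of that selection, assuming a hypothetical belt with evenly spaced motors numbered clockwise from the wearer's front and a compass heading already computed from the magnetometer. None of these details come from the belt project the comment refers to.

```python
def north_motor_index(heading_deg, motor_count=8):
    """Pick the belt motor that points closest to north.

    heading_deg: direction the wearer faces, in degrees clockwise from north.
    Motor 0 is assumed to sit at the front, with indices increasing clockwise.
    """
    # Bearing of north relative to the wearer's front, normalized to [0, 360).
    relative = (-heading_deg) % 360.0
    # Angular spacing between neighbouring motors.
    step = 360.0 / motor_count
    # Round to the nearest motor and wrap around the belt.
    return int(round(relative / step)) % motor_count


if __name__ == "__main__":
    for heading in (0, 45, 90, 180, 270):
        print("facing", heading, "deg -> buzz motor", north_motor_index(heading))
```

With eight motors, facing east (90°) selects motor 6, which under the assumed numbering sits on the wearer's left, where north actually is.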

One could even develop something of a “danger sense” using this, by placing the sensors and vibrators at the back of the head.

The Kinect uses way too much power for it to be a wearable device (for now).

They’ve been trying this for many many years and yet it’s always stuck at the concept level it seems, I wonder why.

I did a quick google patent search, this was patented in 1988.

I also built something similar a few years ago, but implemented in a glove.

There were plans to put an RFID reader into the glove so that the user could find specific objects.

But we never got further than an ugly prototype.

All, thanks for the suggestions. You guys rock! Honestly, I appreciate all the ideas. I had noted using the Kinect several times over on (http://www.instructables.com/id/Haptic-Feedback-device-for-the-Visually-Impaired/), and will post a bit more when I get my blog post fleshed out. I think the power issues can be solved for sure.

Hmm, SparkFun has some nice magnetic sensors (HMC5843)?

@logan, yeah, I realise ultrasonic sensors are more versatile, but they draw a fair amount of power.

Perhaps a single transducer set with a phased array circuit to steer the beam would be more useful.

(This technique is used for medical ultrasound.)
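To make the phased array suggestion a little more concrete: a linear array steers its beam by firing each element slightly later than its neighbour, with a per-element delay of d·sin(θ)/c for element spacing d, steering angle θ, and speed of sound c. The Python sketch below computes those delays. The function name, the 4.3 mm spacing (about half a wavelength at 40 kHz), and the 343 m/s speed of sound are assumptions for illustration, not a design from the thread.

```python
import math

# Assumed speed of sound in air at about 20 degrees C.
SPEED_OF_SOUND_M_PER_S = 343.0


def steering_delays_us(element_count, spacing_m, angle_deg):
    """Firing delay in microseconds for each element of a linear array.

    angle_deg is the steering angle measured from straight ahead (broadside).
    """
    # Extra travel time between neighbouring elements for the chosen angle.
    step_s = spacing_m * math.sin(math.radians(angle_deg)) / SPEED_OF_SOUND_M_PER_S
    delays = [n * step_s * 1e6 for n in range(element_count)]
    # Shift so the earliest element fires at t = 0 (handles negative angles).
    offset = min(delays)
    return [d - offset for d in delays]


if __name__ == "__main__":
    for angle in (-30, 0, 30):
        delays = steering_delays_us(4, 0.0043, angle)
        print(angle, "deg ->", [round(d, 2) for d in delays], "us")
```

Steering to the other side simply reverses the firing order, which is why the delays are shifted so the earliest element always starts at zero.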

Very interesting, but 360° peripheral vision is unnatural for humans to process. It would be more practical to use the extra feedback to increase resolution in a more localized area (right in front of you, like normal vision).

Using the Kinect’s depth camera connected to an electrode matrix placed on a large area of skin (belly or back) could provide enough resolution to see at least object outlines.
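One way to picture the depth-camera-to-electrode idea is as plain downsampling: average the depth image over a coarse grid, one cell per electrode. The Python sketch below does that on a nested list. The function name and the tiny 4×4 example frame are hypothetical, and it does not talk to a real Kinect or drive any hardware.

```python
def downsample_depth(depth, rows, cols):
    """Average a 2D depth map (list of equal-length rows) into a rows x cols grid.

    Assumes rows and cols do not exceed the depth map's height and width.
    """
    height, width = len(depth), len(depth[0])
    grid = []
    for r in range(rows):
        # Vertical pixel range covered by this row of electrodes.
        y0, y1 = r * height // rows, (r + 1) * height // rows
        row = []
        for c in range(cols):
            # Horizontal pixel range covered by this electrode.
            x0, x1 = c * width // cols, (c + 1) * width // cols
            cell = [depth[y][x] for y in range(y0, y1) for x in range(x0, x1)]
            row.append(sum(cell) / len(cell))
        grid.append(row)
    return grid


if __name__ == "__main__":
    frame = [
        [1, 1, 3, 3],
        [1, 1, 3, 3],
        [5, 5, 7, 7],
        [5, 5, 7, 7],
    ]
    print(downsample_depth(frame, 2, 2))
```

Each averaged cell could then set the stimulation strength of one electrode, for example stronger for nearer surfaces.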

And just to make a joke; Imagine seeing the world like a teletubby!

I think a guide dog and this project would be mutually exclusive: dogs don’t like ultrasound.

Qwerty, you’re probably right. Dogs hear up to 60 kHz, and the PING))) sends 40 kHz. I have not really tried it with my dog around, but it may be interesting to see how she reacts. Good observation.

Is the user also deaf, black band? Binaural generated sounds would be better than vibes.

Tommy can you see me.

You know where to put the ball.

@sueastside: 360° peripheral vision is unnatural? But you know you have ears which offer exactly that. Gamers love surround sound headsets for providing natural 360° “vision”… I’m pretty sure you easily adapt to this…

@therian

I remember reading about an experiment a few years ago where they connected a camera to electrodes on a tongue. They tried it on a guy with full vision (blindfolded, of course) and he stated that after 12 hours he started to “see”.

Kinect depth sensing: based on infrared. Probably unreliable outdoors, as sunlight contains much infrared.

Umm, sorry to tell you this, but this idea is a clone of a more advanced version featured on Daily Planet (Discovery Channel) almost 2 years ago. The idea was originally done as a Fourth Year Design Project, which placed second, at the University of Waterloo. Looking forward to seeing future versions.

Note: There are several patents pending on the design presented at the UW Symposium.

What a great idea. I really hope it proves a success. It could improve the quality of life for many people.