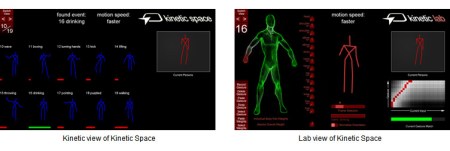

For all of you who have wanted to use a Kinect to control something but had no idea what to do with it, or how to get the data from it, you’re in luck. Kineticspace is a tool available for Linux, Mac, and Windows that gives you what you need to set up gesture controls quickly and easily. As you can see in the video below, it is fairly simple to set up. You perform your action, set the amount of influence from each body part (basically telling it what to ignore), and save the gesture. This system has already been used for tons of projects and has now hit version 2.0.

[Matthias Wölfel], the creator, tipped us off. His highlights of the new features include:

- can be easily trained: the user can simply train the system by recording the movement/gesture to be detected without having to write a single line of code

- are person independent: the system can be trained by one person and used by others

- are orientation independent: the system can recognize gestures even if the trained and tested gesture does not have the same orientations

- are speed independent: the system is able to recognize the gesture also if it is performed faster or slower compared to the training and is able to provide this information

- can be adjusted: the gesture configuration can be fully controlled by a graphical interface (version 2.0)

- can be analyzed: the current input data can be compared with a particular gesture; feedback of the similarity between the gestures is given for every body part
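To get a feel for how a recorded gesture can be matched regardless of speed while ignoring some body parts, here is a minimal sketch using dynamic time warping (DTW) with per-joint weights. This is an illustration of one common technique, not necessarily how Kineticspace itself works. The joint names, the frame layout (a dict mapping a joint name to an `(x, y, z)` tuple), and the functions `frame_distance` and `dtw_distance` are all hypothetical. It does not attempt the person or orientation independence mentioned above.

```python
import math


def frame_distance(a, b, weights):
    """Weighted sum of per-joint Euclidean distances between two frames.

    Assumption: a frame is a dict of joint name -> (x, y, z), and
    weights is a dict of joint name -> influence (0 means "ignore").
    """
    return sum(w * math.dist(a[joint], b[joint]) for joint, w in weights.items())


def dtw_distance(recorded, live, weights):
    """Dynamic time warping cost between two sequences of frames.

    DTW lets one frame of the recording line up with several frames of
    the live input (and vice versa), so a slower or faster performance
    of the same movement still scores a low cost.
    """
    n, m = len(recorded), len(live)
    inf = float("inf")
    # cost[i][j] = best cost of aligning the first i recorded frames
    # with the first j live frames.
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = frame_distance(recorded[i - 1], live[j - 1], weights)
            cost[i][j] = d + min(
                cost[i - 1][j],      # live input is faster here
                cost[i][j - 1],      # live input is slower here
                cost[i - 1][j - 1],  # both advance together
            )
    return cost[n][m]


# Hypothetical data: a hand rising while the head is given zero influence.
weights = {"hand": 1.0, "head": 0.0}
recorded = [{"hand": (0, y, 0), "head": (0, 5, 0)} for y in (0, 1, 2)]
# Same movement at half speed, with the head wandering around.
slow = [{"hand": (0, y, 0), "head": (i, 5, 0)} for i, y in enumerate((0, 0, 1, 1, 2, 2))]
# A different movement: the hand goes down instead of up.
other = [{"hand": (0, -y, 0), "head": (0, 5, 0)} for y in (0, 1, 2)]

print(dtw_distance(recorded, slow, weights))
print(dtw_distance(recorded, other, weights))
```

The slowed-down version aligns perfectly with the recording because the head weight is zero and DTW stretches the time axis, while the downward movement produces a clearly larger cost. A real recognizer would compare that cost against a threshold per gesture.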

Perfect application for Neural Networks instead of forcing users to teach it per person.

This tool is exactly what I need for my Kinect lesson plan: http://constructingkids.wordpress.com/2012/06/18/kinect-hacked-paradigm-shift/

Previously I’ve been using a combination of FAAST, GlovePIE and Scratch.

The video was so … cool! I can’t believe there is a machine that can do this. This is definitely going to my wish list.

I cannot install the software needed (OpenNI and NITE).

Can anyone help me?

Thanks