After adding a few LED light strips above his desk, [Bogdan] was impressed with the results. They’re bright, look awesome, and exude a hacker aesthetic. Wanting to expand his LED strip installation, [Bogdan] decided to see if these inexpensive LED strips were actually less expensive in the long run than regular incandescent bulbs. The results were surprising, and we’ve got to give [Bogdan] a hand for his testing methodology.

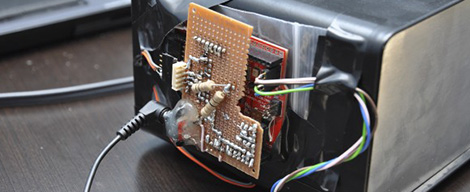

[Bogdan]’s test rig consists of a 15 cm piece of the LED strip left over from his previous installation. A Taos TSL2550 ambient light sensor is installed in a light-proof box along with the LED strip, and an AVR microcontroller writes the light level from the sensor and an ADC count (to get the current draw) of the rig every 6 hours.

After 700 hours, [Bogdan]’s testing rig shows some surprising results. The light level has decreased about 12%, meaning the efficiency of his LED strip is decreasing. As for projecting when his LEDs will reach the end of their useful life, [Bogdan] predicts that after 2200 hours (about 3 months), the LED strip will have dropped to 70% of its original brightness.
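A projection like this can be sanity-checked by extrapolating the measured decay. The sketch below assumes a simple exponential decay fitted through a single point (88% of initial output at 700 hours). That model is an assumption for illustration, not necessarily the method [Bogdan] used, so it need not reproduce his 2200-hour figure.

```python
import math

def hours_to_fraction(measured_fraction, measured_hours, target_fraction):
    """Extrapolate the time needed to fall to target_fraction of initial output.

    Assumes L(t) = exp(-alpha * t), with alpha fitted from one measurement.
    """
    alpha = -math.log(measured_fraction) / measured_hours
    return -math.log(target_fraction) / alpha

# 12% drop after 700 hours (from the article); 70% is the end-of-life threshold.
l70_hours = hours_to_fraction(0.88, 700, 0.70)
print(f"Projected hours to 70% brightness: {l70_hours:.0f}")
```

Under this assumed model the projection lands a little under 2000 hours, the same ballpark as the article's estimate and still well short of the 2800-hour break-even point.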

Comparing his LED strip against traditional incandescent bulbs (including the price paid for the LED strip, the cost of powering both the bulb and the strip, the cost of the power supply, and the time involved in changing out an LED strip), [Bogdan] calculates it will take 2800 hours before cheap LEDs are a cost-effective replacement for bulbs. With a useful life 600 hours less than that, [Bogdan] figures replacing your workshop lighting with LED strips – inexpensive though they are – isn’t an efficient way to spend money.

Of course, with any study of the efficiency of new technology there are bound to be some confounding factors. We’re thinking [Bogdan] did a pretty good job at gauging the efficiency of LED strips here, but we would like to see some data from some more expensive and hopefully more efficient LED strips.

What if the light sensor was also losing sensitivity?

Why would it? There’s nothing in it that should degrade over time the way LEDs do.

What if the voltage is dropping?

What about another sample?

What if the temperature …

The conclusions have zero value; this is unscientific.

Checked voltage at strip: 12.02V, exactly as when installed.

The setup is inside the house, temp 25 +/-2 all the time, rather constant.

Sample: fair, not enough samples, but there are three series of LEDs which are measured at the same time. Also, there are at least 50-100 types of strips I can find in just my local shop, and I have no resources to test them all.

Off the top of my head – I think you have to be careful about testing cheap LED lighting, bad heat management and bad power supplies.

White LEDs are not really white. Like fluorescent tubes, they use chemicals (phosphors) to shift high-energy light such as blue to other colors. The ultimate goal is to produce all colors and thus white light. If the chemicals are unstable, the color can shift. In fact, this is one of the hallmarks of badly made “white” LEDs: they change color over time.

LEDs fail slowly. They fade over time. To get the best life, I believe they need to have their current managed well and to have good heat management. It’s no accident that bright LED fixtures have exotic shapes. Most if not all of the time, this is an effort to get rid of unwanted heat.

Are you using a constant current power supply? Or does this strip have integrated power management? If inexpensive, the strip is probably a bunch of LEDs in series. I believe the best way to deal with this is to use a constant current power supply. That is something not usually found at your local electronics store, but easy to order from most online LED lighting resellers.

-good luck

The strip is made of groups of 3 leds in series with a 130 ohm resistor, every 5 cm, so there are 3 series in the strip. The strip is designed to operate in constant voltage.

Power comes from stabilized 12V supply, good quality.

There’s absolutely no heating problem: the strip is mounted on the aluminum front panel of the box which is about 9 x 17 cm, and power dissipation is very low.

The over/undershoot is caused by Excel plotting the line. I have no explanation as to why the drop is so sharp, happening somewhere within a 6-hour interval.

Maybe longer measurements will provide more data. I’m planning on extending the test to include some good quality strips.

If you have a stable power supply and properly rated resistors your leds will be seeing a constant current. CC power supplies are really only needed with unstable input power or changing loads/dimming.

The drops in the curve are suspiciously sharp. If the ADC resolution is so bad, I wonder whether the decrease might be just the offset voltage drifting.

Also there seems to be undershoot and overshoot before and after each step – is this caused by the graphing software?

That’s a pretty cool experiment and a lot of time invested. The LEDs are either very poor quality or they are being over-driven. It’s likely the manufacturer of the strip used lower value resistors on the strip than specced to provide greater brightness. This is a very common practice with cheap+bright products in the LED sign and display industry.

Current draw is about 13mA for each LED series. I cannot tell if they are overdriven or not, but life seems terribly short.

The power numbers seem funny. 13mA each for 3 strings at 12V is less than half a watt, but you said the PSU draws 14.3W from the mains. A very inefficient power supply, which is not a fair power comparison to CFLs, in my opinion.
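The arithmetic behind that objection can be checked in a few lines. This is a minimal sketch using only figures quoted in the thread (three strings, 13 mA each, 12 V supply, 14.3 W at the mains); whether the 14.3 W reading covers only this strip is an assumption.

```python
def strip_power_watts(strings, current_per_string_a, supply_v):
    """DC power delivered to the strip: number of strings x current x voltage."""
    return strings * current_per_string_a * supply_v

strip_w = strip_power_watts(strings=3, current_per_string_a=0.013, supply_v=12.0)
mains_w = 14.3  # mains draw quoted in the comment above
fraction_reaching_strip = strip_w / mains_w

print(f"Strip power: {strip_w:.3f} W")
print(f"Share of mains draw reaching the strip: {fraction_reaching_strip:.1%}")
```

If the mains figure really applies to this load alone, only a few percent of the measured power would be reaching the LEDs, which is why the PSU or the meter reading looks suspect.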

13 mA sounds slightly low based on my experience with these strips. A quick calc shows that the average Vf @ 13 mA with 130 ohm series resistor would be 3.44v that also seems slightly high for that type of LED. Did you measure Vf?
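The "quick calc" here is Ohm's law applied to one string: subtract the resistor drop from the supply voltage and split the remainder across the LEDs. A minimal sketch, assuming the three LEDs in a string share the voltage equally:

```python
def average_forward_voltage(supply_v, current_a, resistor_ohm, leds_in_series):
    """Average forward voltage per LED in a series string with one resistor."""
    resistor_drop_v = current_a * resistor_ohm
    return (supply_v - resistor_drop_v) / leds_in_series

vf = average_forward_voltage(supply_v=12.0, current_a=0.013,
                             resistor_ohm=130.0, leds_in_series=3)
print(f"Average Vf per LED: {vf:.2f} V")
```

With the thread's numbers this gives about 3.44 V per LED, matching the figure in the comment.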

Heat is the LED killer. It might be interesting to attach a thermocouple to some of the LEDs and try to estimate the junction temperature. Of course you’ll have to guess the thermal resistance, but using some published LED specs might get you in the ballpark.

My experience is that the quality of the cheap chinese LED strips that are commonly sold is terrible. I guess your results aren’t surprising. Neat experiment.

I think it would be very useful to get data from a higher-quality LED source. Now the payback time would be clearly longer for more expensive lighting – but the useful life is likely to also be longer.

I suspect these LEDs are cheaper ones that are being driven too hard. Even on a massive heatsink, driving an LED hard will still warm up the junction, and these particular LEDs might not have an ideal thermal management solution.

As an example – look at many of the LED-based Maglites. You’d expect that a product made from a MASSIVE chunk of aluminum would not ever have any thermal issues with a 3W LED – the truth is that Mag completely botched the thermal management and heat isn’t transferred to that nice big heatsink. End result is that inside a product that could be effectively considered a giant heatsink, the LED and its power supply overheat and the power supply throttles back the brightness.

Also important with LEDs, and one of their biggest advantages, is their ability to dim. So even if they don’t win in a “full vs. full” comparison, the fact is that an incandescent at 50% power is nearly useless, most CFLs are not dimmable, and dimmable fluorescent lighting is expensive, leaving LEDs as the best solution if you want the ability to dim lighting. There are many cases where 100% brightness is not needed. I wouldn’t even necessarily consider dropping to 70% to be the end of “useful life”. More importantly, if the emitters happen to have an initial “drop” within the first 1000 hours and then level off as they are “broken in”, their useful life to 70% might be far longer.

BTW, the reason LEDs became so popular in flashlights wasn’t their efficiency. Years ago, the best halogens could beat LEDs in efficiency, however:

1) Halogens get less efficient as bulb size goes down, flashlight bulbs suffer here.

2) Halogens in that size class have extremely poor lifetime and ruggedness. You’re lucky to get a few hundred hours out of them.

3) LEDs provide superior dimming – even in flashlights that were not officially dimmable, having a “moon mode” available at extremely low battery was highly beneficial. Halogens would become unusable very quickly as the battery voltage dropped, LEDs would take far longer to have even a noticeable reduction, and even more time producing “usable” light.

You are definitely right about the dimming. This is why I want LEDs: I can automate the lighting to turn on/off as often as I want and I can dim it.

But I also want them to cost less than incandescents, CFL or regular fluorescent overall.

The LEDs on the strip may be overdriven, it could be one of the explanations of the poor life.

Also, some applications could find leds acceptable even if they dropped to 50% of initial brightness or less. But for general lighting, dropping to 80 or 70% is considered the end of life.

try this type of led bar.

http://www.superbrightleds.com/moreinfo/rigid-light-bars/lbcob-series-led-cob-linear-light-bar-fixture/1212/

Not to be a party pooper, but your methodology needs some work. First you have to clarify what exactly you want to measure. As it is right now, you’re measuring “practicality”. Some points to consider:

- Warm white LEDs are slightly less efficient than “blue” white LEDs (because of chemical composition).

- You cannot just ignore the PSU (except if you want to make a comparative study of different strips) since it is crucial to the whole system. An inefficient PSU will waste power no matter what the load/LED strip quality is. A smart combination of PWM and load regulation will go a long way (overdriving the LEDs after they have fallen below the 70% limit will yield additional “up time”, as a concrete example of what I mean).

- Your test box is prone to light reflection and absorption. Also, using this setup, the middle LEDs are getting “weighted” higher than the side LEDs (the middle LEDs face the sensor; the ones at both extremes do not).

- Keep in mind that the sensor sensitivity will change over time. To make the problem worse, most LEDs tend to shift their color slightly depending on load, temperature and age.

- Using LED strips with LEDs in series is a bad idea: even if an LED fails completely, it will often still conduct electricity to some degree, basically dimming the other LEDs in the series.

- Incandescent bulbs have a relatively short lifespan and will require more than one bulb for the same amount of time, and while CFLs might have a similar lifespan, their startup time and brightness will worsen gradually.

I’ve considered using LED lights more than once in the past 12 years and tried a lot of different solutions, commercial and otherwise, but only in recent years has LED technology evolved to the point where it could really be useful. However, at this point cost efficiency will be hard to reach with off-the-shelf parts. Energy savings, however, are another topic altogether.

Having done the calculations myself only on “dry facts”, I think it’s a really good thing to have someone test the stuff for real.

Although the HAD title says so, my goal was just to determine the point where the strips dim to 70%.

You are right about the led positions and box reflections but since these don’t change in time they don’t matter.

Most strips are made as some series leds with a resistor. Then, multiple such groups are wired in parallel. The strips I used have series of three leds and a 130 ohm resistor, operating at 12V.

When calculating the cost effectiveness I didn’t factor in the PSU because I already have multiple available. They should be factored in for other projects. I also factored in the incandescent replacement every 1000 hours.

OK, so I guess you’re right, HAD missed the point.

But I think it still would be cool to get some more real world data on those strips/LEDs/lighting options because datasheets just don’t reflect the entire truth…

I’m sure LEDs can be made cost effective; I just don’t know how many off-the-shelf components you can buy to do so.

Good quality LEDs are a cost effective solution. I just wanted to check if cheap LEDs are as well.

Think of it this way: if cheap LEDs cost three times less and lasted three times less, they would be on par. But they cost three times less and, so far, I can say they last more than ten times less than expensive ones. This makes them drop to a point where they are more expensive than regular halogens.
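The parity argument reduces to purchase price per hour of useful light. The sketch below uses only the ratios stated in the comment (one third of the price, one third or one tenth of the life) and deliberately ignores electricity and power supply costs, which is a simplification.

```python
def relative_cost_per_hour(price_ratio, life_ratio):
    """Purchase cost per hour of light for the cheap strip, relative to the good one.

    price_ratio: cheap price / good price
    life_ratio:  cheap useful life / good useful life
    """
    return price_ratio / life_ratio

parity_case = relative_cost_per_hour(price_ratio=1 / 3, life_ratio=1 / 3)
observed_case = relative_cost_per_hour(price_ratio=1 / 3, life_ratio=1 / 10)

print(f"3x cheaper, 3x shorter life:  {parity_case:.2f}x the cost per hour")
print(f"3x cheaper, 10x shorter life: {observed_case:.2f}x the cost per hour")
```

At one tenth of the life, the cheap strip costs more than three times as much per hour of light, even before energy costs are counted.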

I noticed you were using a regular power meter. Those things have little or no way to determine RMS values of uneven wave forms and can give wildly varying values when used with switching power supplies, especially at low power.

The meter uses an MCP3906 IC for power measurement, which is also used in regular power meters. If it’s good enough for the electricity company, it is good enough for me.

My several year old, well used 5 meter LED strip is producing less than 1/5 the light it used to. All of the LEDs have faded, and a lot of them have severely faded or died.

The strip is just hanging in air, so heat is probably to blame for a lot of the LED deaths.

It’s getting retired soon, replaced with two strips running at half the power through PWM, a cool white and a warm white strip as combined they produce a very nice colour of light. And they’ll be stuck to aluminium strips this time for heat dissipation :)

If you run this experiment again you need to add a control. Include a second strip and illuminate it temporarily when you’re taking readings to ensure your box isn’t oxidizing, your driver is steady, and your light sensor isn’t losing sensitivity.
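One way to use such a control is to divide the test strip's relative output by the control strip's relative output, so that any drift common to both readings (sensor, box, supply) cancels. The sketch below is an illustration only; the sensor readings in the example are hypothetical, not data from the experiment.

```python
def normalized_output(test_reading, control_reading,
                      test_baseline, control_baseline):
    """Fraction of initial output for the test strip, corrected by the control.

    The control strip is lit only during readings, so it is assumed not to age.
    Any change in its reading is attributed to the measurement chain.
    """
    test_ratio = test_reading / test_baseline
    control_ratio = control_reading / control_baseline
    return test_ratio / control_ratio

# Hypothetical sensor counts: the test strip reads 88% of its baseline,
# while the barely used control reads 98% of its own baseline.
corrected = normalized_output(test_reading=880, control_reading=980,
                              test_baseline=1000, control_baseline=1000)
print(f"Corrected output: {corrected:.1%} of initial")
```

In this made-up example, 2% of the apparent drop would be attributed to the measurement setup rather than to the LEDs.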

As an LED engineer, I think your test methodology is terrible. Noble effort though. Also, there are a lot of factors you are not considering; you’re taking many things as constants which can degrade over time and change. You need a control and some way of tuning your measuring device to that control consistently over a large span of time. Also, I didn’t see any mention of thermal management or monitoring. Heat is the biggest thing you have to worry about for LEDs. Cheap LEDs also give LEDs a really bad name. Take a look at CREE’s LEDs; they are the best available. They will operate at 70% of their original output at 50,000 hours. They even have LEDs that will operate at 80% of their original output at 75,000 hours. These are light sources that will last 10-20 years and put out just as many lumens as CFLs and incandescents while using considerably less power. In most cases they put out more lumens.

You are right about the method, it’s not great. But I didn’t want to go to all the trouble of making it really good.

Even if the error is as high as 30%, my conclusion still stands: cheap LEDs don’t cost less overall than incandescents, and I didn’t even factor the power supply into this math. The conclusion so far is damn simple: I shouldn’t waste money on cheap LED strips. This is what I wanted to know. Considering the multiple claims on the internet that strips are poor quality, I wanted to know a rather rough number.

An interesting scenario to investigate would be this:

Use the light sensor to get a constant brightness.

Drive the LEDs with PWM to get about 60% of brightness in the beginning and power them up over time to hold the brightness at the same level. You will increase power consumption over time, and the final “death” of the LEDs will become more prominent. But it may be worth investigating.

It would be a good idea, but it is not practical. The simplest way I can think of doing this is to use a microcontroller that counts how much the strip has been used and corrects the power based on a model, via PWM, so that at least for some time it will offer constant brightness.
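The model-based correction described here could look something like the sketch below. It assumes brightness is proportional to PWM duty cycle times an exponentially decaying output, starts at the 60% duty suggested above, and uses a hypothetical decay constant loosely based on the 12% drop over 700 hours. It also assumes the decay rate does not depend on the duty cycle, which is a simplification.

```python
import math

def compensated_duty(hours_used, initial_duty=0.6, alpha=1.8e-4):
    """PWM duty cycle needed to hold brightness at its initial level.

    alpha is a hypothetical decay constant (per hour) for the assumed model
    output(t) = exp(-alpha * t). Duty is clamped at 100%, after which the
    strip can no longer hold the target brightness.
    """
    remaining_output = math.exp(-alpha * hours_used)
    return min(1.0, initial_duty / remaining_output)

for hours in (0, 700, 2000, 4000):
    print(f"{hours:>5} h: duty = {compensated_duty(hours):.1%}")
```

Once the duty cycle saturates at 100%, brightness starts to fall, which is the more prominent end of life mentioned in the scenario above.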

As a side note, incandescent bulbs are no longer allowed to be sold as regular lighting options. Although they still exist (as ‘rough service’ bulbs, apparently, and remaining stock).

Comparisons against more polluting lighting solutions like CFLs would be interesting.

Nice work Bogdan, and ignore the usual HaD haters.

My friend Wolfgang (an analog designer) noticed the same thing a few years ago. What we found was that if the LEDs were driven via PWM instead of constant current, they lasted a lot longer. Might be worth a try. Thanks for the interesting post.

If those LEDs are standard 10-20 mA, then the strip is NOT 12 V… at least not with the 130 ohm resistor!

If I read correctly, it’s 3 LEDs in series; if the strip uses white LEDs, then the voltage is around 10-11 V, assuming they use the existing 130 ohm resistor.

Personally, for 12 V, I would use either:

1) 4 LEDs in series with a 100 ohm resistor (more efficiency)

-or-

2) the three in series with a 220 ohm resistor

if he changes his resistors to 220ohm -OR- uses PWM @ 20 years, and still have the same brightness

The strip is marketed as 12V. How exactly can you judge by looking at it? You are incorrect. Also, there’s no good way to run 4 white LEDs at 12V since they each require more than 3V at nominal current.

Each series of three leds + 130 ohm resistor draws 13mA, like I said before.

Great job,

Really, LEDs have a much longer life span in comparison with other light sources. They also do not take long to start up and help ensure maximum energy utilization.

Version 3 is complete and already running:

http://www.electrobob.com/led-logger-v3/