Some people know [Tom Murphy] as [Dr. Tom Murphy VII Ph.D.] and this hack makes it obvious that he earned those accolades. He decided to see if he could teach a computer to win at Super Mario Bros. But he went about it in a way that we’d bet is different that 99.9% of readers would first think of. The game doesn’t care about Mario, power-ups, or really even about enemies. It’s simply looking at the metrics which indicate you’re doing well at the game, namely score and world/level.

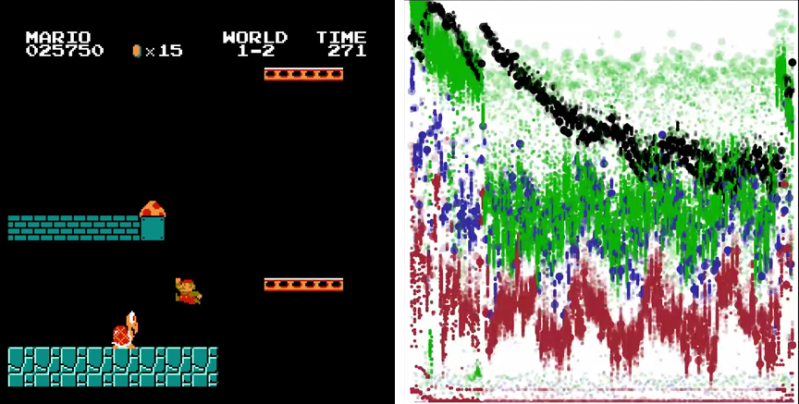

The link above includes his whitepaper, but we think you’ll want to watch the 16-minute video (after the break) before trying to tackle that. In the clip he explains the process in laymen’s terms which so far is the only part we really understand (hence the reference to voodoo in the title). His program uses heuristics to assemble a set of evolving controller inputs to drive the scores ever higher. In other words, instead of following in the footstep of Minesweeper solvers or Bejeweled Blitz bots which play as a human would by observing the game space, his software plays the game over and over, learning what combinations of controller inputs result in success and which do not. The image to the right is a graph of it’s learning progress. Makes total sense, huh?

[via Reddit]

Either you use Dr. or you use Ph.D.

You can’t use both at the same time.

NIce research otherwise, wonder how these kind of algorithms can/will be used in the future.

Depends on the country. In some Dr PhD is acceptable. In Germany you can even be a Dr. Dr. Dreamer

Yes, additional honorary Doctorates are allowed, but in that case you could also do Anonymous PhD PhD.

You can’t do both.

Not if you are also an M.D. =P

Umm, heard of humor? The “Tom Murphy VII” should have given it away.

Re: Dr. Dr.: Yep, and in Germany you would be addressed as Herr Doktor Doktor Dreamer.

This is a lot like other search problems in AI where we can’t exhaustively search the state tree, except the move results are handled by feeding the move to a ROM emulator. Cool to see it applied to emulated video games.

Tricky bits are the choice of ordering / scoring heuristic, and the fact that he’s using emulator state saving to let it compute several potential moves from each move state without having to repeatedly traverse all the states leading up to it.

this is sick lol

Cleaver and the paper has some funny bits”:

“On a scale of OMFG to WTFLOL I was like whaaaaaaat?”

[Picture of computer playing Tetris, about to lose.]

“Figure 16: Would you hire this construction company? Death is imminent, so playfun pauses the game shortly after this and then doesn’t unpause it.”

The only way to win is not to play.

lmfao!

Hello Joshua.

Found that really interesting :)

Interesting! His approach of looking at the overall size of the memory every 1/6th of a second reminds me of how physicists use energy methods to make complex problems relatively simple. I find it interesting that he only programmed it for one “play gradient”, and that it doesn’t set a gradient to play by from the example game it’s given as the first input.

The “Dr. vs PhD” comment is best left for the author of the paper instead of Hackaday as this is how “Tom” referred to himself … check the link for the 22 page paper.

Now THAT’s how you write a paper! Everyone… take notes!

It’s REAL paper for a FAKE conference, hence all the jokes.

Fascinating. Brings up the possibility of using VR with goal oriented machine learning. I’m starting to see that AI ultimately is not going to be a single program calling the shots. It’s going to be one of these programs in greedy mode, one in cautious mode. A danger interrupt program that does care about the aesthetics. Perhaps a cloud oriented language interpreter, etc. It will be more like a hive mind.

Marvin Minksy would agree with you: http://en.wikipedia.org/wiki/The_Society_of_Mind

OK, so from the perspective of a computer scientist, this was awesome to watch / read :) I congratulate you for the great method and the way you present it in. The fact that this works so often by using just analyzing RAM image sequences is remarkable. Imagine what would happen if you use some heuristics to basically validate your input before executing it. This will surely get unbeatable. A very rewarding experience, thanks to both Tom and HaD!

Anyone else thinking of ford prefect’s security robot buddy?

I’m surprised noone has tried that, though this is basically the same thing.

Awesome Hitchhiker’s reference!

And I agree.

It would be fascinating to see similar mathematics applied to 2 player games.

Also I think your program plays better than I do.

It would be interesting to have two systems play each other at a relatively simple game (checkers?) and let them go at each other for a couple of months, at the end they should both be world class players.

If theyre basically able to view raw ram and make decisions based off that in a fraction of a second, its basically a learning aim-bot of sorts.

Yes, Professor Falcon! Shall we play a game?………Strange game, the only winning move is not to play……LOL :-D

The interesing hack here is that he is scrapping the emulator ram contents of the game to grab the data to generate an heuristic where all the hard work is done, the greedy search used even if somehow works is not very good though

The CS188.1x course offered by BerkeleyX thru edX that is just ending now (seems that will be offered again soon along the second part) teach AI learning algorithms using a Pacman implementation in Python with much much more advanced AI methods like Q-Learning.

The course is really awesome and easy to follow btw, not obscure at all as the recomended book from S.Russell and P.Norvig Artificial Intelligence-A Modern Approach.

Gotta love that reference to WarGames! :)

That was great. The computer did learn.

HOW ABOUT A NICE GAME OF CHESS?

Keeping tetris paused and refusing to not loose points and get a game over is the best part. It’s like a kid that refuses defeat.

Cool bit of work for sure, but the way the guy addresses the audience really annoys me, could he not just cut the crap and get to the point rather than spending 6 minutes performing a routine?

Tom is a funny guy!

“I just like this graphic – it doesn’t matter what it means”.

All too frequent in modern papers. It’s called the Desktop Publishing effect. Back in the day (when people walked to school in the snow uphill both ways) graphics took real effort to make, and therefore were used sparingly in papers. Now that it’s just a few clicks from DB or SS to Graph, they’re everywhere.

Hard to say if that’s a good thing or not. It’s a picture is worth a thousand words versus hiding poor results in a pretty picture.

Relatively simple topic, awful explanation.

Explanation:

He played super mario bros and took 6 RAM snapshots per second and captured his key presses.

A program analysed the RAM to see which memory locations go up (and considered that making them go up is doing well).

Had a computer emulate the game along with key presses. Simulating 10-frames ahead for each move considered then picked the best input.

Is what I understood from the whitepaper.

And this takes an hour to generate 16 seconds of retarded mario playtime.

Really uninteresting….

Wow! life must look so boring through your eyes. You ignore so much with your explanation.

Paper dated 1 April 2013, OK… Anyway a fun video to watch, never read the paper through to the end, and it’s probably in the delete line up when I want to recover every MB of storage possible. Dr John Doe Ph.D my earn demerits in some circles, I wouldn’t know because that’s not my world.

It’s a joke conference and the object of the paper of the joke, but as far as I can see the method and result is real.

Brilliant end sequence in the video there. Hilarious!

I figure this approach is sensible where obstacles and enemies are predictable, which would be the case where you can’t afford the memory nor processing time for sensible randomness. Tetris, however, can be random with little effort, making it impossible to repeat a game on demand.

I think the tetras problem is optimizing towards score not toward filling a row. as filling a row partially doesn’t increase score the system doesn’t try to do that. That could be because of how the grid is stored in the RAM.

Yeah. I bet that without sufficient lookahead, and on the 1st level, the points gotten from clearing a single line (40 points) are insufficiently big in comparison to the points from soft drops (1 per line).

It’d be idly interesting to hack Tetris’s scoring to make the search better. Maybe something like “you get N points for filling in the Nth column of a given row, plus something much larger for clearing each row”

The way to get big points in Tetris is to form a pile, then wait for the 4×1 pieces, giving you 4 lines at once. You get a big bonus for that. The other objective is to stay in the game, which might require making smaller groups of lines, and clearing up anry garbage pieces you couldn’t fit in.

Tho organising your pile so you always have room for a new piece is important too. One bad-placed piece can send the whole thing spinning into oblivion as blockages pile up.

You could probably make the emulator provide the same seed to the PRNG.

Sounds like a very interesting piece, but youtube is so laggy with loading nowadays, I can’t watch :(

To explain the reason why Hudson puts their name in Adventure Island is to look up a game called Wonder Boy published by Sega. A thing to keep in mind is Wonder Boy came first. Surprisingly, this was done legally and more than once by the developer of Wonderboy.

Guess Wonderboy changed his name while skateboarding over to Nintendo from Sega ….

This is a great example of making intresting research that could be boring fun…

But will Twin Galaxies accept AI high scores?

Try this with “DIGGER” from Windmill Software. It will be good at this game i guess.

You guys noticed it was published on 1 April 2013, right?

I’m 100% sure no one read the paper ;) I was looking for something like reinforcement learning or something but instead I found footnotes like this : “possible additional simplication would be to just take lex-icographic orderings over bits, which then generalizes to 8-bitbytes. This is probably too crazy, but just right now I am sort of

feeling like maybe I should try it, though it may be the beer” :)

Nevertheless I’m having fun reading ;))))

Congratulations to the Dr. PhD, this one is a nice piece of work, both funny and interesting.

Wow, really amazing approach!

Hey! Let’s apply this to warfare! I wonder how many nukes we have to drop until we hit the target :p

I love this project. The video was a little bit off, but the work done is really impressive. Also source code is available (link in YT description to sourceforge) :D

“The only winning move is not to play”

Pause