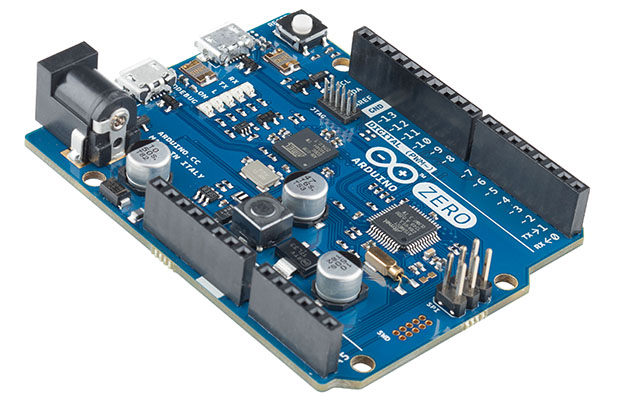

The Arduino Uno is the old standby of the Arduino world, with the Arduino Due picking up where the Mega left off. The Arduino Tre is a pretty cool piece of kit combining a Linux system with the Arduino pinout. Care to take a guess at what the next Arduino board will be called? The Arduino Zero, obviously.

The Arduino Zero uses an Atmel ARM Cortex-M0+ microcontroller with 256kB of Flash and 32kB of RAM. The board supports Atmel’s Embedded Debugger (EDBG), finally giving the smaller Arduino boards debugging support.

The chip powering the Zero features six communications modules, configurable as a UART, I2C, or SPI. USB device and host are also implemented on the chip, but there’s no word in the official announcement on whether USB host will be available. There are two USB connectors on the board, though.

The Arduino folk will be demoing the Zero at the Bay Area Maker Faire this weekend. Hackaday will have boots on the ground there, so we’ll try to get a more detailed report including pricing and availability then.

yay, this is great news. I mean, I do all sorts of hardcore embedded flippy bit 0x register garbage at work, but I always use arduino at home. It’s just so much more pleasant.

Will there ever be a version with standard 100mil spacing between pins 7 and 8? Perhaps they could have made a compromise between that and what they have now, one that accepts original shields as well as vero-board (albeit with a tight fit).

I’m guessing that’s a feature, not a bug.

It IS a feature

Arduino was designed for young school children

The offset makes it impossible to plug in a shield upside down

but there are MANY other ways to key a set of pins so that it’s reverse-proof.

using nonstandard spacing is just bizarre. no other word for it. well, maybe stupid. that also describes it, but I was trying to be kind.

From what I heard, the original Arduino had the weird offset by mistake and it caught on before they could correct it. They didn’t want to break backward compatibility for the shields.

Arduino COULD always put a second set of standard headers right next to the offset ones, but then you run the risk of people “not understanding”.

Yeah. I have an oddball TI chip breakout which has dual-row headers arranged in a box formation, but each set is one pin to the left or right of center, so it mates only one way. There are certainly better ways. LaunchPad went the opposite direction, with no key at all (save for the 3-pin power header). But board-to-board orientation usually makes it obvious, unless you have a board that is only as long as the headers.

Well… actually… designed for adult college students taking art and design.

But yes.. keyed makes it slightly more idiot proof.

It’s a bug that caught on.

It was a mistake in the final prototype, but by then it was a choice of delay shipping and waste a load of time and money fixing it, or just roll with it.

It does also provide the feature of not being able to plug one in backwards (and that’s what they put in the FAQ).

Don’t count on it. That design feature is truly grandfathered in, now, for that form factor. Fortunately, getting your own prototyping PCBs made to fit that pin pattern is fairly cheap, and while they’re not as cheap as vero-board, I’m fairly certain they can be had for pretty good prices from people that make them in large quantities.

they really should have gone .1 friendly.

there is just no excuse for that. no, not everyone wants to (or can) get PCBs made. being perf board friendly is VERY important and this mistake being made over and over really annoys those of us who want more freedom and lower entry requirements to play. .6″ spacing on dip style pkgs is also a wise thing, when you can find it. have the native chip be smd, fine; but please also allow a way for a dip24 or dip28 (etc) module for those of us who want the easiest and fastest way to prototype and try out new things.

Oh, get over it. Arduino Protoshields are affordable enough.

They should fix that when they move to a version with non-5V-tolerant I/O, like this one. See my comment below for the datasheet small print explaining why this board is not 5V tolerant.

The spacing is the biggest mistake the Arduino designers ever made.

Hmm, why use Atmel’s proprietary EDBG chip instead of implementing a proper SWD/JTAG interface that is actually supported by standard and open-source tools? The micro supports it; a simple SWD/JTAG bridge is a matter of adding something like an FTDI chip or another small micro.

With this setup, the only way to debug will be through the Windows-only Atmel Studio, or perhaps an “arduinoized” (read: crap) debugger in the Arduino IDE talking the EDBG protocol, instead of something like GDB …

Once the Arduinos moved away from the small ATMegas they are becoming less and less useful :(

These days, Arduino is essentially the brand applied to Atmel entry-level dev kits – designed by Atmel – aimed at the hobbyist market. It’s an improvement over the traditional dev kit model (costing hundreds and shipping with awful windows-only IDEs – looking at you, TI, IAR etc.) but do not confuse the new Arduinos with the grassroots effort that produced the Duemilanove.

I think with the introduction of the Galileo and the rest of the Arduino-branded boards, Arduino is moving to a licensing model. Pay them plenty of cash and you get to use the Arduino logos and colours, and in theory lots of people willing to use your overpriced, underpowered development boards.

“On-board programming and debugging through SWD, JTAG and PDI”

Looking around OpenOCD, I think it is already in the works: http://lists.nongnu.org/archive/html/avrdude-dev/2014-02/msg00034.html

Indeed, it’s a bad decision compared with mbed’s switch to CMSIS-DAP. I think the flexibility mbed brings (still offering the “friendly” IDE and “platform” of libraries, but also supporting any alternative setup based around the standard toolchain of [Editor]+GCC+GDB+OpenOCD) will make it a much more attractive choice than the Arduino platform for any board based around a Cortex-M. That is a surprising reversal of positions from when mbed started out, with its proprietary interface chip and single supported board, versus Arduino’s ability to run on anything cobbled together from any Atmel chip with a serial interface.

Maybe the ecosystem of shields allowed by a standard pinout (but diminished compatibility from a switch to 3V3, I assume) and existing momentum will allow Arduino to “win” anyway, since every mbed-compatible design has zero pinout compatibility with every other. But moving into the ARM era, this new Arduino direction just doesn’t appeal to anyone who wants to be able to “remix” the hardware design itself: it’s still locked into a single manufacturer’s set of components, with expensive support hardware required for programming, only now none of the competition is locked to a single manufacturer. And it doesn’t even bring anything to the table like their serial bootloader did for the ATMega designs; it’s a straight-up Atmel demo board using an embedded Atmel programmer.

I’m not sure how the EDBG works, I assume it emulates some other Atmel programmer at the protocol layer, and requires Atmel-proprietary drivers. If so, GDB would likely work through OpenOCD, if OpenOCD has support for whichever programmer it emulates.

Even if Arduino are using OpenOCD as both the debugger AND the flasher in the IDE, I suppose this is going to devastate the clone market. You know Atmel’s going to charge an arm and a leg for those EDBG chips (though Arduino no doubt have very favorable pricing offered to them), and there’s not going to be any other option if you want to make something plug-and-play compatible with the Arduino IDE now, especially if the drivers come into play. They could go with some other bridge that’s OpenOCD-compatible, but they’d have to have customers rewrite OpenOCD configuration files, which isn’t going to go over well. I’m sure Arduino would love to see some of the cloners die off, but I’m not sure the platform can stay as big and healthy as it is now if there aren’t as many alternatives available, including the dirt-cheap Chinese knockoffs.

If they really use standard SWD, what is to stop someone from putting something else in place of the EDBG chip?

Because SWD isn’t a protocol between a PC and a programmer/debugger, it’s the protocol between the programmer/debugger and the target chip. The EDBG chip will be using the SWD protocol to communicate with the SAM D21, but the IDE will be using the EDBG protocol to talk to the EDBG chip via USB.

But looking at Atmel’s documentation on the EDBG protocol, it sounds like the core of the EDBG protocol is ARM’s CMSIS-DAP standard with a few “vendor” commands layered on top. Arduino might just be treating it as a generic CMSIS-DAP interface/protocol after all, in which case it would work with absolutely any CMSIS-DAP interface that’s plugged in. That would be really great, and I’d love to see even more momentum for CMSIS-DAP.
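To make that layering a little more concrete, here is a hedged, host-side sketch of what CMSIS-DAP requests look like: each command is a short byte packet whose first byte is the command ID, carried in a USB HID report. It builds two standard requests, DAP_Info (0x00) asking for the packet size and DAP_Connect (0x02) selecting SWD, and checks whether an ID falls in the 0x80 to 0x9F range the specification reserves for vendor commands, which is where extensions like Atmel’s would sit. The 64-byte report size and the helper names are assumptions for illustration; ARM’s CMSIS-DAP documentation and Atmel’s EDBG user guide are the authorities.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <cstdint>

// Assumed HID report size; full-speed HID probes commonly use 64-byte reports.
constexpr std::size_t kReportSize = 64;
using Report = std::array<std::uint8_t, kReportSize>;

// Command IDs from the CMSIS-DAP specification.
constexpr std::uint8_t kDapInfo = 0x00;
constexpr std::uint8_t kDapConnect = 0x02;

// DAP_Info ID for "packet size" and the DAP_Connect port value for SWD.
constexpr std::uint8_t kInfoPacketSize = 0xFF;
constexpr std::uint8_t kPortSwd = 0x01;

// Hypothetical helper: a zero-padded request holding a command ID and one parameter byte.
Report makeRequest(std::uint8_t command, std::uint8_t param) {
    Report r{};  // value-initialised, so every unused byte is zero
    r[0] = command;
    r[1] = param;
    return r;
}

// The specification reserves 0x80..0x9F for vendor-specific commands.
bool isVendorCommand(std::uint8_t command) {
    return command >= 0x80 && command <= 0x9F;
}

// A response echoes the command ID in byte 0, so a mismatch signals trouble.
bool responseMatches(const Report& request, const Report& response) {
    return request[0] == response[0];
}
```

A host tool would send such a report to the probe’s HID interface (for example through the hidapi library) and read back the reply; that transport step is deliberately left out so the sketch runs anywhere.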

SWD/JTAG debugger/programmer support has been a stumbling block for people trying to get into ARM development with open-source toolchains. The choices in hardware have often required choosing to compromise on a solution that is either locked to a single manufacturer’s chips, doesn’t support your favorite OS, has license restrictions on non-commercial use, or is extremely expensive. Getting compatible drivers installed can sometimes be a huge problem, especially for Windows users if libusb is required, and then it can be difficult to configure even once it’s connected and working, with different options for every device and protocol, and some devices requiring different options for every MCU.

CMSIS-DAP has the advantage of being a single, standard, cross-vendor protocol based around (driverless) USB HID (though Windows users still need drivers if the device wants to include an additional serial bridge for UART monitoring, since Windows doesn’t support composite HID+serial devices). With the latest release of OpenOCD (v0.8.0), the tool most open-source toolchains are already built around, supporting CMSIS-DAP, there’s finally a free tool available (formerly Keil’s IDE was just about the only tool that supported the protocol), and more and more manufacturers are turning to it as the protocol for their development boards’ onboard programmers (which are often both cheap and have headers to work as generic external programmers). I think we’re finally going to get over this stumbling block for people who want to try out Cortex-M development. Now if only vendors would start providing GCC linker scripts and device headers for their MCUs…

That’s the thing… things are getting more open.

While Atmel’s EDBG chip is a hobby-unfriendly BGA, someone else might come up with an open debugger that uses standard SWD. Maybe even the ST Nucleo will work :))

You are indeed right about some of the reasons why ARM is not that hobby-friendly. A long time ago, when I first learned about ARM, I thought the micros would be similar and moving between vendors would be a breeze. Well, it is not. In fact, the only thing they have in common, the core, is one of the least relevant things due to the abstraction provided by the compiler. Everything else is a pain…

They’ve got some pretty stiff completion in the form of the STM Nucleo boards (less than £10 for a Cortex M4F with 512K of flash and Arduino pin outs, plus an integrated USB programmer/debugger)

s/completion/competition/ (blasted autocorrect)

Agreed

Thank god, finally an Arduino with Zero calories! How is the taste compared to Arduino Classic? Hey someone had to say something useless and damned if it wasn’t going to be me!

Didn’t you even read the article? The new Arduino Zero is root beer flavored, where the old one was more like cola. There’s just no comparison!

In other words, it is already rooted!

“useless and damned” you forgot “tasteless” (smile)

To me, the Teensy 3.1 is a much better alternative to this: much smaller footprint, Cortex-M4, 256kB flash, 64kB(!) RAM, 34 pins, faster clock, 13-bit ADC, built-in DAC, built-in RTC, all kinds of fancy peripherals, and even cheaper at $20.

but what about all mah overpriced shiiiiiields

Not to mention the uber crappy and sluggish Java-based “IDE”…

Teensy and its variants/clones win hands down in every field.

I like the Teensy too, but it does use exactly the same IDE, it’s not like the Teensyduino installer includes a native executable that installs over top of Arduino’s java one.

I mean, you could use an external setup for developing for the Teensy 3.x, and the Teensyduino installer provides the necessary files for compiling outside the IDE with gcc directly, but surely that’s going to be just as true of this Arduino Zero. If you compile with gcc in an IDE, it’s kind of hard not to include the files necessary for compiling with gcc.

Teensy 3.1 > Arduino 0

Agreed.

three is bigger than zero

Arduino is a monument to compromise, built on the dumbed-down Wiring API, the mediocrity of the Processing IDE and a nonsensical shield layout that refuses to die. Anybody who really wants to learn embedded systems should stay clear of it.

“Anybody who really wants to learn embedded systems”

Does anyone want to “learn embedded systems”? People want to get stuff done- not become an expert on antiquated and obtuse C APIs. I’m an embedded programmer and I’m annoyed by an industry that rejects abstraction out of some sort of ego.

Arduinos have a lot going for them: a more intuitive programming environment than most, existing shields that make most tasks almost no work at all, and, most of all, an expansive and friendly user community if anything goes wrong.

Some platforms have some of these features but even if another platform had all these features you would still have to do a lot of work to find it and make a decision. Arduinos cost more but you pay for convenience.

Who cares if the shield pinout is non standard? That only matters if you are building a shield on a breadboard and PCBs are cheap these days.

The extra abstraction layer is also rejected because of its (lack of) speed.

Which is irrelevant for 90% of hobbyist applications.

But people “who really want to learn about embedded systems” are often in the other 10%. Abstraction should not be at the pin level. It should be at the functional level, e.g. “turn alarm led on”, “copy nvdata to memory”, “read SPI ADC”, such that the implementation can be optimized by exploiting the hardware features.

Yes, there are people who want to “learn embedded systems.” The Arduinos are good for the hobbyist to get stuff done, but remember, someone has to know embedded systems well enough to create all that abstraction that makes it so simple.
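A minimal sketch of what a functional-level abstraction can look like, assuming a memory-mapped 8-bit output register (simulated here by a plain variable so it runs on a PC). The application only calls alarmLedOn(); that one function is the only place that knows the LED sits on a particular port bit, so it can use a single read-modify-write instead of a generic pin lookup. The register, the bit position and the function names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// Simulated output port register; on real hardware this would be the
// volatile memory-mapped register from the chip's device header.
std::uint8_t portB = 0;

// Hypothetical board wiring: alarm LED on bit 5 of port B.
constexpr std::uint8_t kAlarmLedMask = 1u << 5;

// Functional-level API: callers say what they want, not which pin to poke.
void alarmLedOn() { portB |= kAlarmLedMask; }
void alarmLedOff() { portB &= static_cast<std::uint8_t>(~kAlarmLedMask); }
bool alarmLedIsOn() { return (portB & kAlarmLedMask) != 0; }
```

Moving the LED to another pin, or to another chip, means rewriting these three functions rather than every call site.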

Yea, but what would the embedded programmers do with the rest of their time? Plus, going balls deep in datasheets helps you grow hair on your chest. And if you are a woman, it does the thing which is equivalent to that.

Attaching the name “Arduino” to something does not prevent the user from going balls deep into a datasheet. This is not closed source. Anyone working with ANY board with an ATMEGA, or an ARM in this case, should take the time to at least browse the chip datasheet. But people do that in their own time. Arduino delays or removes the need for it, but one should still do it.

By the way, I hope you went balls deep into that intel quad core cpu you are typing away on.. because… you know.. if you USE the chip, you should KNOW the chip.

A tired ego based argument. That is like saying “Anyone who REALLY wants to learn how to play baseball should just skip T-Ball or Junior leagues.”

You are not a true programmer unless you do Assembler.

Power users ONLY use linux.

And on and on and on.

There is a LOT of room to grow with arduino that most ‘proper engineers’ never take the time to learn about.

Let’s say you pick up an ATMega, make your own board, hook up a debugger and work with AVR-GCC… The only difference here is that ‘arduino’ adds an easy-to-use bootloader and a whole bunch of pre-written code and libraries. You don’t have to use any of it if you don’t want to. And in the Arduino IDE you can work down at the chip and register level if that is what you really want or need to do. AND you can always ditch the IDE and use the toolchain with a more PROFESSIONAL IDE. And you can always ditch the toolchain entirely and use something directly from Atmel, or PAY for a toolchain, and still use that handy bootloader.

You can use as much or as little of it as you like while still enjoying the benefits of the hardware (like just getting stuff done), and even deconstruct the hardware, learn from it and build your own.

This has more to do with experience than ego. Most people become religiously attached to the Arduino and its API and will not recognize that it’s a dumbed-down piece of crap. Then they wonder why repeatedly calling digitalWrite to toggle a pin is so slow. Or why they run out of RAM when they add more and more constant strings.
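Part of the digitalWrite cost is that it settles at run time what a sketch could settle at compile time. The following is a simplified, host-runnable model of the steps the AVR core goes through on each call (map the pin to a port and bit mask, turn off PWM if the pin is on a timer, then update the port with interrupts masked), next to the single register operation a direct write needs. The tables, pin numbers and the interrupt flag are simulated stand-ins, not the real core source.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Simulated output registers for three 8-bit ports (0 = "B", 1 = "C", 2 = "D").
std::array<std::uint8_t, 3> ports{};
bool interruptsEnabled = true;  // stand-in for the global interrupt flag

constexpr std::uint8_t kNumPins = 4;  // only four pins are modelled
constexpr std::uint8_t kNotOnTimer = 0xFF;

// Hypothetical pin tables; the real AVR core keeps similar tables in flash.
constexpr std::array<std::uint8_t, kNumPins> kPinToPort{2, 2, 0, 0};
constexpr std::array<std::uint8_t, kNumPins> kPinToMask{1u << 0, 1u << 1, 1u << 0, 1u << 5};
constexpr std::array<std::uint8_t, kNumPins> kPinToTimer{kNotOnTimer, kNotOnTimer, 1, kNotOnTimer};

void turnOffPwm(std::uint8_t /*timer*/) {
    // Would clear the timer's compare-output bits so the pin acts as plain I/O.
}

// Generic path: every call repeats the lookups, the PWM check and the
// interrupt masking before it finally touches the port.
void genericDigitalWrite(std::uint8_t pin, bool high) {
    assert(pin < kNumPins);
    const std::uint8_t timer = kPinToTimer[pin];
    const std::uint8_t mask = kPinToMask[pin];
    std::uint8_t& out = ports[kPinToPort[pin]];
    if (timer != kNotOnTimer) turnOffPwm(timer);
    const bool saved = interruptsEnabled;  // like saving SREG
    interruptsEnabled = false;             // like cli(): keep the read-modify-write atomic
    if (high) {
        out |= mask;
    } else {
        out &= static_cast<std::uint8_t>(~mask);
    }
    interruptsEnabled = saved;             // like restoring SREG
}

// Direct path: port and bit are fixed at compile time, so nothing is looked up.
void directSetPortBBit5() { ports[0] |= static_cast<std::uint8_t>(1u << 5); }
```

On a real AVR the direct form (for example `PORTB |= _BV(PORTB5);`) typically compiles to a single `sbi` instruction, which is why tight toggle loops tend to bypass the generic call.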

And for the people who do delve deeper…they wonder why they bothered with Arduino in the first place. Arduino tries its damndest to keep you from seeing the structures that comprise the microprocessor: Register interfaces, PIO ports, Timers/Counters, Comm peripherals and DMA transfers. These are the things that let you build what you truly want.

Case in point: Arduino has probably hindered DIY 3D Printer development more than it has helped. Just look at the evolution of the firmwares.

“Case in point: Arduino has probably hindered DIY 3D Printer development more than it has helped. Just look at the evolution of the firmwares.”

There is a chance that the internet will spawn an even more retarded comment this week, but the odds are lower than average..

@voxnulla

haha.. yeah… I really have no response to that. Whaaaaat?

Well, certainly I am against ‘religious attachment’ to any particular device. There are fanboys, and there are anti-fanboys… and then there are some very enlightened engineers (Dave Jones for example) who say that when project constraints demand it, you choose the processor and toolchain best suited to it, and when they don’t, you choose whichever you feel most comfortable with.

You can be religiously attached to a device, or religiously against a device, or you can realize that for every device there are potential use cases and potential users.

“… to build what you truly want.” Funny that.. considering that there are literally THOUSANDS, if not millions, of projects using nothing more than the dumbed-down Arduino hardware, its API and shitty IDE to make things that people truly wanted. Hey, if it really was nothing more than a semi-polished turd, then there wouldn’t be so many books written about it and clones would not be selling like hotcakes.

When beginners delve deeper, they usually find out the truth about working with other micros; that it can often take hours of frustration getting a new tool chain to compile correctly, hours to figure out all the shit you are doing wrong in all the setup files for a particular chip, and all the pain of debugging hardware, just to get a first simple blink application to work.

Anyone who has been around since before the platform knows that to be the first law of microcontrollers: “Thou shalt not be successful on thy first try.”

For those that dig deeper, they realize that the platform was a functional starting point for learning and getting up to speed FAST, as well as developing a whole lot of useful stuff before really having to dig deep and study hard. The abstraction got you up and running and gave you a lot of room to build amazing things. For most people and most projects, they really will NOT need to have deep chip level access.

If anything, your argument only holds true in that when people finally hit the wall with Arduino, they assume they have exceeded the capacity of the lowly AT chip and decide to jump to whatever the current trending ‘newer’ ‘faster’ ‘moar better’ chip is (usually something ARM or ST) before digging deeper, exploring and realizing they can squeeze more in by getting their hands dirty at the hardware level.

I hope they break out ALL the pins, nothing annoys me more than a pin connected to nothing! Even if it were just connected to a test pad that would be actually beneficial!

42 Pins and you can only use the Arduino Uno default pins?

If they brought out all the pins, you’d complain because it’s not hardware compatible

But since this is actually the only ARM Cortex development board on the whole planet, you would expect them to do everything all at once

Umm.. you mean besides the 804 options listed at Mouser?

http://www.mouser.com/Semiconductors/Engineering-Development-Tools/Embedded-Processor-Development-Tools/Development-Boards-Kits-ARM/_/N-8x1wl

They could have made an Arduino in between the “normal” and mega form factor. Call it, Arduino, smedium

I’d buy that. At least it isn’t that Starbucks cup size BS.

If you look at the pictures from the official site, it’s clear that the BGA is providing the debug gateway and the QFP is actually the application processor. Looks like more of the same where the comms processor is more powerful than the applications processor, ala UNO R3, etc.

Yeah the Kontron 6502 debugger unit from 1987 is a lot bigger and more expensive than a 6502

are you only just now spotting this trend?

The problem with ARM based Arduinos is that almost no library is going to work.

Most of them access AVR Registers directly because Arduino does not provide an efficient hardware abstraction layer.

E.g. I think they still have not fixed the digitalWrite function: https://code.google.com/p/arduino/issues/detail?id=140

I don’t think this is true. The toolchain is like an onion. Each layer abstracts to some level. The outer shell is the user’s application. The layer below is all the libraries the user and other users have created, and below that are various layers of abstraction.

Somewhere down there is the ‘arduino’ library which augments AVR-GCC. Provided that this library layer is written specifically for each micro, in such a way that each chip’s version of the library presents itself to the outside world in the same way, then any library which plays nice with this core will play nice with ANY core.

Your statement is only true of the core itself. Obviously switching to different CPUs will require an entirely new core control library to be written to perform all those functions. The key is consistent keyword naming conventions.

I would say ‘the vast majority’ of libraries will have absolutely no issues at all, as has already been proven by Maple and Energia (STM32 and TI arduino ports).

As a library writer, if you start bypassing everything that makes arduino arduino, and hit the chip directly, then obviously yes you will have problems.

But what makes arduino great is that only the porter has to deal with this, and only the one time. Theoretically, any chip with GCC support could be supported by arduino as well.. provided someone does the chip level abstraction. That is the essence and beauty of abstraction.
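When a library genuinely has to touch the hardware, one common way to keep that split is to confine the chip-specific code behind the architecture macros the Arduino build defines (ARDUINO_ARCH_AVR, ARDUINO_ARCH_SAMD and so on) and keep a fallback that only uses the core API. The sketch below assumes that layout; the enum, the function names and the timers mentioned in the comments are hypothetical. On a PC neither macro is defined, so it compiles to the portable branch.

```cpp
#include <cassert>
#include <string>

// Which implementation this hypothetical library was built with.
enum class Backend { AvrTimer1, SamdTimer, PortableFallback };

constexpr Backend selectedBackend() {
#if defined(ARDUINO_ARCH_AVR)
    return Backend::AvrTimer1;         // would program TCCR1A/TCCR1B/OCR1A directly
#elif defined(ARDUINO_ARCH_SAMD)
    return Backend::SamdTimer;         // would program one of the SAM D21 TC/TCC timers
#else
    return Backend::PortableFallback;  // only calls the core API, e.g. micros()
#endif
}

std::string backendName(Backend b) {
    switch (b) {
        case Backend::AvrTimer1:        return "avr-timer1";
        case Backend::SamdTimer:        return "samd-timer";
        case Backend::PortableFallback: return "portable";
    }
    return "unknown";
}
```

The design choice is that a port to a new chip adds one more branch instead of breaking the library outright; the fallback may be slower, but it still builds.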

Example of some things that won’t work: Servos. Audio. Both use the AtMega’s timers, configuring them directly. Actually, the servo lib may have been updated for the Due, but that’s the exception because it’s a core lib. The issue still exists with the audio library, and would exist with almost any other library besides the defaults that makes use of timers.
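The reason timer-based libraries break is that the same servo pulse turns into different numbers, in different registers, on each chip. A pulse of width t needs N = t × f_clk / prescaler timer ticks, so 1500 µs on a 16 MHz AVR with a prescaler of 8 is 3000 ticks of Timer1, while with an assumed 48 MHz timer clock and a prescaler of 16 on the SAM D21 it is 4500 ticks in a completely different peripheral. The helper below only does that arithmetic; it is not taken from the Servo library, and the SAM D21 clock setup is an assumption.

```cpp
#include <cassert>
#include <cstdint>

// Timer ticks for a pulse of pulseUs microseconds, given the timer's input
// clock in Hz and its prescaler. Integer maths, rounded down.
std::uint32_t pulseTicks(std::uint32_t pulseUs, std::uint32_t clockHz, std::uint32_t prescaler) {
    assert(prescaler > 0);
    const std::uint64_t tickHz = clockHz / prescaler;  // timer count rate
    return static_cast<std::uint32_t>(pulseUs * tickHz / 1000000u);
}
```

Both 20 ms servo periods (40000 and 60000 ticks) happen to fit a 16-bit counter, but the values, prescaler options and register layouts all differ, which is why a porting team rewrites such a library rather than just recompiling it.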

“As a library writer, if you start bypassing everything that makes arduino arduino, and hit the chip directly, then obviously yes you will have problems.”

So your counter to his argument that almost none of the libraries will work is that they don’t have to be written that way? Well they are.

“But what makes arduino great is that only the porter has to deal with this, and only the one time.”

Most Arduino users aren’t knowledgeable enough to even know where to begin doing this.

Sure.. ok I can see your point here. And here is a post on the 430 forum that somewhat corroborates your claim. http://forum.43oh.com/topic/5080-issues-with-servo-library/

But clearly, they HAVE a servo library, that ‘talks’ the same way as you would expect on an Arduino. And any library or sketch that someone writes that talks to the servo library would be portable. The Energia servo library may be very different under the hood, but you use it the same way.

Jitter or other problems are bugs that certainly need to be tracked down, but they do not invalidate my point.

Perhaps a clarification of what we are both saying is in order:

In claiming that “none of the libraries will work”, are we referring to just lifting Servo, or Wire or Serial from AVR and slapping it in a TI and expecting it to work?!?! Then sure, that is going to be a disaster.

“Libraries” is a rather encompassing term, and includes THOUSANDS of bits of code that work just fine.

My point was this: “If the servo library works properly on my new chip, and my library talks properly to servo, then my library will likely work properly on my new chip.”

And I would not expect the average arduino user to have to port the core libraries to the new chip. That falls under the port project for the chip (I.e. the Energia/Pinguino/Maple team or whatever). by core I mean:

EEPROM, LiquidCrystal, SD, Servo, Serial, SPI, and Wire libraries. These, and a few others such as basic interrupt and power management, I consider to be core.

These all are very different chip to chip under the hood. The port team writes these, and presents the libraries to your libraries and sketches with the same standard convention, such that your code is portable to it.

Your I2C sensor library talks to the Wire library. Wire handles the hardware. Provided you use the Wire library appropriate for your chip, your I2C sensor library is highly portable.
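A minimal sketch of that layering, assuming a small abstract bus interface that mirrors the shape of Wire’s beginTransmission/write/endTransmission/requestFrom/read calls. The interface, the sensor class, register 0x0F, address 0x1D and the fake bus are all hypothetical. The sensor code never touches hardware, so swapping the bus implementation is all a port needs, and a fake bus lets it run on a PC.

```cpp
#include <cassert>
#include <cstdint>
#include <map>

// Hypothetical bus interface modelled on the shape of Wire's API.
class I2cBus {
public:
    virtual ~I2cBus() = default;
    virtual void beginTransmission(std::uint8_t addr) = 0;
    virtual void write(std::uint8_t byte) = 0;
    virtual std::uint8_t endTransmission() = 0;  // 0 = success, nonzero = error
    virtual std::uint8_t requestFrom(std::uint8_t addr, std::uint8_t n) = 0;
    virtual int read() = 0;                      // -1 if no data is pending
};

// Portable sensor driver: it knows registers, not pins or peripherals.
class Sensor {
public:
    Sensor(I2cBus& bus, std::uint8_t addr) : bus_(bus), addr_(addr) {}
    int readRegister(std::uint8_t reg) {
        bus_.beginTransmission(addr_);
        bus_.write(reg);  // set the device's register pointer
        if (bus_.endTransmission() != 0) return -1;
        if (bus_.requestFrom(addr_, 1) != 1) return -1;
        return bus_.read();
    }
private:
    I2cBus& bus_;
    std::uint8_t addr_;
};

// Fake bus standing in for a chip-specific Wire implementation.
class FakeBus : public I2cBus {
public:
    std::map<std::uint8_t, std::uint8_t> regs;  // register -> value on one device
    std::uint8_t deviceAddr = 0x1D;
    void beginTransmission(std::uint8_t addr) override { target_ = addr; }
    void write(std::uint8_t byte) override { pointer_ = byte; }
    std::uint8_t endTransmission() override { return target_ == deviceAddr ? 0 : 2; }
    std::uint8_t requestFrom(std::uint8_t addr, std::uint8_t n) override {
        pending_ = (addr == deviceAddr) ? n : 0;
        return pending_;
    }
    int read() override {
        if (pending_ == 0) return -1;
        --pending_;
        auto it = regs.find(pointer_);
        return it == regs.end() ? 0xFF : it->second;
    }
private:
    std::uint8_t target_ = 0, pointer_ = 0, pending_ = 0;
};
```

On a board, the same Sensor class would be handed an adapter around that core’s Wire object instead of FakeBus.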

@scswift

I counter that “library” and “almost none” are incredibly broad, and contend that it is in fact the opposite. Some libraries won’t work well without tweaking (or, in the case of something like audio/servo, will require the porting team to write a new core library), but I still feel confident that most libraries users have written will work without a problem, or just need a nudge. There are LOTS of libraries out there that don’t touch the hardware, or do so only through core libraries.

In my experience with Energia, many libraries do indeed access AVR registers directly and will not function.

Assuming you are using Energia’s core libraries for the hardware (not lifting the AVR Wire library and slapping it on a TI) and your application’s libraries do not hit hardware directly, you should only need minor tweaks, such as pin routing and the occasional delay nudge, to get things working. Delay twiddling is often a problem on the I2C bus, where delays inserted into I2C device libraries to play nicely with the AVR Wire library/hardware are either not necessary or need to be adjusted on the TI. It’s not perfect, but it does not warrant “BeWaRe! Your libraries wont work! Dooooom!” Let’s call it a chance to practice troubleshooting and to learn something ;)

Last time I ported an accelerometer sensor lib to Energia, I had to rewrite the whole library using the Arduino one for reference, because the whole thing used the ATMega’s PROGMEM for just about everything… I can understand putting largish blobs into PROGMEM, but every single value defined in the code?!?
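One way to keep PROGMEM-heavy code building on other chips, instead of rewriting it, is a small compatibility shim: on AVR use the real avr/pgmspace.h, and elsewhere define PROGMEM away and make pgm_read_byte a plain dereference, since constants on ARM (or a PC) are readable in the normal address space. Arduino’s own ARM cores carry a comparable compatibility header; the block below is a minimal stand-alone version, and the lookup table in it is made up.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

#if defined(__AVR__)
#include <avr/pgmspace.h>  // real PROGMEM and pgm_read_byte on AVR
#else
// On ARM (and on a PC) constants are already readable in the normal address
// space, so PROGMEM can expand to nothing and a read is a plain dereference.
#define PROGMEM
#define pgm_read_byte(addr) (*reinterpret_cast<const std::uint8_t*>(addr))
#endif

// Made-up calibration table: large constant data is where PROGMEM pays off on AVR.
const std::uint8_t kCalibration[] PROGMEM = {12, 34, 56, 78};

std::uint8_t calibrationAt(std::size_t i) {
    assert(i < sizeof(kCalibration));
    return pgm_read_byte(&kCalibration[i]);
}
```

Because every access already goes through pgm_read_byte, the library code itself does not change; only the shim differs per architecture.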

“The toolchain is like an onion”

You peel off a layer, and cry some more…

Meanwhile the Parallax Propeller has had these features since about 2010, for the exorbitant price of ten bucks.

Welcome to 2014 where ST makes $10 development boards with floating point hardware.

I’m curious about this line “The chip powering the Zero features six communications modules, configurable as a UART, I2C, or SPI”. Does that mean I can run 6 I2C buses simultaneously? Is that something the teensy 3.1 can do?

Haven’t looked at the Arduino pinout yet, but yes, the D20/D21 chips have six serial peripherals (each unit can be configured for UART, SPI, -OR- I2C at any given time). There is a large amount of internal pin multiplexing available, so most of the serial peripherals can come out on 2 or more pins of the package depending on how you configure it. I2C is actually the most limited of the serial peripheral modes due to the requirement for I2C-capable pins (most of the serial pins are push/pull only).

TLDR: Maybe…

Yes, there are 2 micro-USB connectors onboard. And as far as I can see, one is with black plastic part and the other is with grey plastic part. That should indicate that one is micro-USB B (black), and the other one a micro-USB AB (grey). The micro-USB AB should be able to act as host.

So whatever happened to 5V tolerant? Did the Arduino community finally grow up like the rest and embrace 3.3V signaling?

Page 900 of the datasheet for the Atmel microcontroller (Atmel SAM D21E / SAM D21G / SAM D21J):

Under 35.2 Absolute Maximum Ratings:

VDD power supply voltage: 0 V (min), 3.63 V (max)

Pin voltage with respect to GND and VDD: GND-0.3V (min), VDD+0.3V (max)

When they state GND-0.3V (min) and VDD+0.3V (max), it basically means there are ESD protection diodes from the pins to the power rails, and you must not go beyond the rails by more than a margin for the diode drop. So when the part tells you it can be *damaged* when running at 3.63V with inputs exceeding 3.93V, it means it is not 5V tolerant.

*damaged* is what “Stresses beyond those listed in Table 35-1 may cause permanent damage to the device.” means…
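The arithmetic behind that conclusion, as a small check in integer millivolts: the pin window is GND - 0.3 V to VDD + 0.3 V, so even at the highest allowed supply (3.63 V) the ceiling is 3.93 V, and a 5 V signal exceeds it at any permitted VDD. The function name and the millivolt representation are just for illustration; the limits are the datasheet figures quoted above.

```cpp
#include <cassert>
#include <cstdint>

// Absolute maximum ratings from the SAM D21 datasheet, section 35.2.
constexpr std::int32_t kVddMaxMv = 3630;    // VDD max 3.63 V
constexpr std::int32_t kPinMarginMv = 300;  // pins: GND - 0.3 V .. VDD + 0.3 V

// True if a pin voltage stays inside the absolute maximum window for a given supply.
bool withinAbsMax(std::int32_t pinMv, std::int32_t vddMv) {
    assert(vddMv >= 0 && vddMv <= kVddMaxMv);  // the supply itself must be in range
    return pinMv >= -kPinMarginMv && pinMv <= vddMv + kPinMarginMv;
}
```

Keep in mind that absolute maximum ratings are damage limits, not operating conditions; logic thresholds are specified separately.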

The Arduino R3 pinout includes an IORef pin, which will be 5V on a 5V Arduino but 3.3V on a 3.3V Arduino. Any ICs on the shield should run at IORef.

“Did the Arduino community finally grow up like the rest and embrace 3.3V signaling?”

Apparently the Arduino Pro 3.3V is just a figment of someone’s imagination

I’ll save judgement until we get the full details of it, but I think it’s kinda neat to see what two companies can produce together — sort of allows you to “get into their heads” a bit.

I can see where this board will be handy for a great number of fellow developers, especially those doing low-voltage ARM stuff, but I don’t think I’ll personally be using one. I’ve got a stack of TI’s Stellaris and MSP430 boards sitting here (though the documentation is a bit…difficult? :), and the limited number of times I’ve needed them, they’ve worked out pretty well. Unless these Zero boards are $5 or less, I don’t see much reason for me to switch, but then again, I don’t need the Arduino IDE, either.

Energia is a fork of the Arduino IDE for TI’s Stellaris/Tiva, MSP430 and C2000 platforms anyway. I use it.

Yeah. I like it too. Most arduino libs work without too much tweaking (a few things here and there).

Also, Pinguino for the PIC18F2550 and a few others.

I foresee a future in which a great many chips/platforms will have some Wiring-style port.

I got a Due clone for 12 euros that has twice the Flash, twice the clock speed, twice the number of DAC channels. What do I need the Zero for?

Is it possible to use the Zero as a programmer for external SAMD IC-s? For burning bootloader, etc.

sorry this is an old thread, hope to get a reply…

does anyone know if the native usb port (using the zero as a device) allows one to exceed the data transfer rate that exists when using the basic serial connection with an established baud rate? I know usb 2.0 has a decently high theoretical transfer rate and am interested if this can be used as a more functional data acquisition unit now.

Thanks!!
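Some rough numbers for that question, hedged: with 8N1 framing each byte costs 10 bits on the wire, so a UART at 115200 baud tops out near 11.5 kB/s. The SAM D21’s USB is full-speed (12 Mbit/s), not the 480 Mbit/s high-speed mode often associated with USB 2.0, and full-speed bulk transfers allow at most 19 packets of 64 bytes per 1 ms frame, roughly 1.2 MB/s in theory. Real throughput depends on the host, the USB stack and how fast the sketch produces data, so treat these as upper bounds; the helper names are hypothetical.

```cpp
#include <cassert>
#include <cstdint>

// UART throughput in bytes/s: each byte is framed by start/stop bits
// (8N1 = 1 start + 8 data + 1 stop = 10 bits on the wire).
std::uint32_t uartBytesPerSecond(std::uint32_t baud, std::uint32_t bitsPerFrame = 10) {
    assert(bitsPerFrame > 0);
    return baud / bitsPerFrame;
}

// Theoretical ceiling for USB full-speed bulk transfers: a bounded number of
// max-size packets per 1 ms frame (19 x 64 bytes per the USB 2.0 tables).
std::uint32_t fullSpeedBulkBytesPerSecond(std::uint32_t packetsPerFrame = 19,
                                          std::uint32_t packetBytes = 64) {
    constexpr std::uint32_t kFramesPerSecond = 1000;  // one frame per millisecond
    return packetsPerFrame * packetBytes * kFramesPerSecond;
}
```

So in principle the native port has about two orders of magnitude more headroom than a 115200-baud serial link, provided the rest of the data path can keep up.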

First beta of an Arduino Zero integrated .ino gdb debugger available here http://www.visualmicro.com/post/2016/01/03/GDB-for-Arduino-INO-Initial-Beta-Notes.aspx