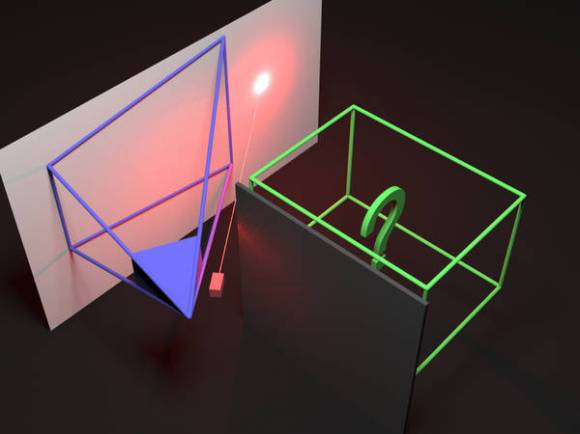

[Matthias] recently published a paper detailing how his group managed to reconstruct a hidden scene, using a wall as a mirror, with reasonably priced hardware. A modified time-of-flight camera (PMD CamBoard Nano) was used to measure precisely when short bursts of light came back to its sensor. In the picture shown above, blue represents the camera’s field of view. The green box is the 1.5 m × 1.5 m × 2.0 m scene of interest, and we’re quite sure you already know that the source of illumination, a laser, is shown in red.

As you can guess, the main challenge in this experiment was to figure out where the three-times-reflected light hitting the camera was coming from. Because the laser needed to be synchronized with the camera’s exposure cycle, part of the challenge was to crack the camera open and sniff the correct signals. Illumination conditions have limited impact on the achieved tolerance of ±15 cm.
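To get a feel for the timing involved, here is a minimal sketch of the three-bounce light path (laser, wall, hidden object, wall, camera). All coordinates are made-up values in metres, and the function names are purely illustrative; none of this is taken from the paper.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def path_length(points):
    """Sum of the straight-line segment lengths along a list of 3D points."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def time_of_flight(points):
    """Travel time in seconds for light following the given path."""
    return path_length(points) / C


# Hypothetical geometry (metres): the wall lies in the plane z = 2.
laser = (0.0, 0.0, 0.0)
wall_spot = (0.0, 0.0, 2.0)      # bounce 1: laser spot on the wall
hidden_point = (1.0, 0.0, 1.0)   # bounce 2: point on the hidden object
wall_patch = (0.5, 0.0, 2.0)     # bounce 3: wall patch seen by one pixel
camera = (0.2, 0.0, 0.0)

path = [laser, wall_spot, hidden_point, wall_patch, camera]
print(f"path length: {path_length(path):.3f} m")
print(f"time of flight: {time_of_flight(path) * 1e9:.2f} ns")
```

For scale, 15 cm of path length corresponds to roughly half a nanosecond of travel time, which is why knowing the exact timing of the illumination matters so much.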

So… How does it work? Any links?

https://www.youtube.com/watch?v=IyUgHPs86XM

explanation starts around 40 min

What Carmack explains there is the forward problem. We’re dealing with the inverse problem: Given an image formation model and the resulting image (measured data), what are the parameters of our model (geometry and reflectance)?
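The forward/inverse distinction can be illustrated with a toy example. The sketch below is not the method from the paper: it assumes a single hidden point, noise-free path-length measurements, and a brute-force search over a coarse grid. The names `forward` and `invert` and all coordinates are hypothetical.

```python
import math


def forward(hidden, wall_spot, wall_patches):
    """Forward problem: predict path lengths spot -> hidden point -> each wall patch."""
    return [math.dist(wall_spot, hidden) + math.dist(hidden, p) for p in wall_patches]


def invert(measured, wall_spot, wall_patches, candidates):
    """Inverse problem: find the candidate whose prediction best matches the data."""
    def cost(hidden):
        predicted = forward(hidden, wall_spot, wall_patches)
        return sum((m - p) ** 2 for m, p in zip(measured, predicted))
    return min(candidates, key=cost)


# Hypothetical setup (metres): the wall is the plane z = 0.
wall_spot = (0.0, 0.0, 0.0)
wall_patches = [(-1.0, 0.0, 0.0), (0.5, 0.0, 0.0), (1.0, 0.0, 0.0)]

# Simulate a "measurement" from a known hidden point, then try to recover it.
true_hidden = (0.3, 0.0, 1.2)
measured = forward(true_hidden, wall_spot, wall_patches)

# Candidate positions on a 10 cm grid in front of the wall (y fixed at 0).
candidates = [(i / 10, 0.0, j / 10) for i in range(-10, 11) for j in range(5, 21)]
print(invert(measured, wall_spot, wall_patches, candidates))
```

A real reconstruction has to estimate geometry and reflectance for a whole volume from noisy data, so an exhaustive search like this would not scale; the point is only that the inverse problem repeatedly evaluates the forward model and compares against the measurement.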

All the remaining details are in our paper, which can be obtained through the link provided above.

I’m all for featuring academic research on HAD, but I wish they would include a link to an ungated copy of the paper.

last link in the page i linked:

http://cg.cs.uni-bonn.de/en/publications/paper-details/HeideCVPR2014/

Thanks! I only saw the gated SIGGRAPH link initially. Nice work!

email the person and 99% of the time they will send you a pdf or link

Here’s a link to the paper. http://cg.cs.uni-bonn.de/en/publications/paper-details/HeideCVPR2014/

While I’m here, I might as well add a call to all hackers out there: PLEEEASE, someone get the Kinect One to operate on custom modulation frequencies and output raw phase images. (I’ve talked to a lot of folks at Microsoft – it is never going to happen officially) It would be so much nicer being able to use off-the-shelf, well-engineered hardware for this kind of work.

Microsoft should just release sources of windows xp, we could combine Linux kernel with XP performance and usability.

Hahahahahahahahahahahahahahahaha!!

Lol. I have no idea if this is serious or a joke but it’s funny either way.

That said, it’d be pretty cool if they’d release source for, say, Windows 95, just for goofs.

A hybrid OS was created it ate the internet and all inside.

Reminds me of http://newsoffice.mit.edu/2012/camera-sees-around-corners-0321

Here’s the tip I submitted to tips@hackaday.com. Apparently, not all information made it into the post.

Dear all,

Please allow me to toot my own horn, but this is a project I’m extremely proud of because it shows the power of multi-path analysis in combination with commodity range cameras.

You have certainly come across MIT and their fantastic “femto-photography” line of work, where they used the heaviest optical machinery to record videos of light in motion at picosecond time scale [1], then showed you can use this data to reconstruct objects outside the line of sight [2]. Sounds similar to Dual Photography way back in 2005 [3], except that here, neither light source nor camera can directly see the object – so there’s yet another level of indirection involved. So they deservedly got a lot of press for their work.

Anyway, we were just able to show that the same is possible using standard time-of-flight sensors for a couple hundred $ – in particular, we use a PMD CamBoard Nano [4] – essentially equivalent to an (unlocked) Kinect One. Last year we demonstrated the capture of light in flight using range imagers [5]. Now imagine staring at a wall using such a device, then being able to reconstruct what’s behind you. Our reconstruction is based on a generative model (a simulation of light in flight), whose parameters we tweak to learn more about hidden geometry and reflectance. We can reconstruct centimeter-scale detail from three-fold diffusely reflected light. We will present method and results this week at the CVPR 2014 conference [6].

Also, our solution involves a lot of hackery around the CamBoard. While we’ve gotten rid of the 50% melt glue that was our first camera prototype, we still drill into the sensor package (literally) to inject custom modulation signals and synchronize them to the CamBoard’s own exposure cycle [7]. All reflow soldering is done under a carefully adjusted cheese vapor atmosphere in our group kitchen’s toaster oven.

[1] http://web.media.mit.edu/~raskar/trillionfps/

[2] http://web.media.mit.edu/~raskar/cornar/

[3] http://graphics.stanford.edu/papers/dual_photography/

[4] http://www.pmdtec.com/products_services/reference_design.php

[5] http://www.cs.ubc.ca/labs/imager/tr/2013/TransientPMD/

also: http://hackaday.com/2013/05/18/filming-light

[6] http://cg.cs.uni-bonn.de/multipath

[7] Images attached: 0.5mm pitch LFCSP-56 chip manually placed and pizza-soldered to 4-layer signal generator board; CamBoard nano with modifications; historic hot-glue camera as used for our 2013 SIGGRAPH project

Cheers,

Matthias

apparently the new Kinect is coming to windows (sorry linux)

http://www.microsoft.com/en-us/kinectforwindows/Purchase/developer-sku.aspx

Yes, but no raw data and no custom settings. By the time you have a depth map, it’s already too late.

Just thinking… the stuff the new Kinect / these cameras do seems to rely on sending out a very brief pulse of light. I’m wondering how annoying or even noticeable that is to humans, and how much of a sensor problem room illumination is.

Cos I was thinking, room lighting pulses at 100 / 120Hz, would that be of any help? I.e. detecting the darkest part of the cycle, then syncing the sensor’s own light flashes with that, 100/120 times a second.

Actually if that does turn out to be useful it’ll only be as long as we have lights that flicker. Do LED light bulbs use capacitors to smooth out their DC?

Those are demodulation sensors, basically the equivalent to a lock-in amplifier per pixel. They are only sensitive to light that is modulated at the right frequency (typically 25-120 MHz) and not to ambient illumination. Also, infrared, so the RGB image doesn’t get polluted and us humans don’t get annoyed.
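The lock-in idea can be shown with a small simulation. This is a software sketch of the principle only, not how the sensor hardware is implemented: a weak modulated signal sits on top of a much stronger constant ambient level, and correlating with quadrature references at the modulation frequency recovers its amplitude and phase while the ambient part averages out. The 30 MHz modulation frequency, the sample rate, and the signal levels are assumed values.

```python
import math


def simulate_pixel(amplitude, phase, ambient, f_mod, f_sample, n):
    """Light at one pixel: constant ambient level plus a modulated return signal."""
    return [
        ambient + amplitude * math.cos(2.0 * math.pi * f_mod * k / f_sample - phase)
        for k in range(n)
    ]


def lock_in(samples, f_mod, f_sample):
    """Correlate with cos/sin references at f_mod; return (amplitude, phase).

    Assumes the samples span a whole number of modulation periods.
    """
    n = len(samples)
    i_sum = 0.0
    q_sum = 0.0
    for k, s in enumerate(samples):
        angle = 2.0 * math.pi * f_mod * k / f_sample
        i_sum += s * math.cos(angle)   # in-phase component
        q_sum += s * math.sin(angle)   # quadrature component
    i_avg = 2.0 * i_sum / n
    q_avg = 2.0 * q_sum / n
    return math.hypot(i_avg, q_avg), math.atan2(q_avg, i_avg)


# Assumed values: 30 MHz modulation, 8 samples per period, 100 periods,
# ambient light 250 times stronger than the modulated return.
samples = simulate_pixel(0.2, 1.0, 50.0, 30e6, 240e6, 800)
amplitude, phase = lock_in(samples, 30e6, 240e6)
print(f"amplitude: {amplitude:.4f}, phase: {phase:.4f} rad")
```

The recovered phase is what carries the travel-time information; the constant ambient term contributes nothing to either correlation sum.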

Ah! Sensible! Thanks.

Wonderful project.

Reminds me of that TNG episode where they reconstruct someone from the scene’s shadows.

Could something like this also be done with one of those light-field lenses?