Like some of our grouchier readers, [PodeCoet]’s Digital Sub-Systems professor loathes everyone strapping an Arduino onto a project when something less powerful and ten times as complicated will do. One student asked if they could just replace the whole breadboarded “up counter” circuit mess with an Arduino but, since the class is centered around basic logic gates, the prof shot him down. Undeterred, our troll smuggled an MCU into a chip and used it to spell out crude messages.

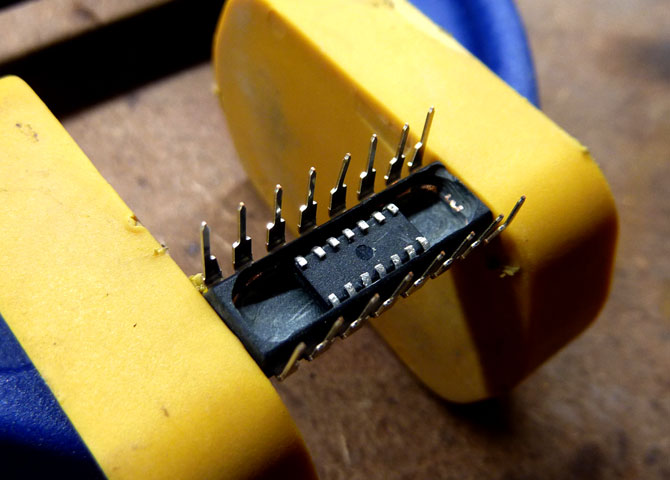

No Arduino? No problem. It took him 4 tries, but [PodeCoet] hollowed out the SN74LS47N display driver from the required circuit and made it the puppet of a PIC16F1503 controller. The PIC emulates the driver chip in every way, showing the count up and down as ordered, except when left unattended for 15 seconds. Then, instead of digits, the PIC writes out “HELLO”, followed by three things normally covered by swimsuits and lastly a bodily function.
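As a rough illustration of that behaviour, here is a minimal Python sketch. It is not [PodeCoet]’s firmware (which runs on the PIC and is not reproduced here), just a model under stated assumptions: “unattended” is taken to mean the BCD input has not changed for 15 seconds, the message advances one letter per second, and only the “HELLO” part is included. The names `ImpostorDriver`, `SEGMENTS`, `IDLE_SECONDS` and `MESSAGE` are invented for the example.

```python
# Lit segments (a-g) for each symbol. Hypothetical lookup table for illustration;
# digits follow the usual seven-segment shapes, with tail-less 6 and 9.
SEGMENTS = {
    0: "abcdef", 1: "bc", 2: "abdeg", 3: "abcdg", 4: "bcfg",
    5: "acdfg", 6: "cdefg", 7: "abc", 8: "abcdefg", 9: "abcfg",
    "H": "bcefg", "E": "adefg", "L": "def", "O": "abcdef",
}

IDLE_SECONDS = 15  # timeout mentioned in the write-up
MESSAGE = "HELLO"  # assumed: one letter shown per second once idle


class ImpostorDriver:
    """Acts like a BCD-to-7-segment decoder until it has been idle too long."""

    def __init__(self):
        self.last_bcd = None
        self.last_change = 0.0

    def output(self, bcd, now):
        """Return the lit segments for input `bcd` at time `now` (in seconds)."""
        if bcd != self.last_bcd:
            # The input changed, so someone is using the counter: reset the timer.
            self.last_bcd = bcd
            self.last_change = now
        idle = now - self.last_change
        if idle < IDLE_SECONDS:
            return SEGMENTS[bcd]  # ordinary decoder behaviour
        # Idle long enough: step through the message instead of the digit.
        step = int(idle - IDLE_SECONDS) % len(MESSAGE)
        return SEGMENTS[MESSAGE[step]]
```

Calling `output()` with the same digit at increasing timestamps returns the digit’s segments until the timeout, then the letters in turn; any change of input drops it straight back to decoder behaviour.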

For such a simple hack it is wonderfully and humorously documented. There are annotated progress/failure pictures and video of the hack working.

It is not as elaborate as the microscopic deception in the infamously impossible 3 LED circuit, but it gets to the point sooner.

How great is that!

At the very least it is damn funny :)

I’d really like to see the look on that professor’s face!

The author mentions it’s basically an Electronics 101 class and most of the students are way beyond the material. I suppose it’s possible to leapfrog necessary material, but, seems the Prof is a bit curmudgeony about microcontrollers even if they weren’t the wrong solution for this particular class. It’s maybe why the college stuck him with the intro class :p

It’s unbelievable. When I was a student of electrical engineering in Germany 25 years ago, it was already the same spirit. To have a “valuable” solution, one had to have a baking-tray-sized TTL or CMOS logic circuit. Those who used microcontrollers had already been stigmatized as wimps … I thought, in times of Arduino, Teensy, Raspi etc., these prejudices were behind us … OK, there is nothing wrong with learning the basics of logic circuits, but later, when finding solutions, the fastest, cheapest and easiest way (of course at least at the same quality level) will be the solution.

Sure, but could he do it with logic gates? Cool hack but I hope the prof held him to the assignment.

Why would he not? The implementation works, even if you replace the hacked display driver with an original.

Yup.

And to be fair, this project was probably beneath a lot of the students. One of those tedious box-checking things just to prove they can do it, when everyone knows they can. Who couldn’t design a simple counter circuit with some 74-series logic?

It can be very boring when you’re “learning” something you already know, just because you don’t have the paperwork to prove it. And this was a pretty good hack.

Might get some creativity bonus points, but nonetheless he fails to follow the specification (depending on how it was written).

There are just so many things other than just making a circuit work in engineering. If he were out in the real world, sometimes there are business reasons not to use a certain part – or they simply don’t have the resources to hire a programmer, so it has to be done in hardware. Other reasons could be lead time issues with a supplier, an unqualified supplier, a part not in the preferred part list (qualification, operating range), a part being too expensive, etc.

Not enough resources to hire a programmer, seriously? Anyone who can hard wire logic using electrical components should surely be able to learn how to write some code in a language just as well as any “hired programmer”. I don’t see how anyone today can rightfully call themselves an engineer if they’re not comfortable with code and learning new programming languages. There’s really no modern engineering problem you shouldn’t make a computer do for you if it can.

Exactly, in the same way any programmer should be able to put together circuits. Can’t hire an engineer? No problem, just hand the responsibility of hardware design over to the guy writing kernel code. Because, really, what’s the difference between a CS degree and an EE degree anyways?

yes the people who write SQL parsers need to know about MOSFET leakage currents because it affects their jobs directly

At the local uni here it’s about one semester.

Does a CS guy have the math, electrical background, and know how to design and build Analog, RF, Antenna, Communication, Control System, design chips, transmission lines, high speed digital design? Do CS work with manufacturing, purchasing, chips vendors, mechanical design, thermal design, compliance testing?

Yes, I think programmers should at least understand the basic digital circuit and know how their microcontroller/processor works at the lowest level.

yeah we need all of those things when we worry about database integrity and correct compilers

NOT

From the comments, you guys have not worked in a corporate environment. Working in the real world is a bit different than working at school or on your own projects. Just being able to write some code does not mean writing code that meets company software standards or follows process.

So when I said resources, it is not just salary/benefits – HR head count limits for a project (yes, there is a cap for that), desk space, infrastructure. Not a case of just piling on a warm body.

It is not just drawing schematics: the EE manages project/schedule, interfacing with other groups/teams, working with supply chain, the factory testing team, safety/EMC. It is not just talking to your own little group like software. BTW, typically there is a 1:10 ratio (give or take) for EE vs CS people, so there is no lack of work to be done.

Yes, you can double up if you actually have the time to do stuff outside of EE at work *without* affecting your ability to meet schedule.

And don’t forget regulated industries (medical, aerospace) or any company under ISO 9000.

true that, i’m not an engineer, but i am an electronics technician, and with all due respect to all “software engineers”, most of the time they come to us, the technicians, for everything. dude, i work in the industry, and there hasn’t been a single time when all 3 “hired programmers” of our company haven’t come to me to get something to work,

damn, they even ended up assigning projects to me because i can design, prototype, test and build with “programming included” because i do use MCU’s on most of my projects, so i am actually saving them tons of money by doing everything they need, i’m telling you guys, multi-specialization is the future, the more skills the better, don’t settle on trivial careers doing just “one thing”

It’s like the difference between using a calculator and doing math by hand. It’s easier with a calculator, but if you don’t know how to add yet, you need to do it by hand first. This is what we call “learning.”

Besides, the firmware is just an example. Don’t get so hung up on that, as you haven’t had any answers for the other cases.

But I have been told on some projects that the software team is falling behind schedule: make something that they don’t need to write code for. Oh BTW, our coders work in Linux, DSP or Power PC, so they might not have the environment or debuggers, or want to spend time on some 8-bit microcontrollers. Those software guys really hate to be in the lab or working on different architectures.

Not necessarily that they, “hate to work on other architectures.” It’s more of the fact that if all you have is a hammer, everything starts to look like a nail. Then it’s perceived that learning a new language would take too long or too much work.

I used VB4 & 5 for years before I decided to expand due to job pressures.

While there’s truth to that aphorism, it’s also true that if all you have is a hammer, all you can do is hammer (and stuff that doesn’t require tools :V ).

Where I work, not everyone has full admin privileges on their computer. This includes some developers. If you’re not one of the ones with privs and don’t have a manager with an “in” to the shot-callers, they have to get official approval for anything they install. If the IT department decides it’s time to wag the dog, it can indeed dictate how the devs design their software. Or, if you’re on a contract where the client requires you use particular software, completely stop you from working while you work your way up IT’s chain of command until it ends with your VP and his VP exchanging cannon-fire, possibly literally.

Sidenote: it’s fun to try to guess which commenters have worked outside of academia, and in what sector. Every time someone says, about any kind of professional work, “just use a different programming language/environment/toolset” I giggle.

The implementation appears to be pin-for-pin compatible with the original logic chip – so I guess the answer would be yes

As others have pointed out he completed the actual logic gate portion of the assignment, the only thing that was changed out was the display driver. Which was just responding to the logic gate inputs and the Arduino replacement was set up to interact exactly like the original display driver was. So yes they effectively completed the assignment exactly as directed but then added their own cheeky little spin on it.

Two corrections since my brain instantly goes to Arduino and apparently it was a PIC16F1503 and I also said ‘he’ when I don’t think either article specifies so it would be more accurate to say ‘they’ instead.

Now, try smuggling an arduino into a project for your employer. I don’t think it will go over quite as well.

Especially if you are mixing some open source code into a closed sourced project. That kind of thing can get very messy legally.

Depends on the company, and depends on the kind of open source involved. The Arduino runtime is LGPL, and most of the bundled libraries are as well (with the exception of the SD library IIRC). So long as the company is willing to honor the terms of the LGPL for the runtime code (your app can be non-open), then it shouldn’t pose a problem.

Before I struck out on my own, the previous three companies I worked for had no problems with MIT, BSD, or LGPL code being used in a project so long as it was properly disclosed. It isn’t that unusual these days.

Read what I stressed: *closed sourced project* What if the company licenses a library or signed an NDA with its chip vendors to get the chip specs, or if the company is in certain industries, e.g. defense? Not everything is open sourced in the real world. It is less of a problem, but still an issue with the half a dozen incompatible open sourced licenses. The last thing you want is to introduce another one into the mix.

The other thing to consider is that if it was specifically written to not use an Arduino for whatever reason and was agreed in a meeting with everybody, you really do not have a say in that. The class is one such situation. Do you quit your job and go somewhere else?

And you will notice I mentioned only licences that were compatible with closed source projects and even mentioned that. Also none of the ones I mentioned are incompatible.

Nor is the military a stranger to open source, both using it, and contributing to it.

oops. I did miss the licenses you mentioned with more freedom with closed source.

Defense Industry is not necessarily the military. They are the companies that make & sell military hardware, hence the industry part.

Some part of the code might deal with military capabilities – how much the radar/satellite/sonar can see, that type of stuff, or what they are looking for or how they react – all classified stuff. Kind of hard if your work requires security clearance for national security reasons and you want/have to release the source code because you have tainted the code.

There are also company trade secrets – you are talking about companies that make big money with fat government contracts and overseas business. Not like they want their competitors or unfriendly nations to copy their stuff. The last thing you want is for the control firmware of a fighter jet or a missile guidance system to fall into the wrong hands.

Don’t need to, we use Atmel chips all the time in products going to full production. Arduinos are in a lot of products. Just like Linux is in every single home. (All Blu-ray players and large LCD TVs run Linux.)

and almost every single wireless router/access point/switch/modem, every android device ……

almost every router, except for MOST of them that are running Cisco’s proprietary OS

Sure, every ISP just gives you $1000 Cisco instead of $15 D-Link or something like that. I heard that porn looks better when you download it using Cisco router.

Are you talking about VxWorks or Cisco’s IOS/NX-OS? You see VxWorks on a lot of consumer level routers because it can do the job with about half the RAM of Linux, but Cisco does not own VxWorks.

Cisco’s enterprise and ISP routers run IOS or NX-OS, which are a different story.

gosh because this situation is just like that one

Why is he pressing the pushbuttons with a Sharpie?

Because he didn’t cut his finger nails.

The prof is, I suspect, saying students “can’t use an Arduino” for the same reason primary school kids aren’t allowed calculators – doing it the hard way facilitates the learning process.

Nice hack though.

I agree. When I was a marksmanship instructor a lot of my work was in teaching the very basic skills of marksmanship. Steady position, point of aim, breath control and trigger squeeze. Once a student masters those four fundamentals, shooting is very easy no matter what you are using. If a student insisted on using laser dot pointers and a scope he could probably do alright with no training at all. He’d also lack the ability to pick up any weapon and use it with no prior knowledge. The basic skills of any job are meant to equip a person so they can follow an employer’s, and customer’s, demands. Customers may have excellent reasons to demand purpose built electronics and the professor is getting his students ready for that reality.

“to equip a person so they can follow an employer’s, and customer’s, demands.”

Yeah this is some sort of joke remark from someone who lives in their parents’ basement. Customers don’t demand specific implementations, they give specifications and by contract will accept ANY implementation that meets their specifications.

You must have perfect customers, if any. Half of my time is spent trying to gently persuade customers that there is a difference between solutions and specs. Most of them think they are engineers and want to tell me how to solve the problem. I spend a lot of time trying to figure out what they expect the outcome to be.

Or maybe he said the students “can’t use an Arduino” because “the class is centered around basic logic gates”.

@dana correct, the point isn’t to ‘use’ basic logic gates rather than an arduino, it is to ‘learn how to use’ basic logic gates.

Which he showed his understanding of the 74 series component very well…

Implemented a functional clone of the 74 logic in the PIC – as well as some extra functionality.

This is awesome. I think that it still holds true to the assignment. He just made a custom driver chip.

I’m one of those grouchier readers, but using a battleship to kill a mosquito? This is awesome!

Full marks. He completed the circuit and learned in the process.

Right? Reminds me of skilled forklift operators showing off by flipping a penny off the ground onto the fork.

If I were the professor I would have given him full marks for the project and extra credit for the extra “features”. I guess it’s a good thing I’m not a professor!

I have two words: Kobayashi Maru.

So you’re saying that if a situation is not winnable, change the rules?

The only winning move is not to play…

That particular lesson was about accepting/coping with losses in an unwinnable situation and not about winning. By cheating his way out of it, Kirk didn’t learn the lesson in a controlled environment, making it so much more difficult in a real situation.

the lesson from this TV show is to think outside the box

tell us about how kirk failed to learn his lesson in class and was promptly killed in the next episode because he didn’t learn his lesson

it looks like this is a case of the instructor being FTDI’d!

(or, something like that).

It’s nice someone is defending our right to not learn anything and use the laziest methodology possible.. The professor doesn’t want his name associated with incompetence maybe?

You think the student was lazy? He completed the assigned project but then went above and beyond and made a custom disguised chip that emulates the logic gates required.

No the initial premise was lazy. Arduinos don’t belong in a logic course. This student was just being a smartass but the initial premise that the logic design course shouldn’t include MCUs still stands.

“the initial premise that the logic design course shouldn’t include MCUs still stands.”

Did you know that some human beings are capable of holding more than one thought in their head? Did you know that the appreciation of digital logic can happen in an environment where the reality of processors is actually acknowledged? Did you know that processors are built up from digital logic and make fine examples of the application of digital logic?

apparently not

“Like some of our grouchier readers, [PodeCoet]’s Digital Sub-Systems professor loathes everyone strapping an Arduino onto a project when something less powerful and ten times as complicated will do.”

Being a grouchier reader, it’s more accurate to say I loathe seeing full-blown development boards so often used in permanent projects that do not require them. When it could have been easily built with ten times less cost, unused components, and board real estate; because little more was actually needed than the MCU chip. And when a tiny little peripheral chip is put onto a mostly empty 2.7″ x 2.1″ shield with 32 pins of stacking headers, because using four wires to connect power and I2C to a more sensible board is too intimidating. None of this is unique to Arduino, but they are what popularized such nonsense.

As for this project, nice work and photo documentation on the impostor chip!

To be fair sometimes it is just more cost effective to leave the development board in a project especially if it is going to be a one off than it would be to design and source a board just for that project. Is it lazy? probably… OK yeah and I’m not saying that is optimal by any means just that I understand why it happens. :P

For qty 1 projects, Arduinos are usually cheaper than rolling a PCB, especially if your time isn’t free.

That’s why I use Teensys, almost no board wasted. I just used them in a test fixture design that we will be shipping off to the assembly house.

Very funny, gave me a good laugh, Thank You.

That is awesome, and should prove beyond a shadow of a doubt that this student understands the original chip and is probably very far ahead of anyone in his class.

Excellent ^^

excellent hack, this is the kind of out of the box thinking that schools should encourage but he should still do the assignment as required

Hmmm… so I am one of your grouchy readers… let me explain why using an Arduino “to make a LED blink” is a no-go for most of my projects: it’s boring and it’s overkill. There are a gazillion ways of achieving the same effect with a conventional approach – all of them with their own challenges and limitations and therefore, for me as a hobbyist, way more interesting, as the challenges are the reason why I do my projects in the first place: this is how I improve my skills.

At work, I sit between 2 RP-machines and a CNC mill and I use them A LOT. But when I see what hobbyists make with 3D printers at home, it makes me cringe: a piece of tube, a project box … mostly stuff anyone can make with a hacksaw, a drill press and a lathe – don’t have a lathe? Improvise one with an electric drill and a file – it works amazingly well. That’s what HACKING is all about: when you KNOW how to make things, 2 transistors and a few passive components are a simple and elegant way of making an LED blink; when you DON’T know how to use tools or how to improvise, you need an Arduino / 3D printer for every little task. Yes, problem solved, but nothing learned in the process (and boring too).

because people do things their own way and refuse to follow your examples of living life exactly, they are doing it wrong. gotta love that logic.

yes the appropriate utilization of prototype board space in an academic environment is more important than the questions of whether or not the students are actually absorbing the material being presented

I respect your viewpoint, but can I share why I personally disagree with it? Its the weekend, I have a cute idea for a project. I look around and I am out of 555s, should I then stop everything and wait for a new shipment to arrive? Or should I just use what I have to hand? Because I am always going to pick the latter.

If it isn’t a commercial project, who cares if it is overkill. I care about the result and not how I got there.

Then there is this: in my commercial work, the vast majority of my projects have a microcontroller (or microprocessor) in them. It is very rare indeed for a client to contact me with a project that doesn’t require one. So I don’t keep a lot of things like 555s on hand; because I have the microcontroller to hand, it is easier and cheaper to use that than do it the “right” way. I am not going to add components to a design just for some abstract notion of correctness.

Since you expressed your reasons as a personal preference, this next bit isn’t really addressed toward you. I don’t have a lot of time for people who want to tell others they are having fun the wrong way. Did they do what they wanted? Is it cool? Does it fit into the broad definition of a Hack? Then bully for them.

I like the effort this smart kid A+

I like the out-of-the-box thinking, though. The target of the lab was to build this from discrete components. This basic knowledge is so damn important that I don’t like the idea of cheating around it. If I had been the Prof… Well, it depends on the level of trust and the theoretical skills of the student. If I know he is good with the theory and was just bored, fine. Otherwise it’s an attempt to cheat… Therefore failed lab. See you next time.

If you check the schematic and pin map files, it appears as though the new chip / microcontroller / impostor is pin-for-pin compatible with the discrete logic chip, so the circuit will behave the same way if you were to replace the microcontroller with a logic chip

That combined with the comedic tone of the article, I think the guy/girl knows exactly how the circuit and chips work and just wanted to torment the teacher

Reminds me of a book I read as a kid, Danny Dunn and the Homework Machine. The main character wants to get out of doing homework, so he programs a supercomputer to do it for him. Of course in the process he ends up doing far more work and learning more than he would have just doing the original assignments. As many have pointed out, there are sound didactic reasons for requiring the use of logic gates for the exercise. But clearly the student understands their use now even better than his classmates (since he emulated the chip). So it’s really a win all the way around – the professor got the student to understand how to use basic logic gates, and the student pulled off a great hack.

I remember when one of the assignments in English class was a “word pyramid” where you typed the first letter of the word, then two on the next line, then three and so on until the whole word is typed. (Yes, it was as dumb as it sounds.) I wrote a simple program in LabVIEW (my dad had a copy) to automate the process of making the word pyramids. I actually learned something and ended up saving time.

YMMV, but it’s generally a good idea to also show you know how to do what is expected of you, as directed, in parallel with the fun and games.

As this guy did. He completely emulated the chip in question with the Pic microcontroller, and used it to add functionality.

I agree. I think the student captured one of the early selling points of small microcontrollers: “logic replacement”. He replaced and augmented functionality. I teach at a community college (stcc.edu) and I’d have given the student encouragement, an “A” grade, and also mentioned a few reasons why specs are important. We need to fuel these characters; after all, weren’t we those students so (many) years ago? So much creativity! Embrace it. They’ll be plenty of wet blankets ahead for these students. They need a little fire-in-the-belly for engineering.

oops, I meant “there’ll be”

A true arduino hack

Except that he was using a Pic ;)

Yes, he programmed a PIC (NOT AN F*ING ARDUINO BTW) to do more than the original chip. Bravo.

However, the prof has a point. There are still shitloads of 40/74 series logic chips in the world and there are a lot of instances where YOU CAN NOT USE MICROS in a circuit. In my profession, I would love to use a simple micro, FPGA or CPLD a lot of the time; it would cut down on space and power consumption and everything could be done in software, but I can’t because of reasons (specs) that are obviously going to be way above just about all of your heads.

When I was in school there were courses on micros and programmable logic. The student should have messed with this crap there. Period. I would have flunked his ass because while he gets the concepts I’m sure, he doesn’t follow instruction very well and is a smart ass and a cheater, things that don’t get 99.9% of people very far in the work force. Something tells me he is not the next Steve Jobs. So he would do well to lose the attitude. Better for him to find that out now than later. I would have encouraged him to take the appropriate course for micros or programmable logic.

Thinking outside the box is wonderful, when it’s asked for. This is a class with a specific format. He was told what was expected and he took it upon himself to be an ass about it. There is a time and a place for micros, this wasn’t it. “F”

There are a lot of things in this world that are “critical” and they have to work 100% of the time and programmable logic and micros are not reliable or robust enough for very important reasons.

It’s a lot like wire wrap: people see a rat’s nest of crazy wires that “have to be unreliable”, but it’s done for a reason, because PCBs crack and fail when things go boom… wire wrap can flex and withstand things PCBs cannot. It’s not because the designer was being sadomasochistic. It’s because the thing just may have to survive more than being carried in skinny jeans..

People say “tubes are dead, solid state has replaced all tubes”… Except tubes can operate at insane amounts of power at frequencies solid state can’t even dream of operating at. And when you need a transmitter on your satellite in the harshness of space and the temperature extremes and radiation, what do they use 10 times out of 10? Tubes, which degrade gracefully after decades of use and can withstand the rigors of the space environment.

It’s funny how all you kids see consumer electronics and think that’s where all the magic happens, that your cellphone is “electronics”… You don’t even know a fraction of electronics.

And the 2014 Get Off My Lawn award for best rambling tirade in the category of Those Kids Nowadays goes to…

Well played!

Finding it hard to believe you even have a job with the obvious attitude problem you possess. That and at least half of what you said is misleading or false, especially the statement about the student “cheating”.

The hoaxer actually fulfilled the requirements. They implemented the logic as intended, & just hacked the display driver (74LS47 BCD to 7 seg decoder) part of the circuit. If I were their tutor, I would’ve given them an ‘A’ for the project, & kudos for the ingenious hack.

MiG-29 fighters are a case in point, still flying on rod pentodes. They will still be flying when an EMP wipes every Arduino on the planet. Microwave oven: valves. Marshall stack: valves. I don’t knock modern tech, and I’m typing on a phone that also emulates 3 kinds of analogue keyboards.

It’s not a 74LS47 implementation; the shape of the 6 and 9 are wrong. It’s a 74LS247 implementation, and I noticed that right away.
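For anyone who hasn’t compared the two fonts: as far as I know, the 74LS247 draws the 6 and 9 with “tails” (top segment lit on the 6, bottom segment lit on the 9) while the 74LS47 leaves them off. A small Python sketch makes the difference visible; the tables and function names below are made up for illustration and cover only those two digits.

```python
# Lit segments (a-g) for the two digits whose shapes differ between the fonts.
LS47 = {6: "cdefg", 9: "abcfg"}     # no tails
LS247 = {6: "acdefg", 9: "abcdfg"}  # tails: 'a' added to the 6, 'd' to the 9


def render(segments):
    """Draw a seven-segment glyph as three lines of ASCII art."""
    def cell(seg, ch):
        # Show the stroke only if that segment is lit.
        return ch if seg in segments else " "

    return "\n".join([
        " " + cell("a", "_") + " ",
        cell("f", "|") + cell("g", "_") + cell("b", "|"),
        cell("e", "|") + cell("d", "_") + cell("c", "|"),
    ])


def extra_segments(digit):
    """Segments the tailed glyph lights that the tail-less one does not."""
    return set(LS247[digit]) - set(LS47[digit])
```

Printing `render(LS47[6])` next to `render(LS247[6])` shows the missing top bar, which is the kind of detail a sharp-eyed professor might spot.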

The big issue is a 74LS47 is replaceable with a known part, an MCU is an MCU+software and 99.94% of the time you can’t get the software for it.

Interestingly, 99.94% of statistics are made up on the spot.

I get that sometimes the class is ahead of the subject and the tutor should be flexible. But it also happens that the class is only ahead in technology. They insist on using e.g. an Arduino because they know it and they have never hardwired anything. They don’t see the point in doing a hardware exercise because software is simpler. It is said that in real life you would choose whatever suits you, but sometimes it’s not your decision, for many reasons.

Imagine that a class is supposed to write simple code on a microcontroller in C but they insist on MicroPython because that is what they would use in real life.

Of course in this case the prankster did more than expected. So I hope his tutor appreciated his joke.

Hell – necroposting award goes to me. Too many linked articles. Sorry!