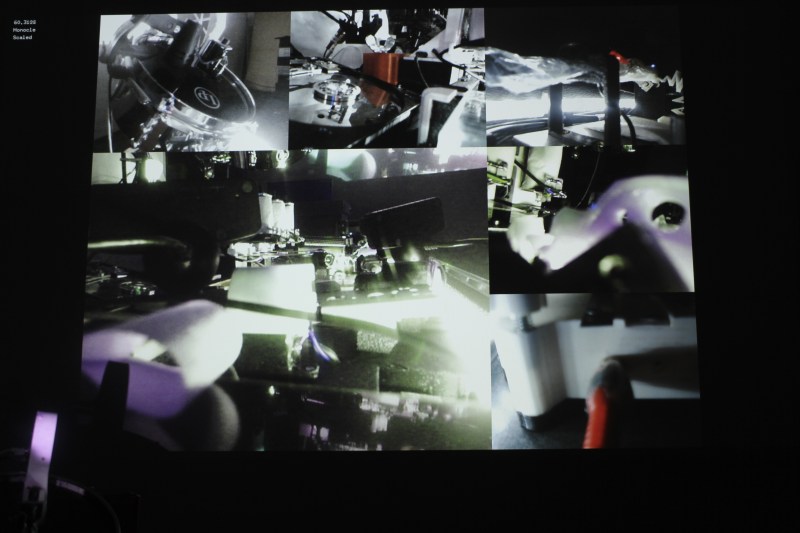

It’s pretty common to grab a USB webcam when you need something monitored. They’re quick and easy now: most are plug-and-play on almost every modern OS, and they’re cheap. But what happens when you need to monitor more than a few things? Often this means lots of cameras and additional expensive hardware to support the powerful software needed, but [Moritz Simon Geist] and his group’s Madcam software can now do the same thing inexpensively and simply.

Many approaches were considered before the group settled on using PCI to handle the video feeds. Obviously using just USB would cause a bottleneck, but they also found that Ethernet had a very high latency as well. They also tried mixing the video feeds from Raspberry Pis, without much success either. Their computer is a pretty standard AMD machine with 4 GB of RAM running Xubuntu, so as long as you have the PCI slots needed, there’s pretty much no limit to what you could do with this software.

At first we scoffed at the price tag of around $500 (including the computer that runs the software), but apparently the sky’s the limit for how much you could spend on a commercial system, so this is actually quite the reduction in cost. Odds are you have a desktop computer anyway, and once you get the software from their GitHub repository you’re pretty much on your way. So far the creators have tested the software with 10 cameras, but it could be expanded to handle more. It would be even cooler if you could somehow incorporate video feeds from radio sources!

Wow… that’s a lot of computing power for such a small job. I think lots of USB->FPGA->IEEE 1394 would have resulted in a more robust solution that doesn’t peg the CPU.

We also thought of that, but all in all, using a standard PC with standard software (openFrameworks) was the easiest route. We don’t know much about FPGAs (yet!)

This would be useful at a business that specializes in online webcams.

This seems like a really neat project with a lot of potential. After watching the video though, I think I have more questions than I had before!

This post’s title brought to mind Douglas Gordon’s work “Pretty much every film and video work from about 1992 until 2012.”

How is this different from the wildly successful open source project iSpy?

http://www.ispyconnect.com/

My experience with iSpy at my business is that it chokes on more than four or five cameras, and is crashy/unreliable in general. I ended up paying $400+ for Luxriot, which is a vast improvement. I run 8 cameras with four of them being 1080p/30 fps. The computer was a $300 build and still has plenty of oomph to be our music machine and a spare point of sale terminal. iSpy is fine for light use and two or three non-HD cameras but was problematic for me after that.

It’s odd that this stuff is so hard, though. You’d expect that by now this would be trivial and available at low cost or free as part of the OS.

I’ve been using Snowmix (http://sourceforge.net/projects/snowmix/?source=directory) for video mixing. It can be easily customized using its Tcl scripts. It accepts GStreamer feeds for both input and output. However, mixing, say, four Full HD H.264 streams is not trivial and adds considerable delay. I think dedicated video-mixer hardware is required. One could also use a high-end GPU for low-latency video mixing.

From the writeup I thought it was some specialized PCI card, which made me wonder what year this was, since PCI is so yesterday. But after checking the site I found it’s just USB expansion cards, and they advise using PCIe types.

So nothing special hardware-wise; they just found that you can’t do more than 2 cams per controller without getting slowdowns. Mind you, there are webcams these days that send compressed streams, like MP4 or the older MJPEG, and that means a lot less data needs to be transferred. The drawback is that webcams that send compressed video tend to be quite a bit more expensive, unless you find a supplier in China. It doesn’t have to be expensive anymore; it’s just that the brand names and their offerings are living in the past and their prices are outdated. In fact, development has stalled so much that I think they still don’t sell USB 3.0 webcams.

Yep, we used standard material here, for a good reason: cards and cams are about 180€ ($200), which is extremely cheap, especially for a system with such low latency (we didn’t measure it, but it should be well below 1 s). You can probably do it better / newer / faster with high-class material ... but then it’s several thousand bucks.

I’m wondering about this, as the linked website doesn’t appear to go into any detail:

“…they also found that Ethernet had a very high latency as well. They also tried mixing the video feeds from Raspberry Pis, without much success either.”

I don’t have a Pi, but I know use of H.264 encoding is common on them, given the GPU support. However, I have run into problems with attempting to stream low-latency H.264 on other platforms. A good explanation of why is here:

http://raspberrypi.stackexchange.com/questions/24158/best-way-to-stream-video-over-internet-with-rpi

To quote the problem with H.264 from that:

“There’s another frame-type we haven’t considered in the H.264/MPEG scenario: B-frames (“bi-directional predicted” frames). These can’t be decoded without knowledge of prior and subsequent frames. Obviously, in a streaming application this will incur latency as you have to wait for the next frame before you can decode the current one. However, we don’t have to worry about that because the Pi’s H.264 encoder never produces B-frames… But what about the client side? H.264/MPEG players don’t know that their source is never going to produce a B-frame. They also assume (not unreasonably) that the network is not reliable and that uninterrupted playback is the primary goal, rather than low latency. Their solution is simple, but effective: buffering. This solves uninterrupted playback and providing space for decoding B-frames based on subsequent frames all in one shot. Unfortunately it also introduces as much latency as there are frames in the buffer! So, your major issue is on the client side: you need to write/find/coerce client software into forgoing buffering to immediately decode and display incoming frames.”
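To put rough numbers on the buffering point in that quote: the added delay is simply the number of frames the player holds back divided by the frame rate. A minimal sketch; the buffer depths and the 30 fps rate below are illustrative assumptions, not measurements from any particular player, and the function name is made up.

```python
def buffer_latency_seconds(buffered_frames: int, fps: float) -> float:
    """Delay added by a player that holds `buffered_frames` frames before display."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return buffered_frames / fps


# Hypothetical buffer depths at an assumed 30 fps stream.
for frames in (0, 5, 15, 30):
    delay = buffer_latency_seconds(frames, 30)
    print(f"{frames:2d} frames buffered at 30 fps adds {delay:.2f} s")
```

So a player that buffers a full second of video adds a full second of delay no matter how fast the encoder and network are, which is why the fix has to happen on the client side.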

Running a less complex encoding protocol such as MJPEG on Pogoplugs (less powerful than a Pi and no GPU), and with client-side software designed to simply pull the JPG frames out of the MJPEG stream then display them as soon as they’re received (rather than pre-buffering and trying to maintain a fixed frame rate) I can wirelessly receive and mix multiple video streams with latency <0.2s (barring interference). Given a hard-wired Ethernet connection and GPU-based MJPEG encoding (does Pi have this?), I'm sure it could be done at higher resolution and with less latency.

So I'm wondering if maybe [Moritz] gave up on other methods like Ethernet/Pis too soon, or if he ran into other issues. Having all the cameras connected to a central computer via USB may be fine in some cases. But typically the more cameras you have, the more distance you want between them; and USB isn't so great for that.
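For anyone curious what "pull the JPG frames out of the MJPEG stream and display them as soon as they're received" can look like, here is a rough Python sketch, not the commenter's actual client. It assumes the stream is just JPEG images back to back and finds them by their start-of-image (FF D8) and end-of-image (FF D9) markers. The function name is made up for illustration, and the approach would be fooled by JPEGs that embed a thumbnail in their metadata; MJPEG-over-HTTP streams that carry multipart headers would be better parsed using those headers.

```python
from typing import List

SOI = b"\xff\xd8"  # JPEG start-of-image marker
EOI = b"\xff\xd9"  # JPEG end-of-image marker


def extract_frames(buffer: bytearray) -> List[bytes]:
    """Remove and return every complete JPEG found in `buffer`.

    Any trailing partial frame stays in `buffer` for the next call.
    """
    frames = []
    while True:
        start = buffer.find(SOI)
        if start < 0:
            # No frame start yet: drop the junk, but keep the last byte
            # in case it is the first half of a split SOI marker.
            del buffer[:max(0, len(buffer) - 1)]
            break
        end = buffer.find(EOI, start + 2)
        if end < 0:
            # Frame started but not finished: drop anything before it.
            del buffer[:start]
            break
        frames.append(bytes(buffer[start:end + 2]))
        del buffer[:end + 2]
    return frames


# Simulated network reads: one complete frame plus the start of the next.
buf = bytearray(b"junk" + SOI + b"abc" + EOI + SOI + b"de")
first = extract_frames(buf)
buf.extend(b"f" + EOI)  # the rest of the second frame arrives
second = extract_frames(buf)
print(len(first), len(second))
```

To keep latency down, a client would append each network read to the buffer, call `extract_frames`, and show only the last frame returned, discarding older ones rather than queueing them.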

Did a quick search and found that the RasPi does indeed support HW MJPEG in the firmware, although there seem to be some things to watch out for, I gather from browsing through this: https://github.com/raspberrypi/userland/issues/208

This is something I have often looked for, although on a much smaller scale, for a few lower-resolution video surveillance feeds. Say I have a Linux box and some video devices (USB webcams, TV card inputs, etc.), all of them accessible through a video device such as /dev/videoX. Is there some software that will group these feeds in a user-definable matrix accessible through a virtual video device /dev/videoY? And could it possibly also run on small SBCs such as the RasPi?

Way to not understand the project and Cock up the description Bryan, very impressive!

NO, they DIDN’T use PCI, and NO, USB is not a bottleneck.

Comment I posted on Hackernews to this story:

“some things that made me sad when reading this github:

> One USB Host can theoretically take up to 127 client, but we found out only 1-2 Cameras per host controller work without glitches and missing frames.

and you didn’t realize what the real reason is (not the limit of the 7-bit address field in the USB protocol)? No one bothered to do a simple calculation of 640x480x2x30 per camera? :( btw USB 2.0 in reality is able to sustain ~40MB/s, hence 2 cameras at 30Hz max per controller = ~37MB/s.

>PCI can process less data then PCIExpress, so it is best to build your system on PCIe cards

PCI is ~130MB/s, plenty for 6 cameras, again no numbers

Of course you could simply find cheap cameras with built-in MJPEG compression and avoid adding external USB controller cards. A great source for cheap camera modules is laptop service parts. For the last 10 years USB webcams have been standard in laptops. Example eBay auction:

http://www.ebay.com/itm/Macbook-iSight-Camera-replacement-fo…

iSight supports:

MJPEG 640×480 @ up to 60fps

MJPEG 720×480 @ up to 60fps

MJPEG 800×600 @ up to 30fps

MJPEG 1024×576 @ up to 30fps

etc …

and all of a sudden we have <3MB/s per $3 camera."
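The arithmetic in that comment is easy to check. The sketch below assumes 2 bytes per pixel of uncompressed video (which is what the 640x480x2x30 figure implies) and takes the ~40MB/s USB 2.0, ~130MB/s PCI and 3MB/s MJPEG numbers straight from the comment. The function and constant names are made up for illustration, and real-world protocol overhead is ignored.

```python
def raw_stream_mb_per_s(width: int, height: int, bytes_per_pixel: int, fps: int) -> float:
    """Uncompressed video bandwidth in MB/s (1 MB = 1,000,000 bytes)."""
    return width * height * bytes_per_pixel * fps / 1_000_000


# Figures quoted in the comment, not measured here.
USB2_PRACTICAL_MB_S = 40   # sustained USB 2.0 throughput per host controller
PCI_MB_S = 130             # classic 32-bit PCI bus
MJPEG_CAM_MB_S = 3         # upper bound quoted for a compressed stream

per_cam = raw_stream_mb_per_s(640, 480, 2, 30)
cams_per_usb2 = int(USB2_PRACTICAL_MB_S // per_cam)
cams_per_pci = int(PCI_MB_S // per_cam)
mjpeg_cams_per_usb2 = USB2_PRACTICAL_MB_S // MJPEG_CAM_MB_S

print(f"raw 640x480 @ 30 fps: {per_cam:.1f} MB/s per camera")
print(f"raw cameras per USB 2.0 controller: {cams_per_usb2}")
print(f"raw cameras per PCI bus: {cams_per_pci}")
print(f"3 MB/s MJPEG cameras per USB 2.0 controller: {mjpeg_cams_per_usb2}")
```

Two raw streams come to about 37MB/s, which lines up with the "1-2 cameras per host controller" observation quoted from the GitHub page, and compressed streams leave far more headroom on the same controller.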

They say they use one PCI USB card and a few PCIe USB cards, so yes, a PCI card was in the mix.

Plus the raw bandwidth doesn’t say anything about the overhead the USB chipset has and how they implemented the interface.

And you say USB is not the bottleneck, then do the calculation showing that it is? Seems a bit odd.

You do make some very good, on-point observations though, including the one I made earlier about MJPEG/MP4 cams.

And that’s why HaD is nice, it has people helping out with ideas in the comment section. Now if only people could be nicer while doing it. But I guess we have to paddle with the paddles given.