The Landsat series of Earth-observing satellites is one of the most successful space programs in history. Millions of images of the Earth have been captured by Landsat satellites, and those images have been put to use in fields as diverse as agriculture, forestry, cartography, and geology. This is only possible because of the science instruments on these satellites. Their cameras capture half a dozen or so spectral bands (red, green, blue, and a few bands of infrared) to tell farmers when to plant, give governments an idea of where to send resources, and provide scientists with the data they need.

There is a problem with satellite-based observation; you can’t take a picture of the same plot of land every day. Satellites are constrained by Newton, and if you want frequently updated, multispectral images of a plot of land, a UAV is the way to go.

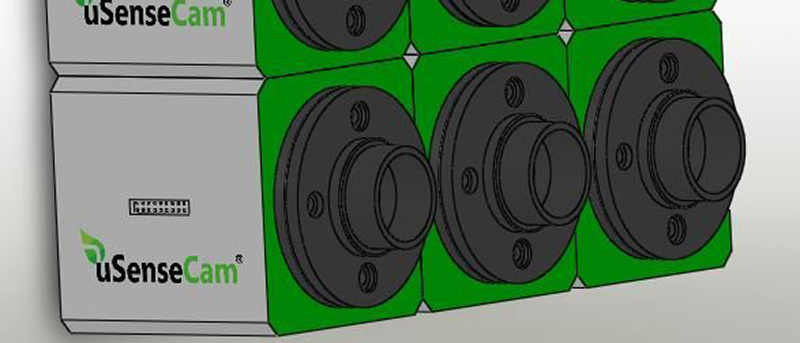

[SouthMade]’s entry for the Hackaday Prize, uSenseCam, does just that. When this open source multispectral camera array is strapped to a UAV, it will be able to take pictures of a plot of land at wavelengths from 400nm to 950nm. Since it’s on a UAV and not hundreds of miles above our heads, the spatial resolution is vastly improved. Where the best Landsat images have a resolution of 15m/pixel, a camera on a UAV can fly close enough to the ground that its resolution is limited mainly by altitude and optics.
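To put rough numbers on that, the usual pinhole-camera relation gives the ground sample distance (GSD), the ground footprint of one pixel, as altitude × pixel pitch / focal length. The sketch below assumes a typical phone-camera module with 1.12 µm pixels behind a 3.5 mm lens; those figures are illustrative assumptions, not specifications from the uSenseCam project.

```python
def ground_sample_distance(altitude_m: float, pixel_pitch_um: float,
                           focal_length_mm: float) -> float:
    """Ground footprint of one pixel, in metres, for a nadir-pointing camera.

    Pinhole model: GSD = altitude * pixel_pitch / focal_length.
    """
    pixel_pitch_m = pixel_pitch_um * 1e-6
    focal_length_m = focal_length_mm * 1e-3
    return altitude_m * pixel_pitch_m / focal_length_m


if __name__ == "__main__":
    # Hypothetical phone-camera module: 1.12 um pixels behind a 3.5 mm lens.
    for altitude_m in (50, 100, 120):
        gsd_cm = ground_sample_distance(altitude_m, 1.12, 3.5) * 100
        print(f"{altitude_m:>4} m altitude -> {gsd_cm:.1f} cm/pixel")
```

Under those assumptions a flight at 100 m gives about 3.2 cm/pixel, several hundred times finer than Landsat's 15 m.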

Like just about every project involving imaging, the [SouthMade] team is relying on off-the-shelf camera modules designed for cell phones. Right now they’re working on an enclosure that will allow multiple cameras to be ganged together and have custom filters installed.

While the project itself is just a few cameras in a custom enclosure, it does address a pressing issue. We already have UAVs and the equipment to autonomously monitor fields and forests, and we’re working on the legality of it, too. What we don’t have yet are the tools that would let these flying robots do the useful things we expect of them, and hopefully this project is a step in the right direction.

Neat! I’d heard about multispectral imaging but never really bothered to understand it before. Possibly dumb question follows...

For its intended usage, would it be practical to use a single imaging sensor with various filters attached to something like a rotating wheel? Since a UAV is unlikely to be flying very fast, how necessary is it to capture the various spectra simultaneously?

Not so dumb. There are (or were) imagers with filter wheels, at least for space applications. But in the case of satellites, any moving part adds complexity and reduces reliability.

However, it should certainly be easier to implement on a UAV than on a satellite. Still, you would either need to pan the camera assembly or compromise on image size and use only the overlapping parts of the images. In both cases there would be gaps between full spectral frames.

On the other hand, multiple sensors have their own advantages. For example, you can apply super-resolution in post-processing and build wider-band, higher-resolution monochrome images.
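The super-resolution idea can be illustrated with its simplest variant, naive shift-and-add: frames offset from each other by known sub-pixel amounts are dropped onto a finer grid and averaged. This is a toy sketch under strong assumptions (the shifts are already known from registration, every frame is a point-sampled copy of the same intensity image, and there is no lens blur or noise). It is not a method any commenter described, and real pipelines add registration and deblurring steps; with differently filtered cameras it also only makes sense for a combined monochrome result.

```python
from typing import List, Optional, Sequence, Tuple

Image = List[List[float]]


def shift_and_add(frames: Sequence[Image], shifts: Sequence[Tuple[int, int]],
                  factor: int) -> List[List[Optional[float]]]:
    """Naive shift-and-add super-resolution.

    Each low-resolution frame is treated as a point-sampled copy of the scene,
    offset by (dy, dx) pixels on a grid `factor` times finer. Samples are placed
    on that fine grid and averaged where they coincide; cells that no frame
    landed on are returned as None.
    """
    h, w = len(frames[0]), len(frames[0][0])
    big_h, big_w = h * factor, w * factor
    total = [[0.0] * big_w for _ in range(big_h)]
    count = [[0] * big_w for _ in range(big_h)]
    for frame, (dy, dx) in zip(frames, shifts):
        for y in range(h):
            for x in range(w):
                fy, fx = y * factor + dy, x * factor + dx
                if 0 <= fy < big_h and 0 <= fx < big_w:
                    total[fy][fx] += frame[y][x]
                    count[fy][fx] += 1
    return [[total[r][c] / count[r][c] if count[r][c] else None
             for c in range(big_w)] for r in range(big_h)]


if __name__ == "__main__":
    # Toy 4x4 "scene", sampled by four hypothetical cameras, each offset
    # by one fine-grid pixel from the others.
    scene = [[float(4 * r + c) for c in range(4)] for r in range(4)]
    offsets = [(0, 0), (0, 1), (1, 0), (1, 1)]
    frames = [[[scene[2 * y + dy][2 * x + dx] for x in range(2)] for y in range(2)]
              for dy, dx in offsets]
    print(shift_and_add(frames, offsets, factor=2) == scene)  # True
```

With four 2×2 frames whose offsets tile every position of the 2× grid, the full 4×4 scene is recovered; any misestimated shift puts samples in the wrong cells, which is why alignment matters so much.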

For a hobby project I’d say you have two options. The first is to simply hover and accept the slight mismatch in FOV between the different images. The other is to keep moving and take the images in sequence, with enough overlap that you don’t get any gaps in ANY of the spectral bands. (Normally a 50% overlap works well, so you would aim for about 92% overlap if you have 6 spectral images to take: each frame then advances only about 8.3% of its length, so every 6th image still overlaps 50% with the previous shot in that band.)
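The 92% figure follows from requiring the same band to come around again before the scene has moved by more than half a frame. A small sketch of that arithmetic, plus the shutter interval it implies; the 40 m along-track footprint and 5 m/s ground speed in the example are hypothetical values.

```python
def required_overlap(target_overlap: float, n_bands: int) -> float:
    """Frame-to-frame overlap needed when n_bands filters are shot in a repeating
    sequence, so that successive shots of the *same* band still overlap by
    target_overlap.

    Each frame advances (1 - overlap) of the frame length and a band repeats every
    n_bands frames, so n_bands * (1 - overlap) must equal 1 - target_overlap.
    """
    if not 0.0 <= target_overlap < 1.0 or n_bands < 1:
        raise ValueError("target_overlap must be in [0, 1) and n_bands >= 1")
    advance_per_frame = (1.0 - target_overlap) / n_bands
    return 1.0 - advance_per_frame


def trigger_interval_s(footprint_m: float, overlap: float, speed_m_s: float) -> float:
    """Seconds between exposures for a given along-track footprint and overlap."""
    return footprint_m * (1.0 - overlap) / speed_m_s


if __name__ == "__main__":
    overlap = required_overlap(0.5, 6)
    print(f"frame-to-frame overlap: {overlap:.1%}")  # 91.7%
    # Hypothetical: 40 m along-track footprint, 5 m/s ground speed.
    print(f"trigger every {trigger_interval_s(40.0, overlap, 5.0):.2f} s")
```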

Right. I was still picturing the filter-wheel imager moving at satellite ground velocity. At UAV speeds any gap should be negligible.

One of the original GOES weather satellites (maybe both, or ALL?) had a carousel with light bulbs on it for calibrating the IR imager. All the bulbs burned out, leaving that portion of the satellite useless. A “sister” craft ran out of station-keeping fuel (hydrazine?) and drifted into a sort of “figure-8” orbit, which some ground stations were able to follow for a while.

Now if HaD had an edit button, I would mention that this info has been sitting in the back of my brain for about 20 years, so it could be faulty.

I dabbled in similar work during my doctorate but ended up going a different route. I can say that if you can solve the problem at acquisition time rather than in post-processing, you save yourself a lot of headaches. Even with this setup, you’ll still have slight misalignment between the images, since the cameras sit next to each other. It’s only a slight bit of parallax, but it’s enough that super-resolution like [Sean] mentioned would be something to consider. I even developed a system that used a single imaging module with multiple apertures, each with its own filter in front of it, so a single board could capture six bands. The benefit of doing it this way is that you only have to buy and futz with one good imaging sensor, and you don’t have to worry about synchronizing acquisition across several modules.
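For a sense of scale, two parallel cameras whose optical axes are a baseline B apart see a point at distance Z shifted by f·B/(Z·p) pixels relative to each other, where f is the focal length and p the pixel pitch. The numbers below (3 cm between lenses, a 3.5 mm lens, 1.12 µm pixels, 100 m altitude, a 20 m tree) are assumptions for illustration. The shift at ground level can be removed with a fixed offset, but anything at a different height lands at a slightly different shift, and that residual is what still has to be registered away.

```python
def disparity_px(baseline_m: float, distance_m: float,
                 focal_length_mm: float, pixel_pitch_um: float) -> float:
    """Shift, in pixels, of a point at distance_m between two parallel cameras
    whose optical axes are baseline_m apart: d = f * B / (Z * p)."""
    f_m = focal_length_mm * 1e-3
    p_m = pixel_pitch_um * 1e-6
    return f_m * baseline_m / (distance_m * p_m)


if __name__ == "__main__":
    # Hypothetical: lenses 3 cm apart, 3.5 mm focal length, 1.12 um pixels.
    ground = disparity_px(0.03, 100.0, 3.5, 1.12)  # ground, 100 m below the UAV
    canopy = disparity_px(0.03, 80.0, 3.5, 1.12)   # top of a 20 m tree
    print(f"ground: {ground:.3f} px, canopy: {canopy:.3f} px, "
          f"residual after aligning on the ground: {canopy - ground:.3f} px")
```

Under these assumptions the ground shifts by just under one pixel and the canopy by about 1.17 pixels, leaving a sub-pixel residual of roughly 0.23 px after a fixed ground-level correction.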

The problem with the rotating wheel is vibration, and introducing any moving parts always makes for a headache. One student (http://imaging.utk.edu/people/wcho/wcho.htm) focused his entire dissertation on handling misalignment in multispectral image acquisition; it’s not a trivial problem. Plus, accurate alignment is vital for super-resolution to succeed. If you could synchronize the camera’s frame rate with the rotation of the wheel, that might solve some problems, but not all. He used an LCTF (Liquid Crystal Tunable Filter) for his experiments, which was very convenient: no moving parts and a selectable width for every band he chose (27 total), but it runs about $10,000.
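As a minimal illustration of the alignment problem, the sketch below estimates a whole-pixel translation between two band images by exhaustive search, keeping the offset with the lowest mean squared difference over the overlapping pixels. This is an assumed, deliberately simple approach: real multispectral registration usually needs sub-pixel accuracy and a similarity measure that tolerates different brightness in different bands (squared differences assume the bands look alike), which is part of why the problem is not trivial.

```python
import random
from typing import List, Tuple

Image = List[List[float]]


def best_integer_shift(ref: Image, moving: Image, max_shift: int) -> Tuple[int, int]:
    """Return the (dy, dx) minimising the mean squared difference between
    ref[y][x] and moving[y + dy][x + dx] over the pixels where both exist.

    Exhaustive search over all shifts with |dy|, |dx| <= max_shift.
    """
    h, w = len(ref), len(ref[0])
    best, best_err = (0, 0), float("inf")
    for dy in range(-max_shift, max_shift + 1):
        for dx in range(-max_shift, max_shift + 1):
            err, n = 0.0, 0
            # Keep both y and y + dy (and x and x + dx) inside the image.
            for y in range(max(0, -dy), min(h, h - dy)):
                for x in range(max(0, -dx), min(w, w - dx)):
                    diff = ref[y][x] - moving[y + dy][x + dx]
                    err += diff * diff
                    n += 1
            if n and err / n < best_err:
                best, best_err = (dy, dx), err / n
    return best


if __name__ == "__main__":
    rng = random.Random(0)
    scene = [[rng.random() for _ in range(24)] for _ in range(24)]
    # Two 16x16 crops of the same synthetic scene; `moving` is cropped
    # 2 rows higher and 1 column further right than `ref`.
    ref = [row[5:21] for row in scene[5:21]]
    moving = [row[6:22] for row in scene[3:19]]
    print(best_integer_shift(ref, moving, max_shift=3))  # (2, -1)
```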

The other problem no one has mentioned yet is IR. The main article says only up to 950nm, but there’s a lot missing. USDA projects use NIR, which goes from 800nm to 2500nm. I don’t know whether you get interesting information by subdividing that part of the spectrum, but I feel like the imager should be sensitive to more than just the bottom 9% of NIR.
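The “bottom 9%” figure checks out if NIR is taken as 800 to 2500nm, as the comment does: a 400 to 950nm range covers 150nm of that 1700nm span. A likely reason for the ceiling is that silicon sensors, like those in phone camera modules, lose sensitivity beyond roughly 1,100nm, so longer wavelengths would need a different detector technology. The small helper below just reproduces the arithmetic; its name and signature are hypothetical.

```python
def band_coverage(sensor_lo_nm: float, sensor_hi_nm: float,
                  band_lo_nm: float, band_hi_nm: float) -> float:
    """Fraction of the band [band_lo_nm, band_hi_nm] that falls inside the
    sensor's sensitive range [sensor_lo_nm, sensor_hi_nm]."""
    overlap = max(0.0, min(sensor_hi_nm, band_hi_nm) - max(sensor_lo_nm, band_lo_nm))
    return overlap / (band_hi_nm - band_lo_nm)


if __name__ == "__main__":
    # Camera range from the article vs. NIR as defined in the comment.
    print(f"{band_coverage(400, 950, 800, 2500):.1%} of 800-2500 nm")  # 8.8%
```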

The French national survey built its own aerial digital cameras more than 20 years ago based on the same idea, and after building a second version it now has real expertise on the subject.

In those “good old days” (1993), there were no speedy microcontrollers or memories... So besides the difficulty of aligning the cameras (best done in post-processing), there were other challenges: storage capacity (a typical photographic mission for topography produces hundreds of images, each weighing several megabytes) and write speed. Since the images must overlap, 4-channel images are produced faster than a disk drive can write them; the solution was to use a huge buffer and finish writing to disk while the plane turned between strips (flight lines). One should also note that an aircraft produces a lot of vibration, which a disk drive doesn’t appreciate :)
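The buffering trick can be sized with back-of-the-envelope arithmetic: while a strip (flight line) is being flown, data arrives faster than the disk absorbs it, and the backlog must be written out before the next strip begins. Every figure in the example below (strip length, speed, image size, disk speed, turn time) is a hypothetical value for illustration, not a specification of the 1993 camera, and the function names are likewise made up for this sketch.

```python
def strip_backlog_mb(strip_length_m: float, ground_speed_m_s: float,
                     frame_interval_s: float, channels: int,
                     mb_per_image: float, disk_mb_s: float) -> float:
    """Data (MB) still waiting in RAM at the end of one flight strip.

    While the strip is flown, each exposure produces channels * mb_per_image MB
    every frame_interval_s seconds; the disk writes disk_mb_s MB/s throughout.
    """
    strip_s = strip_length_m / ground_speed_m_s
    produce_mb_s = channels * mb_per_image / frame_interval_s
    return max(0.0, (produce_mb_s - disk_mb_s) * strip_s)


def flushes_during_turn(backlog_mb: float, disk_mb_s: float, turn_s: float) -> bool:
    """True if the backlog is fully written before the next strip starts."""
    return backlog_mb / disk_mb_s <= turn_s


if __name__ == "__main__":
    # Hypothetical: 6 km strip at 60 m/s, 4 channels x 5 MB every 2 s, 6 MB/s disk.
    backlog = strip_backlog_mb(6000, 60, 2, 4, 5, 6)
    print(f"buffer needed: {backlog:.0f} MB")
    print("fits in a 120 s turn:", flushes_during_turn(backlog, 6, 120))
```

With these made-up numbers the camera produces 10 MB/s against a 6 MB/s disk, leaving a 400 MB backlog after a 100 s strip; that takes about 67 s to write, so it clears within a 120 s turn.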

You can find the whole story (in French) over 8 pages; this link goes to the second page, which has some pictures of the now “RetroTech” beast: http://loemi.recherche.ign.fr/argenerique.php?AR=CAMNU&PAGE=2

Cool project; I’m really interested in affordable, lightweight multispectral imagers. Koen Hufken’s TetraPi project is doing something similar:

http://www.khufkens.com/2015/04/24/tetrapi-a-well-characterized-multispectral-camera/

[“There is a problem with satellite-based observation; you can’t take a picture of the same plot of land every day. “]

Yes you can. That’s one of the uses of sun-synchronous orbits.

UAVs are cheaper and more accurate? Also true.

The temporal resolution of Landsat is 14-16 days.

I am not talking about Landsat.

I was just pointing out that you can build satellites that do that (daily revisits), and in fact they are used. For the sake of correctness.

The benefit of Landsat is that the infrastructure is already up and running. What if you need a UAV to fly over your fields for several consecutive days, and the fields are on the other side of the country? Maybe strap those cameras to commercial airliners?