Last week, the Nvidia Jetson TX1 was released. This credit card-sized module is a ‘supercomputer’ advertised as having more processing power than the latest Intel Core i7s, while running at under 10 Watts. This is supposedly the device that will power the next generation of things, using technologies unheard of in the embedded world.

A modern-day smartphone could have been built 10 or 15 years ago. There’s no question the processing power was there with laptop CPUs, and the tiny mechanical hard drive in the original iPod was more than spacious enough to hold a library of Napster’d MP3s and all your phone contacts. The battery for this sesquidecadal smartphone, on the other hand, was impossible. The future depends on batteries and, consequently, on low-power computing. Is the Jetson TX1 the board that will deliver us into the future? I took a hands-on look to find out.

What is the TX1?

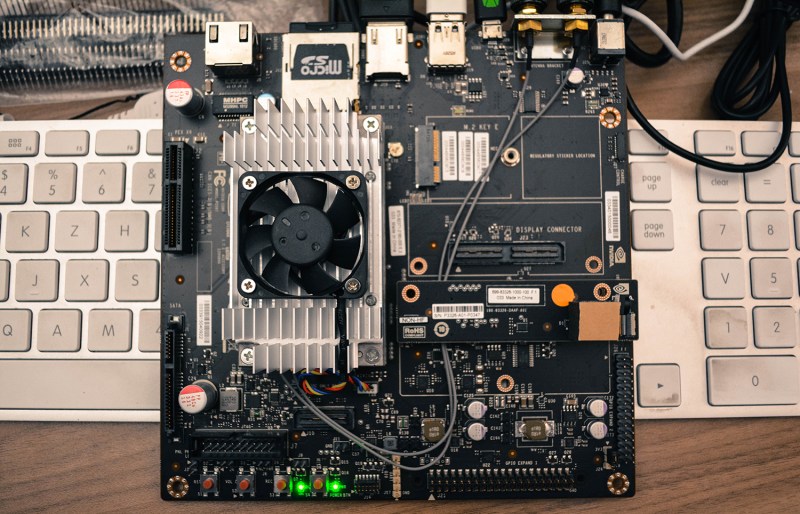

The Jetson TX1 is a tiny module – 50x87mm – encased in a heat sink that brings the volume to about the same size as a pack of cigarettes. Underneath a block of aluminum is an Nvidia Tegra X1, a system-on-chip that combines a 64-bit quad-core ARM Cortex-A57 CPU with a 256-core Maxwell GPU. The module is equipped with 4GB of LPDDR4-3200, 16GB of eMMC flash, 802.11ac WiFi, and Bluetooth.

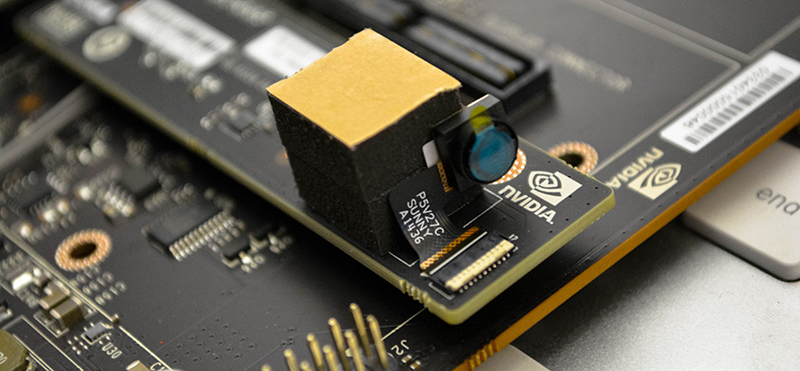

This module connects to the outside world through a 400-pin connector (from Samtec, a company quite liberal with product samples, by the way) that provides six CSI camera inputs for a half-dozen Raspberry Pi-style cameras, plus two DSI outputs, one eDP 1.4, one eDP 1.2, and HDMI 2.0 for displays. Storage is provided through either SD cards or SATA. Other ports include three USB 3.0, three USB 2.0, Gigabit Ethernet, a PCIe x1 and a PCIe x4 link, and a host of GPIOs, UARTs, and SPI and I2C busses.

At the moment, the only way to get at all these extra ports is the Jetson TX1 carrier board, which is effectively a Mini-ITX motherboard. Mount this carrier board in a case, modify a power supply, figure out how to wire up the front panel buttons, and you’ll have a respectable desktop computer.

This is not a desktop computer, though, and it’s not a replacement for a Raspberry Pi or BeagleBone. This is an engineering tool, a device built to handle the advanced robotics work of the future.

Benchmarks

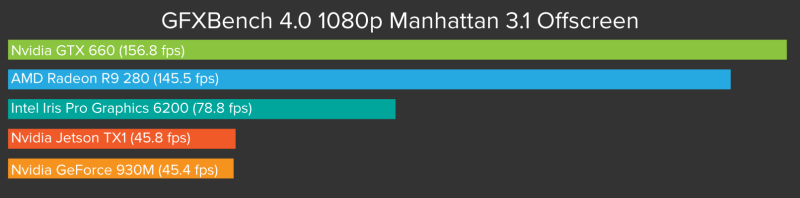

No tech review would be complete without benchmarks, and since this is an Nvidia board, that means a deep dive into the graphics performance.

The review unit Nvidia sent over came with an incredible amount of documentation, pointing me towards the Manhattan 3.1 and T-Rex tests in GFXBench 4.0 for measuring graphics performance.

In terms of graphics performance, the TX1 isn’t much different from a run-of-the-mill mobile chipset from a few years ago. This is to be expected: it’s unreasonable to expect Nvidia to put a Titan in a 10 Watt module when the Titan itself sucks up about 250 Watts.

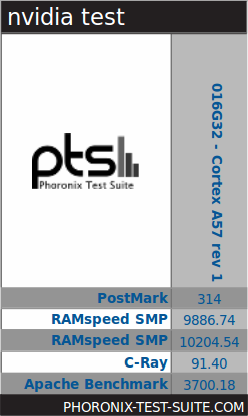

What about CPU performance? The ARM Cortex-A57 isn’t often seen in tiny credit card-sized dev boards, though a few shipping products use it. The TX1 isn’t a powerhouse by any means, but in testing it trounces the Raspberry Pi 2 Model B by a factor of about three.

Compared to desktop/x86 performance, the best benchmarks again put the Nvidia TX1 in the same territory as a middling desktop from a few years ago. Still, that desktop probably draws about 300 W total, where the TX1 sips a meager 10 W.
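To put the performance-per-watt point in numbers, here is a back-of-the-envelope sketch in Python. It assumes, purely for illustration, that the TX1 and the older desktop deliver about the same performance; the 300 W and 10 W figures are the rough estimates from the paragraph above, not measurements.

```python
def perf_per_watt(relative_performance: float, watts: float) -> float:
    """Performance units delivered per watt of power draw."""
    return relative_performance / watts


# Assumption for illustration: both machines are equally fast (relative performance 1.0).
desktop_watts = 300.0  # rough whole-system draw of the older desktop, per the text
tx1_watts = 10.0       # advertised TX1 power budget

desktop = perf_per_watt(1.0, desktop_watts)
tx1 = perf_per_watt(1.0, tx1_watts)
print(f"TX1 advantage in performance per watt: {tx1 / desktop:.0f}x")
```

The ratio scales directly with the relative performance you plug in: if the desktop were twice as fast, the TX1’s advantage would halve.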

This is not the board you want if you’re mining Bitcoins, and it’s not the board you should use if you need a powerful, portable device that can connect to anything. It’s for custom designs: the Nvidia TX1 is a module that’s meant to be integrated into products. It’s not a board for ‘makers’, and it’s not designed to be. It’s a board for engineers who need enough power in a reasonably small package that doesn’t drain batteries.

With a quad-core ARM Cortex-A57 running at almost 2 GHz, 4 GB of RAM, and a reasonably powerful GPU for the power budget, the Nvidia TX1 is far beyond the usual tiny Linux boards. It’s far beyond the Raspi, the newest Beagleboard, and gives the Intel NUC boards a run for their money.

In terms of absolute power, the TX1 is about as powerful as an entry-level laptop from three or four years ago.

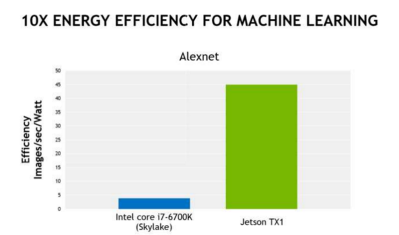

The Jetson TX1 is all about performance per Watt. That’s exceptional, new, and exciting; it’s something that simply hasn’t been done before. If you believe the reams of technical documents Nvidia granted me access to, it’s the first step to a world of truly smart embedded devices that have a grasp on computer vision, machine learning, and a bunch of other stuff that hasn’t really found its way into the embedded world yet.

And here lies the problem with the Jetson TX1; because a platform like this hasn’t been available before, the development stack, examples, and community of users simply aren’t there yet. The number of people contributing to the Nvidia embedded systems forum is tiny – our Hackaday articles get more comments than a thread on the Nvidia forums. As with any new platform, the missing piece is the community, which puts Nvidia in a chicken-and-egg situation.

This is a platform for engineers. Specifically, engineers who are building autonomous golf carts and cars, quadcopters that follow you around, and robots that could pass a Turing test for at least 30 seconds. It’s an incredible piece of hardware, but not one designed to be a computer that sits next to a TV. The TX1 is an engineering tool that’s meant to go into other devices.

Alternative Applications, Like GameCube

With that said, there are a few very interesting applications I could see the TX1 being used for. My car needs a new head unit, and building one with the TX1 would future-proof it for at least another 200,000 miles. For highly skilled amateur engineers, the TX1 module opens a lot of doors. Six camera inputs are something a lot of artists would probably like to experiment with, and two DSI outputs plus a capable GPU would allow for some very interesting user interfaces.

That said, the TX1 carrier board is not the breakout board for these applications. I’d like to see something like what SparkFun put together for the Intel Edison: dozens of breakout boards for every imaginable use case. The PCB files for the TX1 carrier board are available through the Nvidia developer’s portal (hope you like OrCAD), and Samtec, the supplier of the 400-pin connector used for the module, is exceedingly easy to work with. It’s not unreasonable for someone with a reflow toaster oven to create a breakout for the TX1 that’s far more convenient than a Mini-ITX motherboard.

Right now there aren’t many computers with ARM processors and this much horsepower. Impressively powerful ARM boards, such as the new BeagleBoard-X15 and those that follow the 96Boards specification, exist, but these do not have a modern GPU baked into the module.

Without someone out there doing the grunt work of making applications with mass appeal work with the TX1, it’s impossible to say how well this board performs at emulating a GameCube, or any other general purpose application. The hardware is probably there, but the reviewers for the TX1 have been given less than a week to StackOverflow their way through a compatible build for the most demanding applications this board wasn’t designed for.

It’s all about efficiency

Is the TX1 a ‘supercomputer on a module’? Yes and no. While it does perform reasonably well at machine learning tasks compared to the latest Core i7 CPUs, AlexNet-style machine learning workloads are best suited to GPUs in the first place. It’s like asking which flies better: a Cessna 172 or a Bugatti Veyron? The Cessna is by far the better flying machine, but if you’re looking for a ‘supercomputer’, you might want to look at a 747 or C-5 Galaxy.

On the other hand, there aren’t many boards or modules out there that combine a high-powered ARM CPU and a GPU on a 10 Watt power budget. That combination is what’s needed to build the machines, robots, and autonomous devices of the future. But even then, it’s still a niche product.

I can’t wait to see a community pop up around the TX1. With a few phone calls to Samtec, a few hours in KiCad, and a group buy for the module itself ($299 USD in 1000 unit quantities), this could be the start of something very, very interesting.

Ok, obvious question…

If it only takes 10W then why the massive heat sink?

Given that Brian’s photo caption mentions it only getting a couple of degrees above ambient when running benchmarks, I’d say that, as Brian stressed in the article, it’s for engineers, so it has been massively over-engineered.

I wouldn’t be that surprised if, for most applications, you could get away with a fairly modest-sized passive heat sink.

Oh, no one told you? Heat sinks aren’t for making things cooler, they’re for making them LOOK cooler. ;)

+1

To dissipate the 10 Watts of power.

Probably for when one decides to over-volt and overclock?

If they let it heat up too much, it’ll start drawing a lot more than 10W. My bet is the heat sink is there to keep it under that power envelope.

If I’m not mistaken, the fan seems to be speed-regulated, yet it spins quite fast in the picture. That’s strange at such a low temperature.

It needs a big heat sink so it can work in high ambient temperatures without overheating, such as inside your car’s dashboard when the car is sitting in the sun.

You got the right answer. Others are rubbish. I am hoping to add the tx1 to my helicopter drone on my website nocomputerbutphone.blogspot.com .
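The high-ambient argument in the dashboard comment follows from the simple steady-state relation T_j = T_ambient + P × R_θ, where R_θ is the junction-to-ambient thermal resistance of the whole heat sink path. The sketch below is a rough illustration only: the 95 °C junction limit and the 70 °C dashboard ambient are hypothetical values, not TX1 specifications.

```python
def junction_temp_c(ambient_c: float, power_w: float, r_theta_c_per_w: float) -> float:
    """Steady-state junction temperature: T_j = T_ambient + P * R_theta."""
    return ambient_c + power_w * r_theta_c_per_w


def max_r_theta_c_per_w(ambient_c: float, power_w: float, t_j_max_c: float) -> float:
    """Largest junction-to-ambient thermal resistance that keeps T_j at or below t_j_max_c."""
    return (t_j_max_c - ambient_c) / power_w


# Hypothetical values for illustration only, not TX1 specifications.
POWER_W = 10.0     # the advertised power budget
T_J_MAX_C = 95.0   # assumed maximum allowed junction temperature

for ambient_c in (25.0, 70.0):  # room temperature vs. a dashboard baking in the sun
    limit = max_r_theta_c_per_w(ambient_c, POWER_W, T_J_MAX_C)
    print(f"ambient {ambient_c:.0f} C -> heat sink path must be <= {limit:.1f} C/W")
```

With these assumed numbers, the allowed thermal resistance drops from 7.0 °C/W at room temperature to 2.5 °C/W in the hot car, which calls for a considerably larger or fan-assisted heat sink even though the power draw hasn’t changed.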

The TX1 IS THE HEATSINK. The first sentence read: “50x87mm – encased in a heat sink that brings the volume to about the same size as a pack of cigarettes. ”

That’s TINY. Not massive at all.

The breakout board is not needed; you could do away with it and build your own (the article mentioned open PCB plans).

Wrong. The TX1 is the semiconductor module the size of a postage stamp. The development kit (shown above) is sold attached to a heatsink the size of a pack of cigarettes.

Also, in the world of SoCs and integrated electronics, a pack of cigarettes IS huge….

It appears you have failed to research before posting.

http://www.nvidia.com/object/jetson-tx1-module.html

The TX1 is a board, with a bunch of chips on it, all covered by that aluminum block. On the back side of that board, is a 400 pin connector (mentioned above), which connects to the much larger development board.

The actual processor is a Tegra X1, which pairs Cortex-A57 CPU cores with a Maxwell GPU on the same chip.

The dev board is more or less a ‘dumb’ board; it’s all interconnects and power regulation.

As one of my Electronics instructors told us,

“Don’t answer the interview question ‘What is the heat sink used for?’ by saying, ‘It’s where you dump the heat when you’re finished using it.’”

And how many NDAs will be required to actually build anything with this?

Anyone can download the comprehensive docs & design files after automated registration:

https://developer.nvidia.com/embedded/downloads

I would be much more concerned about binary blobs and closed drivers than NDAs.

An NDA can always be examined to see what could screw you; a binary blob cannot.

Unfortunately they neglected to put anyone knowledgeable about it at their booth at the show they were announcing it at.

I dunno, “Hello Barbie” has the power of a Google server farm, and all she needs is a LiPo and WiFi. Unless what you’re doing is time-critical or radio-silent, offload the processing to something more powerful; it’s likely cheaper that way.

I can see advantages and disadvantages to each, and situations where each is warranted. Why stop pushing the envelope toward smaller, independent computing in lieu of glorified thin clients?

I wouldn’t want to offload critical navigation, sensor, or vision data, especially over anything wireless, since it would more often than not require two-way communication, adding latency and therefore uncertainty with every step.

Agreed. This looks like a practical module for ‘serious’ mobile robotics. You do not want your vehicle’s steering loop (to pick a random example) to include video encoding and a round trip via wireless network (with all of the random delays that implies). Not to mention that much network traffic means it won’t scale when you try to increase the number of units in a given area.
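The latency argument can be put in numbers with a toy calculation: the distance a vehicle covers while waiting for an offloaded result is simply speed × round-trip time. The speed and latencies below are hypothetical values chosen for illustration, not measurements of any particular network.

```python
def blind_distance_m(speed_m_per_s: float, round_trip_s: float) -> float:
    """Distance covered before an offloaded steering decision can come back."""
    return speed_m_per_s * round_trip_s


# Hypothetical: a vehicle at 20 m/s (72 km/h) and a few possible round-trip times
# for encode + wireless uplink + remote processing + downlink.
SPEED_M_PER_S = 20.0
for latency_ms in (20, 150, 500):
    distance = blind_distance_m(SPEED_M_PER_S, latency_ms / 1000.0)
    print(f"{latency_ms:>3} ms round trip -> {distance:.1f} m travelled before the answer arrives")
```

Because wireless delays are random, the worst-case round trip, not the average, sets how far the vehicle can drift; processing on board removes the network term entirely.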

I prefer to keep data local rather than floating it in the clouds.

Replace “clouds” with “other people’s servers”.

>And here lies the problem with the Jetson TX1; because a platform like this hasn’t been available before,

Apart from the Jetson TK1 last year…

Actually, the K1 is now in a few devices with no fan. At 300 GFLOPS SP, it’s a little slower than the X1’s 500 GFLOPS SP / 1 TFLOPS FP16. But a system with no fan and CUDA… I want it. I want it, I want it, I want it.

Not sure the product is worthy of all the gushing in the article. A5x cores have been on the horizon for years, with a handful of companies already shipping them. It’s not a giant leap over the TK1 either. It is not record-setting in terms of compute power per watt; chips like the Adapteva Epiphany still have it beat, albeit at a smaller scale. It’s simply the next evolution of nVidia’s embedded SoC offering.

How about a rack of these…

http://fossbytes.com/googles-project-vault-a-secure-computer-on-a-micro-sd-card/

An i7 wipes the floor with this, unless they mean an i7 constrained to 10 watts. In any case, it’s stupid to make the comparison when the Intel chip is not designed to sip power at all; it’s designed to be dense in computing power, and it’d smoke Nvidia’s BS. This is just Tegra all over again.

The point *is* to compare performance per watt. There are devices/applications with power budgets.

In terms of pure FLOPS, the i7 is not faster even flat out. A 4-core 4.4 GHz i7 looks to be about 140 GFLOPS SP maximum with AVX. The X1 does 500 GFLOPS SP or 1 TFLOPS half precision. The i7, however, is much more flexible.
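Peak figures like these come from a simple product: execution units × clock × FLOPs per unit per cycle. The sketch below reproduces the quoted numbers under stated assumptions. The roughly 1 GHz GPU clock and the FLOPs-per-cycle values are assumptions chosen to match the quoted figures, not official specifications. AVX cores that can issue a vector add and a vector multiply in the same cycle, or fused multiply-adds, have a correspondingly higher theoretical peak, so the i7 number depends heavily on which generation is meant.

```python
def peak_gflops(units: int, clock_ghz: float, flops_per_cycle_per_unit: int) -> float:
    """Theoretical peak GFLOPS = execution units x clock (GHz) x FLOPs per unit per cycle."""
    return units * clock_ghz * flops_per_cycle_per_unit


# Assumption: 8 single-precision FLOPs per core per cycle (one 256-bit AVX
# operation on 8 floats), which reproduces the ~140 GFLOPS figure in the comment.
i7 = peak_gflops(units=4, clock_ghz=4.4, flops_per_cycle_per_unit=8)

# Assumption: 256 CUDA cores at roughly 1 GHz, each completing one fused
# multiply-add (2 FLOPs) per cycle in FP32; packed FP16 doubles the rate.
x1_fp32 = peak_gflops(units=256, clock_ghz=1.0, flops_per_cycle_per_unit=2)
x1_fp16 = 2 * x1_fp32

print(f"i7 ~{i7:.0f} GFLOPS SP, X1 ~{x1_fp32:.0f} GFLOPS FP32, ~{x1_fp16:.0f} GFLOPS FP16")
```

These are theoretical ceilings; sustained throughput on real workloads is lower on both chips.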

What do you want to bet they are ignoring the GPU on the i7? I would not be surprised if an i7 running OpenCL on its IGP were just as efficient, and probably faster thanks to the faster CPU. And obviously any dedicated GPU will absolutely lay waste to this board.

The Xeon D family may be as close as we can get to a comparison, as it’s supposed to be an SoC. But at 45 W, it’s not a good comparison. Granted, this is a Xeon, and Xeons are not generally about sipping power.

There is a 15 W Skylake, but I don’t see power numbers for the chipset at first glance. The benchmarks nVidia has prepared make them look favorable; here’s to hoping for more independent tests.

I don’t think people will flock to this in droves; all the snags of working with an embedded system are still there in terms of how much software porting work will likely need to be done for the OS and apps. If nVidia really is publishing specs without an NDA that requires a legal team to deal with, that could be a good start. They’ll need to foster a community for tinkerers, hackers, and makers to really be able to use this, though. Still, an open set of specs could lead to a new ARM platform in addition to the server platform going through its iterative evolution right now.

If this is a platform for software and other hardware vendors to target, that would get me excited. That’s a big reason for Intel’s success with the PC.

Using a non-contact/infrared thermometer on a surface that is a very poor black body is generally going to give you duff temperature measurements. Moreover, reflective surfaces, e.g. the aluminium heat sink, can’t usually be measured this way because they reflect infrared from their surroundings.

https://en.wikipedia.org/wiki/Infrared_thermometer#Accuracy

Unless of course you took into account the emissivity of the reflective surface and adjusted the non-contact thermometer to suit. I would be keen to find out if the temperatures were really only a degree or two above ambient. 10 Watts of power is going somewhere after all.
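A rough way to adjust for emissivity after the fact is sketched below. It uses a gray-body, broadband Stefan-Boltzmann approximation and assumes the reflected radiation comes from surroundings at ambient temperature; real IR thermometers measure a limited wavelength band (commonly around 8 to 14 µm), so treat the result as an estimate. The emissivity of 0.1 for a shiny aluminium surface and the 27 °C / 25 °C readings are hypothetical values for illustration. Painting a patch matte black, as suggested in the thread, brings the true emissivity close to the instrument’s setting, so no correction is needed.

```python
CELSIUS_TO_KELVIN = 273.15


def corrected_temp_c(reading_c: float, eps_setting: float, eps_actual: float,
                     ambient_c: float) -> float:
    """Re-interpret an IR thermometer reading for a surface with a different emissivity.

    Gray-body, broadband approximation: the instrument sees
    eps * T_obj^4 + (1 - eps) * T_amb^4 (constant sigma dropped),
    with the reflected term coming from surroundings at ambient temperature.
    """
    t_read = reading_c + CELSIUS_TO_KELVIN
    t_amb = ambient_c + CELSIUS_TO_KELVIN
    # Radiance implied by the reading at the instrument's emissivity setting.
    radiance = eps_setting * t_read**4 + (1.0 - eps_setting) * t_amb**4
    # Solve the same balance for the object's temperature at its actual emissivity.
    t_obj4 = (radiance - (1.0 - eps_actual) * t_amb**4) / eps_actual
    return t_obj4 ** 0.25 - CELSIUS_TO_KELVIN


# Hypothetical: instrument set to 0.95 reads 27 C on shiny aluminium (eps ~0.1) in a 25 C room.
print(f"{corrected_temp_c(27.0, 0.95, 0.1, 25.0):.1f} C")
```

A reading only a couple of degrees above ambient on a low-emissivity surface can therefore hide a noticeably hotter heat sink.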

Long time reader, first post. Keep writing articles like these Brian!

Maybe a dumb question but could you hack this by just putting a patch of black paint on the heat sink, and measuring the temperature there?

Not dumb at all. Yeah, that’d get you pretty close, as long as you were sure to cover the entire measured area and the paint was in good thermal contact.

This hasn’t existed before…unless you adjust for Moore’s law. From that perspective, hardware companies have been peddling the equivalent of this device for decades. You’ve been able to get some sort of moderately powerful CPU module (compared to the current state of the art) with arcane documentation and uncomfortable connection schemes. They get used in various non-mass-market devices, but never change the world. They follow the curve, they don’t lead it.

I don’t understand your deep cynicism. Lower-performance, lower-power CPUs and SoCs have been changing the world. Yes, the curve keeps moving up, and the range of areas where these non-racehorse CPUs can be put to good use keeps moving up too.

All I’m saying is that, based on the currently available computing power, you’d expect a small module like this to exist. For every stage in computing progress, there have been these sorts of modules available. They get used for special applications but they don’t become the basis for some kind of revolutionary community. Brian Benchoff is wondering why the threads in Nvidia’s forums don’t see much action, and I’m explaining that it’s because this is a standard type of product you’ll see in the computing industry and it’s not FOR the type of people who hang out in forums.

+1 for the “not FOR the type of people who hang out in forums” hahahahahah

You don’t see the forums full because of the high level of education and experience needed to use something like this, and because it is not targeted at end users or even casual programmers. This device is used for things like computer vision, such as self-driving cars and robots that pick parts out of unsorted bulk containers. Compare that to the i7, which is targeted more at gamers. There simply are more gamers than robotics/vision engineers.

Also, lack of surprise/wonder is not the same as cynicism.

I think it shouldn’t be called “Jetson” until it is used in a 4 passenger (plus one large dog) flying car that can fold into the size and weight of a briefcase.

It has to EARN THE RIGHT to use that name!

B^)

Ten watts, hmm… 5V @ 2 amps?

The CPU is definitely running at a much lower voltage (and consequently more amps) :P
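The arithmetic behind these two comments is just I = P/V. Taking the 10 W figure at face value, and assuming for illustration a core supply of about 1 V (a hypothetical value, not a published TX1 rail voltage) with regulator losses ignored:

$$
I_{5\,\text{V}} = \frac{P}{V} = \frac{10\ \text{W}}{5\ \text{V}} = 2\ \text{A},
\qquad
I_{\text{core}} \le \frac{10\ \text{W}}{1\ \text{V}} = 10\ \text{A}
$$

The second figure is an upper bound, since not all 10 W flows through the CPU core rail.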

Sounds like it would be ideal for a CNC machine tool controller.

You don’t need anywhere near this level of processing power for a CNC machine tool controller. Simple microcontrollers can do that.

“In terms of absolute power, the TX1 is about as powerful as an entry-level laptop from three or four years ago.”

Hell no! I’m looking at the prices almost every day, and right now it’s about 400€ for a dual-core with Intel HD graphics and 4GB of RAM. It seems the price hasn’t dropped in four years. Still a shitty computer at a high price (meanwhile, you can get far better phones for less money). So, correction: the TX1 is about as powerful as an entry-level laptop FROM NOW.

I’ll just leave this here (previously posted on HAD I think) – NVidia going forward with FORTRAN as well.

https://www.llnl.gov/news/nnsa-national-labs-team-nvidia-develop-open-source-fortran-compiler-technology

Well now, what a load of weasel wording: “This credit card-sized module is a ‘supercomputer’ advertised as having more processing power than the latest Intel Core i7s” but “In terms of absolute power, the TX1 is about as powerful as an entry-level laptop from three or four years ago.” And all for a price of ~$600, something that has not been mentioned here. This is “far beyond the Raspi, the newest Beagleboard, and gives the Intel NUC boards a run for their money.” KTHXBI, I’ll buy my $50 BeagleBone ×10… or maybe a quad-core i5 with a 750 Ti? Looks to be the same spec and price there.

If this item were for sale for $150, I could see it being worth the price/performance, but an “entry-level laptop from three or four years ago” is priced at $150 these days, and it has an HDD, screen, DVD-DL drive, and every other item needed.

OpenCV is not fully ported to that specific GPU. It looks good on the surface, but checking the details reveals that most of the calls still use the CPU. Hopefully v2.0 of this board will have much better OpenCV integration (and be more affordable).

Am I the only one waiting for the crossover between Raspberry Pi and this pretty baby ?

Used T30 + GT750M before it was cool.

Nice keyboard! :D

You keep saying it’s not for makers, but you keep comparing it to the Raspberry Pi and Beagle?! Obviously it’s powerful, probably a little over-engineered as well as overpriced, but it’s still a board that could be used for any maker project.

What is the best way to interface this board with another processing board, like a BeagleBone, a Raspberry Pi, or even Infineon’s TriCore? I mean to share processing tasks, maybe…

e-con Systems announces the launch of the much awaited MIPI camera board for NVIDIA® Jetson Tegra X1 development kit – e-CAM130_CUTX1. e-CAM130_CUTX1 is a 13MP 4 lane MIPI CSI-2 camera board based on AR1820 CMOS Image sensor from ON Semiconductor® and an integrated Advanced Image Signal Processor (ISP) for NVIDIA® Jetson Tegra X1 development kit.

https://www.e-consystems.com/13mp-nvidia-jetson-tx1-camera-board.asp

And now the TX2 is out:

https://devblogs.nvidia.com/parallelforall/jetson-tx2-delivers-twice-intelligence-edge/

And before you compare to an i7, remember this is for embedded. We’re doing ML on quadcopters with ours.

$199 now 8/16/2017

I just bought the discounted TX1 dev kit SE…not sure what to do with it yet. Think this would have the power to do real time style transfer of video input?