When the Raspberry Pi was introduced, the world was given a very cheap, usable Linux computer. Cheap is good, and it enables one kind of project that was previously fairly expensive. This, of course, is cluster computing, and now we can imagine an Aronofsky-esque Beowulf cluster in our apartment.

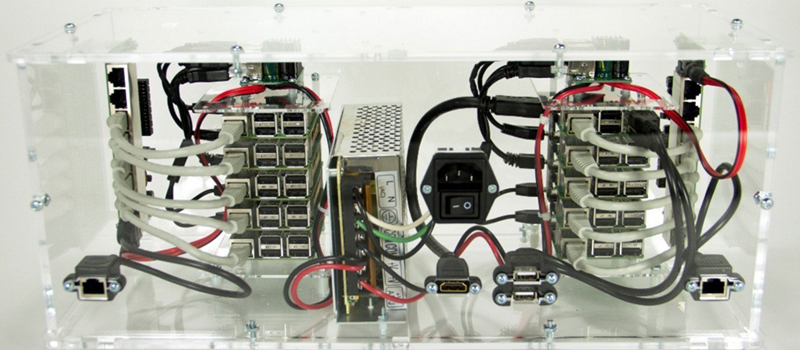

This Hackaday Prize entry is for a 100-board cluster of Raspberry Pis running Hadoop. Has something like this been done before? Most certainly. The trick is getting it right, being able to physically scale the cluster, and putting the right software on it.

The Raspberry Pi doesn’t have connectors in all the right places. The Ethernet and USB ports are on one side, the power input is on another, and god help you if you need a direct serial connection to a Pi in the middle of a stack. This is the physical problem of putting a cluster of Pis together. If you’re exceptionally clever and are using Pi Zeros, you’ll come up with something like this, but for normal Pis, you’ll need an enclosure, a beefy, efficient power supply, and a mess of network switches.

For the software, the team behind this box of Raspberries is turning to Hadoop. Yahoo recently built a Hadoop cluster with 32,000 nodes used for deep learning and other very computationally intensive tasks. This much smaller cluster won’t be used for very demanding work. Instead, it will be used for education and training, especially for those ever-important STEAM students. It’s big data in a small package, and a great project for the Hackaday Prize.

Each time I think about doing a cluster of RPis and making it serviceable, I just give up. With the ports all over the place on these boards, it’s just a tangled mess.

Some day there will be something that is rack- or cluster-friendly, but the Pi in its usual state is just too crazy to deal with like that.

Shame. It didn’t really have to be that way, but the designers either didn’t care or just went with ‘easy’ instead of more forward-thinking layouts.

The compute modules look like they would be better suited to this, but I only see kits with both the module and IO board. They are going for ~2-3 times the price of just the Pi itself.

Maybe someone could come up with a sort of carrier board that relocates the problem connectors. I could see it being done with something soldered to the Pi, something with pogo pins, or something that just attaches to the existing connectors. None of those seems like a great idea, though.

pcDuino has a decently usable port setup… Also, PoE injector modules exist, and as long as you aren’t doing more than 40 boards or so, you could always replace some ports in a few hours with a soldering iron.

STEM students, not STEAM students.

The “A” (for “art”) is increasingly being added by schools – presumably to include design, fine arts and other non-numeric tomfoolery to reach a larger audience (and higher funding).

So, everything but sports? I never liked STEM as a term for science and engineering, but this seems like the wrong direction. The idea was to get kids on a real track to successful employment and then, down the road, reap some diversity points. No one wants to pay artists; they just want Jira-ticket-to-unit-test converters.

Yes to arts, no to sports. We need to support all mental activities. From the arts you get better design; without design it is just mechanics. Sports can survive on its own. And we should stop treating sports as the path to fitness. That is backwards: it leaves out everyone with no interest in sports.

Assuming “arts” is “fine arts”, it’s leaving out all the humanities and the social sciences.

My training is in mathematics, and I program computers for a living, but we as a society need as many artists as we can get.

No! STEM > STEAM is NOT enough! It should be “STEAMLGBTQBLM” Students. If you DO NOT COMPLY: You will be Assimilated! Resistance is FUTILE!

Every time I see a Pi cluster with just a few dozen nodes, I think: what’s the use of this? Why not just run it on a common multi-CPU, many-core Intel machine with 64GB of RAM and a bare-metal hypervisor? Same memory, and probably the same combined CPU as a 64-node Pi cluster….

A lot more CPU power for a given price, actually. A quad-core Intel CPU is just so much more powerful than an old 32-bit ARM SoC. The only real point of a Raspberry Pi cluster is to practice using a real cluster. Otherwise the same software can run on a simulated cluster with better performance at the same price.

I’m not complaining about people making Pi clusters. It’s an interesting exercise in cluster design that feels more like real HPC than booting a bunch of virtual machines. Just don’t expect to do actual HPC with it…

Well actually it might be a little bit closer now that the version 3 Pi has a 64-bit quad-core. The gain is offset by the increased price, but it might not look too terrible in terms of performance/price/power for some workloads. Still not practical, but somewhat more interesting.

Even with a 64-bit core they are still using DDR2 — not even DDR3 — and the connection between all the cluster-nodes is… 100Mbps.

Which is why you look at things other than a Pi, DDR3, gigabit ethernet etc.

It’s a little closer just like me walking to my front door puts me a little closer to Australia than Europe.

18 RPis = 54 GFLOPS, slow DDR2 RAM, slow 100Mbps access, $600

1x GTX 1080 = 8800 GFLOPS, GDDR5X RAM, fast PCI-E access, $600

Some things need MIPS, not FLOPS….

Apples to oranges. Can you run a bunch of web servers on a GTX 1080? I didn’t think so…

Don’t compare the single-precision FLOPS of a GPU to the double-precision FLOPS of a cluster.

The two are suitable for completely different applications with completely different programming models. The purpose of a Raspberry Pi cluster is to learn how to write applications for a cluster.

A much better comparison would be to run the same code on a typical desktop computer. A $600 computer will have around 100 GFLOPS with vectorized code and up to 200 GFLOPS with FMA code, but a lot less for code that mere mortals write.

The double precision performance of a GTX 1080 is about 250 GFLOPS, by the way.
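The price/performance figures traded above can be put side by side. This is a rough sketch that uses only the numbers quoted in this thread. The $600 GPU figure covers the card alone, not a host PC, and peak FLOPS say nothing about the MIPS-bound or network-bound work mentioned above:

```python
# Peak GFLOPS and price in USD, exactly as quoted in the comments above.
# These are peak numbers; real code (and the Pi's 100 Mbps network) gets far less.
QUOTED = {
    "18x Raspberry Pi cluster": (54.0, 600.0),
    "GTX 1080, single precision": (8800.0, 600.0),
    "GTX 1080, double precision": (250.0, 600.0),
    "$600 desktop, vectorized": (100.0, 600.0),
    "$600 desktop, FMA": (200.0, 600.0),
}


def gflops_per_dollar(gflops: float, price_usd: float) -> float:
    """Peak throughput bought per dollar of hardware."""
    if price_usd <= 0:
        raise ValueError("price must be positive")
    return gflops / price_usd


if __name__ == "__main__":
    for name, (gflops, price) in QUOTED.items():
        print(f"{name:<28} {gflops_per_dollar(gflops, price):7.3f} GFLOPS/$")
```

Even against the GPU's double-precision figure, the quoted Pi cluster comes out roughly 4.6 times behind per dollar. The comparison only becomes fair once the same code runs on each machine.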

That’s kind of funny. Considering this is Hackaday, every time I see one of these clusters, I think, “Wow, look how easy it is to play with things like cluster computing!” You get to play with both the physical and the software side of things. I always learn a lot about power supplies, 3D printing, cooling, etc.

If you want the hardware, you can opt for FriendlyARM boards, which are nicely laid out, or Odroids, which are way more powerful. But then you’re talking about ROI, and the “this doesn’t make compute sense” argument has even more weight.

Take it for what it is.

If the reason for the hack is to make something that looks cool on your shelf or at your high school science fair, then sure, this is nice. The same goes if you want to learn how to cut network cables to the right lengths and crimp RJ45 contacts.

But for learning clustering techniques and “supercomputing”, it’s basically a big waste of parts, money and time.

Most of the projects I’ve seen have the “learn clustering” as the stated goal though…..

I still think there’s value in it. The first “multi-processing” computer I ever used was a BeBox, and it actually had 2 physical processors. Having 2 physical processors, vs anything else, such as multi-core, or hypervisor divided VMs or whatever, is actually different.

You’re not going to get the same speed profiles with these pi clusters, but it will make you think differently about things.

Also, I could easily take a cluster like this and have it as a high school build project. Kids will love it, and the school can afford it much more easily than a rack of something. And having the physical hardware is important so you can actually show all the components that go into it.

Why’s it a waste of time to learn clustering? If you ignore the speed, that is. Supercomputing is still, far as I know, a problem where you have lots of high-speed elements, connected by low-speed links. Relatively speaking for “speed”, of course.

I suppose real supers usually use other connection methods, switched hypercube backplanes and stuff, rather than hierarchical Ethernet, but aren’t they logically the same thing? Doesn’t this cluster look a lot like a super from maybe the 1980s, from a software POV at least? So programming would be the same. The optimisation problems would be the same.
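To the program, the two can look alike: point-to-point messages between numbered nodes. The path lengths differ, though. This is a small sketch that assumes a 64-node machine and compares a hypercube with a hypothetical two-level Ethernet tree of 16 nodes per edge switch. It is not this cluster's actual layout:

```python
from __future__ import annotations


def hypercube_neighbors(node: int, dimensions: int) -> list[int]:
    """In a d-dimensional hypercube, a node's neighbors differ in exactly one address bit."""
    return [node ^ (1 << k) for k in range(dimensions)]


def hypercube_hops(a: int, b: int) -> int:
    """Shortest path length = number of differing address bits (Hamming distance)."""
    return bin(a ^ b).count("1")


def two_level_ethernet_hops(a: int, b: int, nodes_per_switch: int) -> int:
    """Links crossed in a hypothetical switched tree: edge switches joined by one core switch."""
    if a == b:
        return 0
    if a // nodes_per_switch == b // nodes_per_switch:
        return 2  # node -> shared edge switch -> node
    return 4  # node -> edge switch -> core switch -> edge switch -> node


if __name__ == "__main__":
    # 64 nodes: a 6-dimensional hypercube vs. 4 edge switches of 16 nodes each.
    for a, b in [(0, 1), (0, 7), (0, 63)]:
        print(a, b, hypercube_hops(a, b), two_level_ethernet_hops(a, b, 16))
```

In the hypercube, distance grows with the number of differing address bits, up to log2(N) hops. In the switched tree, every pair is 2 or 4 links apart, but all cross-switch traffic funnels through the core switch.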

I love how supercomputing calls it “codes” rather than “code” btw. Just a cool little superiority artefact.

Of course in every practical way, it’s a complete waste of time, but doesn’t mean it can’t teach principles.

Booting a bunch of virtual machines just isn’t the same as working on a real cluster. To “learn clustering” without a cluster you’re going to have to make a bunch of virtual machines perform like a bunch of relatively slow individual computers connected with a similarly slow network. At some point it’s easier just to get a bunch of cheap ARM boards and have some fun fabricating a cluster.

If I were a student I would much rather work on making a handful of ARM boards approach the performance of a single desktop computer. Taking a desktop computer and making it artificially slower and more complicated is just boring. Maybe it works for some people, but I would rather deal with physical hardware.

Virginia Tech’s SeeMore sculpture (https://www.icat.vt.edu/funding/seemore) is a great example of this.

You have never run a virtual Raspberry Pi in a VM, have you? The above claims indicate that strongly. In fact, your suggestion that virtual hardware and actual hardware are not equivalent suggests that your knowledge of computer science is fundamentally deficient!

Why “Questionable” end-use clusters of RPi’s? Simple…

Experimentation. Proof of Concept – Important before spending MUCH more money on an array of more powerful machines. RPi clusters are an affordable “Stepping-Stone”, if you will.

And let’s not forget the RPi has a broad user base to draw support from, with many examples of clustered RPis (e.g., Bitcoin mining). Get it?

We need more STEAM students, that’s for sure.

Maybe they can fix the problems with Team Fortress 2.

That is about as original as a plate of scones, but not as useful. You know what big clusters have? The fastest possible interconnects. You know what the RPi can never have? Even average-speed interconnects, at least not without some hardware hacking to tie the GPIO lines into some sort of bus.

You could probably do some sort of 16-bit data, 8-bit address, plus a few extra lines for R/W and polling signals. Each Pi could request the bus and be told to dump as much data as it has, 16 bits at a time, until it finishes or is interrupted by the master module. Or perhaps there is already some parallel bus and protocol that is a direct fit for the number of GPIO lines the RPi has.
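The arbitration idea above can be modelled in software before touching any pins. This is a minimal sketch that assumes a round-robin master and a per-grant word limit standing in for the "interrupted by the master" case. It ignores all electrical timing, jitter and skew, and the function names are hypothetical:

```python
from __future__ import annotations

from collections import deque

DATA_BITS = 16  # data lines, as proposed above
ADDR_BITS = 8   # address lines, as proposed above


def to_words(payload: bytes) -> list[int]:
    """Split a payload into 16-bit bus words, big-endian, zero-padding an odd final byte."""
    if len(payload) % 2:
        payload += b"\x00"
    return [int.from_bytes(payload[i:i + 2], "big") for i in range(0, len(payload), 2)]


def run_bus(queues: dict[int, deque], max_words_per_grant: int) -> list[tuple[int, int]]:
    """Round-robin master: grant the bus to each node with pending data, in address
    order, let it dump up to max_words_per_grant words, then interrupt it and move on.
    Returns the (address, word) pair seen on the bus for every cycle."""
    trace: list[tuple[int, int]] = []
    while any(queues.values()):
        for addr in sorted(queues):
            assert 0 <= addr < 2 ** ADDR_BITS
            pending = queues[addr]
            for _ in range(min(max_words_per_grant, len(pending))):
                word = pending.popleft()
                assert 0 <= word < 2 ** DATA_BITS
                trace.append((addr, word))
    return trace


if __name__ == "__main__":
    nodes = {1: deque(to_words(b"abcd")), 2: deque(to_words(b"xyz"))}
    for addr, word in run_bus(nodes, max_words_per_grant=1):
        print(f"node {addr:3d}: 0x{word:04x}")
```

A larger `max_words_per_grant` means fewer bus handovers but longer waits for the other nodes. That is the same trade-off any shared bus has to make.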

Not really. If you take a look at the list of the top 500 fastest supercomputers, a bunch of them are still using 1 gigabit ethernet. It’s not optimal by any stretch of the imagination, but it works for some workloads. You can debate all day about whether or not those constitute a “true” supercomputer, but they are absolutely a form of cluster.

The truth is that a cluster computer does not always have an ideal interconnect. Programming a real-world cluster means dealing with real-world limitations. Nothing is optimal.

The point of a Pi cluster is not to get competitive performance compared to a cheap workstation or server. It’s to learn how to deal with networking a bunch of individual machines. The slow Ethernet ports on the Pi are actually a benefit in that respect since they make the networking issues nice and obvious.
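A first-order cost model shows why a slow link makes network effects "nice and obvious". This sketch assumes a simple latency-plus-bandwidth model with no protocol overhead and no overlap between compute and communication. The 1 s step, 10 MB message and 0.5 ms latency are made-up illustrative values:

```python
def transfer_seconds(size_bytes: int, bandwidth_bps: float, latency_s: float) -> float:
    """First-order model: fixed per-message latency plus serialization time."""
    return latency_s + size_bytes * 8 / bandwidth_bps


def communication_share(compute_s: float, size_bytes: int,
                        bandwidth_bps: float, latency_s: float) -> float:
    """Fraction of a compute-then-send step spent on the network (no overlap assumed)."""
    comm_s = transfer_seconds(size_bytes, bandwidth_bps, latency_s)
    return comm_s / (compute_s + comm_s)


if __name__ == "__main__":
    # Hypothetical step: 1 s of local work, then a 10 MB result shipped to a peer.
    for label, bps in [("100 Mbit/s (Pi)", 100e6), ("1 Gbit/s", 1e9)]:
        share = communication_share(1.0, 10_000_000, bps, latency_s=0.0005)
        print(f"{label:<16} network share of step: {share:.0%}")
```

With these inputs, the 100 Mbit/s link spends a large fraction of each step waiting on the network. At gigabit speed, the same step is dominated by computation.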

Also, forget about using GPIOs for networking. You would have to toggle the pins at several megahertz just to match the performance of 100 Mbit Ethernet. It may be possible to toggle the pins that fast, but good luck synchronizing a bunch of boards at multiple MHz, let alone having any CPU time left over at the end. You can kiss any semblance of performance goodbye.

The Pi 3 can toggle 16 GPIO lines fast enough to, coincidentally, transfer 1 gigabit per second. Do the maths; your theories are always more useful if they are based on facts. I did look up the maximum GPIO speed for C code on the RPi 3, so the above is a reasonable claim. The bus I describe could therefore load the entire cluster at once at that speed (initial rendering model or whatever), or transfer data between any two members or the master at that speed. Use less of that address space (8 bits) for machines and you can multicast data to and from subgroups too. All that with a simple linear topology. If it operates more like a bus than a network, you can have memory-mapped transfers of blocks rather than packets, as there are no routing or security requirements that would call for a network. Or, for a DB configuration, a query would go onto the bus and be loaded by each module. The master would then use one tick to place an address on the bus and another to read whether a given module has any hits. If it does, the time to transfer the results depends on the geometry of the data in the DB, but the rate is still 1 gigabit. That is very fast, much faster than the Pi can manage with a network.

Your comment about slow networking on the Pi being somehow beneficial is insane: hilarious, but still completely insane. The speed of a network has no bearing on the ease with which one can debug issues at the TCP/IP layer; in fact, it is totally irrelevant. How old are you, 13?

Would certainly be an interesting thing to figure out, I’d love to see you work this out in practice. I bet this is also something that hasn’t been considered before by anyone else! +1 for thinking outside-of-the-box.

For one, I’m sure you know transmitting is only half of the work, but yeah receiving is a piece of cake anyway right. Would be nice to see how the receiving end would be done though, considering the jitter factor on the line with bit banging in general, as well as skew between the different GPIO ports, let alone at that throughput. I guess the Pi 3 is really capable of bit transmission on the GPIO lines using a real-time schedule, or perhaps cranking out a gigabit using interrupts. When you say “transmit” I assume you mean error-free transmission. I guess you’re not wasting any line overhead on error checking either, wow.

Hey, if you pull this one off at 1 gigabit/s, or even 5% of that if that doesn’t work out, be sure to put it on GitHub for all of us out there. You’ll be featured on Hackaday too, and this time not in the passive-aggressive comments section!

You can have a hybrid serial/parallel scheme to reuse lines, they are not fixed like fully wired logic. This means the address lines can carry a check sum during a block transmission as all the units know who has the bus and that they must STFU until the master dictates a state change. All these methods are decades old, just the context is new. So it is not out of the box at all, it is entirely logical.
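Reusing the address lines for error checking during a block transfer could look something like this. The sketch assumes a simple 8-bit additive running checksum, which is our choice since the comment does not name one, and it ignores timing, skew and retransmission. An additive sum misses some errors, such as the two bytes of a word being swapped, so a CRC-8 would be the sturdier choice in practice:

```python
from __future__ import annotations


def _add_word(running: int, word: int) -> int:
    """Fold both bytes of a 16-bit word into an 8-bit additive checksum."""
    return (running + (word >> 8) + (word & 0xFF)) & 0xFF


def send_block(words: list[int]) -> list[tuple[int, int]]:
    """Per bus cycle: the data lines carry the word, and the 8 address lines carry the
    running checksum up to and including that word, as (address_lines, data_lines)."""
    cycles = []
    running = 0
    for word in words:
        running = _add_word(running, word)
        cycles.append((running, word))
    return cycles


def first_bad_cycle(cycles: list[tuple[int, int]]) -> int | None:
    """Receiver side: recompute the running checksum and report the first mismatch."""
    running = 0
    for i, (check, word) in enumerate(cycles):
        running = _add_word(running, word)
        if running != check:
            return i
    return None
```

Because the check value travels with every word, the receiver can tell not only that a block is corrupt but also where the corruption started.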

It is the only way you can build a Raspberry Pi cluster that is not a complete waste of time because the interconnects are so slow via the joke of an Ethernet port that it has.

I don’t share built designs, ideas yes, but I don’t work for free especially for people who have no respect for me.

That’s complete and utter bullshit, but I’ll go ahead and feed the troll since I feel like it. No way in hell you’re doing gigabit networking with GPIO.

First of all, you can’t run Linux while doing this. The scheduling jitter was on the order of 20 microseconds at best the last time I measured it, which means that you can’t reliably sample faster than ~50 kHz without losing most of your data.

Alright then, let us assume that you are writing a bare-metal program in assembly and don’t need to do any computing at the same time. With a tightly written assembly loop you can do 25 MHz. 25 × 16 = 400 Mbit/s, in one direction only (half duplex).

So even in an ideal world you can’t do better than 200 Mbit/s full duplex. Gigabit my ass…
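The back-of-the-envelope numbers in this exchange are easy to reproduce. This sketch uses only the figures quoted above: 20 µs jitter, a 25 MHz assembly loop and 16 lines. It assumes one bit per line per loop iteration and zero overhead, so the results are ceilings, not expected throughput:

```python
def max_reliable_sample_hz(jitter_s: float) -> float:
    """Rough ceiling: if wake-ups can be late by up to jitter_s, sampling faster
    than 1 / jitter_s is unreliable."""
    return 1.0 / jitter_s


def raw_mbit_per_s(transfers_per_s: float, data_lines: int) -> float:
    """Upper bound: one bit per data line per transfer, zero protocol overhead."""
    return transfers_per_s * data_lines / 1e6


if __name__ == "__main__":
    print(max_reliable_sample_hz(20e-6))  # Linux scheduling jitter quoted above
    print(raw_mbit_per_s(25e6, 16))       # all 16 lines, one direction
    print(raw_mbit_per_s(25e6, 8))        # 8 lines each way for full duplex
```

Applying `raw_mbit_per_s` to a faster toggle rate still gives only a raw ceiling. It says nothing about receiving, clock recovery, skew or error handling.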

But what about the real world? As cez pointed out above, the speed at which you can toggle IO is just one tiny consideration when bit-banging data. What are your plans for dealing with jitter, skew, error correction, and so on?

Finally, I never said anything about debugging TCP/IP. I’m talking about writing software that deals with the throughput and latency of a sub-optimal network, which you would realize if you had any clue how cluster computing actually works. It’s insane that you managed to come up with that interpretation.

I won’t even bother debunking the childish ad-hominem.

You are a loudmouthed idiot, that may be me “attacking the man” but in this case it is a relevant fact.

“Pi3 now can get up 65.8 Mhz pin-toggle with direct write”

https://plus.google.com/+HennerZeller/posts/X7hfPAJYFUB

See my other comment re “protocols” to cez, and I did not specify the OS either, but obviously one can do what you want with a FOSS kernel to ensure it passes 100% of control over to a low level DMA routine during the time that blocks are transferred. Remember it is now just a number crunching and transmitting module sitting on a closed bus so 90% of the OS is redundant.

Shame about the GPU not being 100% open, but if you are just using the standard cores half the drivers are just wasting space and CPU time.

It should be obvious that if you are hardware hacking a bus out of the GPIO lines, you are implementing a bare-metal approach. Your references to pointless or redundant OS-imposed features and characteristics are therefore at best naive, or at worst a straw man.

Yeah, I mean, if you set it up as a render farm or something… you get the scene, spend a couple hours producing one .bmp, then ship it over the network. You could use RS-232 and not notice a serious difference.
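Here are some numbers for the render-farm case. This sketch assumes a 1920×1080, 24-bit uncompressed BMP per frame, RS-232 at 115200 baud with 8N1 framing, and two hours of render time per frame. All of these are illustrative choices, not figures from the project:

```python
def bmp24_size_bytes(width: int, height: int) -> int:
    """Uncompressed 24-bit BMP: 54-byte header, rows padded to a multiple of 4 bytes."""
    row = width * 3
    padded_row = (row + 3) // 4 * 4
    return 54 + padded_row * height


def serial_seconds(size_bytes: int, baud: int, line_bits_per_byte: int = 10) -> float:
    """8N1 framing: start bit + 8 data bits + stop bit = 10 line bits per byte."""
    return size_bytes * line_bits_per_byte / baud


if __name__ == "__main__":
    frame = bmp24_size_bytes(1920, 1080)
    send = serial_seconds(frame, 115_200)
    render = 2 * 3600  # "a couple hours" per frame
    print(f"{frame} bytes, {send / 60:.1f} min to send, "
          f"{send / (render + send):.1%} of each frame's total time")
```

Under those assumptions, the serial link adds roughly nine minutes to a two-hour frame, about 7 percent of the total. When compute dominates, the interconnect barely matters.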

This is relevant to my interests. I’ve been struggling to think of how best to mount several Orange Pi Plus 2Es in a neat stack along with a bunch of HDDs. The best I could come up with was rectangular acrylic sheets, one for each board, with holes in the corners where spacers pass through the sheet to connect to the layer underneath. Power runs through the spacers, with ring terminals (the washer-like things you can attach a wire to) carrying it from the spacers to the GPIO pins of each board. Everything else is just a mess of networking cables, USB, SATA, and plugs for the HDDs.

I feel we need to discuss cheap vs inexpensive.

Cheap is a product that costs little because it uses underperforming, poorly made parts. This particular definition is important: “5. Of poor quality; inferior: a cheap toy.”

Inexpensive is something that costs little because it uses established low-cost parts and is well made. For what it is, the Raspberry Pi is inexpensive, not cheap.

Depends on the context. I know certain of us here aren’t too good with that. “Cheap” can be good, bad, or neither.

For about the normal price of an RPi 3, I can pick up a 1U Xeon server; sometimes they go for as little as the ~$30 that the 3 is on sale for.

I can snag several for a comparable price and throw on, say, DragonFly BSD, which has native clustering at the kernel level. If I wanted to experiment with a cluster and do more with it, this would be the better option. Xeons and software for standard PC architectures serve more easily as experience for larger-scale clusters using the same technology, as opposed to trying to shift from an ARM cluster to x86_64. If it’s just to mess with, then ARM is fine (the Xeon will utterly crush it, but at an added power cost). If someone wants to do more with it, especially on a more professional level, it really helps to be able to say, “I have already administered a smaller-scale version of the cluster I’m interviewing to work on; the processors were a bit older, but functionally I have a few years of experience with this computer.”

With some of the industrial work I’ve done, it’s nice if someone has had programming experience, and nicer if it’s something embedded like PIC or embedded ARM. That still requires a lot more training to convert them to standard ladder logic, though, compared to the person who cut their teeth on Mitsubishi (for cost reasons), whom I can just hand the AB manuals, and they are good to go because they already know the system.

Aronofsky.. Pi… raspberry pi…

Great reference. Lol

And it does look somewhat like the computer prop from said movie.

Well, since we see this project so many times, maybe someone should whip up a Pi-core computer, one that is useful on its own but easily configured into a cluster. Hey, the RPi is open source and China can make stuff cheap; it might be a money maker for someone. Oh, and as for the headline, could somebody please invent a better plural for Pi?

One could have several recording video, images, or sound at distant locations and then they all join together to process the data. Would that be a good use?

A Gaggle of Pi. A Herd of Pi maybe? Baker’s Dozen!!!

A Pack of Pi works for me. :)

One bakes pies on a rack, or a tray. A tray of RPies would still have the problem of bandwidth, something video processing needs a lot of.

Is bandwidth really an issue if one doesn’t care how long it takes to run?

Hypothetically I could have a baker’s dozen of Pi, each one is owned by a separate person…

Everyone records video, images, or sound and they join together at night to process the data.

Presses start. Goes to bed. Wakes up, and everything is done: the 3D map of a hiking trail or the localization of an audio source has completed.

I agree that clustering Pi(s) is far from being a supercomputer, but there are possible advantages to having independent processing units.

If a single supercomputer fails you could lose everything that was accomplished, but if one ‘processor’ (a single Pi) fails the rest could continue undeterred.

They don’t have to be a cluster 100% of the time. I think that is the advantage here.
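Independent nodes only help if the software notices a dead one and hands its work to someone else. This is a minimal sketch of that idea. It assumes failures are already known, whereas a real scheduler would detect them with timeouts or heartbeats, and it uses hypothetical worker names:

```python
from __future__ import annotations

from collections import deque


def run_jobs(tasks: list[str], workers: list[str], failed: set[str]) -> dict[str, str]:
    """Hand tasks to workers round-robin. A task handed to a failed worker goes back
    on the queue, so the surviving workers still finish the whole job."""
    if all(w in failed for w in workers):
        raise RuntimeError("no surviving workers")
    queue = deque(tasks)
    done: dict[str, str] = {}
    turn = 0
    while queue:
        task = queue.popleft()
        worker = workers[turn % len(workers)]
        turn += 1
        if worker in failed:
            queue.append(task)  # the node died: put the task back for someone else
            continue
        done[task] = worker
    return done
```

For example, `run_jobs(["tile1", "tile2", "tile3"], ["pi1", "pi2", "pi3"], failed={"pi2"})` still assigns all three tiles, just to pi1 and pi3.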

“Is bandwidth really an issue if one doesn’t care how long it takes to run?”

You have a choice: you can either have an infinite number of monkeys and get the result instantly, or one monkey and take an infinite amount of time, or some combination of the above. Either way, the problem stays the same, as space and time are really just one thing: space-time.

You can use a Linux computer to contribute to a job-sharing system; sure, that is an old trick. What really matters, though, is the flops per erg, the energy consumed by any given computing configuration to process a given set of instructions. I.e., how efficient will it be when most of the SoC is not required for the task but is still powered up?

The largest cluster in the world is (this week LOL) in China and was made from custom chips that sacrificed some speed in order to gain more efficiency, because on the scale of clusters the total energy required to build, set-up and operate the system divided by the useful work performed determines if the effort is justified.
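The "total energy divided by useful work" criterion can be written down directly. Here it is inverted, as work per unit energy, to match "flops per erg". This sketch contains no real measurements; all inputs are placeholders that would have to be measured:

```python
ERGS_PER_JOULE = 1e7  # 1 J = 10^7 erg


def flops_per_joule(total_flops: float, build_energy_j: float,
                    power_w: float, runtime_s: float) -> float:
    """Useful work divided by all energy spent: embodied (build/set-up) plus operating."""
    total_energy_j = build_energy_j + power_w * runtime_s
    return total_flops / total_energy_j


def flops_per_erg(total_flops: float, build_energy_j: float,
                  power_w: float, runtime_s: float) -> float:
    """Same metric expressed per erg instead of per joule."""
    return flops_per_joule(total_flops, build_energy_j, power_w, runtime_s) / ERGS_PER_JOULE
```

The build energy is spread over the whole runtime. As a result, a small, short-lived cluster is penalized more by its embodied energy than a large one that runs for years.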

I can’t afford what China has and I doubt you could as well, so that point is null.

You failed to acknowledge my hypothetical in any fruitful manner.

Let’s imagine that it is winter; the horribly inefficient baker’s dozen of Pi(s) would be heating the house during unproductive idle time.

Relatively cheap computers doing work and heating my house more efficiently than my oil furnace.

Maybe I could keep a cup of coffee warm or preheat the bed of a 3D printer. :) Or maybe rise bread…

‘HACKADAY PRIZE ENTRY: A LITERAL OVEN OF RASPBERRY PIS’

“You failed to acknowledge my hypothetical in any fruitful manner.”

Hmmp, now that was just plain stupid of you, because in fact I pointed out that people do do stuff like that, and have done so with Linux boxes FOR A VERY LONG TIME! WTF do you think that SETI thing is? However, I also pointed out what really matters: the efficiency of doing it. Look at how people waste electricity turning it into money in the form of Bitcoin; unless you are doing that very efficiently, you could just be wasting money.

Your house heating example is also naive as I can gain far more with the same amount of energy by driving a heat pump because the COP is 3.5 or better.

http://hyperphysics.phy-astr.gsu.edu/hbase/thermo/heatpump.html
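The heat-pump argument is a one-line calculation. This sketch takes the COP of 3.5 from the comment above and a made-up 35 kWh heating demand. It treats every watt the computers draw as useful heat and ignores generation and transmission losses:

```python
def electricity_for_heat_kwh(heat_kwh: float, cop: float) -> float:
    """Electrical energy needed to deliver heat_kwh of heat at a given COP.
    Resistive heating (which is what a computer amounts to) has COP = 1."""
    if cop <= 0:
        raise ValueError("COP must be positive")
    return heat_kwh / cop


if __name__ == "__main__":
    heat = 35.0  # hypothetical daily heating demand in kWh
    print("Pi cluster / resistive:", electricity_for_heat_kwh(heat, 1.0), "kWh")
    print("heat pump, COP 3.5:    ", electricity_for_heat_kwh(heat, 3.5), "kWh")
```

Delivering the same heat with the cluster takes 3.5 times the electricity, so whatever computing it does has to be worth the difference.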

So you’d need to give up the assumption that any energy consumed is a gain elsewhere, and that the overall system is therefore the most efficient possible, at 100%. It also neglects the huge losses in getting the electricity to your home. Compare that with generating power on site, when your cluster is so big that economies of scale let you build a power plant for it, or situate it very near a hydroelectric source, as has in fact been done with data centres.

The key principle is scale: you simply can’t do some things well at a small scale. It is like the effect of the ratio between surface area and volume, and how it affects the thermodynamics of animals. Cold-climate animals have their morphology pulled toward a spherical shape, to maximise volume and minimise surface area, and toward larger sizes for the same reason. In areas where heat loss is required, you see the opposite effect, and you can even see this in hominids (that would be you and your distant relatives). Consider the difference between a Neanderthal and an Ethiopian: essentially the same, given they can breed with each other (and did!), but significantly different in their anatomical adaptation to their environment.

Except the Pi isn’t fully open source or open hardware. Broadcom won’t share the VCU bootloader or detailed chip docs without NDA, and they don’t sell the chips on the open market. The Raspberry Pi Foundation has released limited schematics of the sections, but not full schematics or board files.

Of course there are many competitors, but just like with Arduino, superior hardware alone can’t compete with community or a well fueled hype machine.