Your computer uses ones and zeros to represent data. There’s no real reason for the basic unit of information in a computer to be only a one or zero, though. It’s a historical choice that is common because of convention, like driving on one side of the road or having right-hand threads on bolts and screws. In fact, computers can be more efficient if they’re built using different number systems. Base 3, or ternary, computing is more efficient at computation and actually makes the design of the computer easier.

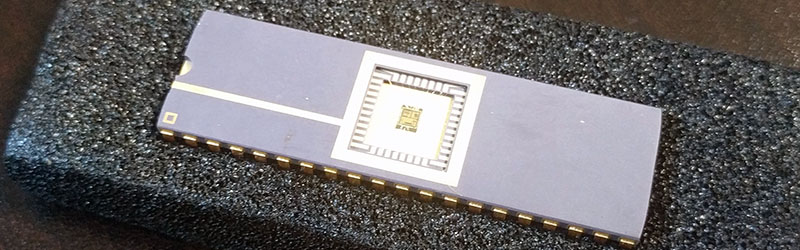

For the 2016 Hackaday Superconference, Jessie Tank gave a talk on what she’s been working on for the past few years. It’s a ternary computer, built with ones, zeros, and negative ones. This balanced ternary system is, ‘Perhaps the prettiest number system of all,’ writes Donald Knuth, and now this number system has made it into silicon as a real microprocessor.
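To make "ones, zeros, and negative ones" concrete, here is a minimal sketch of converting ordinary integers to and from balanced ternary. The function names and the `+`/`0`/`-` digit notation are illustrative choices, not anything taken from Jessie's design.

```python
def to_balanced_ternary(n: int) -> str:
    """Return n as a string of '+', '0', '-' digits, most significant first."""
    if n == 0:
        return "0"
    digits = []
    while n != 0:
        r = n % 3  # Python's % yields 0, 1 or 2 for a positive modulus
        if r == 2:
            # A remainder of 2 is written as digit -1 plus a carry into the next place.
            digits.append("-")
            n = n // 3 + 1
        else:
            digits.append("+" if r == 1 else "0")
            n = n // 3
    return "".join(reversed(digits))


def from_balanced_ternary(s: str) -> int:
    """Inverse of to_balanced_ternary."""
    value = 0
    for ch in s:
        value = value * 3 + {"+": 1, "0": 0, "-": -1}[ch]
    return value


print(to_balanced_ternary(5))   # +--  (9 - 3 - 1)
print(to_balanced_ternary(-5))  # -++  (negation just flips every digit)
```

Negative numbers need no separate sign bit, which is a large part of the system's appeal.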

After sixty or seventy years of computing with only ones and zeros, why would anyone want to move from bits to trits? Radix economy, or the number of digits required to express a number in a particular base multiplied by that base (a rough proxy for hardware cost), plays a big part. The most efficient number system by this measure isn’t binary or ternary – it’s base e, or about 2.718. Barring the invention of an irrational number of transistors, base three is the most efficient integer base for storing numbers in memory.
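As a rough illustration of radix economy, the sketch below multiplies the base by the number of digits needed to write one sample value. The helper names and the sample value 999,999 are assumptions chosen for the example. For any single number the ranking can shift slightly, and base 3 only wins on average.

```python
def digits_needed(n: int, base: int) -> int:
    """Number of base-`base` digits needed to write the non-negative integer n."""
    count = 1
    while n >= base:
        n //= base
        count += 1
    return count


def radix_economy(n: int, base: int) -> int:
    """Base times digit count: a crude stand-in for hardware cost."""
    return base * digits_needed(n, base)


for base in (2, 3, 4, 10):
    print(base, digits_needed(999_999, base), radix_economy(999_999, base))
# base 2: 20 digits, cost 40
# base 3: 13 digits, cost 39
# base 4: 10 digits, cost 40
# base 10: 6 digits, cost 60
```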

Given that ternary computing is so efficient, why hasn’t it ever been done before? Well, it has. The SETUN was a ternary computer built by a Soviet university in the late 1950s. Like Jessie’s computer, it used a balanced ternary design using vacuum tubes. The SETUN is the most modern ternary computer that has ever made it to production, until now.

For the last few years Jessie has been working on a ternary computer based on ICs and integrated circuits, making it much smaller than its vacuum tube ancestor. Basically, the design for this ternary logic relies on split rails – a negative voltage, a positive voltage, and ground. The logic is still just NANDs and NORs (ternary logic does provide more than two universal logic gates, but that’s just needlessly complicated), and ternary muxes, adders, and XORs are built just like their binary counterparts.

This isn’t the first time we’ve heard about Jessie’s ternary computer. It was an entry for the Hackaday Prize two years ago, and she made it down to our 10th-anniversary conference to speak on this weird computer architecture. Over the last two years, Jessie found a team and funding to turn these sketches on engineering notebook paper into circuits on real silicon. Turning this chip into a real computer – think something along the lines of a microcomputer trainer from the 1970s – will really only require a few switches, LEDs, and a nice enclosure.

Where will the ternary computer go in the future? According to Jessie, the Internet of Things. This elicited a few groans in the audience during her talk, but it does make sense: that’s a growing market where efficiency matters, and we’re more than happy to see something questioning the foundations of computer architecture make it to market.

Computers use 0 and 1 because of how diodes and transistors work. Before unsubscribing / unfollowing hackaday please specify if “There’s no real reason for be the basic unit of information in a computer to be only a one or zero, though. ” is a quote and not an author statement.

The interesting point of using a ternary system in the SETUN was to take advantage of the three states allowed by a memory core: magnetized clockwise, counter-clockwise, and not magnetized. In my opinion, at a pure electrical level the binary form is the most efficient.

We’re throwing away 1/3 additional er… state width?

To put it another way, given a collection of standard IC’s and comparable controllers to ARM with the same as comparable binary controllers and IC’s. How much additional circuit board space is required beyond the negative power rail to a comparable binary board?

8 states would require only 2 pins on a ternary while it takes 3 on a binary. Seems to me, it would save on board space allowing us to create much better toys to play with.
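The pin-count claim is easy to check with a small sketch. `lines_needed` is a hypothetical helper that counts how many signal lines, each carrying a given number of levels, are needed to distinguish a given number of states.

```python
def lines_needed(states: int, levels: int) -> int:
    """Smallest number of lines with `levels` levels each that can tell `states` states apart."""
    lines = 0
    capacity = 1
    while capacity < states:
        capacity *= levels
        lines += 1
    return lines


print(lines_needed(8, 3), lines_needed(8, 2))      # 2 ternary lines vs 3 binary lines
print(lines_needed(256, 3), lines_needed(256, 2))  # 6 ternary lines vs 8 binary lines
```

The saving is real but modest: roughly 37% fewer lines for large state counts, since each trit carries about 1.58 bits.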

I don’t think binary systems should go away, but I don’t think it should be the only player.

Damn… bad edit on “controllers and ICs.” Should just read IC’s. blah blah blah. You get the idea.

Yes, it is possible for a magnetic core to have many states, and it might seem obvious to use CW, CCW, and “none”, but in practice it’s very hard to get to “none”. Due to the hysteresis of the materials used for magnetic core memory, it would rather flip from one direction to the other than just find a nice comfortable in-between state. It’s like trying to make a toggle switch stop in the half-way position – you can do it, but not easily.

Weren’t the memory cores in the Setun weaved in a different way?

Was thinking this exact thought just half hour ago. What follows are disconnected ramblings, discard before/during/after reading.

If I remember correctly, storing bits directly is almost never efficient, and what’s stored instead is the quadrature of the signal, i.e., the phase change between adjacent bits at the lowest possible Nyquist rate (i.e., sampling). Literally, the signal is converted into an analog representation that is more-or-less safe to store (or transmit – fiber optic cables, satellites, WiFi modems, same difference).

So, in my opinion, binary is kept as binary only inside the digital realm, inside the computer, and nowhere else. Analog signals are notoriously tough to handle reliably in the analog world (noise, signal dropout, etc.), and there are hundreds of genius inventions for keeping the original signal intact that made it possible.

Point being, at the hardware level it is already handled as non-binary. IMHO, why it takes so long to re-invent some analog computing at that level is kind of puzzle for me – things like instant parallel computations with Op Amps are known to be done using zero code – though not at Gigahertz speeds, sadly, but who knows, maybe it is time Analog Computing makes a spectacular comeback outside the digital realm.

(case in point, so-called car “cruise control” is nothing but lowly OpAmp-handled comparison between present speed and set speed, it was implemented using nothing but analog transistors in the 1960s cars; in itself, cruise control was military technology, it was invented for steadying the bombing targets, tube-based and all. There are plenty other examples, Decca Navigator, position triangulation using three signal beacons transmitting on three separate frequencies – aha, now this makes even more sense, three-phase signal, tertiary basics : – ]).

I’ve been thinking about this over and over until I googled whether someone was making a ternary computer. If I’m right, even at 32 bits across and half the clock speed, a ternary computer is 5×10^15 times as powerful as a modern binary computer. It increases dramatically from there, obviously. 3^64 is insanely powerful.

We use thyristors. That’s a 3 state device. We’ve had them for a very long time. We really sold ourselves short with binary. Simpler?

I’m not sure that’s necessarily true. I mean, it’s got to be somewhat simpler, but not like achieving the same power with binary. That’s not even possible. So in that regard, ternary is a requirement for computing advancement at some point.

Turing’s computer had 6 states, if I’m not mistaken. (It’s been a long time for me, folks). That poses some issues today. But in ternary, if your laptop was 5×10^15 times more powerful, even at half the clock speed, your laptop would outcompute all the supercomputers in the world, COMBINED.

Maybe my math is off. Check it. It’s easy math on a calculator.
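Taking up the invitation to check, the sketch below counts how many distinct values a 32-digit word can hold in each base. This measures only representable states, which is an assumption about what "powerful" means here; it says nothing about how fast either machine computes.

```python
import math

binary_states = 2 ** 32
ternary_states = 3 ** 32

print(ternary_states)                  # 1853020188851841, about 1.85e15
print(ternary_states / binary_states)  # about 431,440 times as many states as 32 bits
print(32 * math.log2(3))               # about 50.7: 32 trits carry as much as ~51 bits
```

So a 32-trit word holds roughly what a 51-bit binary word does. The absolute count is on the order of 10^15, but the advantage over a 32-bit word is a factor of about 4×10^5, and a 64-bit binary word already exceeds it.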

The issue of ternary computing is less a matter of efficiency than it is about power. As I said, even at a 32 bit width and half a modern clock speed we’d have 5×10^15 times as much power as binary. This is a rebirth in computing. It’s not some incremental carrot on a stick. Remember 2x CDROM, then 4x, then 8x, then 24, then…. they were milking us for money back then. A ternary computer is like having a sentient computing platform to start with, if you’ll forgive the hyperbole. It can easily outcompute the fastest binary computers, solving the world’s toughest problems as a screensaver or in the background. It’s not a matter of IF we SHOULD. We must, if we are to grow at the rates we were growing in the 90s. We’ve become much smaller and more efficient but in computing power, we haven’t seen a REAL breakthrough since we went from 32 bits to 64. If we need problems to solve that would require ternary computing, then start with biology and gene science, cold fusion simulation, the data sent to and from vessels sent into deep space (that is efficiency). Ask any scientist what the world could do with computers that are on the order of 10^15 times as powerful in their laptop. If it’s there, people will use it.

One of the most intractable truths in many domains is that good enough is the enemy of better, usually expressed as the cost/benefit ratio. While there are some features of using ternary in computing that are advantageous, the real question (as always) is whether it is worth it.

For many general purpose applications, generally not. But as is typical, there are always niche cases where certain things don’t weigh as heavily. Most consumer products are a far cry from being “perfect” if there even is such a thing but this comes into play far more often than you would think. Even for such mundane things as roofing materials. Pay a “little” now for a cheap roof or pay a lot now for a better roof but have it hold up for several decades or more. Or the use of extremely specialized and niche mechanical devices when the need for them is there and cost is less of a concern. Surgery, space flight, F1 racing, etc.

“a ternary computer based on ICs and integrated circuits”

Will it be put to use in ATMs and automatic teller machines?

Nice

Yes but it might be put in ATM machines as well.

Those things you put your PIN Numbers into ?

I’m a little concerned about the performance you can get from a ternary computer. How many MIPS per second do you suppose they can do?

“There’s no real reason for be the basic unit of information in a computer to be only a one or zero, though.”

What!? There are very good, very real reasons why binary is used. Saturating transistors can be used, which reduces power. Having only two voltages to work with reduces power rails. And having only one threshold to worry about makes the noise margins better for a given voltage swing, and allows for simpler and faster circuits. Those advantages outweigh the benefits of a higher radix encoding.

Now, if what Jessie is doing is using pairs of binary circuits to encode three states (akin to how some hardware uses base-16 encoding of floating point numbers), I’m unconvinced how that is more efficient than encoding four states with two binary circuits.

Don’t forget that someone would need at least two transistors per trite-bit (tit?) and maybe a way to stop accidental follow through (both on at same time) and then the address bus decode being a high tit decode and a low tit decode presuming a zero tit meaning unused (off).

So how would you determine if the tit is high, low or extra low (damn that’s low)? Via voltage references and/or opto-iso feedback?

Binary can be just two transistors and a current limit resistor (oldskool discrete transistor logic)

(tits weren’t meant as a pun :D)

wikipedia says it’s a trit https://en.wikipedia.org/wiki/Ternary_numeral_system#Trit

There is even a qutrit https://en.wikipedia.org/wiki/Qutrit

I would’ve gone for “tit” strictly because it fits in the same visual space as a bit. But I guess since a trit is bigger than a bit, that makes sense.

Or Tet?

Didn’t you or the guy above read the article here? She uses +V, GND, and -V for the three states, so the device needs a positive and negative rail. Nothing’s stored as binary, otherwise it’d be a binary computer with a load of pointless intermediate convertors and unconvertors.

Storing two sets of binary is still storing two sets of binary. All you’ve done is hide that implementation detail from the user. But we’re not the users, we’re here to discuss the actual implementation detail. Moreover, it’s the exact reason why there is a very good reason we use binary – it’s the natural way our current electrical components work. For a microprocessor to be ternary you’d need to use fundamental components that offer that property natively; not through various workarounds such as what they discussed in this video to “simulate” such property. Turns out it’s not exactly a trivial task. So really, we can say they’ve developed a binary electrical circuit that simulates a ternary circuit.

Memristors are those fundamental components that offer ternary natively: http://edition.cnn.com/2015/02/26/tech/mci-eth-memristor/index.html

Quote:

“She uses +V, GND, and -V”

That was the basis of my thinking:

+V is high (or very high)

GND is unused state (or low/high relative to what you want)

-V is low (or very low if you want)

now:

0 3 6

0 0 0 = 0

+ 0 0 = 1

– 0 0 = 2

0 + 0 = 3

+ + 0 = 4

– + 0 = 5

and so on.
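The table above can be reproduced with a short sketch. It assumes the reading that the least significant trit is written first and that the symbols 0, + and – stand for digit values 0, 1 and 2; the function name is hypothetical, and ASCII `-` stands in for the minus sign. Note that this is ordinary (unbalanced) ternary mapped onto three voltage levels, not balanced ternary, where – would weigh −1.

```python
SYMBOLS = "0+-"  # digit values 0, 1, 2 in the order the table uses them


def encode_lsd_first(n: int, width: int = 3) -> str:
    """Write n as `width` ternary symbols, least significant digit first."""
    out = []
    for _ in range(width):
        out.append(SYMBOLS[n % 3])
        n //= 3
    return " ".join(out)


for n in range(6):
    print(encode_lsd_first(n), "=", n)
# 0 0 0 = 0
# + 0 0 = 1
# - 0 0 = 2
# 0 + 0 = 3
# + + 0 = 4
# - + 0 = 5
```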

My comment is how would you use such a thing as an address line, as in how does the address translate the + and – voltages without lots of transistors.

Also in theory, yes it is efficient…. Only if you look at the table above.

Now that we are in the habit of Jordan Maxwell-ifying facts here:

One could look at the 2.718 value and say that 2.X means two states are efficient and 0.718 means it is about 72% efficient with the remainder being the glue logic to make it work. However that is using the same logic as a guy (Jordan Maxwell) who takes an ancient name, Krishna, and claims it came from Christ (Jesus Christ), which would only have worked if English was older than say Latin, Arabic or Sanskrit. That among his other New-agism Hipster agenda pushing blag of a technique.

Next someone here will say “ternary computers…. because aliens did it”

Bludi hipsta logik!!!!!

Well, using a high-Z state instead of the negative rail would make more sense, I guess???

Hey Jim, can I DM you a few questions about ternary vs. binary systems? I’m a writer trying to make a story work so it will be (largely) theoretical and as brief as you like.

cheers,

KDA

I can count to 512 on just two hands…. And…. probably get arrested for public disorder sticking two middle fingers up in public when counting in base two on my hands.

The problem will be having to learn a whole numbering system. Binary seems easy to count.

Ternary, well I can’t be asked to traipse the internet on how to count past 2 in base 3 when I’ll never have use for it as far as I can see to my future.

Sorry to hear that you’re missing a finger. With all ten of my fingers, I can count to 1023 in binary :D

Cheers for the error correction, Just realized that this morning. LOL

[quote]I can count to 512 on just two hands…. And…. probably get arrested for public disorder sticking two middle fingers up in public when counting in base two on my hands.[/quote]

This one is very good! Could be a handy excuse in front of a judge, “i wasn’t insulting him, i was counting in binary!” :-) Not sure if it will be accepted though…

But ur fingers have more than one segment. So u can for example bend them to count in ternary. This will allow u to count up to 59 049 with just 2 hands … if u now would use the hands of a second person it would not only be 57.67 times more but 191 751 times more… with just 2 times the resources.

The point with the resources is trash…

Why not differentiate between +0 and -0 uhuhu

To simplify the answer, because zero has no direction.

of course negative zero is a thing but afaik mainly in IEEE 754 floating point maths (already well abstracted above/away from processor physics), not in logic levels or voltages.

-0 makes the most sense when 0 was arrived at from rounding–knowing which side of zero you came from can be useful in those situations.

It also makes sense in limit math, but those are only going to be approximated in a digital system anyhow.

There are only three kinds of people…

Only 10 kinds of people in my book. Those who use binary and those who don’t.

Only 10 kinds of people in my book also:

1. Those who use binary,

2. those who use ternary

10. those who don’t use anything.

I lol’d.

There’s only 10 kinds of people in my book:

1. Those who use binary,

2. Those who use ternary,

3. Those who use quaternary,

….

9. Those who use decimal,

…

f. Those who use hexadecimal,

…

…

…

First? Yeah, let’s forget history. Don’t check even Wikipedia, because clickbait.

https://en.wikipedia.org/wiki/Setun

https://en.wikipedia.org/wiki/Ternac

You guys (editors here) can be such idiots sometimes. Shame on you.

You… You realize I linked to Setun in the article, right? It’s almost as if you’re only reading the headline, and complaining the headline is inaccurate.

NOW HIRING: BETTER TROLLS. Please send your application in the form of a brick thrown through my front window.

Any particular type of brick? There’s quite a wide variety of types, shapes and materials to choose from :)

Hint: Surprise him with your creativity.. ;-)

any of these should do the trick…

http://storage.lib.uchicago.edu/ucpa/series2/derivatives_series2/apf2-00502r.jpg

I didn’t know that the Setun used a microprocessor! I could have sworn that it used tubes.

And the Ternac doesn’t count — it’s merely a simulator written in Fortran, rather than an actual computer.

there are 10 types of people in this world, those who understand ternary, those who don’t and other just heard about it.

That made no more sense than my failed attempt.

It seems that we wrote the comment at the same time, LOL!!

Okay,I’ll get in trouble for this, but …

There’s also those that don’t care ;-)

Nice.

I was saving ‘Don’t care’ people for Quaternary. You ruined my joke, LOL!!!!!!

Aren’t quantum computers potentially base whatever-you-want?

So are regular computers if you design them that way.

Qubits are still inherently base 2. Qutrits (https://en.wikipedia.org/wiki/Qutrit) are a thing, and I suppose you could extend the idea to base n in principle.

Me thinks there is encrypted data in the sound stream at the end of video.

The best reason to use something other than binary is so that we could reduce the number of traces needed to convey instruction sets and memory locations. Say for example that you had a new kind of diode that would not just give high or low from a voltage, but could distinguish between many, many different voltage levels. That means you could theoretically create a computer where the instruction and address locations could be transmitted in a single channel in a single clock cycle.

But as someone above mentioned, you get voltage drift and resistance can lower the signal voltage etc, so it would be really hard to control, but it could be really really small since you would need fewer channels.

We are doing something similar with fiber lines. Usually it’s just red light on or off, but there is some experimentation using RGB and breaking it apart on the other end. It would essentially be the same thing.

Another correlation is radar detectors used to just use a single point bounce, to measure distance twice and determine speed. Now they send a hamming pattern and then when they receive it, they can actually get much more precision by looking at the pattern when the signal is returned.

Anyway, I was asking about this in my undergrad and got in trouble for “distracting the class” … very annoyed. :)

Some signaling protocols use more than two voltage levels – your ground return quality matters more and your signal eyes are smaller, but it’s a valid strategy for trading off clock speed, number of signals and bit rate.

Some versions of Gigabit Ethernet (excluding optical, obviously) use multiple voltages to encode more than one bit per channel per clock – https://en.wikipedia.org/wiki/Gigabit_Ethernet

So does MLC flash.

https://en.wikipedia.org/wiki/Multi-level_cell

Ah! There are 10 types of people: those who understand binary, those who don’t and those who don’t care!

There are 10 type of people: those who understand binary, those who don’t and Nikolay Brusentsov.

It’s funny that this applies to any base (10 in base X is equal to X).

So besides the first use (related to base 2), when the joke came from people being used to reading 10 only as ten in base ten and it was something new, I don’t find it funny anymore.

My favorite is “There are 10 types of people: those who can extrapolate from incomplete data.”

Except for better educated IT and math guys, most people don’t even know about other bases. If their school system does teach them about it, converting between two or more systems is somewhat hard to them. So in my book joke with 10 people is always funny.

Just wanted to point out that we already use “more than binary” in lots of practical applications right now. Just not in processors.

Some modern DRAM cells can represent as many as 6 different states. That’s six states, not six bits. The DRAM controller handles the translation from binary to whatever n-ary the RAM uses. Some of you may have such memory in your computer right now.

There are non-binary Flash memories. If you have an SSD in your computer it is likely that you have a multi-level NAND Flash drive.

IBM had mainframe tape drives that used 10 bits per symbol. In 1983.

Telecommunications technology has been doing this for just as long, if not longer.

The US Army had a radio system that used a modulation method that had more than two states per symbol in the 1940’s.

Using multi-level digital modulation over radio was already old-school a couple of decades ago.

56K modems? 3 bits per symbol.

802.11ac wifi? 512 bits per symbol.

“But those are ANALOG!” you say. Okay, maybe you can argue that a digital modulation method over radio or old phone lines isn’t “fully” digital. So how about Fiber Channel? 10 bits per symbol.

Long story short: Many of our digital systems have already gone beyond binary. It’s mostly just our microprocessors that haven’t.

Microprocessors with a constellation.

I was disappointed to see nothing but block diagrams, especially since those looked just like the diagrams for binary logic and arithmetic. Show us some transistors implementing a gate!

And how about flip-flops? Flip-flap-flops, I mean? So much of computing (aside from high density storage and communications protocols) takes advantage of how easy it is to make two-state circuits. So show us how easy it is to make three-state circuits!

We can use 0, 1 and -1

Ground, +4v., -4v.

See optical computing, flip-flap-flop, and clockwise, counter-clockwise, off magnetic polarization for possible ternary circuit archs.

See also Thomas Fowler’s 1840 ternary calculating machine – he needed to calculate parish contributions to poor relief by reducing pounds, shillings and pence to farthings. There’s a demo of a reconstruction online.

Can you please share more details about this machine?

Here’s a website: http://mortati.com/glusker/fowler/

and here’s a video: https://youtube.com/watch?v=uTo1M_ClN74

Why stop there? Let’s go back to analog computing. Even within zero and 1 you get access to an infinite number of states.

That would be Base E, the most efficient. But try to make a “digital CPU” around that…

Now to build a Ternary computer and get Midori running on it! Optimal efficiency!

Project Midori (the OS) to disambiguate.

Balanced ternary is the most efficient computing base if the circuit complexity follows radix x length. But it may not. If we look at the number of possible gates for example:

| Inputs | Binary | Ternary |
|---|---|---|
| 1 | 4 | 27 |
| 2 | 16 | 19,683 |
| 3 | 256 | 7,625,597,484,987 |

Now many of these gates are not useful, the only useful 1 input binary gate is an inverter, the other 3 are always 1 always 0 and same as input are not really gates. But if the complexity of the circuit to implement these gates relates to the number of possible gates then binary wins out over ternary.
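The number of possible gates can be counted directly: a gate with n inputs in base b has b^n truth-table rows, and each row can independently output any of b values, giving b^(b^n) distinct functions. A minimal sketch follows, with `gate_count` as a hypothetical helper name.

```python
def gate_count(base: int, inputs: int) -> int:
    """Number of distinct logic functions with `inputs` inputs in the given base."""
    rows = base ** inputs  # rows in the truth table
    return base ** rows    # each row independently picks one of `base` outputs


for n in (1, 2, 3):
    print(n, gate_count(2, n), gate_count(3, n))
# 1 4 27
# 2 16 19683
# 3 256 7625597484987
```

The ternary count grows far faster than the binary one, which supports the point that the design space for ternary gates is much larger.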

https://en.wikipedia.org/wiki/Radix_economy – Look under “Computer hardware inefficiencies”, Base 2 would be 39.20 N=m in tubes, while Base 3 would be 38.32 N=m. So the transistor count could go down, if you built a transistor that take in an input and compare it to ground…

WOW I am not nearly as knowledgeable as you guys but I do buy into ternary computing. First by sticking with alternating current we save a fortune in power waste and usage. by introducing a third option we can modify the logic of present AI and create AI that has a creative ability. Think about it. Mr Spock is logic based but on occasion his human (third option) jumps in and the party changes.

While yes that is possible, but I think the target for this design is to reduce the amount of interconnections of the bus, which in theory would reduce the foot print of a die. I think what you are referring to is fuzzy logic.

I just read this below and was reminded of this thread.

https://www.crypto-news-flash.com/big-news-for-iota-information-about-the-secret-project-jinn-will-be-announced-on-monday/

The only time I encountered ternary data was for the maerklin digital control systems for model trains. It sends data in trits (although, inefficiently, encoded back into binary, so what is the point?).

Maybe this new IC would have an application in model railroad control :)

I didn’t see anybody else in the comments point this out so I’ll just make a note:

Base e looks exactly like ternary, but each place value represents a power of e. That means it still only uses the digits 0,1,2 or -1,0,1 for its balanced and unbalanced forms. Now, base e would be almost useless for integers so you wouldn’t use it to index addresses, but if you had built a ternary computer then base e could be the perfect encoding system for floating-point calculations.

This is totally based for IOTA future

The binary number system is ideal for use in digital computers because of its close affinity to Boolean algebra. But it is actually the ternary number system (base 3 arithmetic) that is closer to the system of minimum informatics-theoretic construction cost.

Assume, for the moment, that the cost of building a logic circuit that holds one digit is proportional to the number of digit values in play: 16 for the hexadecimal number system, 10 for decimal, 8 for the octal number system, and so on down to 2 for the minimum usable base, for which a flip-flop can be used.

But then note that the cost of representing a number is also proportional to the number of digits – decimal digits, hex digits, trits (base 3 digits), or bits – needed to do so. For example, a binary number contains about 3.3 times as many bits as its decimal representation has digits. For what base b is the product of the two cost factors a minimum?

It turns out that the optimum base would be `e`, the base of the natural logarithm, 2.718281828459045, a fact first noted and published by engineers at Engineering Research Associates (ERA) in 1950. But since a number base must be an integer, the closest we can come is to use base 3 (although 2 is the next best). Brian Hayes has traced use of this system back to the days of Kepler.
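The minimization can be sketched in a few lines, under the cost model stated above and treating the base $b$ as a continuous variable. The symbols $C$ and $N$ are introduced here for illustration: $N$ is the largest value to be represented and $C(b)$ is the per-digit cost $b$ times the digit count $\log_b N$.

$$C(b) = b \log_b N = \frac{b \ln N}{\ln b}$$

$$\frac{dC}{db} = \ln N \cdot \frac{\ln b - 1}{(\ln b)^2} = 0 \quad\Longrightarrow\quad \ln b = 1 \quad\Longrightarrow\quad b = e$$

Comparing the factor $b / \ln b$ at nearby integers:

$$\frac{2}{\ln 2} \approx 2.885, \qquad \frac{3}{\ln 3} \approx 2.731, \qquad \frac{4}{\ln 4} \approx 2.885, \qquad \frac{e}{\ln e} \approx 2.718$$

Base 3 comes within about half a percent of the optimum, while bases 2 and 4 tie at roughly 6% worse.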

Also consider ternary logic as pre quantum, hence ternary signals can be introduced to a binary wave spectrum to achieve time harmonization and resonance synchronization with backwards propagation for N vectors for an `absolute state` of resulting noise reduction.

I would like to find like-minded humans to work with. Reply here.

A balanced-ternary version of FPGA would be a really interesting tool to have. I wonder if anybody is working on one of those 🤔

howdy. I’m a computer noob fiction writer looking for a yes/no on whether a ‘modern’ ternary computer would be susceptible to binary viruses/worms a la STUXnet. please help

Ternary never has and never will make sense for transistors. Balanced ternary makes a lot of sense for nanomagnetic computers, which are 960,000 times more efficient than semiconductors. Magnetic north, south, and neutral. As of November 2023 we are still working on nanomagnetic computers.

“The Untold Story of the Signary Machine” may be found from 1995 in sci.math. This speaks from the then-future perspective of the 21st century. First, at the level of fundamentals: they’re not “trits” but just simply “signs”, being -, 0 and +. The numeric orthography itself, which is capable of representing integers, rather than just natural numbers, without any extraneous markers (i.e. “sign bits”), is “signary”, not “balanced ternary”, which comes off as 20th-centuryish, much like the 19th century “horseless carriage” would sound to 20th century ears.

A fundamental element exists that is both reversible and equal to its own inverse, and is called the “Rotate Gate”. It has 4 inputs (A,B,C,D) and 4 outputs (a,b,c,d) in which a = -A, (b,c,d) = (C,D,B) if A = +, (b,c,d) = (B,C,D) if A = 0 and (b,c,d) = (D,B,C) if A = -. It is complete, in the sense that all multi-variate signary functions can be composed from Rotate Gates. It is depicted in three dimensions as a hexagonal wafer, with vertices (B,d,C,b,D,c) in order, and the (A,a) lines perpendicular to the hexagonal wafer, passing through its center, with A on one side, and a on the opposite side.

A Rotate Gate configuration is capable of implementing reversible computation, by its very design.
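The Rotate Gate's definition is small enough to check mechanically. The sketch below encodes the signs -, 0, + as the integers -1, 0, 1 (an encoding assumed for this example) and verifies over all 81 input combinations that the gate undoes itself when applied twice.

```python
from itertools import product


def rotate_gate(A: int, B: int, C: int, D: int):
    """Rotate Gate as described above; signs are encoded as -1, 0, +1."""
    a = -A
    if A == 1:
        b, c, d = C, D, B   # A = +: rotate (B, C, D) one way
    elif A == 0:
        b, c, d = B, C, D   # A = 0: pass through unchanged
    else:
        b, c, d = D, B, C   # A = -: rotate the other way
    return a, b, c, d


# Applying the gate twice returns the original inputs for every combination.
self_inverse = all(
    rotate_gate(*rotate_gate(*inputs)) == inputs
    for inputs in product((-1, 0, 1), repeat=4)
)
print(self_inverse)  # True
```

Because the gate is its own inverse, it is a bijection on the 81 possible inputs, so no information is lost, which is what reversibility requires.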

The implementation is photonic, not electronic and with each sign being expressible by one of two active states or an inactive state. A configuration of Rotate Gates is a signalling network or signalling diagram.

Because there is no longer need for any “sign bit”, no distinction needs to be made between “unsigned” and “signed” integral types. There is only the signed integer type, since every “sign” in the signary numeric orthography is literally a sign. So, the integers are signed-on-steroids.

The basic units of control flow are sequencing and the Fork, which has the form (sign: N, O, P), which transfers control to N if sign = -, O if sign = 0, and P if sign = +. Optimized variants of it may exist in which 1, 2 or all 3 of N, O, P default to the following instruction in sequence, or in which any 2 or all 3 of N, O and P are the same.

There is a Carry Sign, C, and an Extra Hand, X. The size of an addressable unit is called a Hand, which consists of 5 signs. A sign may be depicted as a colored dot, with red 0, yellow + and blue -, while a Hand may be depicted as a pentagon with colored dots on the vertices.

A family of operations is formed from the template add:r:s:t u, v, w, which adds ru + sv + tw → 243 X + u, where r, s, t denote signs and u, v, w denote hands. The default case is add = add:+:+:+. Numerous other instances of the template exist, such as (cmp v, w) = (add:0:+:- v, w). The arguments u, v, w are absent, respectively, in the cases where r = 0, s = 0 or t = 0.

Two multiplication operations exist: mul u, v for uv + X → 243 X + u and mul2 u, v for 2(uv + X) → 243 X + u.

Division is done by div u, v, which divides 243 X + u into v and with (quotient, remainder) → (u, v) and sign(|X| – |v|) → C. The resulting values of (u, v) are only defined if |X| ≥ |v|. The case of the output (C = -) generalizes the “divide-by-zero” error into the “too small to divide by” error.

The negate operation neg u, which gives u → -u, is just add:-:0:0 u.

The operations sadd u,v, max u,v and min u,v represent the respective operations sadd(u,v) → u, max(u,v) → u, min(u,v) → u, where sadd(u,v) is just (u + v) modulo 3, e.g. sadd(+,+) = – and sadd(-,-) = +. The min and max operations are with respect to the ordering – < 0 < +.
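A minimal sketch of the three sign operations, again encoding -, 0, + as -1, 0, 1. Applying them sign-by-sign across a whole hand is an assumption about the intended semantics, and `hand_op` is a hypothetical helper.

```python
def sadd(u: int, v: int) -> int:
    """Add two signs modulo 3, keeping the result in {-1, 0, +1}."""
    return (u + v + 1) % 3 - 1


def hand_op(op, u, v):
    """Apply a two-sign operation position by position across two hands (assumed semantics)."""
    return tuple(op(x, y) for x, y in zip(u, v))


print(sadd(1, 1))    # -1, i.e. sadd(+,+) = -
print(sadd(-1, -1))  # 1,  i.e. sadd(-,-) = +

u = (1, 1, -1, 1, 0)    # the hand ++-+0
v = (1, -1, -1, 0, 0)   # the hand +--00
print(hand_op(sadd, u, v))  # (-1, 0, 1, 1, 0)
print(hand_op(max, u, v))   # (1, 1, -1, 1, 0)
print(hand_op(min, u, v))   # (1, -1, -1, 0, 0)
```

With this integer encoding, Python's built-in `min` and `max` already follow the ordering - < 0 < +.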

shift u represents shift(u,X) → u and shifts |X| positions through the carry sign, C, to the left if X > 0, or to the right if X < 0, and reduces to a “nop” if X = 0. One variant shifts off the edge, while another rotates. For a 5-sign rotate, if C = 0 then shift(++-+0, +-) yields -+00+, with C = + as a result.
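The rotating variant can be sketched as a rotation of a six-position ring made of the carry sign followed by the five signs of the hand, most significant first. That layout is an interpretation inferred from the worked example above, and `rotate_through_carry` is a hypothetical name. The shift count is passed as a plain integer, so X = +- becomes 3 - 1 = 2.

```python
def rotate_through_carry(hand: str, carry: str, x: int):
    """Rotate `hand` through `carry`: left by x if x > 0, right if x < 0, no-op if x == 0."""
    ring = list(carry + hand)  # carry sits to the left of the most significant sign
    k = x % len(ring)          # Python's % maps a negative x to the equivalent left rotation
    ring = ring[k:] + ring[:k]
    return "".join(ring[1:]), ring[0]


hand, carry = rotate_through_carry("++-+0", "0", 2)
print(hand, carry)  # -+00+ +
```

This reproduces the example from the text: the hand becomes -+00+ and the carry sign becomes +.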

The control-flow operation fork n, o, p represents the previously-mentioned Fork control-flow (C: n, o, p), with the carry sign C, by default; and more generally fork:s n, o, p, for an arbitrary sign s. flip n, o, p and twist n, o, p are both fork n, o, p, except with -C → C for flip and sadd(C,+) → C for twist.

The signary machine is a photonic processing unit called the Maxwell-2010 or Max-10, for short, because this was written in 1995 and 2010 was The Future. It is a highly-parallel optical signary processing unit, called a photonic signalling net or photonic net, for short. It had many more features, including a brain-wave interface and “fermionic” matter holography unit.

A 2004 snapshot and update of the 1995 sci.math article, complete with a C program that uses signary numerals and some of the operations described here, applying them to invert the 32 x 32 scheduling matrix for the 2001 NFL season, is archived here:

https://web.archive.org/web/20041109094515/http://www.uwm.edu/~whopkins/untold/signary.html

I wonder if ternary can benefit 3D applications where 3/4 of spatial coordinates have a negative value for one of the axis so you’re playing with signed numbers all the time.