Creating Raspberry Pi clusters is a popular hacker activity. Bitscope has been commercializing these clusters for a bit now, and last year they created a cluster of 750 Pis for Los Alamos National Labs. You might wonder what an institution known for supercomputers wants with a cluster of Raspberry Pis. Turns out it is tough to justify taking a real high-speed cluster down just to test software. Now developers can run small test programs with a large number of CPU cores without requiring time on the big iron.

On the face of it, this doesn’t sound too hard, but hooking up 750 of anything is going to have its challenges. You have to provide power and carry away heat. They all have to communicate, and you aren’t going to want to house the thing in a few hundred square feet which makes heat and power even more difficult.

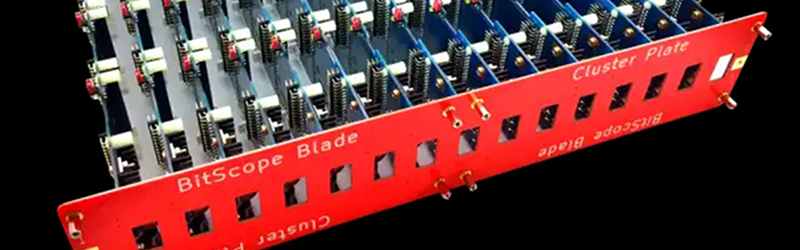

The system is modular with each module holding 144 active nodes, 6 spares, and a single cluster manager. This all fits in a 6U rack enclosure. Bitscope points out that you could field 1,000 nodes in 42U and the power draw — including network fabric and cooling — would be about 6 kilowatts. That sounds like a lot, but for a 1,000 node device, that’s pretty economical. The cost isn’t bad, either, running about $150,000 for 1,000 nodes. Sure, that’s a lot too but not compared to the alternatives.
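As a rough back-of-envelope check of those figures, here is a minimal sketch. The constant and function names are made up for illustration; the inputs are only the numbers quoted above, and a 1,000-node build is assumed to be made of whole modules.

```python
import math

# Figures quoted in the article (Bitscope's own estimates).
ACTIVE_PER_MODULE = 144
SPARES_PER_MODULE = 6
MANAGERS_PER_MODULE = 1
MODULE_HEIGHT_U = 6
NODES = 1000
POWER_KW = 6.0
COST_USD = 150_000


def pis_per_module():
    """Total boards in one module: active nodes, spares, and the manager."""
    return ACTIVE_PER_MODULE + SPARES_PER_MODULE + MANAGERS_PER_MODULE


def modules_needed(active_nodes):
    """Whole modules required to reach a given number of active nodes."""
    return math.ceil(active_nodes / ACTIVE_PER_MODULE)


def watts_per_node():
    """Average power per node, including network fabric and cooling."""
    return POWER_KW * 1000 / NODES


def dollars_per_node():
    """Average cost per node."""
    return COST_USD / NODES


modules = modules_needed(NODES)
print(pis_per_module())            # 151
print(modules)                     # 7
print(modules * MODULE_HEIGHT_U)   # 42
print(watts_per_node())            # 6.0
print(dollars_per_node())          # 150.0
```

Seven 6U modules come to 42U, which lines up with the rack figure Bitscope quotes, and the averages work out to roughly 6 W and $150 per node.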

We’ve seen fairly big Pi clusters before. If you really wanted to go small and low power, you could always try clustering the Pi Zero.

Had the pleasure of meeting the guys behind Bitscope/Metachip ~15 years ago when they sold me my first DSO – Glad to see they’re still around!

Wouldn’t it have been much cheaper to use virtualization to create 750 servers on a few bigger machines? If it’s just for experimenting with distributed systems like that, I don’t really see the advantage of dedicated Raspberry Pis (especially if they use full-sized ones with all the peripheral stuff, as pictured on their website, and not the compute modules).

would it be cheaper? maybe

would it be fun? no

Virtualization works fine, until an exploit on a virtual machine can read data from the hypervisor. Each RPi has its own hardware/CPU/RAM/firewall, and thereby can’t be exploited with local exploits.

Does that really matter for a testing setup?

Also, a large part of the web runs on AWS, Azure, Digital Ocean, etc., all VMs running on a hypervisor.

There are a lot of things at places like Los Alamos that don’t go on public cloud, even for testing and prototyping. Don’t expose the structure of the enclosure and it’s less attack surface area. Think “If I know the shape of the vehicle, I can tell you something about what it does”.

If you don’t wish to or can’t run VMs on a potentially publicly reachable network, there are plenty of hypervisors you can install and run locally.

VMware’s ESX can even be free if you don’t mind configuring each host independently (although LANL can easily afford even that).

The point being made was that just because it is a VM on a hypervisor doesn’t mean it’s an automatic security risk, even when it is run on the public Internet, let alone internal-only.

For the topic at hand however, VMs can only go so far. They already ported their supercomputer’s sub-hyperprocessor and OS to ARM and can run it on the Pi natively. ESX and most popular hypervisors out there are x86/x64 only, so literally won’t run the existing code at all. With the Pi the same clustering software and compiled code runs native and can be moved over to the supercomputer to do the same.

I can’t say for sure, but I’d rather build a cluster using the same architecture using off the shelf parts to test my code than add in the issue of porting my OS to a CPU type never before used and debug both the x86 cluster software and my own code at the same time. (One disaster at a time please)

Yes, but real HPC clusters don’t want the overhead of VMs.

I doubt that really matters in this case, since the cluster is being used to test HPC applications. E.g. the HPC application is distributed to all nodes in the cluster and tested for a few cycles to ensure everything is working correctly.

It’s not a multi-tenant situation where individual nodes have different customers/data/applications.

I’m also having a hard time believing this is worth the effort. HPC simulation needs to take into account inter-node communication (since HPC supercomputers are really compute nodes linked to a very fast networking backbone)… but that could be done cheaper with virtualization. Throw a few 384 core ThunderX servers at the problem and you’ll have your high core count, and virtualization to provide the appearance of multiple nodes.

The only perk I see is more realistic node-to-node latencies. E.g. a few large servers virtualizing the cluster will have fast inter-node communication between the local VMs, and slower latency to the other servers. But a complete hardware solution like the RPi setup would allow equal latency in all directions (*mostly, depending on how they are networked)

As someone commented below, core count matters. In order to test that many cores, you physically need that many cores. Your suggestion of using the 384 core ThunderX servers would mean they’d need 8 of them (the Bitscope cluster has 3000 cores), at a cost of $20k each, or $160k — $10k more than the Bitscope solution; and that Bitscope solution is actually 1000 nodes, which would actually cost over $200k to implement using ThunderX servers.
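The server count and cost in that comparison can be reproduced with a few lines. This is only a sketch of the commenter's arithmetic: the $20k price is their estimate rather than a vendor quote, four cores per Pi is assumed, and the function names are hypothetical.

```python
import math

CORES_PER_PI = 4           # assumed: quad-core Raspberry Pi 3
CORES_PER_SERVER = 384     # core count quoted in the comment above
SERVER_PRICE_USD = 20_000  # commenter's estimate, not a vendor quote


def servers_for(pi_nodes):
    """Whole servers needed to match the core count of pi_nodes Pis."""
    cores = pi_nodes * CORES_PER_PI
    return math.ceil(cores / CORES_PER_SERVER)


def server_cost(pi_nodes):
    """Total cost of those servers at the assumed unit price."""
    return servers_for(pi_nodes) * SERVER_PRICE_USD


for nodes in (750, 1000):
    print(nodes, servers_for(nodes), server_cost(nodes))
# 750 8 160000
# 1000 11 220000
```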

ZacharyTong is right though. LANL is interested in MPP where coupling matters. You’re not just launching N copies of the same containerized application like you would on a standard cluster, you have to concern yourself with making sure that each process of the job is placed optimally so that processes don’t spend much time waiting on results from each other. You also need to recover quickly from an individual crashed process or entire node that drops off the network without having to restart the entire job.

A two socket ThunderX2 and 24 Raspberry Pi 3s have the same number of cores, but on the ThunderX2 their shared memory latency is incredibly low as caches and DRAM are local. Cost aside, 24 Raspberry Pi 3s in a cluster model the scaling effects of a supercomputer much better than a single TX2 server.

I’m not sure a few servers pretending to run 750 machines at the same time is quite the same as 750 machines actually running at the same time

True, but then USB Ethernet is nowhere close to the interconnect used in the real cluster.

But it is a two-way street: if the software has problems dealing with latency, they should be easier to find on a system with even bigger penalties. I suspect, though, that they will also hit problems that won’t show up at all on the big iron.

The issue isn’t latency, it’s parallelism. Writing high performance multi core/thread software reveals a whole host of problems when trying to do things at the same time. If you run a virtual setup the virtual machine limit will be the number of cores in the system, more than that and there’s no benefit.

Core count matters, we ran into this problem at my company trying to simulate a couple hundred machines. If you want to truly test that many cores simultaneously, you actually need to have that many individual cores.

Let’s say that the data being processed, and the algorithms used, were of importance to national security (simulations of nuclear phenomena) and legally had to remain on site. That would prohibit using cheap external VM providers. But I’m sure you are right that the very first tests would involve a few VMs just to check for basic configuration mistakes, then the second or third round of testing would run on the above 750-node cluster, and if all is well the job would be allocated a runtime slot on the main system.

750 consumer-grade physical computers are a better model of the RAS requirements of a large HPC system than a few large server-grade boxes running VMs. VMs scale too easily and cleanly.

VMs do not provide true parallelism, thus avoiding a host of nasty race conditions and interlocks. They have a completely different set of nasty race conditions and interlock problems. :)

They also don’t provide network congestion, memory faults, and non-deterministic timing in the same way as real hardware.

The important thing in parallel supercomputing is the delays and bandwidth restrictions between the nodes. Those are not accurately modeled when you run things in virtual machines.

These tests should help to optimize things like: should we run across the matrix row-first or column-first? You might get an entirely different answer if your virtual machine setup has wildly different communication overhead.
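To make the row-first versus column-first question concrete, here is a toy model, not anything LANL or Bitscope has described. It assumes a square matrix split across nodes in contiguous blocks of rows, and simply counts how often a traversal moves from one node's data to another's. The function names and the block-row layout are assumptions for illustration.

```python
def owner(row, n_rows, n_nodes):
    """Node holding a given row under a contiguous block-row split."""
    rows_per_node = -(-n_rows // n_nodes)  # ceiling division
    return row // rows_per_node


def node_switches(n, n_nodes, order):
    """Count how often consecutive accesses land on a different node."""
    if order == "row":
        cells = ((r, c) for r in range(n) for c in range(n))
    elif order == "column":
        cells = ((r, c) for c in range(n) for r in range(n))
    else:
        raise ValueError("order must be 'row' or 'column'")

    switches = 0
    previous = None
    for r, _ in cells:
        current = owner(r, n, n_nodes)
        if previous is not None and current != previous:
            switches += 1
        previous = current
    return switches


# An 8x8 matrix spread over 4 nodes, two rows per node.
print(node_switches(8, 4, "row"))     # 3
print(node_switches(8, 4, "column"))  # 31
```

How much each of those node switches actually costs depends on the interconnect, which is why the answer measured on real hardware can differ from the one measured between VMs sharing a host.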

When testing you want to use actual hardware and not a virtual machine. Debugging on an emulator is not great practice at all. Sure, in theory it’s going to be the same, but if theory was the same as practice then nobody would need to test anything.

I agree, VMs could have easily scaled to any number of servers, but it turns out Los Alamos National Labs has a budget of $2.2 billion.

I’ve been wondering if there is a market / use case for a high node count system that is low power that does not need high performance nodes. Not just for RPi based ones but custom systems that boot over Ethernet.

Would the isolation of each node being its own SoC give better security than many virtual nodes on a high-performance dedicated server architecture?

Would you get a high node count at lower purchase and running costs?

So my naive idea is a board that hosts multiple SoCs, each with its own RAM and maybe some persistent local storage per node. Say a 1gig dual core ARM chip (or Intel Atom), 1 gig RAM, 32 gig of flash memory. Some interconnection so the board has one Ethernet port. I don’t know if you can create a LAN on a circuit board.

I want to build one, just because. :) Does not need to be RPi based. It would be interesting to know afterwards whether it would be useful for something or just a fancy door stop in my house. LoL

You will need a fair amount of current.

https://en.m.wikipedia.org/wiki/Homopolar_generator

:-O you’re upside down!

Annie, are you ok?

Annie is in Australia

It’s just what happens when you’re struck by a smooth criminal.

With recent budget cuts, I’m sure the National Labs are _really_ using this for Bitcoin mining.

(Ha! I accidentally spelled it Bitcon)

“Creating Raspberry Pi clusters is a popular hacker activity.”

Demand, supply, just like the graphics card market and mining. One Pi per order. ;-)

6 kW isn’t bad at all for 4,000 cores.

On the other hand, 42U of two-socket 24-core x86 servers comes out to 21.7 kW, 2,000 cores (4,000 threads), and a much larger price tag (about $600k list price from a vendor I use, certainly available for less from $CHEAP_VENDOR). But that covers warranty, mainstream OS and hypervisor license, support, etc. Depending on what you want to do for networking, figure another $40-50k for a 40Gb network per node and give up 2U.

It’s the 21 kW of power and 75K BTU of cooling that kills you. Standing behind one of these is both loud and warm. $DAY_JOB
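For anyone wanting to check the cooling figure, nearly all of the electrical power ends up as heat, and one watt is about 3.412 BTU per hour. A minimal sketch of that conversion, with a hypothetical helper name and the power figures taken from the comments above:

```python
BTU_PER_HOUR_PER_WATT = 3.412142  # standard conversion factor


def cooling_btu_per_hour(power_kw):
    """Heat load in BTU/h, assuming all electrical power becomes heat."""
    return power_kw * 1000 * BTU_PER_HOUR_PER_WATT


x86_rack = cooling_btu_per_hour(21.7)  # the 42U x86 rack described above
pi_rack = cooling_btu_per_hour(6.0)    # the 1,000-node Pi rack

print(round(x86_rack, -3))  # 74000.0
print(round(pi_rack, -3))   # 20000.0
```

That puts the x86 rack at roughly 74,000 BTU/h, in line with the 75K quoted, against about 20,000 BTU/h for the Pi cluster.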

you miss the part where one x86 core is ~5-10x faster than one Pee ARM one? not to mention ram/interconnect speed differences, that takes 21 kW down to 2 kW

A 4000-core pee farm is a great way to simulate a state-of-the-art supercomputer from 20 years ago.

I wonder why anybody would want to gang 750 rpi’s together. As far as I can tell they don’t excel at anything.

Each one of them has 4 cores and is dirt cheap. Yes, you can get more computing power from x86, but this is for testing HPC protocols where load is expected to be 100% on every thread at the same time, so VMs can’t be successfully underprovisioned. As many cores and as complicated a network as you can manage for testing the scaling of the protocols is what’s desired. And cheap crappy CPUs fit the bill for that.

I wonder (with such a large purchase) if Bitscope got a look inside the Broadcom code inside the RPi to help with optimization.

I would be deeply surprised. In SoC land 750 units is “less than a reel; if we are feeling generous we might condescend to sell you some that aren’t already soldered into dev kits” territory; not “valued customer who gets engineering support” territory.

One small problem with using that PiShop :-) to emulate a bigger cluster, numerical simulations are exquisitely sensitive to tiny differences in how floating point maths calculations are implemented. But for testing everything above that level, where things are a little more deterministic, it is a great idea.
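One concrete source of that sensitivity, independent of any particular CPU, is that floating-point addition is not associative, so the order in which partial results are combined can change the answer. In a parallel reduction that order typically depends on how the work is split across nodes. A minimal illustration in Python with IEEE 754 doubles (the variable names are just for the example):

```python
a, b, c = 0.1, 0.2, 0.3

# Same three numbers, two different groupings of the additions.
left_first = (a + b) + c
right_first = a + (b + c)

print(left_first)                 # 0.6000000000000001
print(right_first)                # 0.6
print(left_first == right_first)  # False
```

A difference in the last bit is harmless here, but in a long-running simulation such discrepancies can grow, which is why results from a test cluster and the production machine may not match bit for bit.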

So THAT’S why we can’t buy more than one raspi zero at a time… :)